2020 in Numbers

This year, the German labs contribute 138 publications in total to the 2020 ACM CHI Conference on Human Factors in Computing Systems. At the core are 83 Papers, including 1 Best Paper and 14 Honorable Mentions. We also bring 34 Late-Breaking Works, 5 Demonstrations, 7 organized Workshops & Symposia, 2 Case Studies, 2 Journal Articles, 1 SIG, 1 SIGCHI Outstanding Dissertation Award and 1 Student Game Competition to CHI this year. All these publications are listed below.

'It’s in my other hand!' - Studying the Interplay of Interaction Techniques and Multi-Tablet Activities

Johannes Zagermann (University of Konstanz), Ulrike Pfeil (University of Konstanz), Philipp von Bauer (University of Konstanz), Daniel Fink (University of Konstanz), Harald Reiterer (University of Konstanz)

Abstract | Tags: Full Paper | Links:

@inproceedings{ZagermannStudying,

title = {'It’s in my other hand!' - Studying the Interplay of Interaction Techniques and Multi-Tablet Activities},

author = {Johannes Zagermann (University of Konstanz) and Ulrike Pfeil (University of Konstanz) and Philipp von Bauer (University of Konstanz) and Daniel Fink (University of Konstanz) and Harald Reiterer (University of Konstanz)},

url = {https://youtu.be/_LZsSPP1FM4, Video

https://www.twitter.com/HCIGroupKN, Twitter},

doi = {10.1145/3313831.3376540},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Cross-device interaction with tablets is a popular topic in HCI research. Recent work has shown the benefits of including multiple devices into users’ workflows while various interaction techniques allow transferring content across devices. However, users are only reluctantly using multiple devices in combination. At the same time, research on cross-device interaction struggles to find a frame of reference to compare techniques or systems. In this paper, we try to address these challenges by studying the interplay of interaction techniques, device utilization, and task-specific activities in a user study with 24 participants from different but complementary angles of evaluation using an abstract task, a sensemaking task, and three interaction techniques. We found that different interaction techniques have a lower influence than expected, that work behaviors and device utilization depend on the task at hand, and that participants value specific aspects of cross-device interaction.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

3D-Auth: Two-Factor Authentication with Personalized 3D-Printed Items

Karola Marky (TU Darmstadt), Martin Schmitz (TU Darmstadt), Verena Zimmer (TU Darmstadt), Martin Herbers (TU Darmstadt), Kai Kunze (Keio Media Design), Max Mühlhäuser (TU Darmstadt)

Abstract | Tags: Full Paper | Links:

@inproceedings{Marky3D,

title = {3D-Auth: Two-Factor Authentication with Personalized 3D-Printed Items},

author = {Karola Marky (TU Darmstadt) and Martin Schmitz (TU Darmstadt) and Verena Zimmer (TU Darmstadt) and Martin Herbers (TU Darmstadt) and Kai Kunze (Keio Media Design) and Max Mühlhäuser (TU Darmstadt)},

url = {https://youtu.be/_dHihnJTRek, Video

https://twitter.com/search?q=%23teamdarmstadt&src=typed_query&f=live, Twitter},

doi = {10.1145/3313831.3376189},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Two-factor authentication is a widely recommended security mechanism and already offered for different services. However, known methods and physical realizations exhibit considerable usability and customization issues. In this paper, we propose 3D-Auth, a new concept of two-factor authentication. 3D-Auth is based on customizable 3D-printed items that combine two authentication factors in one object. The object bottom contains a uniform grid of conductive dots that are connected to a unique embedded structure inside the item. Based on the interaction with the item, different dots turn into touch-points and form an authentication pattern. This pattern can be recognized by a capacitive touchscreen. Based on an expert design study, we present an interaction space with six categories of possible authentication interactions. In a user study, we demonstrate the feasibility of 3D-Auth items and show that the items are easy to use and the interactions are easy to remember.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A Human Touch: Social Touch Increases the Perceived Human-likeness of Agents in Virtual Reality

Matthias Hoppe (LMU Munich), Beat Rossmy (LMU Munich), Daniel Peter Neumann (LMU Munich), Stephan Streuber (University of Konstanz), Albrecht Schmidt (LMU Munich), Tonja Machulla (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{HoppeAHumanTouch,

title = {A Human Touch: Social Touch Increases the Perceived Human-likeness of Agents in Virtual Reality},

author = {Matthias Hoppe (LMU Munich) and Beat Rossmy (LMU Munich) and Daniel Peter Neumann (LMU Munich) and Stephan Streuber (University of Konstanz) and Albrecht Schmidt (LMU Munich) and Tonja Machulla (LMU Munich)},

url = {https://www.twitter.com/mimuc, Twitter},

doi = {10.1145/3313831.3376719},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Virtual Reality experiences and games present believable virtual environments based on graphical quality, spatial audio, and interactivity. The interaction with in-game characters, controlled by computers (agents) or humans (avatars), is an important part of VR experiences. Pre-captured motion sequences increase the visual humanoid resemblance. However, this still precludes realistic social interactions (eye contact, imitation of body language), particularly for agents. We aim to make social interaction more realistic via social touch. Social touch is non-verbal, conveys feelings and signals (coexistence, closure, intimacy). In our research, we created an artificial hand to apply social touch in a repeatable and controlled fashion to investigate its effect on the perceived human-likeness of avatars and agents. Our results show that social touch is effective to further blur the boundary between computer- and human-controlled virtual characters and contributes to experiences that closely resemble human-to-human interactions.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A Longitudinal Video Study on Communicating Status and Intent for Self-Driving Vehicle – Pedestrian Interaction

Stefanie M. Faas (Mercedes-Benz AG / Ulm University), Andrea C. Kao (Mercedes-Benz RD NA), Martin Baumann (Ulm University)

Abstract | Tags: Full Paper | Links:

@inproceedings{FassLongitudinal,

title = {A Longitudinal Video Study on Communicating Status and Intent for Self-Driving Vehicle – Pedestrian Interaction},

author = {Stefanie M. Faas (Mercedes-Benz AG / Ulm University) and Andrea C. Kao (Mercedes-Benz RD NA) and Martin Baumann (Ulm University)},

doi = {10.1145/3313831.3376484},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {With self-driving vehicles (SDVs), pedestrians cannot rely on communication with the driver anymore. Industry experts and policymakers are proposing an external Human-Machine Interface (eHMI) communicating the automated status. We investigated whether additionally communicating SDVs’ intent to give right of way further improves pedestrians’ street crossing. To evaluate the stability of these eHMI effects, we conducted a three-session video study with N=34 pedestrians where we assessed subjective evaluations and crossing onset times. This is the first work capturing long-term effects of eHMIs. Our findings add credibility to prior studies by showing that eHMI effects last (acceptance, user experience) or even increase (crossing onset, perceived safety, trust, learnability, reliance) with time. We found that pedestrians benefit from an eHMI communicating SDVs’ status, and that additionally communicating SDVs’ intent adds further value. We conclude that SDVs should be equipped with an eHMI communicating both status and intent.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A View on the Viewer: Gaze-Adaptive Captions for Videos

Kuno Kurzhals (ETH Zürich), Fabian Göbel (ETH Zürich), Katrin Angerbauer (University of Stuttgart), Michael Sedlmair (University of Stuttgart), Martin Raubal (ETH Zürich)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{KurzhalsView,

title = {A View on the Viewer: Gaze-Adaptive Captions for Videos},

author = {Kuno Kurzhals (ETH Zürich) and Fabian Göbel (ETH Zürich) and Katrin Angerbauer (University of Stuttgart) and Michael Sedlmair (University of Stuttgart) and Martin Raubal (ETH Zürich)},

doi = {10.1145/3313831.3376266},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Subtitles play a crucial role in cross-lingual distribution of multimedia content and help communicate information where auditory content is not feasible (loud environments, hearing impairments, unknown languages). Established methods utilize text at the bottom of the screen, which may distract from the video. Alternative techniques place captions closer to related content (e.g., faces) but are not applicable to arbitrary videos such as documentations. Hence, we propose to leverage live gaze as indirect input method to adapt captions to individual viewing behavior. We implemented two gaze-adaptive methods and compared them in a user study (n=54) to traditional captions and audio-only videos. The results show that viewers with less experience with captions prefer our gaze-adaptive methods as they assist them in reading. Furthermore, gaze distributions resulting from our methods are closer to natural viewing behavior compared to the traditional approach. Based on these results, we provide design implications for gaze-adaptive captions.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

AL: An Adaptive Learning Support System for Argumentation Skills

Thiemo Wambsganß (University of St.Gallen), Christina Niklaus (University of St.Gallen), Matthias Cetto (University of St. Gallen), Matthias Söllner (University of Kassel & University of St. Gallen), Siegfried Handschuh (University of St. Gallen & University of Passau), Jan Marco Leimeister (University of St. Gallen & Kassel University)

Tags: Full Paper, Honorable Mention | Links:

@inproceedings{WambsganssAL,

title = {AL: An Adaptive Learning Support System for Argumentation Skills},

author = {Thiemo Wambsganß (University of St.Gallen) and Christina Niklaus (University of St.Gallen) and Matthias Cetto (University of St. Gallen) and Matthias Söllner (University of Kassel & University of St. Gallen) and Siegfried Handschuh (University of St. Gallen & University of Passau) and Jan Marco Leimeister (University of St. Gallen & Kassel University)},

doi = {10.1145/3313831.3376851},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

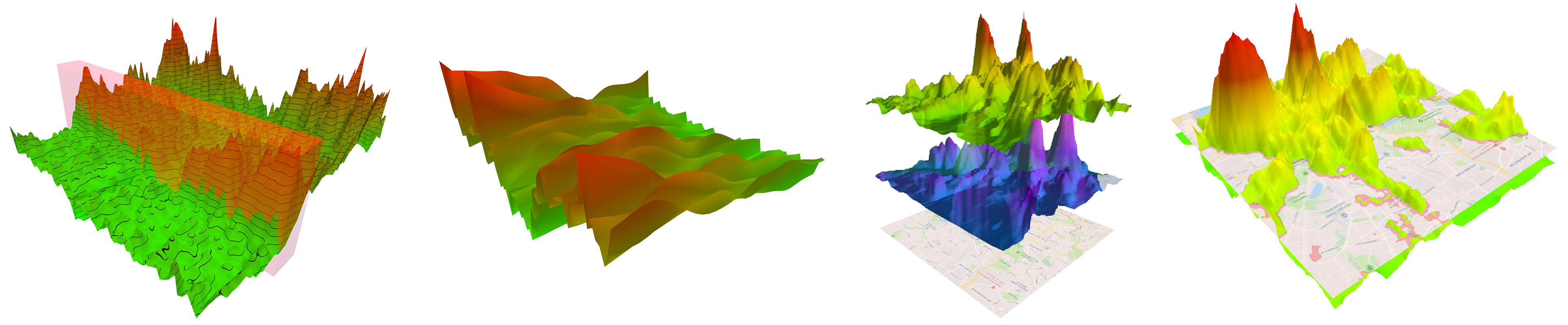

Assessing 2D and 3D Heatmaps for Comparative Analysis: An Empirical Study

Matthias Kraus (University of Konstanz), Katrin Angerbauer (University of Stuttgart), Juri Buchmüller (University of Konstanz), Daniel Schweitzer (University of Konstanz), Daniel Keim (University of Konstanz), Michael Sedlmair (University of Stuttgart), Johannes Fuchs (University of Konstanz)

Abstract | Tags: Full Paper | Links:

@inproceedings{KrausAssessing,

title = {Assessing 2D and 3D Heatmaps for Comparative Analysis: An Empirical Study},

author = {Matthias Kraus (University of Konstanz) and Katrin Angerbauer (University of Stuttgart) and Juri Buchmüller (University of Konstanz) and Daniel Schweitzer (University of Konstanz) and Daniel Keim (University of Konstanz) and Michael Sedlmair (University of Stuttgart) and Johannes Fuchs (University of Konstanz)},

url = {https://youtu.be/ybSj8ibu-qA, Video

https://www.twitter.com/dbvis, Twitter},

doi = {10.1145/3313831.3376675},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Heatmaps are a popular visualization technique that encode 2D density distributions using color or brightness. Experimental studies have shown though that both of these visual variables are inaccurate when reading and comparing numeric data values. A potential remedy might be to use 3D heatmaps by introducing height as a third dimension to encode the data. Encoding abstract data in 3D, however, poses many problems, too. To better understand this tradeoff, we conducted an empirical study (N=48) to evaluate the user performance of 2D and 3D heatmaps for comparative analysis tasks. We test our conditions on a conventional 2D screen, but also in a virtual reality environment to allow for real stereoscopic vision. Our main results show that 3D heatmaps are superior in terms of error rate when reading and comparing single data items. However, for overview tasks, the well-established 2D heatmap performs better.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Augmented Reality for Older Adults: Exploring Acceptability of Virtual Coaches for Home-based Balance Training in an Aging Population

Fariba Mostajeran (Uni Hamburg), Frank Steinicke (Uni Hamburg), Oscar Ariza (Uni Hamburg), Dimitrios Gatsios (University of Ioannina), Dimitrios Fotiadis (University of Ioannina)

Abstract | Tags: Full Paper | Links:

@inproceedings{MostajeranAugmented,

title = {Augmented Reality for Older Adults: Exploring Acceptability of Virtual Coaches for Home-based Balance Training in an Aging Population},

author = {Fariba Mostajeran (Uni Hamburg) and Frank Steinicke (Uni Hamburg) and Oscar Ariza (Uni Hamburg) and Dimitrios Gatsios (University of Ioannina) and Dimitrios Fotiadis (University of Ioannina)},

doi = {10.1145/3313831.3376565},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Balance training has been shown to be effective in reducing risks of falling, which is a major concern for older adults. Usually, exercise programs are individually prescribed and monitored by physiotherapeutic or medical experts. Unfortunately, supervision and motivation of older adults during home-based exercises cannot be provided on a large scale, in particular, considering an ageing population. Augmented reality (AR) in combination with virtual coaches could provide a reasonable solution to this challenge. We present a first investigation of the acceptance of an AR coaching system for balance training, which can be performed at home. In a human-centered design approach we developed several mock-ups and prototypes, and evaluated them with 76 older adults. The results suggest that older adults find the system encouraging and stimulating. The virtual coach is perceived as an alive, calm, intelligent, and friendly human. However, usability of the entire AR system showed a significant negative correlation with participants' age.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

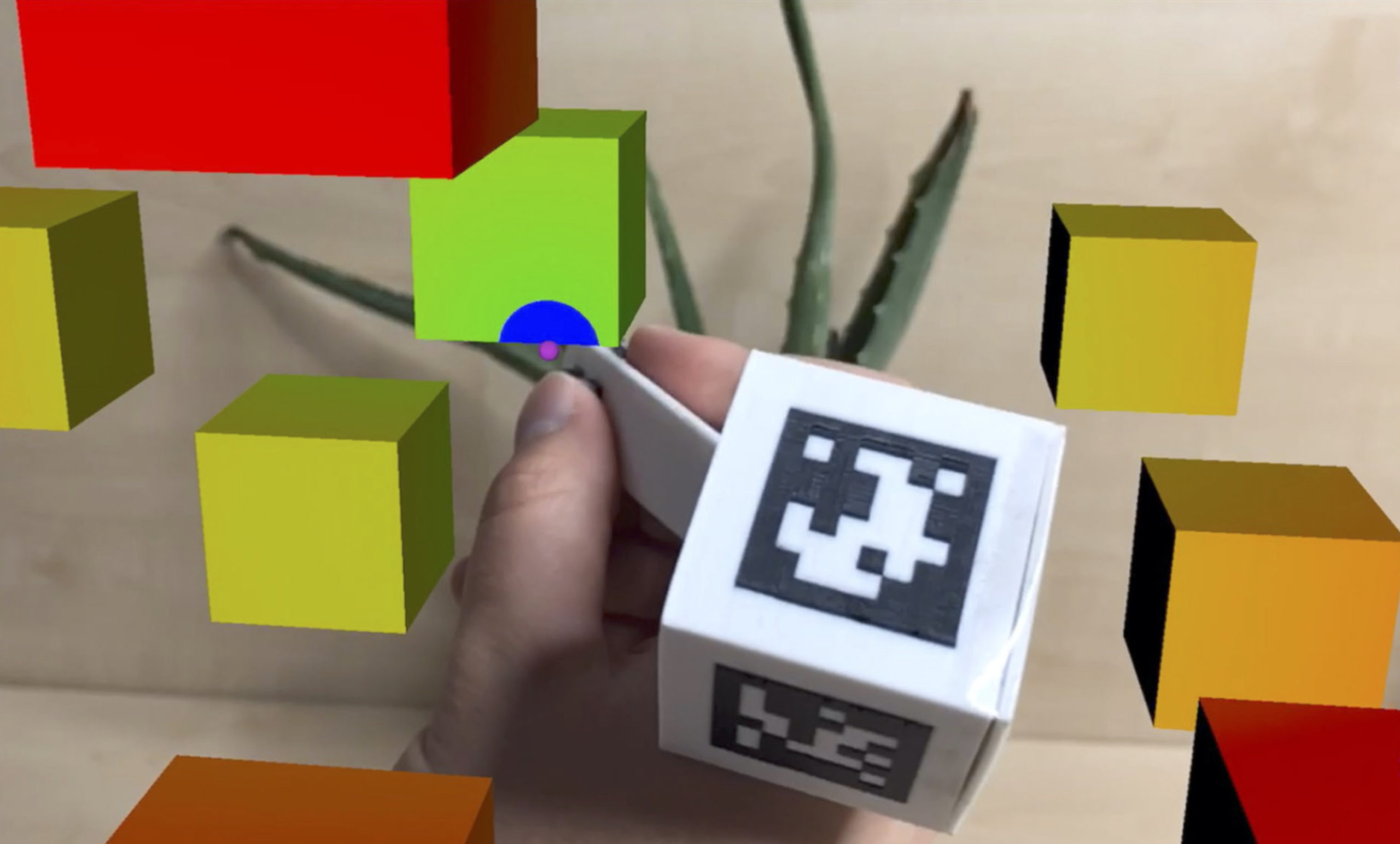

Augmented Reality to Enable Users in Learning Case Grammar from Their Real-World Interactions

Fiona Draxler (LMU Munich), Audrey Labrie (Polytechnique Montréal), Albrecht Schmidt (LMU Munich), Lewis L. Chuang (LMU Munich)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{DraxlerAugmented,

title = {Augmented Reality to Enable Users in Learning Case Grammar from Their Real-World Interactions},

author = {Fiona Draxler (LMU Munich) and Audrey Labrie (Polytechnique Montréal) and Albrecht Schmidt (LMU Munich) and Lewis L. Chuang (LMU Munich)},

url = {https://www.twitter.com/mimuc, Twitter},

doi = {10.1145/3313831.3376537},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Augmented Reality (AR) provides a unique opportunity to situate learning content in one's environment. In this work, we investigated how AR could be developed to provide an interactive context-based language learning experience. Specifically, we developed a novel handheld-AR app for learning case grammar by dynamically creating quizzes, based on real-life objects in the learner's surroundings. We compared this to the experience of learning with a non-contextual app that presented the same quizzes with static photographic images. Participants found AR suitable for use in their everyday lives and enjoyed the interactive experience of exploring grammatical relationships in their surroundings. Nonetheless, Bayesian tests provide substantial evidence that the interactive and context-embedded AR app did not improve case grammar skills, vocabulary retention, and usability over the experience with equivalent static images. Based on this, we propose how language learning apps could be designed to combine the benefits of contextual AR and traditional approaches.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Augmented Reality Training for Industrial Assembly Work – Are Projection-based AR Assistive Systems an Appropriate Tool for Assembly Training?

Sebastian Büttner (TU Clausthal / TH OWL), Michael Prilla (TU Clausthal), Carsten Röcker (TH OWL)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{BuettnerAugmented,

title = {Augmented Reality Training for Industrial Assembly Work – Are Projection-based AR Assistive Systems an Appropriate Tool for Assembly Training?},

author = {Sebastian Büttner (TU Clausthal / TH OWL) and Michael Prilla (TU Clausthal) and Carsten Röcker (TH OWL)},

url = {https://www.twitter.com/HCISGroup},

doi = {10.1145/3313831.3376720},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Augmented Reality (AR) systems are on their way to industrial application, e.g. projection-based AR is used to enhance assembly work. Previous studies showed advantages of the systems in permanent-use scenarios, such as faster assembly times. In this paper, we investigate whether such systems are suitable for training purposes. Within an experiment, we observed the training with a projection-based AR system over multiple sessions and compared it with a personal training and a paper manual training. Our study shows that projection-based AR systems offer only small benefits in the training scenario. While a systematic mislearning of content is prevented through immediate feedback, our results show that the AR training does not reach the personal training in terms of speed and recall precision after 24 hours. Furthermore, we show that once an assembly task is properly trained, there are no differences in the long-term recall precision, regardless of the training method.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Becoming a Robot – Overcoming Anthropomorphism with Techno-Mimesis

Judith Dörrenbächer (University of Siegen), Diana Löffler (University of Siegen), Marc Hassenzahl (University of Siegen)

Abstract | Tags: Full Paper | Links:

@inproceedings{DoerrenbaecherBecoming,

title = {Becoming a Robot – Overcoming Anthropomorphism with Techno-Mimesis},

author = {Judith Dörrenbächer (University of Siegen) and Diana Löffler (University of Siegen) and Marc Hassenzahl (University of Siegen)},

doi = {10.1145/3313831.3376507},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Employing anthropomorphism in physical appearance and behavior is the most widespread strategy for designing social robots. In the present paper, we argue that imitating humans impedes the full exploration of robots’ social abilities. In fact, their very ‘thingness’ (e.g., sensors, rationality) is able to create ‘superpowers’ that go beyond human abilities, such as endless patience. To better identify these special abilities, we develop a performative method called ‘Techno-Mimesis’ and explore it in a series of workshops with robot designers. Specifically, we create ‘prostheses’ to allow designers to transform themselves into their future robot to experience use cases from the robot’s perspective, e.g., ‘seeing’ with a distance sensor rather than with eyes. This imperfect imitation helps designers to experience being human and being robot at the same time, making differences apparent and facilitating the discovery of a number of potential physical, cognitive, and communicational robotic superpowers.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Bot or not? User Perceptions of Player Substitution with Deep Player Behavior Models

Johannes Pfau (University of Bremen), Jan David Smeddinck (Newcastle University), Ioannis Bikas (University of Bremen), Rainer Malaka (University of Bremen)

Abstract | Tags: Full Paper | Links:

@inproceedings{PfauBot,

title = {Bot or not? User Perceptions of Player Substitution with Deep Player Behavior Models},

author = {Johannes Pfau (University of Bremen) and Jan David Smeddinck (Newcastle University) and Ioannis Bikas (University of Bremen) and Rainer Malaka (University of Bremen)},

url = {https://www.twitter.com/dmlabbremen, Twitter},

doi = {10.1145/3313831.3376223},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Many online games suffer when players drop off due to lost connections or quitting prematurely, which leads to match terminations or game-play imbalances. While rule-based outcome evaluations or substitutions with bots are frequently used to mitigate such disruptions, these techniques are often perceived as unsatisfactory. Deep learning methods have successfully been used in deep player behavior modelling (DPBM) to produce non-player characters or bots which show more complex behavior patterns than those modelled using traditional AI techniques. Motivated by these findings, we present an investigation of the player-perceived awareness, believability and representativeness, when substituting disconnected players with DPBM agents in an online-multiplayer action game. Both quantitative and qualitative outcomes indicate that DPBM agents perform similarly to human players and that players were unable to detect substitutions. In contrast, players were able to detect substitution with agents driven by more traditional heuristics.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

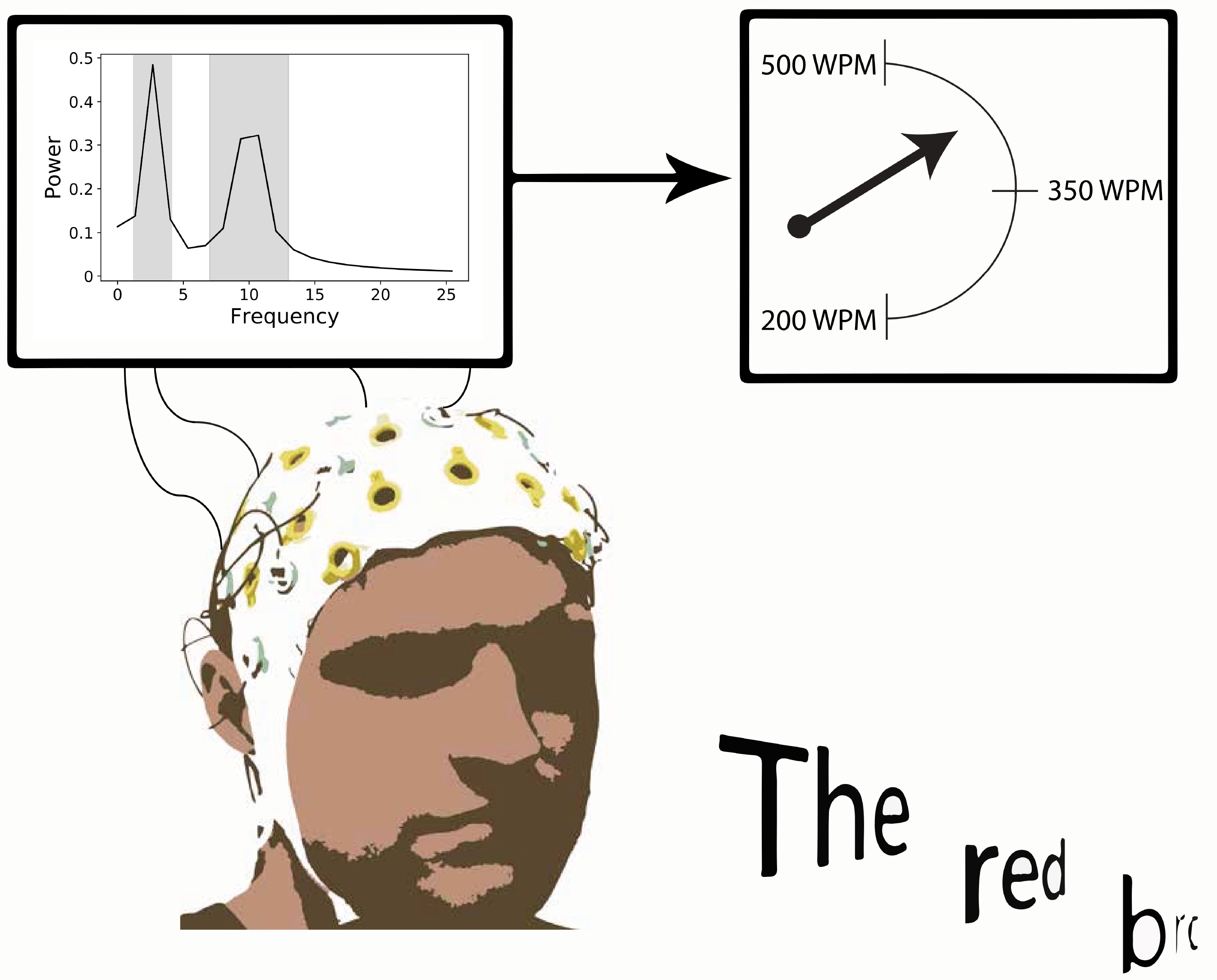

BrainCoDe: Electroencephalography-based Comprehension Detection during Reading and Listening

Christina Schneegass (LMU Munich), Thomas Kosch (LMU Munich), Andrea Baumann (LMU Munich), Marius Rusu (LMU Munich), Mariam Hassib (Bundeswehr University Munich), Heinrich Hussmann (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{SchneegassBrainCode,

title = {BrainCoDe: Electroencephalography-based Comprehension Detection during Reading and Listening},

author = {Christina Schneegass (LMU Munich) and Thomas Kosch (LMU Munich) and Andrea Baumann (LMU Munich) and Marius Rusu (LMU Munich) and Mariam Hassib (Bundeswehr University Munich) and Heinrich Hussmann (LMU Munich)},

url = {https://www.twitter.com/mimuc, Twitter},

doi = {10.1145/3313831.3376707},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {The pervasive availability of media in foreign languages is a rich resource for language learning. However, learners are forced to interrupt media consumption whenever comprehension problems occur. We present BrainCoDe, a method to implicitly detect vocabulary gaps through the evaluation of event-related potentials (ERPs). In a user study (N=16), we evaluate BrainCoDe by investigating differences in ERP amplitudes during listening and reading of known words compared to unknown words. We found significant deviations in N400 amplitudes during reading and in N100 amplitudes during listening when encountering unknown words. To evaluate the feasibility of ERPs for real-time applications, we trained a classifier that detects vocabulary gaps with an accuracy of 87.13% for reading and 82.64% for listening, identifying eight out of ten words correctly as known or unknown. We show the potential of BrainCoDe to support media learning through instant translations or by generating personalized learning content.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Breaking The Experience: Effects of Questionnaires in VR User Studies

Susanne Putze (University of Bremen), Dmitry Alexandrovsky (University of Bremen), Felix Putze (University of Bremen), Sebastian Höffner (University of Bremen), Jan David Smeddinck (Newcastle University), Rainer Malaka (University of Bremen)

Abstract | Tags: Full Paper | Links:

@inproceedings{PutzeBreaking,

title = {Breaking The Experience: Effects of Questionnaires in VR User Studies},

author = {Susanne Putze (University of Bremen) and Dmitry Alexandrovsky (University of Bremen) and Felix Putze (University of Bremen) and Sebastian Höffner (University of Bremen) and Jan David Smeddinck (Newcastle University) and Rainer Malaka (University of Bremen)},

url = {https://www.youtube.com/watch?v=iHdW3nphCZQ, Video

https://www.twitter.com/dmlabbremen, Twitter},

doi = {10.1145/3313831.3376144},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Questionnaires are among the most common research tools in virtual reality (VR) evaluations and user studies. However, transitioning from virtual worlds to the physical world to respond to VR experience questionnaires can potentially lead to systematic biases. Administering questionnaires in VR (inVRQs) is becoming more common in contemporary research. This is based on the intuitive notion that inVRQs may ease participation, reduce the Break in Presence (BIP) and avoid biases. In this paper, we perform a systematic investigation into the effects of interrupting the VR experience through questionnaires using physiological data as a continuous and objective measure of presence. In a user study (n=50), we evaluated question-asking procedures using a VR shooter with two different levels of immersion. The users rated their player experience with a questionnaire either inside or outside of VR. Our results indicate a reduced BIP for the employed inVRQ without affecting the self-reported player experience.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Capturing Experts' Mental Models to Organize a Collection of Haptic Devices: Affordances Outweigh Attributes

Hasti Seifi (Max Planck Institute for Intelligent Systems), Michael Oppermann (University of British Columbia), Julia Bullard (University of British Columbia), Karon MacLean (University of British Columbia), Katherine Kuchenbecker (Max Planck Institute for Intelligent Systems)

Tags: Full Paper | Links:

@inproceedings{SeifiCapturing,

title = {Capturing Experts' Mental Models to Organize a Collection of Haptic Devices: Affordances Outweigh Attributes},

author = {Hasti Seifi (Max Planck Institute for Intelligent Systems) and Michael Oppermann (University of British Columbia) and Julia Bullard (University of British Columbia) and Karon MacLean (University of British Columbia) and Katherine Kuchenbecker (Max Planck Institute for Intelligent Systems)},

doi = {10.1145/3313831.3376395},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Developing a Personality Model for Speech-based Conversational Agents Using the Psycholexical Approach

Sarah Theres Völkel (LMU Munich), Ramona Schödel (LMU Munich), Daniel Buschek (University of Bayreuth), Clemens Stachl (Stanford University), Verena Winterhalter (LMU Munich), Markus Bühner (LMU Munich), Heinrich Hussmann (LMU Munich)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{VoelkelDeveloping,

title = {Developing a Personality Model for Speech-based Conversational Agents Using the Psycholexical Approach},

author = {Sarah Theres Völkel (LMU Munich) and Ramona Schödel (LMU Munich) and Daniel Buschek (University of Bayreuth) and Clemens Stachl (Stanford University) and Verena Winterhalter (LMU Munich) and Markus Bühner (LMU Munich) and Heinrich Hussmann (LMU Munich)},

url = {https://www.twitter.com/mimuc, Twitter},

doi = {10.1145/3313831.3376210},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {We present the first systematic analysis of personality dimensions developed specifically to describe the personality of speech-based conversational agents. Following the psycholexical approach from psychology, we first report on a new multi-method approach to collect potentially descriptive adjectives from 1) a free description task in an online survey (228 unique descriptors), 2) an interaction task in the lab (176 unique descriptors), and 3) a text analysis of 30,000 online reviews of conversational agents (Alexa, Google Assistant, Cortana) (383 unique descriptors). We aggregate the results into a set of 349 adjectives, which are then rated by 744 people in an online survey. A factor analysis reveals that the commonly used Big Five model for human personality does not adequately describe agent personality. As an initial step to developing a personality model, we propose alternative dimensions and discuss implications for the design of agent personalities, personality-aware personalisation, and future research.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Dynamics of Aimed Mid-air Movements

Myroslav Bachynskyi (University of Bayreuth), Jörg Müller (University of Bayreuth)

Tags: Full Paper | Links:

@inproceedings{BachynskyiDynamics,

title = {Dynamics of Aimed Mid-air Movements},

author = {Myroslav Bachynskyi (University of Bayreuth) and Jörg Müller (University of Bayreuth)},

doi = {10.1145/3313831.3376194},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

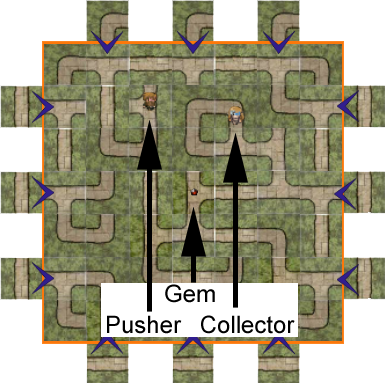

Enemy Within: Long-term Motivation Effects of Deep Player Behavior Models for Dynamic Difficulty Adjustment

Johannes Pfau (University of Bremen), Jan David Smeddinck (Newcastle University), Rainer Malaka (University of Bremen)

Abstract | Tags: Full Paper | Links:

@inproceedings{PfauEnemy,

title = {Enemy Within: Long-term Motivation Effects of Deep Player Behavior Models for Dynamic Difficulty Adjustment},

author = {Johannes Pfau (University of Bremen) and Jan David Smeddinck (Newcastle University) and Rainer Malaka (University of Bremen)},

url = {https://www.youtube.com/watch?v=QOdFmvQnPJQ, Video

https://www.twitter.com/dmlabbremen, Twitter},

doi = {10.1145/3313831.3376423},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Balancing games and producing content that remains interesting and challenging is a major cost factor in the design and maintenance of games. Dynamic difficulty adjustment (DDA) can successfully tune challenge levels to player abilities, but when implemented with classic heuristic parameter tuning (HPT) often turns out to be very noticeable, e.g. as “rubber-banding”. Deep learning techniques can be employed for deep player behavior modeling (DPBM), enabling more complex adaptivity, but effects over frequent and longer-lasting game engagements, as well as comparisons to HPT have not been empirically investigated. We present a situated study of the effects of DDA via DPBM as compared to HPT on intrinsic motivation, perceived challenge and player motivation in a real-world MMORPG. The results indicate that DPBM can lead to significant improvements in intrinsic motivation and players prefer game experience episodes featuring DPBM over experience episodes with classic difficulty management.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Evaluation of a Financial Portfolio Visualization using Computer Displays and Mixed Reality Devices with Domain Experts

Kay Schroeder (Zuyd University of Applied Sciences), Batoul Ajdadilish (Zuyd University of Applied Sciences), Alexander P. Henkel (Zuyd University of Applied Sciences), André Calero Valdez (RWTH Aachen University)

Abstract | Tags: Full Paper | Links:

@inproceedings{SchroederEvaluation,

title = {Evaluation of a Financial Portfolio Visualization using Computer Displays and Mixed Reality Devices with Domain Experts},

author = {Kay Schroeder (Zuyd University of Applied Sciences) and Batoul Ajdadilish (Zuyd University of Applied Sciences) and Alexander P. Henkel (Zuyd University of Applied Sciences) and André Calero Valdez (RWTH Aachen University)},

doi = {10.1145/3313831.3376556},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {With the advent of mixed reality devices such as the Microsoft HoloLens, developers have been faced with the challenge to utilize the third dimension in information visualization effectively. Research on stereoscopic devices has shown that three-dimensional representation can improve accuracy in specific tasks (e.g., network visualization). Yet, so far the field has remained mute on the underlying mechanism. Our study systematically investigates the differences in user perception between a regular monitor and a mixed reality device. In a real-life within-subject experiment in the field with twenty-eight investment bankers, we assessed subjective and objective task performance with two- and three-dimensional systems, respectively. We tested accuracy with regard to position, size, and color using single and combined tasks. Our results do not show a significant difference in accuracy between mixed-reality and standard 2D monitor visualizations.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Examining Design Choices of Questionnaires in VR User Studies

Dmitry Alexandrovsky (University of Bremen), Susanne Putze (University of Bremen), Michael Bonfert (University of Bremen), Sebastian Höffner (University of Bremen), Pitt Michelmann (University of Bremen), Dirk Wenig (University of Bremen), Rainer Malaka (University of Bremen), Jan David Smeddinck (Newcastle University)

Abstract | Tags: Full Paper | Links:

@inproceedings{AlexandrovskyExamining,

title = {Examining Design Choices of Questionnaires in VR User Studies},

author = {Dmitry Alexandrovsky (University of Bremen) and Susanne Putze (University of Bremen) and Michael Bonfert (University of Bremen) and Sebastian Höffner (University of Bremen) and Pitt Michelmann (University of Bremen) and Dirk Wenig (University of Bremen) and Rainer Malaka (University of Bremen) and Jan David Smeddinck (Newcastle University)},

url = {https://www.youtube.com/watch?v=T32Sop_LFu0&feature=youtu.be, Video

https://www.twitter.com/dmlabbremen, Twitter},

doi = {10.1145/3313831.3376260},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Questionnaires are among the most common research tools in virtual reality (VR) user studies. Transitioning from virtuality to reality for giving self-reports on VR experiences can lead to systematic biases. VR allows questionnaires to be embedded into the virtual environment, which may ease participation and avoid biases. To provide a cohesive picture of methods and design choices for questionnaires in VR (inVRQ), we discuss 15 inVRQ studies from the literature and present a survey with 67 VR experts from academia and industry. Based on the outcomes, we conducted two user studies in which we tested different presentation and interaction methods of inVRQs and evaluated the usability and practicality of our design. We observed comparable completion times between inVRQs and questionnaires outside VR (outVRQs) with higher enjoyment but lower usability for inVRQs. These findings advocate the application of inVRQs and provide an overview of methods and considerations that lay the groundwork for inVRQ design.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

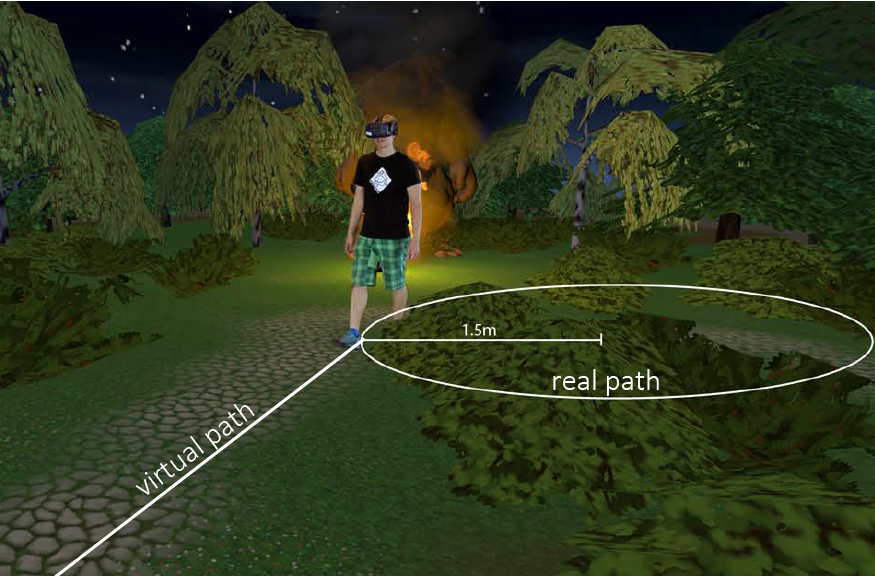

Exploring Human-Robot Interaction with the Elderly: Results from a Ten-Week Case Study in a Care Home

Felix Carros (Uni Siegen), Johanna Meurer (Uni Siegen), Diana Löffler (Uni Siegen), David Unbehaun (Uni Siegen), Sarah Matthies (Uni Siegen), Inga Koch (Uni Siegen), Rainer Wieching (Uni Siegen), Dave Randall (Uni Siegen), Marc Hassenzahl (Uni Siegen), Volker Wulf (Uni Siegen)

Abstract | Tags: Full Paper | Links:

@inproceedings{CarrosExploring,

title = {Exploring Human-Robot Interaction with the Elderly: Results from a Ten-Week Case Study in a Care Home},

author = {Felix Carros (Uni Siegen) and Johanna Meurer (Uni Siegen) and Diana Löffler (Uni Siegen) and David Unbehaun (Uni Siegen) and Sarah Matthies (Uni Siegen) and Inga Koch (Uni Siegen) and Rainer Wieching (Uni Siegen) and Dave Randall (Uni Siegen) and Marc Hassenzahl (Uni Siegen) and Volker Wulf (Uni Siegen)},

doi = {10.1145/3313831.3376402},

year = {2020},

date = {2020-05-01},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {We conducted an experiment to evaluate the LUI and our novel anchor-turning rotation control method regarding task performance, spatial cognition, VR sickness, sense of presence, usability and comfort in a path-integration task. The results show that VR Strider has a significant positive effect on the participants' angular and distance estimation, sense of presence and feeling of comfort compared to other established locomotion techniques, such as teleportation and joystick-based navigation.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

FaceHaptics: Robot Arm based Versatile Facial Haptics for Immersive Environments

Alexander Wilberz (Hochschule Bonn-Rhein-Sieg), Dominik Leschtschow (Hochschule Bonn-Rhein-Sieg), Christina Trepkowski (Hochschule Bonn-Rhein-Sieg), Jens Maiero (Hochschule Bonn-Rhein-Sieg), Ernst Kruijff (Hochschule Bonn-Rhein-Sieg), Bernhard Riecke (Simon Fraser University)

Tags: Full Paper | Links:

@inproceedings{WilberzFaceHaptics,

title = {FaceHaptics: Robot Arm based Versatile Facial Haptics for Immersive Environments},

author = {Alexander Wilberz (Hochschule Bonn-Rhein-Sieg) and Dominik Leschtschow (Hochschule Bonn-Rhein-Sieg) and Christina Trepkowski (Hochschule Bonn-Rhein-Sieg) and Jens Maiero (Hochschule Bonn-Rhein-Sieg) and Ernst Kruijff (Hochschule Bonn-Rhein-Sieg) and Bernhard Riecke (Simon Fraser University)},

doi = {10.1145/3313831.3376481},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Fairness and Decision-making in Collaborative Shift Scheduling Systems

Alarith Uhde (Uni Siegen), Nadine Schlicker (Ergosign GmbH), Dieter P. Wallach (Ergosign GmbH), Marc Hassenzahl (Uni Siegen)

Abstract | Tags: Full Paper | Links:

@inproceedings{UhdeFairness,

title = {Fairness and Decision-making in Collaborative Shift Scheduling Systems},

author = {Alarith Uhde (Uni Siegen) and Nadine Schlicker (Ergosign GmbH) and Dieter P. Wallach (Ergosign GmbH) and Marc Hassenzahl (Uni Siegen)},

doi = {10.1145/3313831.3376656},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {The strains associated with shift work decrease healthcare workers' well-being. However, shift schedules adapted to their individual needs can partially mitigate these problems. From a computing perspective, shift scheduling was so far mainly treated as an optimization problem with little attention given to the preferences, thoughts, and feelings of the healthcare workers involved. In the present study, we explore fairness as a central, human-oriented attribute of shift schedules as well as the scheduling process. Three in-depth qualitative interviews and a validating vignette study revealed that while on an abstract level healthcare workers agree on equality as the guiding norm for a fair schedule, specific scheduling conflicts should foremost be resolved by negotiating the importance of individual needs. We discuss elements of organizational fairness, including transparency and team spirit. Finally, we present a sketch for fair scheduling systems, summarizing key findings for designers in a readily usable way.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Feminist Living Labs as Research Infrastructures for HCI: The Case of a Video Game Company

Michael Ahmadi (University of Siegen), Rebecca Eilert (University of Siegen), Anne Weibert (University of Siegen), Volker Wulf (University of Siegen), Nicola Marsden (Heilbronn University)

Abstract | Tags: Full Paper | Links:

@inproceedings{AhmadiFeminist,

title = {Feminist Living Labs as Research Infrastructures for HCI: The Case of a Video Game Company},

author = {Michael Ahmadi (University of Siegen) and Rebecca Eilert (University of Siegen) and Anne Weibert (University of Siegen) and Volker Wulf (University of Siegen) and Nicola Marsden (Heilbronn University)},

doi = {10.1145/3313831.3376716},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {The number of women in IT is still low and companies struggle to integrate female professionals. The aim of our research is to provide methodological support for understanding and sharing experiences of gendered practices in the IT industry and encouraging sustained reflection about these matters over time. We established a Living Lab with that end in view, aiming to enhance female participation in the IT workforce and committing ourselves to a participatory approach to the sharing of women’s experiences. Here, using the case of a German video game company which participated in our Lab, we detail our lessons learned. We show that this kind of long-term participation involves challenges over the lifetime of the project but can lead to substantial benefits for organizations. Our findings demonstrate that Living Labs are suitable for giving voice to marginalized groups, addressing their concerns and evoking change possibilities. Nevertheless, uncertainties about long-term sustainability remain.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

GazeConduits: Calibration-Free Cross-Device Collaboration through Gaze and Touch

Simon Voelker (RWTH), Sebastian Hueber (RWTH), Christian Holz (ETH Zurich), Christian Remy (Aarhus University), Nicolai Marquardt (University College London)

Abstract | Tags: Full Paper | Links:

@inproceedings{VoelkerGaze,

title = {GazeConduits: Calibration-Free Cross-Device Collaboration through Gaze and Touch},

author = {Simon Voelker (RWTH) and Sebastian Hueber (RWTH) and Christian Holz (ETH Zurich) and Christian Remy (Aarhus University) and Nicolai Marquardt (University College London)},

url = {https://youtu.be/Q59SQi0JUkg, Video},

doi = {10.1145/3313831.3376578},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {We present GazeConduits, a calibration-free ad-hoc mobile device setup that enables users to collaboratively interact with tablets, other users, and content in a cross-device setting using gaze and touch input. GazeConduits leverages recently presented phone capabilities to detect facial features and estimate users’ gaze directions. To join a collaborative setting, users place one or more tablets onto a shared table and position their phone in the center, which then tracks present users as well as their gaze direction to predict the tablets they look at. Using GazeConduits, we demonstrate a series of techniques for collaborative interaction across mobile devices for content selection and manipulation. Our evaluation with 20 simultaneous tablets on a table showed that GazeConduits can reliably identify at which tablet or at which collaborator a user is looking, enabling a rich set of interaction techniques.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Getting out of Out of Sight: Evaluation of AR Mechanisms for Awareness and Orientation Support in Occluded Multi-Room Settings

Niklas Osmers (TU Clausthal), Michael Prilla (TU Clausthal)

Abstract | Tags: Full Paper | Links:

@inproceedings{OsmersGetting,

title = {Getting out of Out of Sight: Evaluation of AR Mechanisms for Awareness and Orientation Support in Occluded Multi-Room Settings},

author = {Niklas Osmers (TU Clausthal) and Michael Prilla (TU Clausthal)},

url = {https://www.twitter.com/HCISGroup, Twitter},

doi = {10.1145/3313831.3376742},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Augmented Reality can provide orientation and awareness in situations in which objects or people are occluded by physical structures. This is relevant for many situations in the workplace, where objects are scattered across rooms and people are out of sight. While several AR mechanisms have been proposed to provide awareness and orientation in these situations, little is known about their effect on people's performance when searching objects and coordinating with each other. In this paper, we compare three AR based mechanisms (map, x-ray, compass) according to their utility, usability, social presence, task load and users’ preferences. 48 participants had to work together in groups of four to find people and objects located around different rooms. Results show that map and x-ray performed best but provided least social presence among participants. We discuss these and other observations as well as potential impacts on designing AR awareness and orientation support.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

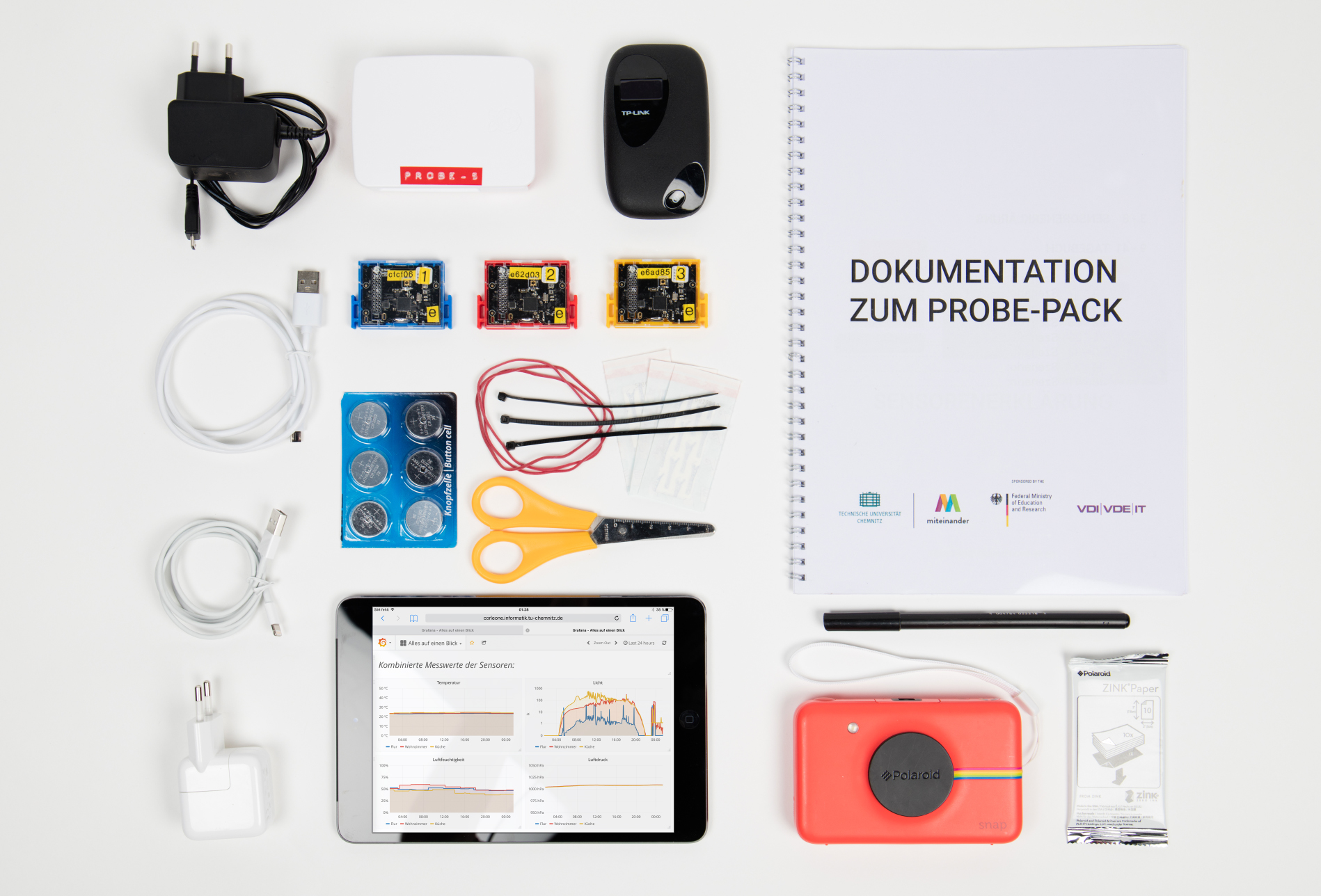

Guess the Data: Data Work to Understand How People Make Sense of and Use Simple Sensor Data from Homes

Albrecht Kurze (Chemnitz University of Technology), Andreas Bischof (Chemnitz University of Technology), Sören Totzauer (Chemnitz University of Technology), Michael Storz (Chemnitz University of Technology), Maximilian Eibl (Chemnitz University of Technology), Margot Brereton (Queensland University of Technology), Arne Berger (Anhalt University of Applied Sciences)

Abstract | Tags: Full Paper | Links:

@inproceedings{KurzeGuess,

title = {Guess the Data: Data Work to Understand How People Make Sense of and Use Simple Sensor Data from Homes},

author = {Albrecht Kurze (Chemnitz University of Technology) and Andreas Bischof (Chemnitz University of Technology) and Sören Totzauer (Chemnitz University of Technology) and Michael Storz (Chemnitz University of Technology) and Maximilian Eibl (Chemnitz University of Technology) and Margot Brereton (Queensland University of Technology) and Arne Berger (Anhalt University of Applied Sciences)},

url = {https://www.twitter.com/arneberger, Twitter},

doi = {10.1145/3313831.3376273},

year = {2020},

date = {2020-05-01},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Simple smart home sensors, e.g. for temperature or light, increasingly collect seemingly inconspicuous data. Prior work has shown that human sensemaking of such sensor data can reveal domestic activities. Such sensemaking presents an opportunity to empower people to understand the implications of simple smart home sensors. To investigate, we developed and field-tested the Guess the Data method, which enabled people to use and make sense of live data from their homes and to collectively interpret and reflect on anonymized data from the homes in our study. Our findings show how participants reconstruct behavior, both individually and collectively, expose the sensitive personal data of others, and use sensor data as evidence and for lateral surveillance within the household. We discuss the potential of our method as a participatory HCI method for investigating design of the IoT and implications created by doing data work on home sensors.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

HeadReach: Using Head Tracking to Increase Reachability on Mobile Touch Devices

Simon Voelker (RWTH), Sebastian Hueber (RWTH), Christian Corsten (RWTH), Christian Remy (Aarhus University)

Abstract | Tags: Full Paper | Links:

@inproceedings{VoelkerHeadReach,

title = {HeadReach: Using Head Tracking to Increase Reachability on Mobile Touch Devices},

author = {Simon Voelker (RWTH) and Sebastian Hueber (RWTH) and Christian Corsten (RWTH) and Christian Remy (Aarhus University)},

url = {https://youtu.be/IyVp5VFde2w, Video},

doi = {10.1145/3313831.3376868},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {People often operate their smartphones with only one hand, using just their thumb for touch input. With today’s larger smartphones, this leads to a reachability issue: Users can no longer comfortably touch everywhere on the screen without changing their grip. We investigate using the head tracking in modern smartphones to address this reachability issue. We developed three interaction techniques, pure head (PH), head + touch (HT), and head area + touch (HA), to select targets beyond the reach of one’s thumb. In two user studies, we found that selecting targets using HT and HA had higher success rates than the default direct touch (DT) while standing (by about 9%) and walking (by about 12%), while being moderately slower. HT and HA were also faster than one of the best techniques, BezelCursor (BC) (by about 20% while standing and 6% while walking), while having the same success rate.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Heartbeats in the Wild: A Field Study Exploring ECG Biometrics in Everyday Life

Florian Lehmann (LMU Munich / University of Bayreuth), Daniel Buschek (University of Bayreuth)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{LehmannHeartbeats,

title = {Heartbeats in the Wild: A Field Study Exploring ECG Biometrics in Everyday Life},

author = {Florian Lehmann (LMU Munich / University of Bayreuth) and Daniel Buschek (University of Bayreuth)},

url = {https://www.twitter.com/mimuc, Twitter},

doi = {10.1145/3313831.3376536},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {This paper reports on an in-depth study of electrocardiogram (ECG) biometrics in everyday life. We collected ECG data from 20 people over a week, using a non-medical chest tracker. We evaluated user identification accuracy in several scenarios and observed equal error rates of 9.15% to 21.91%, heavily depending on 1) the number of days used for training, and 2) the number of heartbeats used per identification decision. We conclude that ECG biometrics can work in the wild but are less robust than expected based on the literature, highlighting that previous lab studies obtained highly optimistic results with regard to real life deployments. We explain this with noise due to changing body postures and states as well as interrupted measures. We conclude with implications for future research and the design of ECG biometrics systems for real world deployments, including critical reflections on privacy.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Heatmaps, Shadows, Bubbles, Rays: Comparing Mid-Air Pen Position Visualizations in Handheld AR

Philipp Wacker (RWTH), Adrian Wagner (RWTH), Simon Voelker (RWTH), Jan Borchers (RWTH)

Abstract | Tags: Full Paper | Links:

@inproceedings{WackerHeatmaps,

title = {Heatmaps, Shadows, Bubbles, Rays: Comparing Mid-Air Pen Position Visualizations in Handheld AR},

author = {Philipp Wacker (RWTH) and Adrian Wagner (RWTH) and Simon Voelker (RWTH) and Jan Borchers (RWTH)},

url = {https://youtu.be/sFPP2xeAEP8, Video},

doi = {10.1145/3313831.3376848},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {In Handheld Augmented Reality, users look at AR scenes through the smartphone held in their hand. In this setting, having a mid-air pointing device like a pen in the other hand greatly expands the interaction possibilities. For example, it lets users create 3D sketches and models while on the go. However, perceptual issues in Handheld AR make it difficult to judge the distance of a virtual object, making it hard to align a pen to it. To address this, we designed and compared different visualizations of the pen's position in its virtual environment, measuring pointing precision, task time, activation patterns, and subjective ratings of helpfulness, confidence, and comprehensibility of each visualization. While all visualizations resulted in only minor differences in precision and task time, subjective ratings of perceived helpfulness and confidence favor a `heatmap' technique that colors the objects in the scene based on their distance to the pen.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

HiveFive: Immersion Preserving Attention Guidance in Virtual Reality

Daniel Lange (University of Oldenburg), Tim Claudius Stratmann (OFFIS - Institute for IT), Uwe Gruenefeld (OFFIS - Institute for IT), Susanne Boll (University of Oldenburg)

Abstract | Tags: Full Paper | Links:

@inproceedings{LangeHiveFive,

title = {HiveFive: Immersion Preserving Attention Guidance in Virtual Reality},

author = {Daniel Lange (University of Oldenburg) and Tim Claudius Stratmann (OFFIS - Institute for IT) and Uwe Gruenefeld (OFFIS - Institute for IT) and Susanne Boll (University of Oldenburg)},

url = {https://youtu.be/df_onXBj7cM, Video},

doi = {10.1145/3313831.3376803},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Recent advances in Virtual Reality (VR) technology, such as larger fields of view, have made VR increasingly immersive. However, a larger field of view often results in a user focusing on certain directions and missing relevant content presented elsewhere on the screen. With HiveFive, we propose a technique that uses swarm motion to guide user attention in VR. The goal is to seamlessly integrate directional cues into the scene without losing immersiveness. We evaluate HiveFive in two studies. First, we compare biological motion (from a prerecorded swarm) with non-biological motion (from an algorithm), finding further evidence that humans can distinguish between these motion types and that, contrary to our hypothesis, non-biological swarm motion results in significantly faster response times. Second, we compare HiveFive to four other techniques and show that it not only results in fast response times but also has the smallest negative effect on immersion.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

How to Trick AI: Users’ Strategies for Protecting Themselves From Automatic Personality Assessment

Sarah Theres Völkel (LMU Munich), Renate Häuslschmid (Madeira Interactive Technologies Institute), Anna Werner (LMU Munich), Heinrich Hussmann (LMU Munich), Andreas Butz (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{VoelkelHow,

title = {How to Trick AI: Users’ Strategies for Protecting Themselves From Automatic Personality Assessment},

author = {Sarah Theres Völkel (LMU Munich) and Renate Häuslschmid (Madeira Interactive Technologies Institute) and Anna Werner (LMU Munich) and Heinrich Hussmann (LMU Munich) and Andreas Butz (LMU Munich)},

url = {https://www.twitter.com/mimuc, Twitter},

doi = {10.1145/3313831.3376877},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Psychological targeting tries to influence and manipulate users' behaviour. We investigated whether users can protect themselves from being profiled by a chatbot, which automatically assesses users' personality. Participants interacted twice with the chatbot: (1) They chatted for 45 minutes in customer service scenarios and received their actual profile (baseline). (2) They then were asked to repeat the interaction and to disguise their personality by strategically tricking the chatbot into calculating a falsified profile. In interviews, participants mentioned 41 different strategies but could only apply a subset of them in the interaction. They were able to manipulate all Big Five personality dimensions by nearly 10%. Participants regarded personality as very sensitive data. As they found tricking the AI too exhaustive for everyday use, we reflect on opportunities for privacy protective designs in the context of personality-aware systems.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Improving Humans' Ability to Interpret Deictic Gestures in Virtual Reality

Sven Mayer (Carnegie Mellon University / University of Stuttgart), Jens Reinhardt (Hamburg University of Applied Sciences), Robin Schweigert (University of Stuttgart), Brighten Jelke (Macalester College), Valentin Schwind (University of Stuttgart / University of Regensburg), Katrin Wolf (Hamburg University of Applied Sciences), Niels Henze (University of Regensburg)

Abstract | Tags: Full Paper | Links:

@inproceedings{MayerImproving,

title = {Improving Humans' Ability to Interpret Deictic Gestures in Virtual Reality},

author = {Sven Mayer (Carnegie Mellon University / University of Stuttgart) and Jens Reinhardt (Hamburg University of Applied Sciences) and Robin Schweigert (University of Stuttgart) and Brighten Jelke (Macalester College) and Valentin Schwind (University of Stuttgart / University of Regensburg) and Katrin Wolf (Hamburg University of Applied Sciences) and Niels Henze (University of Regensburg)},

url = {https://www.youtube.com/watch?v=Afi4TPzHdlM, Video},

doi = {10.1145/3313831.3376340},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Collaborative Virtual Environments (CVEs) offer unique opportunities for human communication. Humans can interact with each other over a distance in any environment and visual embodiment they want. Although deictic gestures are especially important as they can guide other humans' attention, humans make systematic errors when using and interpreting them. Recent work suggests that the interpretation of vertical deictic gestures can be significantly improved by warping the pointing arm. In this paper, we extend previous work by showing that models also make it possible to improve the interpretation of deictic gestures at targets all around the user. Through a study with 28 participants in a CVE, we analyzed the errors users make when interpreting deictic gestures. We derived a model that rotates the arm of a pointing user's avatar to improve the observing users' accuracy. A second study with 24 participants shows that we can improve observers' accuracy by 22.9%. As our approach is not noticeable for users, it improves their accuracy without requiring them to learn a new interaction technique or distracting from the experience.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Improving the Usability and UX of the Swiss Internet Voting Interface

Karola Marky (TU Darmstadt), Verena Zimmermann (TU Darmstadt), Markus Funk (Cerence GmbH), Jörg Daubert (TU Darmstadt), Kira Bleck (TU Darmstadt), Max Mühlhäuser (TU Darmstadt)

Abstract | Tags: Full Paper | Links:

@inproceedings{MarkyImproving,

title = {Improving the Usability and UX of the Swiss Internet Voting Interface},

author = {Karola Marky (TU Darmstadt) and Verena Zimmermann (TU Darmstadt) and Markus Funk (Cerence GmbH) and Jörg Daubert (TU Darmstadt) and Kira Bleck (TU Darmstadt) and Max Mühlhäuser (TU Darmstadt)},

url = {https://twitter.com/search?q=%23teamdarmstadt&src=typed_query&f=live, Twitter},

doi = {10.1145/3313831.3376769},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Up to 20% of residential votes and up to 70% of absentee votes in Switzerland are cast online. The Swiss scheme aims to provide individual verifiability by different verification codes. The voters have to carry out verification on their own, making the usability and UX of the interface of great importance. To improve the usability, we first performed an evaluation with 12 human-computer interaction experts to uncover usability weaknesses of the Swiss Internet voting interface. Based on the experts' findings, related work, and an exploratory user study with 36 participants, we propose a redesign that we evaluated in a user study with 49 participants. Our study confirmed that the redesign indeed improves the detection of incorrect votes by 33% and increases the trust and understanding of the voters. Our studies furthermore contribute important recommendations for designing verifiable e-voting systems in general.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Improving Worker Engagement Through Conversational Microtask Crowdsourcing

Sihang Qiu (Delft University of Technology), Ujwal Gadiraju (Leibniz Universität Hannover), Alessandro Bozzon (Delft University of Technology)

Tags: Full Paper | Links:

@inproceedings{QiuImproving,

title = {Improving Worker Engagement Through Conversational Microtask Crowdsourcing},

author = {Sihang Qiu (Delft University of Technology) and Ujwal Gadiraju (Leibniz Universität Hannover) and Alessandro Bozzon (Delft University of Technology)},

doi = {10.1145/3313831.3376403},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

In-game and Out-of-game Social Anxiety Influences Player Motivations, Activities, and Experiences in MMORPGs

Martin Dechant (University of Saskatchewan), Susanne Poeller (University of Trier), Colby Johanson (University of Saskatchewan), Katelyn Wiley (University of Saskatchewan), Regan Mandryk (University of Saskatchewan)

Tags: Full Paper | Links:

@inproceedings{DechantInOut,

title = {In-game and Out-of-game Social Anxiety Influences Player Motivations, Activities, and Experiences in MMORPGs},

author = {Martin Dechant (University of Saskatchewan) and Susanne Poeller (University of Trier) and Colby Johanson (University of Saskatchewan) and Katelyn Wiley (University of Saskatchewan) and Regan Mandryk (University of Saskatchewan)},

doi = {10.1145/3313831.3376734},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Interaction Techniques for Visual Exploration Using Embedded Word-Scale Visualizations

Pascal Goffin (University of Utah), Tanja Blascheck (University of Stuttgart), Petra Isenberg (Inria), Wesley Willett (University of Calgary)

Abstract | Tags: Full Paper | Links:

@inproceedings{GoffinInteraction,

title = {Interaction Techniques for Visual Exploration Using Embedded Word-Scale Visualizations},

author = {Pascal Goffin (University of Utah) and Tanja Blascheck (University of Stuttgart) and Petra Isenberg (Inria) and Wesley Willett (University of Calgary)},

doi = {10.1145/3313831.3376842},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {We describe a design space of view manipulation interactions for small data-driven contextual visualizations (word-scale visualizations). These interaction techniques support an active reading experience and engage readers through exploration of embedded visualizations whose placement and content connect them to specific terms in a document. A reader could, for example, use our proposed interaction techniques to explore word-scale visualizations of stock market trends for companies listed in a market overview article. When readers wish to engage more deeply with the data, they can collect, arrange, compare, and navigate the document using the embedded word-scale visualizations, permitting more visualization-centric analyses. We support our design space with a concrete implementation, illustrate it with examples from three application domains, and report results from two experiments. The experiments show how view manipulation interactions helped readers examine embedded visualizations more quickly and with less scrolling and yielded qualitative feedback on usability and future opportunities.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Investigating User-Created Gamification in an Image Tagging Task

Marc Schubhan (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus), Maximilian Altmeyer (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus), Dominic Buchheit (Saarland University), Pascal Lessel (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus)

Abstract | Tags: Full Paper | Links:

@inproceedings{SchubhanInvestigating,

title = {Investigating User-Created Gamification in an Image Tagging Task},

author = {Marc Schubhan (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus) and Maximilian Altmeyer (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus) and Dominic Buchheit (Saarland University) and Pascal Lessel (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus)},

url = {https://www.youtube.com/watch?v=C_2RE_Tfzys, Video},

doi = {10.1145/3313831.3376360},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Commonly, gamification is designed by developers and not by end-users. In this paper we investigate an approach where users take control of this process. Firstly, users were asked to describe their own gamification concepts which would motivate them to put more effort into an image tagging task. We selected this task as gamification has already been shown to be effective here in previous work. Based on these descriptions, an implementation was made for each concept and given to the creator. In a between-subjects study (n=71), our approach was compared to a no-gamification condition and two conditions with fixed gamification settings. We found that providing participants with an implementation of their own concept significantly increased the amount of generated tags compared to the other conditions. Although the quality of tags was lower, the number of usable tags remained significantly higher in comparison, suggesting the usefulness of this approach.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

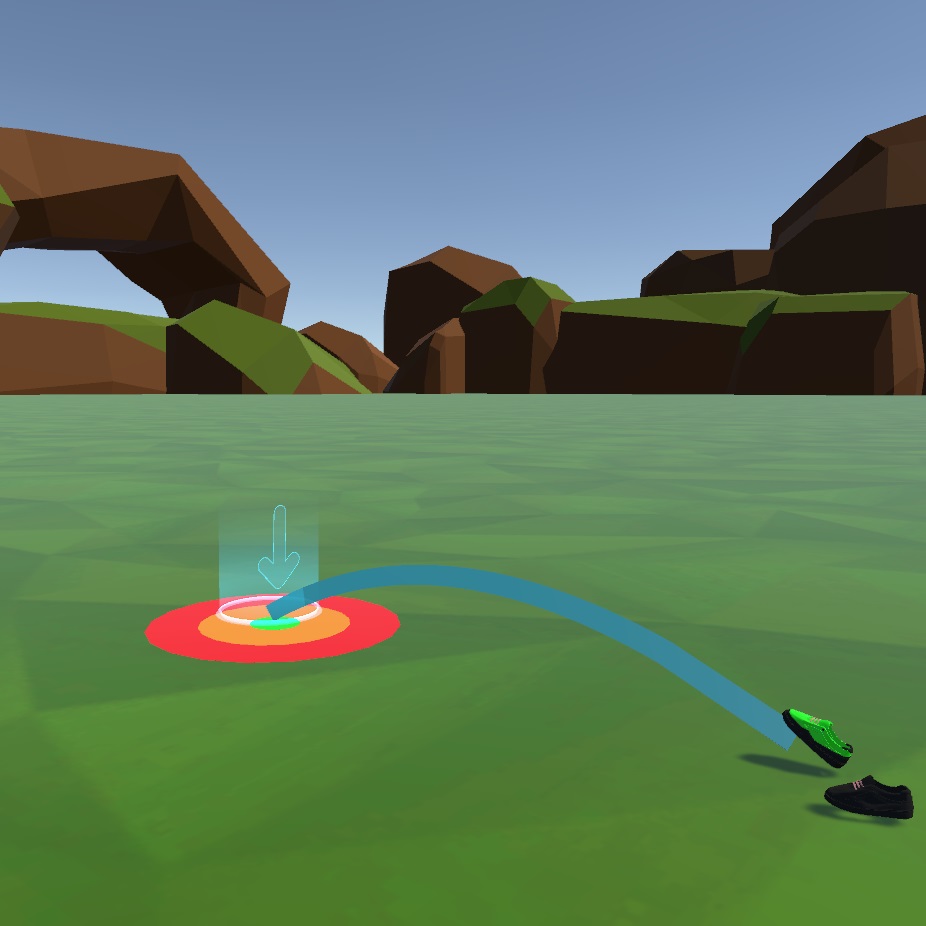

JumpVR: Jump-Based Locomotion Augmentation for Virtual Reality

Dennis Wolf (Ulm University), Katja Rogers (Ulm University), Christoph Kunder (Ulm University), Enrico Rukzio (Ulm University)

Abstract | Tags: Full Paper | Links:

@inproceedings{WolfJump,

title = {JumpVR: Jump-Based Locomotion Augmentation for Virtual Reality},

author = {Dennis Wolf (Ulm University) and Katja Rogers (Ulm University) and Christoph Kunder (Ulm University) and Enrico Rukzio (Ulm University)},

url = {https://youtu.be/JNWfs3-V1zQ, Video

https://www.twitter.com/mi_uulm, Twitter},

doi = {10.1145/3313831.3376243},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {One of the great benefits of virtual reality (VR) is the implementation of features that go beyond realism. Common “unrealistic” locomotion techniques (like teleportation) can avoid spatial limitation of tracking but minimize potential benefits of more realistic techniques (e.g., walking). As an alternative that combines realistic physical movement with hyper-realistic virtual outcome, we present JumpVR, a jump-based locomotion augmentation technique that virtually scales users’ physical jumps. In a user study (N=28), we show that jumping in VR (regardless of scaling) can significantly increase presence, motivation and immersion compared to teleportation, while largely not increasing simulator sickness. Further, participants reported higher immersion and motivation for most scaled jumping variants than forward-jumping. Our work shows the feasibility and benefits of jumping in VR and explores suitable parameters for its hyper-realistic scaling. We discuss design implications for VR experiences and research.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Leveraging Error Correction in Voice-based Text Entry by Talk-and-Gaze

Korok Sengupta (University of Koblenz), Sabin Bhattarai (University of Koblenz), Sayan Sarcar (University of Tsukuba), Scott MacKenzie (York University), Steffen Staab (University of Stuttgart)

Abstract | Tags: Full Paper | Links:

@inproceedings{SenguptaLeveraging,

title = {Leveraging Error Correction in Voice-based Text Entry by Talk-and-Gaze},

author = {Korok Sengupta (University of Koblenz) and Sabin Bhattarai (University of Koblenz) and Sayan Sarcar (University of Tsukuba) and Scott MacKenzie (York University) and Steffen Staab (University of Stuttgart)},

url = {https://www.twitter.com/AnalyticComp, Twitter},

doi = {10.1145/3313831.3376579},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {We present the design and evaluation of Talk-and-Gaze (TaG), a method for selecting and correcting errors with voice and gaze. TaG uses eye gaze to overcome the inability of voice-only systems to provide spatial information. The user’s point of gaze is used to select an erroneous word either by dwelling on the word for 800 ms (D-TaG) or by uttering a “select” voice command (V-TaG). A user study with 12 participants compared D-TaG, V-TaG, and a voice-only method for selecting and correcting words. Corrections were performed more than 20% faster with D-TaG compared to the V-TaG or voice-only methods. As well, D-TaG was observed to require 24% less selection effort than V-TaG and 11% less selection effort than voice-only error correction. D-TaG was well received in a subjective assessment with 66% of users choosing it as their preferred choice for error correction in voice-based text entry.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Levitation Simulator: Prototyping Ultrasonic Levitation Interfaces in Virtual Reality

Viktorija Paneva (University of Bayreuth), Myroslav Bachynskyi (University of Bayreuth), Jörg Müller (University of Bayreuth)

Tags: Full Paper, Honorable Mention | Links:

@inproceedings{PanevaLevitation,

title = {Levitation Simulator: Prototyping Ultrasonic Levitation Interfaces in Virtual Reality},

author = {Viktorija Paneva (University of Bayreuth) and Myroslav Bachynskyi (University of Bayreuth) and Jörg Müller (University of Bayreuth)},

doi = {10.1145/3313831.3376409},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Listen to Developers! A Participatory Design Study on Security Warnings for Cryptographic APIs