2020 in Numbers

This year, the German labs contribute 138 publications in total to the 2020 ACM CHI Conference on Human Factors in Computing Systems. At the heart, there are 83 Papers, including 1 Best Paper and 14 Honorable Mentions. Further, we bring 34 Late-Breaking Works, 5 Demonstrations, 7 organized Workshops & Symposia, 2 Case Studies, 2 Journal Articles, 1 SIG, 1 SIGCHI Outstanding Dissertation Award and 1 Student Game Competition to CHI this year. All these publications are listed below.

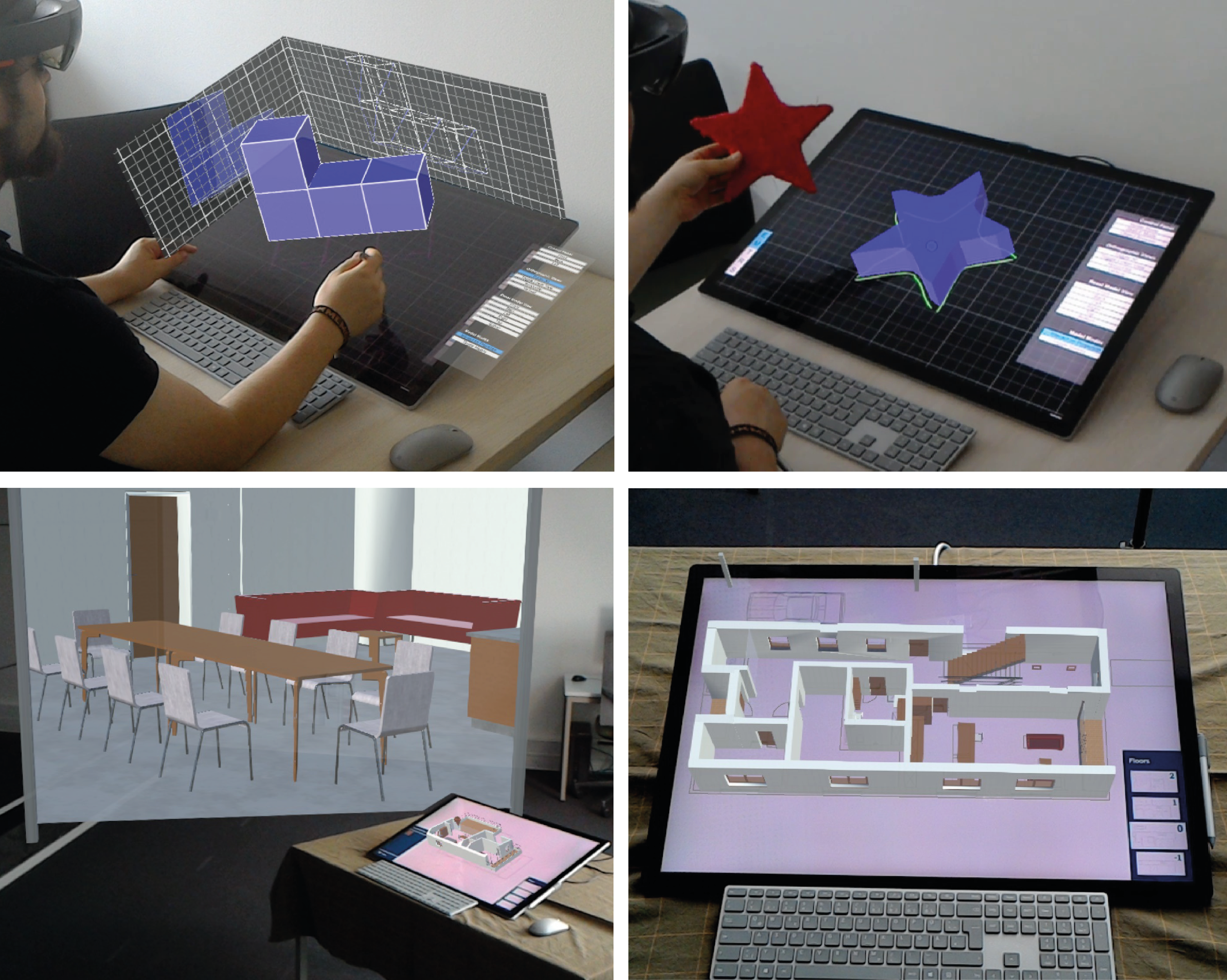

Augmented Displays: Seamlessly Extending Interactive Surfaces with Head-Mounted Augmented Reality

Patrick Reipschläger (Technische Universität Dresden), Severin Engert (Technische Universität Dresden), Raimund Dachselt (Technische Universität Dresden)

Tags: Interactivity/Demonstration

@inproceedings{ReipschlaegerAugmented,

title = {Augmented Displays: Seamlessly Extending Interactive Surfaces with Head-Mounted Augmented Reality},

author = {Patrick Reipschläger (Technische Universität Dresden) and Severin Engert (Technische Universität Dresden) and Raimund Dachselt (Technische Universität Dresden)},

url = {https://www.twitter.com/imldresden, Twitter},

doi = {10.1145/3334480.3383138},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {We present Augmented Displays, a new class of display systems directly combining high-resolution interactive surfaces with head-mounted Augmented Reality. This extends the screen real estate beyond the display and enables placing AR content directly at the display's borders or within the real environment. Furthermore, it enables people to interact with AR objects using natural pen and touch input with high precision on the surface. This combination allows for a variety of interesting applications. To illustrate them, we present two use cases: an immersive 3D modeling tool and an architectural design tool. Our goal is to demonstrate the potential of Augmented Displays as a foundation for future work in the design space of this exciting new class of systems.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

Demo of PolySense: How to Make Electrically Functional Textiles

Paul Strohmeier (Saarland University, SIC), Cedric Honnet (MIT Media Lab), Hannah Perner-Wilson (Kobakant), Marc Teyssier (Télécom Paris), Bruno Fruchard (Saarland University, SIC), Ana C. Baptista (CENIMAT/I3N), Jürgen Steimle (Saarland University, SIC)

Tags: Interactivity/Demonstration

@inproceedings{StrohmeierDemo,

title = {Demo of PolySense: How to Make Electrically Functional Textiles},

author = {Paul Strohmeier (Saarland University, SIC) and Cedric Honnet (MIT Media Lab) and Hannah Perner-Wilson (Kobakant) and Marc Teyssier (Télécom Paris) and Bruno Fruchard (Saarland University, SIC) and Ana C. Baptista (CENIMAT/I3N) and Jürgen Steimle (Saarland University, SIC)},

doi = {10.1145/3334480.33},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {We demonstrate a simple and accessible method for enhancing textiles with custom piezo-resistive properties. Based on in-situ polymerization, our method offers seamless integration at the material level, preserving a textile's haptic and mechanical properties. We demonstrate how to enhance a wide set of fabrics and yarns using only readily available tools. During each demo session, conference attendees may bring textile samples which will be polymerized in a shared batch. Attendees may keep these samples. While the polymerization is happening, attendees can inspect pre-made samples and explore how these might be integrated in functional circuits. Example objects created using polymerization include rapid manufacturing of on-body interfaces, tie-dyed motion-capture clothing, and zippers that act as potentiometers.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

Demonstrating Rapid Iron-On User Interfaces: Hands-on Fabrication of Interactive Textile Prototypes.

Konstantin Klamka (Technische Universität Dresden), Raimund Dachselt (Technische Universität Dresden), Jürgen Steimle (Saarland University)

Tags: Interactivity/Demonstration

@inproceedings{KlamkaDemonstrating,

title = {Demonstrating Rapid Iron-On User Interfaces: Hands-on Fabrication of Interactive Textile Prototypes.},

author = {Konstantin Klamka (Technische Universität Dresden) and Raimund Dachselt (Technische Universität Dresden) and Jürgen Steimle (Saarland University)},

url = {https://youtu.be/FyPcMLBXIm0, Video

https://www.twitter.com/imldresden, Twitter},

doi = {10.1145/3334480.3383139},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {Rapid prototyping of interactive textiles is still challenging, since manual skills, several processing steps, and expert knowledge are involved. We demonstrate Rapid Iron-On User Interfaces, a novel fabrication approach for empowering designers and makers to enhance fabrics with interactive functionalities. It builds on heat-activated adhesive materials consisting of smart textiles and printed electronics, which can be flexibly ironed onto the fabric to create custom interface functionality. To support rapid fabrication in a sketching-like fashion, we developed a handheld dispenser tool for directly applying continuous functional tapes of desired length as well as discrete patches. We demonstrate versatile composition techniques that make it possible to create complex circuits, utilize commodity textile accessories and sketch custom-shaped I/O modules. We further provide a comprehensive library of components for input, output, wiring and computing. Three example applications demonstrate the functionality, versatility and potential of this approach.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

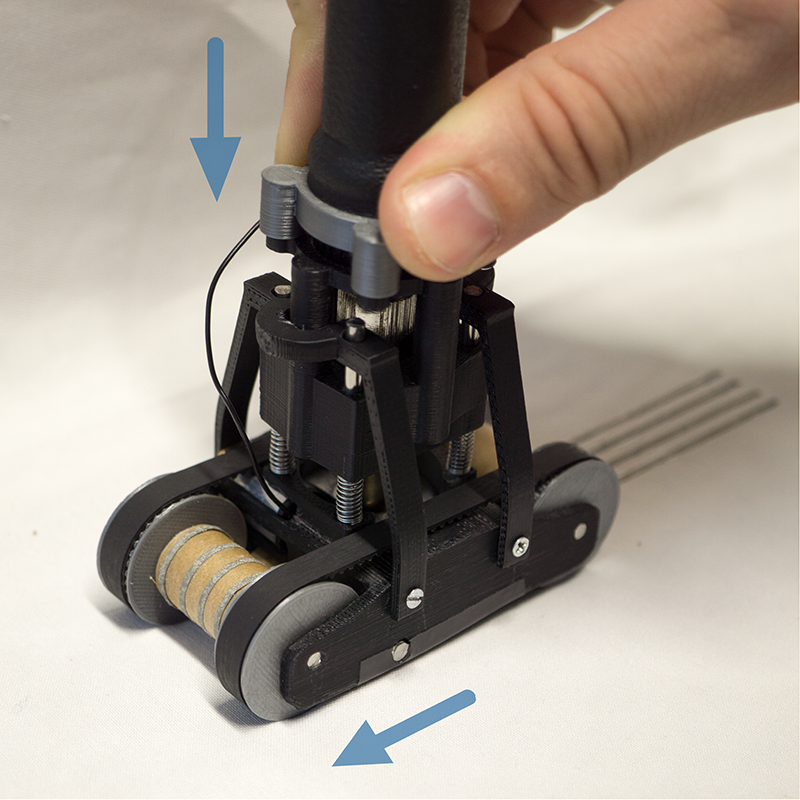

Demonstration of Drag:on – A VR Controller Providing Haptic Feedback Based on Drag and Weight Shift

André Zenner (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus), Donald Degraen (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus), Florian Daiber (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus), Antonio Krüger (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus)

Tags: Interactivity/Demonstration

@inproceedings{ZennerDemonstration,

title = {Demonstration of Drag:on – A VR Controller Providing Haptic Feedback Based on Drag and Weight Shift},

author = {André Zenner (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus) and Donald Degraen (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus) and Florian Daiber (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus) and Antonio Krüger (German Research Center for Artificial Intelligence (DFKI), Saarland Informatics Campus)},

url = {https://youtu.be/kiNHqsaoJxc, Video},

doi = {10.1145/3334480.3383145},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {While standard VR controllers lack means to convey realistic, kinesthetic impressions of size, resistance or inertia, this demonstration presents Drag:on, an ungrounded shape-changing interaction device that provides dynamic passive haptic feedback based on drag, i.e. air resistance, and weight shift. Drag:on leverages the airflow at the controller during interaction. The device adjusts its surface area to change the drag and rotational inertia felt by the user. When rotated or swung, Drag:on conveys an impression of resistance, which we previously used in a VR user study to increase the haptic realism of virtual objects and interactions compared to standard controllers. Drag:on's feedback is suitable for rendering virtual mechanical resistances, virtual gas streams, and virtual objects differing in scale, material and fill state. In our demonstration, participants learn about this novel feedback concept and the implementation of our prototype, and can experience the resistance feedback during a hands-on session.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

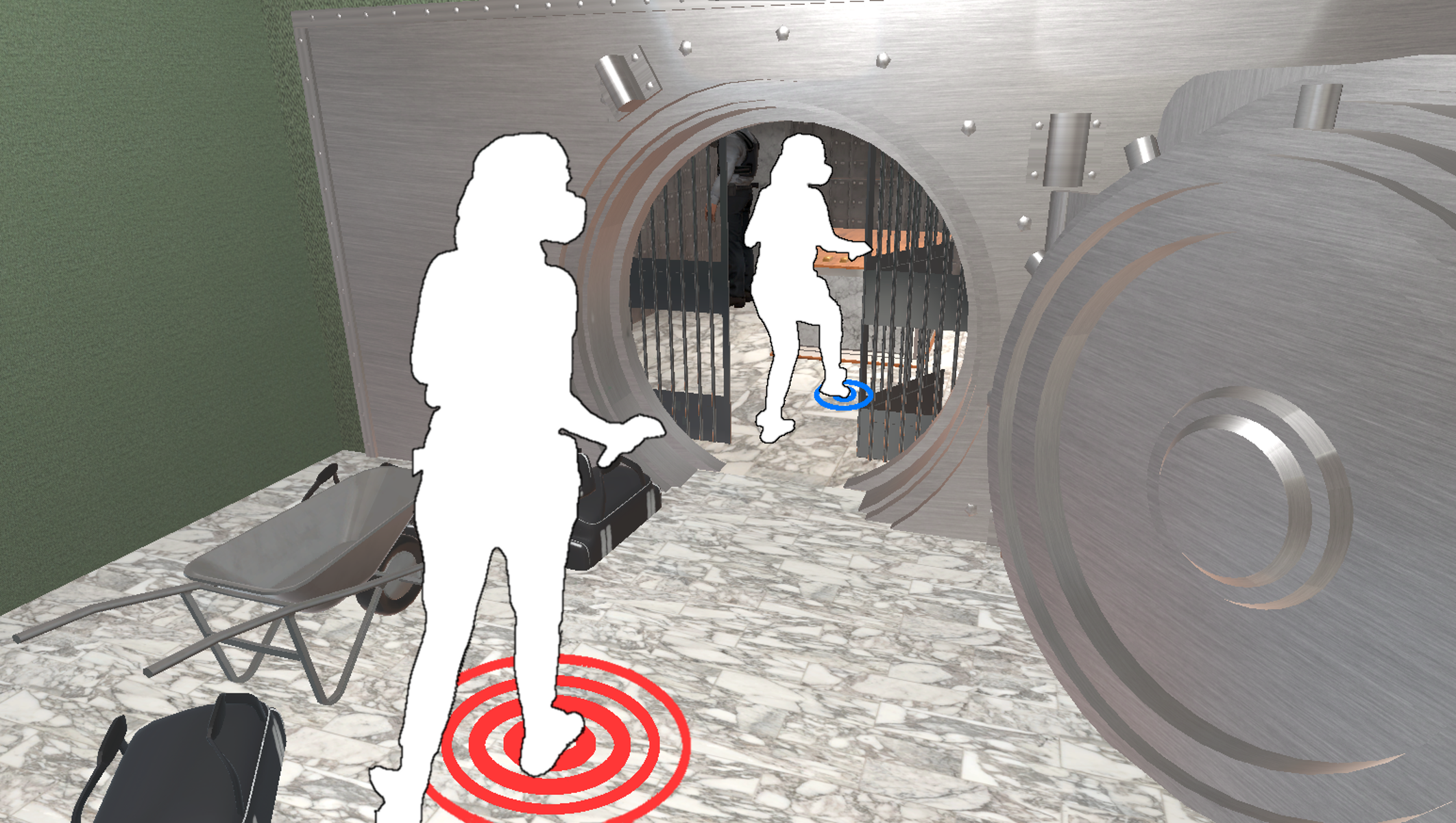

VRsneaky: Stepping into an Audible Virtual World with Gait-Aware Auditory Feedback

Felix Dietz (LMU Munich), Matthias Hoppe (LMU Munich), Jakob Karolus (LMU Munich), Paweł W. Wozniak (Utrecht University), Albrecht Schmidt (LMU Munich), Tonja Machulla (LMU Munich)

Tags: Interactivity/Demonstration

@inproceedings{DietzVR,

title = {VRsneaky: Stepping into an Audible Virtual World with Gait-Aware Auditory Feedback},

author = {Felix Dietz (LMU Munich) and Matthias Hoppe (LMU Munich) and Jakob Karolus (LMU Munich) and Paweł W. Wozniak (Utrecht University) and Albrecht Schmidt (LMU Munich) and Tonja Machulla (LMU Munich)},

url = {https://www.dropbox.com/s/skz61keimjm5hso/VRsneaky.mp4?dl=0, Video

https://www.twitter.com/mimuc, Twitter},

doi = {10.1145/3334480.3383168},

year = {2020},

date = {2020-04-26},

booktitle = {Proceedings of the ACM Conference on Human Factors in Computing Systems. CHI 2020},

publisher = {ACM},

abstract = {New VR experiences allow users to walk extensively in the virtual space. Bigger tracking spaces, treadmills and redirected walking solutions are now available. Yet, certain connections to the user's movement are still not made. Here, we specifically see a shortcoming of representations of locomotion feedback in state-of-the-art VR setups. As shown in our paper VRsneaky, providing synchronized step sounds is important for involving the user further into the experience and virtual world, but is often neglected. VRsneaky detects the user's gait and plays synchronized gait-aware step sounds accordingly by attaching force sensing resistors (FSR) and accelerometers to the user's shoe. In an exciting bank robbery, the user will try to rob the bank behind a guard's back. The tension will increase as the user has to be aware of each step in this atmospheric experience. Each step will remind the user to pay attention to every movement, as each step will be represented using adaptive step sounds resulting in different noise levels.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}