We are happy to present this year’s contributions from German HCI labs to CHI 2021! Feel free to browse our list of publications: there are 53 full papers (including one Best Paper and five Honorable Mentions), 16 LBWs, and many demonstrations, workshops, and other interesting publications. Please send us an email if your paper is missing or if any entries need correcting.

“It's a kind of art!”: Understanding Food Influencers as Influential Content Creators

Philip Weber (Cyber-Physical Systems, University of Siegen), Thomas Ludwig (Cyber-Physical Systems, University of Siegen), Sabrina Brodesser (Cyber-Physical Systems, University of Siegen), Laura Grönewald (Cyber-Physical Systems, University of Siegen)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021WeberFoodInfluencers,

title = {“It's a kind of art!”: Understanding Food Influencers as Influential Content Creators},

author = {Philip Weber (Cyber-Physical Systems, University of Siegen) and Thomas Ludwig (Cyber-Physical Systems, University of Siegen) and Sabrina Brodesser (Cyber-Physical Systems, University of Siegen) and Laura Grönewald (Cyber-Physical Systems, University of Siegen)},

url = {https://cps.wineme.fb5.uni-siegen.de/},

doi = {10.1145/3411764.3445607},

year = {2021},

date = {2021-05-08},

publisher = {ACM},

abstract = {Although the number of influencers is increasing and being an influencer is one of the most frequently mentioned career aspirations of young people, we still know very little about influencers’ motivations and actual practices from the HCI perspective. Driven by the emerging field of Human-Food Interaction and novel phenomena on social media such as Finstas, ASMR, Mukbang and live streaming, we would like to highlight the significance of food influencers as influential content creators and their social media practices. We have conducted a qualitative interview study and analyzed over 1,500 posts of food content creators on Instagram, focusing on practices of content creation, photography, staging, posting, and use of technology. Based on our findings, we have derived a process model that outlines the practices of this rather small, but influential user group. We contribute to the field of HCI by outlining the practices of food influencers as influential content creators within the social media sphere to open up design spaces for interaction researchers and practitioners.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A Critical Assessment of the Use of SSQ as a Measure of General Discomfort in VR Head-Mounted Displays

Teresa Hirzle (Ulm University), Maurice Cordts (Ulm University), Enrico Rukzio (Ulm University), Jan Gugenheimer (Télécom Paris), Andreas Bulling (University of Stuttgart)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021HirzleCritical,

title = {A Critical Assessment of the Use of SSQ as a Measure of General Discomfort in VR Head-Mounted Displays},

author = {Teresa Hirzle (Ulm University) and Maurice Cordts (Ulm University) and Enrico Rukzio (Ulm University) and Jan Gugenheimer (Télécom Paris) and Andreas Bulling (University of Stuttgart)},

url = {https://www.uni-ulm.de/en/in/mi/, Website HCI Ulm University},

doi = {10.1145/3411764.3445361},

year = {2021},

date = {2021-05-01},

abstract = {Based on a systematic literature review of more than 300 papers published over the last 10 years, we provide indicators that the simulator sickness questionnaire (SSQ) is extensively used and widely accepted as a general discomfort measure in virtual reality (VR) research – although it actually only accounts for one category of symptoms. This results in important other categories (digital eye strain (DES) and ergonomics) being largely neglected. To contribute to a more comprehensive picture of discomfort in VR head-mounted displays, we further conducted an online study (N=352) on the severity and relevance of all three symptom categories. Most importantly, our results reveal that symptoms of simulator sickness are significantly less severe and of lower prevalence than those of DES and ergonomics. In light of these findings, we critically discuss the current use of SSQ as the only discomfort measure and propose a more comprehensive factor model that also includes DES and ergonomics.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A Taxonomy of Vulnerable Road Users for HCI Based On A Systematic Literature Review

Kai Holländer (LMU Munich), Mark Colley (Institute of Media Informatics, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University), Andreas Butz (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021HollaenderTaxonomy,

title = {A Taxonomy of Vulnerable Road Users for HCI Based On A Systematic Literature Review},

author = {Kai Holländer (LMU Munich) and Mark Colley (Institute of Media Informatics, Ulm University) and Enrico Rukzio (Institute of Media Informatics, Ulm University) and Andreas Butz (LMU Munich)},

url = {http://www.medien.ifi.lmu.de/, Website - HCI Group LMU Munich

https://www.uni-ulm.de/en/in/mi/hci/, Website - HCI Group Ulm University

https://twitter.com/mimuc, Twitter - HCI Group LMU Munich

https://twitter.com/mi_uulm, Twitter - HCI Group Ulm University

},

doi = {10.1145/3411764.3445480},

year = {2021},

date = {2021-05-01},

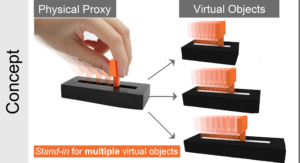

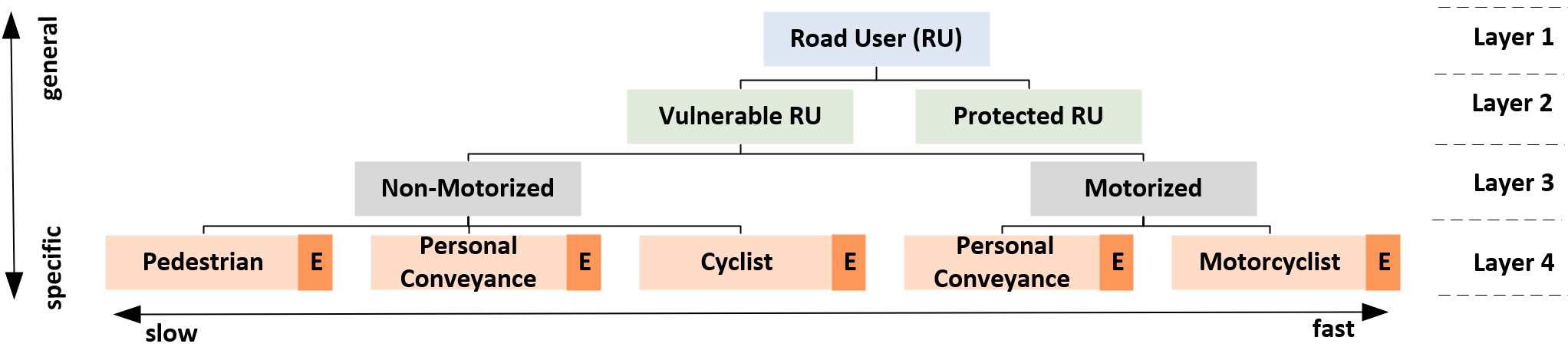

abstract = {Recent automotive research often focuses on automated driving, including the interaction between automated vehicles (AVs) and so-called ``vulnerable road users'' (VRUs). While road safety statistics and traffic psychology at least define VRUs as pedestrians, cyclists, and motorcyclists, many publications on human-vehicle interaction use the term without even defining it. The actual target group remains unclear. Since each group already poses a broad spectrum of research challenges, a one-size-fits-all solution seems unrealistic and inappropriate, and a much clearer differentiation is required. To foster clarity and comprehensibility, we propose a literature-based taxonomy providing a structured separation of (vulnerable) road users, designed to particularly (but not exclusively) support research on the communication between VRUs and AVs. It consists of two conceptual hierarchies and will help practitioners and researchers by providing a uniform and comparable set of terms needed for the design, implementation, and description of HCI applications.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

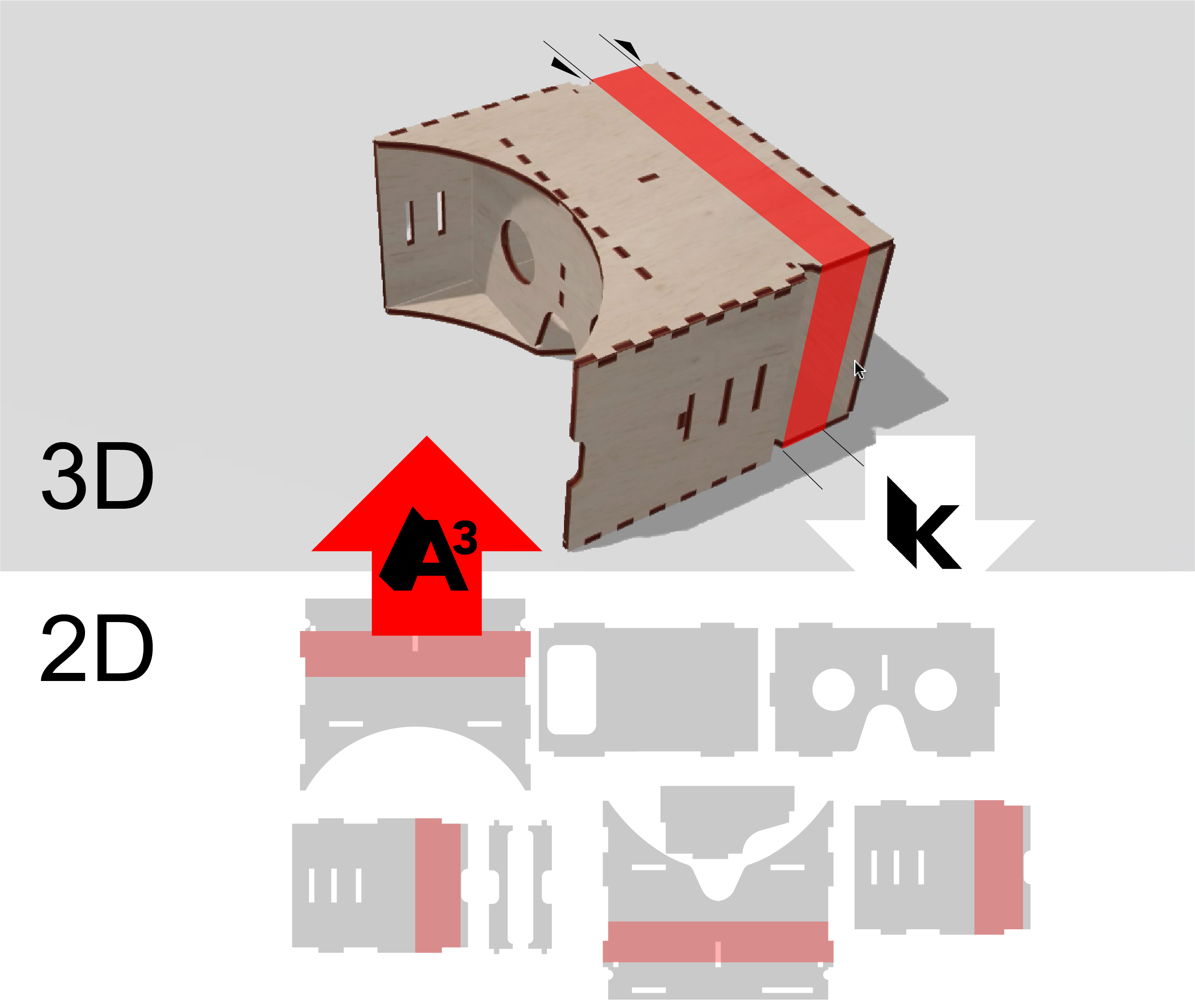

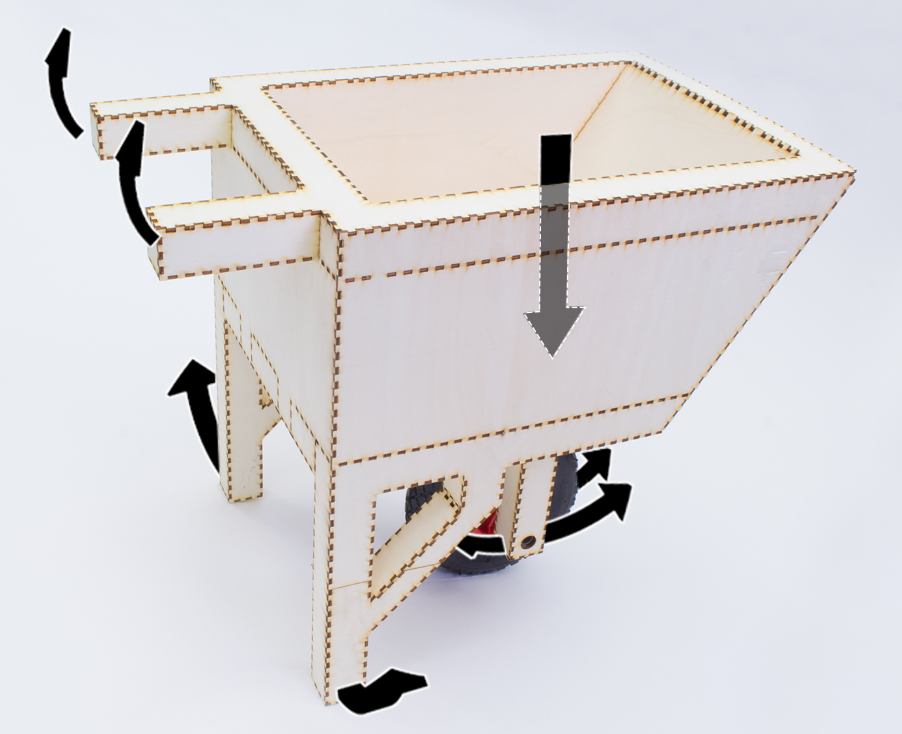

assembler3: 3D Reconstruction of Laser-Cut Models

Thijs Roumen (Hasso Plattner Institute), Yannis Kommana (Hasso Plattner Institute), Ingo Apel (Hasso Plattner Institute), Conrad Lempert (Hasso Plattner Institute), Markus Brand (Hasso Plattner Institute), Erik Brendel (Hasso Plattner Institute), Laurenz Seidel (Hasso Plattner Institute), Lukas Rambold (Hasso Plattner Institute), Carl Goedecken (Hasso Plattner Institute), Pascal Crenzin (Hasso Plattner Institute), Ben Hurdelhey (Hasso Plattner Institute), Muhammad Abdullah (Hasso Plattner Institute), Patrick Baudisch (Hasso Plattner Institute)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021RoumenAssembler,

title = {assembler3: 3D Reconstruction of Laser-Cut Models},

author = {Thijs Roumen (Hasso Plattner Institute) and Yannis Kommana (Hasso Plattner Institute) and Ingo Apel (Hasso Plattner Institute) and Conrad Lempert (Hasso Plattner Institute) and Markus Brand (Hasso Plattner Institute) and Erik Brendel (Hasso Plattner Institute) and Laurenz Seidel (Hasso Plattner Institute) and Lukas Rambold (Hasso Plattner Institute) and Carl Goedecken (Hasso Plattner Institute) and Pascal Crenzin (Hasso Plattner Institute) and Ben Hurdelhey (Hasso Plattner Institute) and Muhammad Abdullah (Hasso Plattner Institute) and Patrick Baudisch (Hasso Plattner Institute)},

url = {https://www.hpi.de/baudisch/, Website - HCI HPI

https://www.youtube.com/channel/UC74ZNPu98FIn8Wn3JNyTIVQ, YouTube - HCI HPI

https://www.youtube.com/watch?v=9NZNZpKxdfE&t=1s, YouTube - Video},

doi = {10.1145/3411764.3445453},

year = {2021},

date = {2021-05-01},

abstract = {We present Assembler3, a software tool that allows users to perform 3D parametric manipulations on 2D laser cutting plans. Assembler3 achieves this by semi-automatically converting 2D laser cutting plans to 3D, where users modify their models using available 3D tools (kyub), before converting them back to 2D. In our user study, this workflow allowed users to modify models 10x faster than the traditional approach of editing 2D cutting plans directly. Assembler3 converts models to 3D in 5 steps: (1) plate detection, (2) joint detection, (3) material thickness detection, (4) joint matching based on hashed joint "signatures", and (5) interactive reconstruction. In our technical evaluation, Assembler3 was able to reconstruct 100 of 105 models. Once models are reconstructed in 3D, we expect users to store and share them in 3D, which can simplify collaboration and thereby empower the laser cutting community to create models of higher complexity.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Assisting Manipulation and Grasping in Robot Teleoperation with Augmented Reality Visual Cues

Stephanie Arevalo Arboleda (Westfälische Hochschule), Franziska Rücker (Westfälische Hochschule), Tim Dierks (Westfälische Hochschule), Jens Gerken (Westfälische Hochschule)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021ArboledaAssisting,

title = {Assisting Manipulation and Grasping in Robot Teleoperation with Augmented Reality Visual Cues},

author = {Stephanie Arevalo Arboleda (Westfälische Hochschule) and Franziska Rücker (Westfälische Hochschule) and Tim Dierks (Westfälische Hochschule) and Jens Gerken (Westfälische Hochschule) },

url = {https://hci.w-hs.de/, Website - HCI Group Westfälische Hochschule

http://twitter.com/doozer_jg, Twitter - Jens Gerken

https://www.youtube.com/watch?v=G_H84yliJsY&t=73s&ab_channel=HCIWestf%C3%A4lischeHochschule, YouTube - Teaser Video},

doi = {10.1145/3411764.3445398},

year = {2021},

date = {2021-05-01},

abstract = {Teleoperating industrial manipulators in co-located spaces can be challenging. Facilitating robot teleoperation by providing additional visual information about the environment and the robot affordances using augmented reality (AR) can improve task performance in manipulation and grasping. In this paper, we present two designs of augmented visual cues that aim to enhance the visual space of the robot operator through hints about the position of the robot gripper in the workspace and in relation to the target. These visual cues aim to improve distance perception and, thus, task performance. We evaluate both designs against a baseline in an experiment where participants teleoperate a robotic arm to perform pick-and-place tasks. Our results show performance improvements at different levels, reflected in objective and subjective measures, with trade-offs in terms of time, accuracy, and participants’ views of teleoperation. These findings show the potential of AR not only in teleoperation, but also in understanding the human-robot workspace.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

BodyStylus: Freehand On-Body Design and Fabrication of Epidermal Interfaces

Narjes Pourjafarian (Saarland Informatics Campus), Marion Koelle (Saarland Informatics Campus), Bruno Fruchard (Saarland Informatics Campus), Sahar Mavali (Saarland Informatics Campus), Konstantin Klamka (Technische Universität Dresden), Daniel Groeger (Saarland Informatics Campus), Paul Strohmeier (Saarland Informatics Campus), Jürgen Steimle (Saarland Informatics Campus)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021PourjafarianBody,

title = {BodyStylus: Freehand On-Body Design and Fabrication of Epidermal Interfaces},

author = {Narjes Pourjafarian (Saarland Informatics Campus) and Marion Koelle (Saarland Informatics Campus) and Bruno Fruchard (Saarland Informatics Campus) and Sahar Mavali (Saarland Informatics Campus) and Konstantin Klamka (Technische Universität Dresden) and Daniel Groeger (Saarland Informatics Campus) and Paul Strohmeier (Saarland Informatics Campus) and Jürgen Steimle (Saarland Informatics Campus)},

url = {https://hci.cs.uni-saarland.de/, Website - HCI Saarland University

https://www.youtube.com/watch?v=Lb7ru6AABDE&t=1s&ab_channel=EmbodiedInteraction, YouTube Video Teaser

https://www.youtube.com/watch?v=Lb7ru6AABDE&t=1s&ab_channel=EmbodiedInteraction, Youtube Video Figure},

doi = {10.1145/3411764.3445475},

year = {2021},

date = {2021-05-01},

abstract = {In traditional body-art, designs are adjusted to the body as they are applied, enabling creative improvisation and exploration. Conventional design and fabrication methods of epidermal interfaces, however, separate these steps. With BodyStylus we present the first computer-assisted approach for on-body design and fabrication of epidermal interfaces. Inspired by traditional techniques, we propose a hand-held tool that augments freehand inking with digital support: projected in-situ guidance assists in creating valid on-body circuits and aesthetic ornaments that align with the human bodyscape, while pro-active switching between inking and non-inking creates error-preventing constraints. We contribute BodyStylus's design rationale and interaction concept along with an interactive prototype that uses self-sintering conductive ink. Results of two focus group explorations showed that guidance was more appreciated by artists, while constraints appeared more useful to engineers, and that working on the body inspired critical reflection on the relationship between bodyscape, interaction, and designs.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

ClothTiles: Fabricating Customized Actuators on Textiles using 3D Printing and Shape-Memory Alloys

Sachith Muthukumarana (University of Auckland), Moritz Messerschmidt (University of Auckland), Denys Matthies (Technical University of Applied Sciences Lübeck), Jürgen Steimle (Saarland University), Philipp Scholl (University of Auckland), Suranga Nanayakkara (University of Auckland)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021MuthukumaranaCloth,

title = {ClothTiles: Fabricating Customized Actuators on Textiles using 3D Printing and Shape-Memory Alloys},

author = {Sachith Muthukumarana (University of Auckland) and Moritz Messerschmidt (University of Auckland) and Denys Matthies (Technical University of Applied Sciences Lübeck) and Jürgen Steimle (Saarland University) and Philipp Scholl (University of Auckland) and Suranga Nanayakkara (University of Auckland)},

url = {https://hci.cs.uni-saarland.de/, Website - HCI Lab Saarland University

https://www.youtube.com/watch?v=ZFUnVONFycI, YouTube - Video Teaser},

doi = {10.1145/3411764.3445613},

year = {2021},

date = {2021-05-07},

abstract = {Emerging research has demonstrated the viability of on-textile actuation mechanisms; however, an easily customizable and versatile on-cloth actuation mechanism has yet to be explored. In this paper, we present ClothTiles along with its rapid fabrication technique that enables actuation of clothes. ClothTiles leverage flexible 3D-printing and Shape-Memory Alloys (SMAs) alongside new parametric actuation designs. We validate the concept of fabric actuation using a base element, and then systematically explore methods of aggregating, scaling, and orienting prospects for extended actuation in garments. A user study demonstrated that our technique enables multiple actuation types applied across a variety of clothes. Users identified both aesthetic and functional applications of ClothTiles. We conclude with a number of insights for the Do-It-Yourself community on how to employ 3D-printing with SMAs to enable actuation on clothes.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Comparison of Different Types of Augmented Reality Visualizations for Instructions

Florian Jasche (University of Siegen), Sven Hoffmann (University of Siegen), Thomas Ludwig (University of Siegen), Volker Wulf (University of Siegen)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021JascheComparison,

title = {Comparison of Different Types of Augmented Reality Visualizations for Instructions},

author = {Florian Jasche (University of Siegen) and Sven Hoffmann (University of Siegen) and Thomas Ludwig (University of Siegen) and Volker Wulf (University of Siegen)},

url = {http://cps-research.de/, Cyber-Physical Systems University of Siegen},

doi = {10.1145/3411764.3445724},

year = {2021},

date = {2021-05-01},

abstract = {Augmented Reality (AR) is increasingly being used for providing guidance and supporting troubleshooting in industrial settings. While the general application of AR has been shown to provide clear benefits regarding physical tasks, it is important to understand how different visualization types influence users’ performance during the execution of the tasks. Previous studies evaluating AR and users’ performance compared different media types or types of AR hardware as opposed to different types of visualization for the same hardware type. This paper provides details of our comparative study in which we identified the influence of visualization types on the performance of complex machine set-up processes. Although our results show clear advantages to using concrete rather than abstract visualizations, we also find that abstract visualizations coupled with videos lead to similar user performance as concrete visualizations.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Creepy Technology: What Is It and How Do You Measure It?

Paweł W. Woźniak (Utrecht University), Jakob Karolus (LMU Munich), Florian Lang (LMU Munich), Caroline Eckerth (LMU Munich), Johannes Schöning (University of Bremen), Yvonne Rogers (University College London, University of Bremen), Jasmin Niess (University of Bremen)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021WozniakCreepy,

title = {Creepy Technology: What Is It and How Do You Measure It?},

author = {Paweł W. Woźniak (Utrecht University) and Jakob Karolus (LMU Munich) and Florian Lang (LMU Munich) and Caroline Eckerth (LMU Munich) and Johannes Schöning (University of Bremen) and Yvonne Rogers (University College London, University of Bremen) and Jasmin Niess (University of Bremen)},

url = {https://hci.uni-bremen.de/, Website HCI Group University of Bremen

https://twitter.com/HCIBremen, Twitter - HCI Group University of Bremen

https://youtu.be/3AQVJ8rUjf0, YouTube - Video},

doi = {10.1145/3411764.3445299},

year = {2021},

date = {2021-05-01},

abstract = {Interactive technologies are getting closer to our bodies and permeate the infrastructure of our homes. While such technologies offer many benefits, they can also cause an initial feeling of unease in users. It is important for Human-Computer Interaction to manage first impressions and avoid designing technologies that appear creepy. To that end, we developed the Perceived Creepiness of Technology Scale (PCTS), which measures how creepy a technology appears to a user in an initial encounter with a new artefact. The scale was developed based on past work on creepiness and a set of ten focus groups conducted with users from diverse backgrounds. We followed a structured process of analytically developing and validating the scale. The PCTS is designed to enable designers and researchers to quickly compare interactive technologies and ensure that they do not design technologies that produce initial feelings of creepiness in users.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Current Practices, Challenges, and Design Implications for Collaborative AR/VR Application Development

Veronika Krauß (Fraunhofer FIT, Universität Siegen), Alexander Boden (Fraunhofer FIT, Hochschule Bonn-Rhein-Sieg), Leif Oppermann (Fraunhofer FIT), René Reiners (Fraunhofer FIT)

Abstract | Tags: Full Paper | Links:

@inproceedings{FIT2021,

title = {Current Practices, Challenges, and Design Implications for Collaborative AR/VR Application Development},

author = {Veronika Krauß (Fraunhofer FIT, Universität Siegen) and Alexander Boden (Fraunhofer FIT, Hochschule Bonn-Rhein-Sieg) and Leif Oppermann (Fraunhofer FIT) and René Reiners (Fraunhofer FIT)},

doi = {10.1145/3411764.3445335},

year = {2021},

date = {2021-05-01},

abstract = {Augmented/Virtual Reality (AR/VR) is still a fragmented space to design for due to the rapidly evolving hardware, the interdisciplinarity of teams, and a lack of standards and best practices. We interviewed 26 professional AR/VR designers and developers to shed light on their tasks, approaches, tools, and challenges. Based on their work and the artifacts they generated, we found that AR/VR application creators fulfill four roles: concept developers, interaction designers, content authors, and technical developers. One person often incorporates multiple roles and faces a variety of challenges during the design process, from the initial contextual analysis to the deployment. From an analysis of their tool sets, methods, and artifacts, we describe key challenges. Finally, we discuss the importance of prototyping for communication in AR/VR development teams and highlight design implications for future tools to create a more usable AR/VR tool chain.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

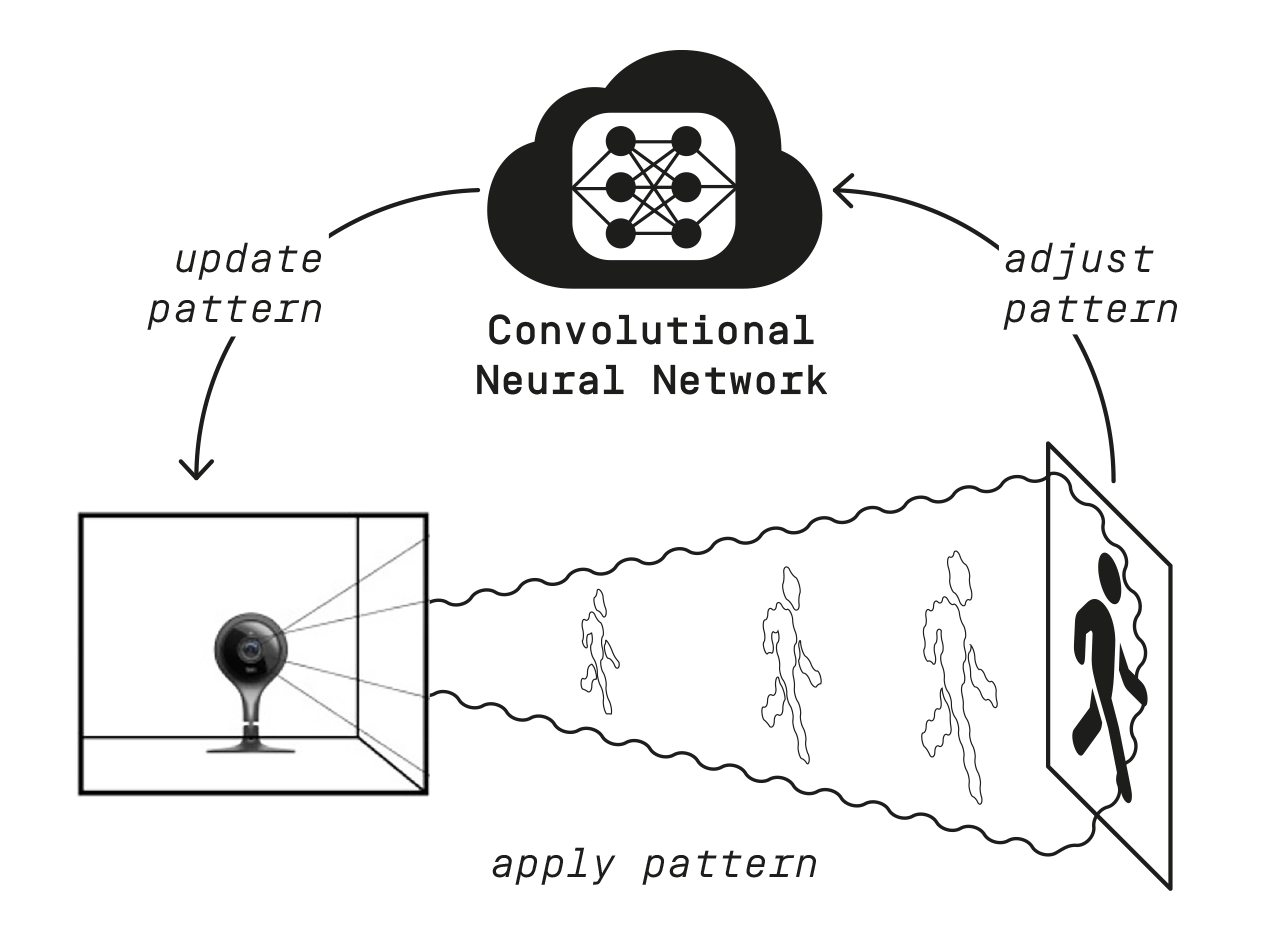

Effects of Semantic Segmentation Visualization on Trust, Situation Awareness, and Cognitive Load in Highly Automated Vehicles

Mark Colley (Institute of Media Informatics, Ulm University), Benjamin Eder (Institute of Media Informatics, Ulm University), Jan Ole Rixen (Institute of Media Informatics, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021ColleyEffects,

title = {Effects of Semantic Segmentation Visualization on Trust, Situation Awareness, and Cognitive Load in Highly Automated Vehicles},

author = {Mark Colley (Institute of Media Informatics, Ulm University) and Benjamin Eder (Institute of Media Informatics, Ulm University) and Jan Ole Rixen (Institute of Media Informatics, Ulm University) and Enrico Rukzio (Institute of Media Informatics, Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, Website - HCI Group Ulm University

https://twitter.com/mi_uulm, Twitter - HCI Group Ulm University},

doi = {10.1145/3411764.3445351},

year = {2021},

date = {2021-05-01},

abstract = {Autonomous vehicles could improve mobility, safety, and inclusion in traffic. While this technology seems within reach, its successful introduction depends on acceptance by its intended users. A substantial factor for this acceptance is trust in the autonomous vehicle's capabilities. Visualizing internal information processed by an autonomous vehicle could calibrate this trust by enabling the perception of the vehicle's detection capabilities (and its failures) while only inducing a low cognitive load. Additionally, the simultaneously raised situation awareness could benefit potential take-overs. We report the results of two comparative online studies on visualizing semantic segmentation information for the human user of autonomous vehicles. Effects on trust, cognitive load, and situation awareness were measured using a simulation (N=32) and state-of-the-art panoptic segmentation on a pre-recorded real-world video (N=41). Results show that the visualization using Augmented Reality increases situation awareness while keeping cognitive load low.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Eliciting and Analysing Users’ Envisioned Dialogues with Perfect Voice Assistants

Sarah Theres Völkel (LMU Munich), Daniel Buschek (University of Bayreuth), Malin Eiband (LMU Munich), Benjamin R. Cowan (University College Dublin), Heinrich Hussmann (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021VoelkelEliciting,

title = {Eliciting and Analysing Users’ Envisioned Dialogues with Perfect Voice Assistants},

author = {Sarah Theres Völkel (LMU Munich) and Daniel Buschek (University of Bayreuth) and Malin Eiband (LMU Munich) and Benjamin R. Cowan (University College Dublin) and Heinrich Hussmann (LMU Munich)},

url = {https://www.medien.ifi.lmu.de, Media Informatics Group LMU Munich

https://youtu.be/zRRnArDdS3k, Youtube Video},

doi = {10.1145/3411764.3445536},

year = {2021},

date = {2021-05-01},

abstract = {We present a dialogue elicitation study to assess how users envision conversations with a perfect voice assistant (VA). In an online survey, N=205 participants were prompted with everyday scenarios and wrote the lines of both user and VA in dialogues that they imagined as perfect. We analysed the dialogues with text analytics and qualitative analysis, including number of words and turns, social aspects of conversation, implied VA capabilities, and the influence of user personality. The majority envisioned dialogues with a VA that is interactive and not purely functional; it is smart, proactive, and has knowledge about the user. Attitudes diverged regarding the assistant's role as well as it expressing humour and opinions. An exploratory analysis suggested a relationship with personality for these aspects, but correlations were low overall. We discuss implications for research and design of future VAs, underlining the vision of enabling conversational UIs, rather than single-command "Q&As".},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring Augmented Visual Alterations in Interpersonal Communication

Jan Ole Rixen (Ulm University), Mark Colley (Ulm University), Yannick Etzel (Ulm University), Enrico Rukzio (Ulm University), Jan Gugenheimer (Télécom Paris)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021RixenExploring,

title = {Exploring Augmented Visual Alterations in Interpersonal Communication},

author = {Jan Ole Rixen (Ulm University) and Mark Colley (Ulm University) and Yannick Etzel (Ulm University) and Enrico Rukzio (Ulm University) and Jan Gugenheimer (Télécom Paris)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, Website - HCI Ulm University

https://twitter.com/mi_uulm, Twitter - HCI Ulm University},

doi = {10.1145/3411764.3445597},

year = {2021},

date = {2021-05-01},

abstract = {Augmented Reality (AR) glasses equip users with the tools to modify the visual appearance of their surrounding environment. This might severely impact interpersonal communication, as conversational partners will no longer share the same visual perception of reality. Grounded in color-in-context theory, we present a potential AR application scenario in which users can modify the color of the environment to achieve subconscious benefits. In a subsequent online survey (N=64), we measured users’ comfort, their acceptance of altering and being altered, and how this is impacted by being able to perceive or not perceive the alteration. We identified significant differences depending on (1) who or what is the target of the alteration, (2) which body part is altered, and (3) which relationship the conversational partners share. In light of our quantitative and qualitative findings, we discuss ethical and practical implications for future devices and applications that employ visual alterations.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Eyecam: Revealing Relations Between Humans and Sensing Devices Through an Anthropomorphic Webcam

Marc Teyssier (Saarland University), Marion Koelle (Saarland University), Paul Strohmeier (Saarland University), Bruno Fruchard (Saarland University), Jürgen Steimle (Saarland University)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021TeyssierEyecam,

title = {Eyecam: Revealing Relations Between Humans and Sensing Devices Through an Anthropomorphic Webcam},

author = {Marc Teyssier (Saarland University) and Marion Koelle (Saarland University) and Paul Strohmeier (Saarland University) and Bruno Fruchard (Saarland University) and Jürgen Steimle (Saarland University)},

url = {https://hci.cs.uni-saarland.de/, Website - HCI Lab Saarland University

https://www.youtube.com/watch?v=JMxr8Nq-w_w, YouTube - Video Figure},

doi = {10.1145/1122445.1122456},

year = {2021},

date = {2021-05-07},

abstract = {We routinely surround ourselves with sensing devices. We get accustomed to them, appreciate their benefits, and even create affective bonds with them, while possibly neglecting the implications they have for our daily lives. By presenting EyeCam, an anthropomorphic webcam mimicking a human eye, we challenge conventional relationships with ubiquitous sensing devices and call to re-think how these look and act. Inspired by critical design, EyeCam is an exaggeration of a familiar sensing device which allows for critical reflections on its perceived functionalities and its impact on human-human and human-device relations. We identify 5 different roles EyeCam can take: Mediator, Observer, Mirror, Feature, and Agent. Contributing design fictions and thinking prompts, we allow for articulation on privacy awareness and intrusion, affect in mediated communication, agency and self-perception along with speculation on potential futures. We envision this work to contribute to a bold and responsible design of ubiquitous sensing devices.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Fastforce: Real-Time Reinforcement of Laser-Cut Structures

Muhammad Abdullah (Hasso Plattner Institute), Martin Taraz (Hasso Plattner Institute), Yannis Kommana (Hasso Plattner Institute), Shohei Katakura (Hasso Plattner Institute), Robert Kovacs (Hasso Plattner Institute), Jotaro Shigeyama (Hasso Plattner Institute), Thijs Roumen (Hasso Plattner Institute), Patrick Baudisch (Hasso Plattner Institute)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021AbdullahFast,

title = {Fastforce: Real-Time Reinforcement of Laser-Cut Structures},

author = {Muhammad Abdullah (Hasso Plattner Institute) and Martin Taraz (Hasso Plattner Institute) and Yannis Kommana (Hasso Plattner Institute) and Shohei Katakura (Hasso Plattner Institute) and Robert Kovacs (Hasso Plattner Institute) and Jotaro Shigeyama (Hasso Plattner Institute) and Thijs Roumen (Hasso Plattner Institute) and Patrick Baudisch (Hasso Plattner Institute)},

url = {https://www.hpi.de/baudisch/, Website - HCI HPI

https://www.youtube.com/channel/UC74ZNPu98FIn8Wn3JNyTIVQ, YouTube - HCI HPI

https://www.youtube.com/watch?v=oMBZPJQuwBQ, YouTube - Video},

doi = {10.1145/3411764.3445466},

year = {2021},

date = {2021-05-01},

abstract = {We present fastForce, a software tool that detects structural flaws in laser-cut 3D models and fixes them by introducing additional plates into the model, thereby making models up to 52x stronger. By focusing on a specific type of structural issue, i.e., poorly connected sub-structures in closed box structures, fastForce achieves real-time performance (106x faster than finite element analysis, in the specific case of the wheelbarrow from Figure 1). This allows fastForce to fix structural issues continuously in the background, while users stay focused on editing their models and without ever becoming aware of any structural issues. In our study, six of seven participants inadvertently introduced severe structural flaws into the guitar stands they designed. Similarly, we found 286 of 402 relevant models in the kyub [1] model library to contain such flaws. We integrated fastForce into a 3D editor for laser cutting (kyub) and found that even with high plate counts fastForce achieves real-time performance.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

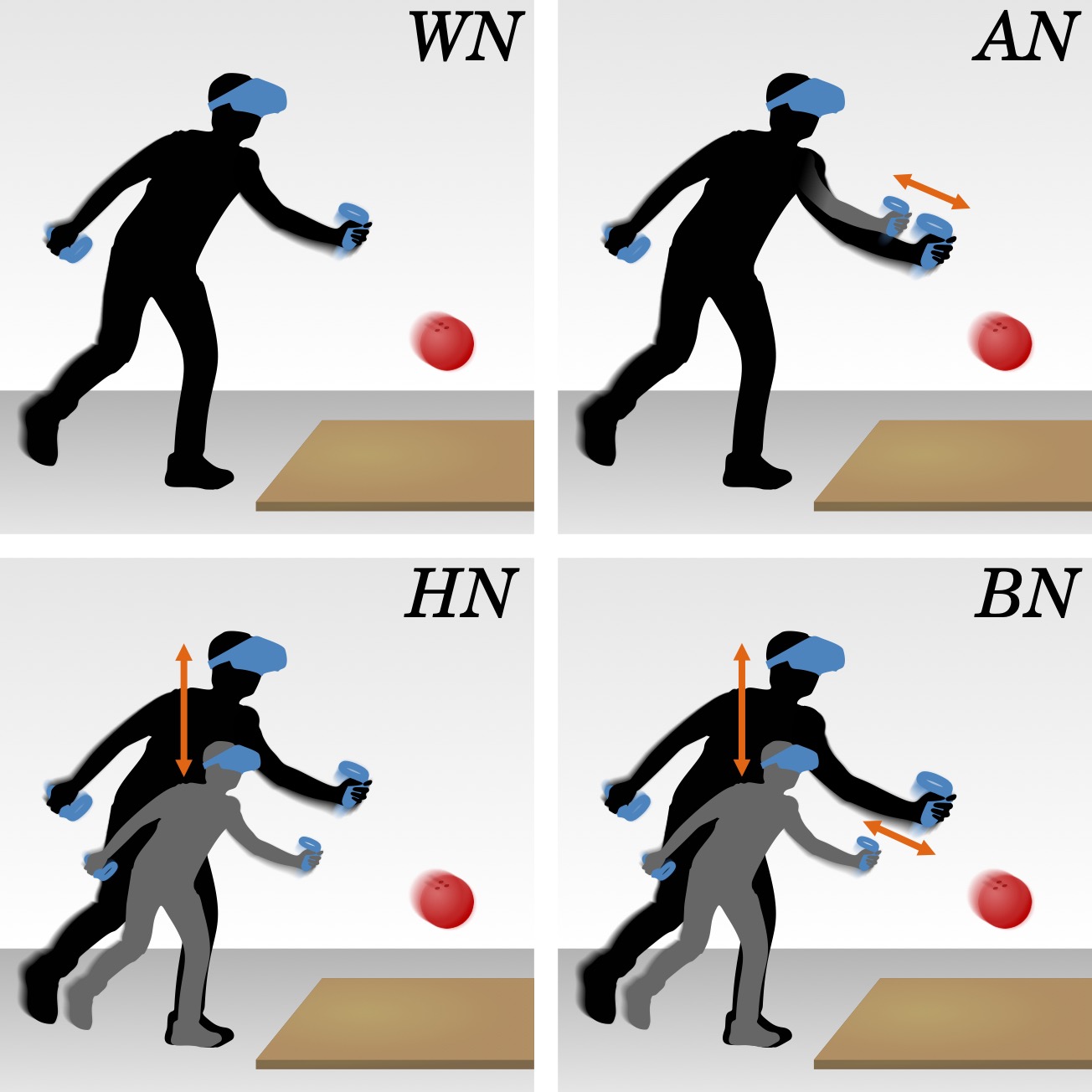

Feels like Team Spirit: Biometric and Strategic Interdependence in Asymmetric Multiplayer VR Games

Sukran Karaosmanoglu (Universität Hamburg), Katja Rogers (University of Waterloo), Dennis Wolf (Ulm University), Enrico Rukzio (Ulm University), Frank Steinicke (Universität Hamburg), Lennart E. Nacke (University of Waterloo)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021KaraosmanogluFeels,

title = {Feels like Team Spirit: Biometric and Strategic Interdependence in Asymmetric Multiplayer VR Games},

author = {Sukran Karaosmanoglu (Universität Hamburg) and Katja Rogers (University of Waterloo) and Dennis Wolf (Ulm University) and Enrico Rukzio (Ulm University) and Frank Steinicke (Universität Hamburg) and Lennart E. Nacke (University of Waterloo)},

url = {https://www.inf.uni-hamburg.de/en/inst/ab/hci.html, Website - HCI Universität Hamburg

https://twitter.com/uhhhci, Twitter - HCI Universität Hamburg},

doi = {10.1145/3411764.3445492},

year = {2021},

date = {2021-05-01},

abstract = {Virtual reality (VR) multiplayer games increasingly use asymmetry (e.g., differences in a person’s capability or the user interface) and resulting interdependence between players to create engagement even when one player has no access to a head-mounted display (HMD). Previous work shows this enhances player experience (PX). Until now, it remains unclear whether and how an asymmetric game design with interdependences creates comparably enjoyable PX for both an HMD and a non-HMD player. In this work, we designed and implemented an asymmetric VR game (different in its user interface) with two types of interdependence: strategic (difference in game information/player capability) and biometric (difference in player’s biometric influence). Our mixed-methods user study (N=30) shows that asymmetries positively impact PX for both player roles, that interdependence strongly affects players’ perception of agency, and that biometric feedback---while subjective---is a valuable game mechanic.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Fighting Fires and Powering Steam Locomotives: Distribution of Control and Its Role in Social Interaction at Tangible Interactive Museum Exhibits

Loraine Clarke, Eva Hornecker, Ian Ruthven

Abstract | Tags: Full Paper | Links:

@inproceedings{Clarke2021Fires,

title = {Fighting Fires and Powering Steam Locomotives: Distribution of Control and Its Role in Social Interaction at Tangible Interactive Museum Exhibits},

author = {Loraine Clarke and Eva Hornecker and Ian Ruthven},

doi = {10.1145/3411764.3445534},

isbn = {9781450380966},

year = {2021},

date = {2021-01-01},

booktitle = {Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {Yokohama, Japan},

series = {CHI '21},

abstract = {We present a video-analysis study of museum visitors’ interactions at two tangible interactive exhibits in a transport museum. Our focus is on groups’ social and shared interactions, in particular how exhibit setup and structure influence collaboration patterns. Behaviors at the exhibits included individuals focusing beyond their personal activity towards companions’ interaction, adults participating via physical interaction, and visitors taking opportunities to interact when companions moved between sections of the exhibit or stepped back from interaction. We demonstrate how exhibits’ physical configuration and interactive control engendered behavioral patterns. Systematic analysis reveals how different configurations (concerning physical-spatial hardware and interactive software) distribute control differently amongst visitors. We present four mechanisms for how control can be distributed at an interactive installation: functional, temporal, physical and indirect verbal. In summary, our work explores how mechanisms that distribute control influence patterns of shared interaction with the exhibits and social interaction between museum visitor companions.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

From Detectables to Inspectables: Understanding Qualitative Analysis of Audiovisual Data

Krishna Subramanian (RWTH Aachen University), Johannes Maas (RWTH Aachen University), Jan Borchers (RWTH Aachen University), Jim Hollan (University of California, San Diego)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{Subramanian2021detectables,

title = {From Detectables to Inspectables: Understanding Qualitative Analysis of Audiovisual Data},

author = {Krishna Subramanian (RWTH Aachen University) and Johannes Maas (RWTH Aachen University) and Jan Borchers (RWTH Aachen University) and Jim Hollan (University of California, San Diego)},

url = {https://hci.rwth-aachen.de (lab page)

https://www.youtube.com/watch?v=YJw2gMJ8cSY (teaser)},

doi = {10.1145/3411764.3445458},

year = {2021},

date = {2021-05-07},

publisher = {ACM},

abstract = {Audiovisual recordings of user studies and interviews provide important data in qualitative HCI research. Even when a textual transcription is available, researchers frequently turn to these recordings due to their rich information content. However, the temporal, unstructured nature of audiovisual recordings makes them less efficient to work with than text. Through interviews and a survey, we explored how HCI researchers work with audiovisual recordings. We investigated researchers' transcription and annotation practice, their overall analysis workflow, and the prevalence of direct analysis of audiovisual recordings. We found that a key task was locating and analyzing inspectables, interesting segments in recordings. Since locating inspectables can be time consuming, participants look for detectables, visual or auditory cues that indicate the presence of an inspectable. Based on our findings, we discuss the potential for automation in locating detectables in qualitative audiovisual analysis.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

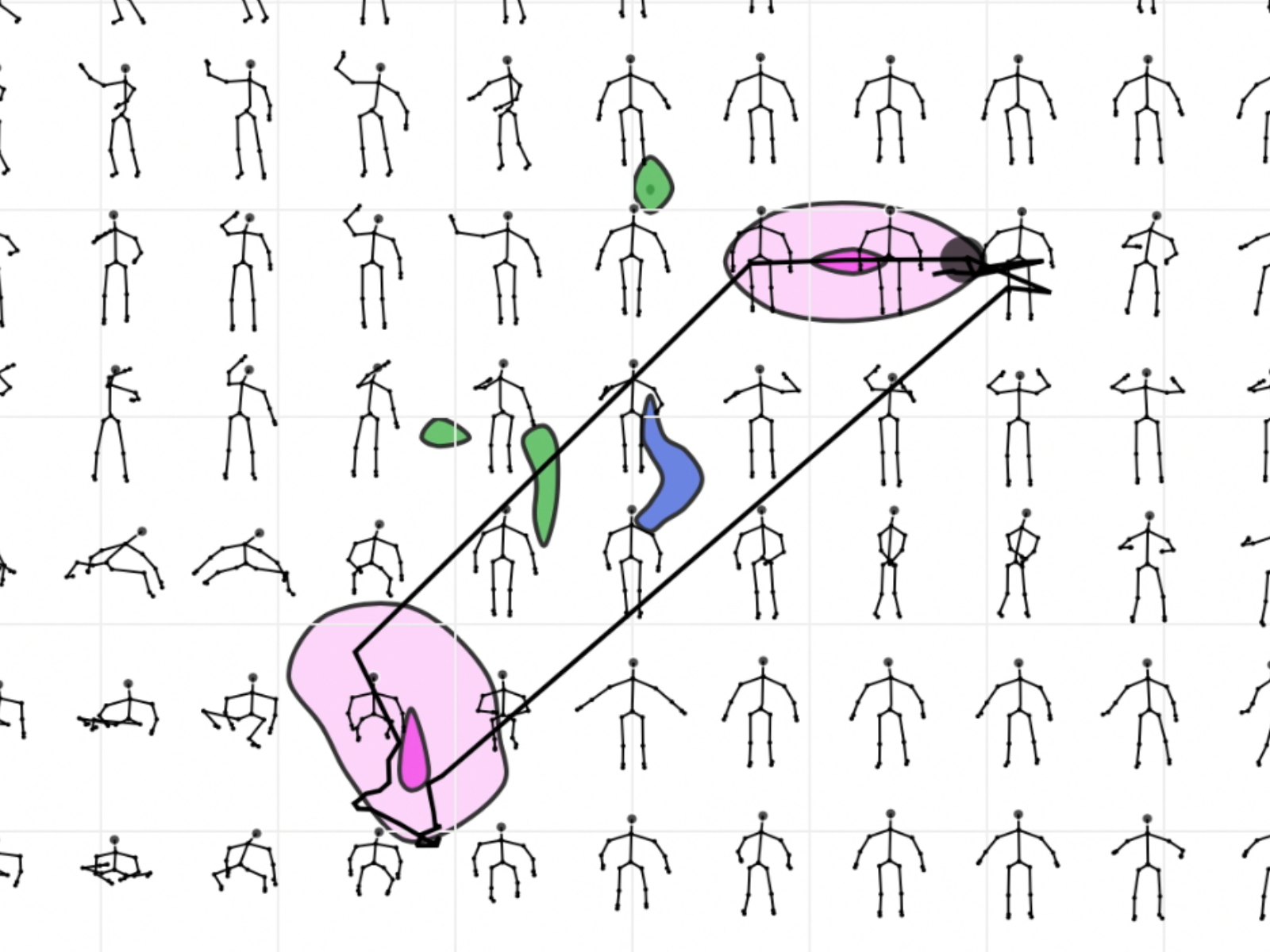

GestureMap: Supporting Visual Analytics and Quantitative Analysis of Motion Elicitation Data by Learning 2D Embeddings

Hai Dang (University of Bayreuth), Daniel Buschek (University of Bayreuth)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021DangGesture,

title = {GestureMap: Supporting Visual Analytics and Quantitative Analysis of Motion Elicitation Data by Learning 2D Embeddings},

author = {Hai Dang (University of Bayreuth) and Daniel Buschek (University of Bayreuth)},

url = {https://www.hciai.uni-bayreuth.de/, Website - HCI+AI University of Bayreuth

https://twitter.com/DBuschek, Twitter - Daniel Buschek

https://www.youtube.com/watch?v=4HYbMak47fs, YouTube - Teaser Video

https://www.youtube.com/watch?v=bh3An6NdQh8, YouTube - Video Figure},

doi = {10.1145/3411764.3445765},

year = {2021},

date = {2021-05-01},

abstract = {This paper presents GestureMap, a visual analytics tool for gesture elicitation which directly visualises the space of gestures. Concretely, a Variational Autoencoder embeds gestures recorded as 3D skeletons on an interactive 2D map. GestureMap further integrates three computational capabilities to connect exploration to quantitative measures: Leveraging DTW Barycenter Averaging (DBA), we compute average gestures to 1) represent gesture groups at a glance; 2) compute a new consensus measure (variance around average gesture); and 3) cluster gestures with k-means. We evaluate GestureMap and its concepts with eight experts and an in-depth analysis of published data. Our findings show how GestureMap facilitates exploring large datasets and helps researchers to gain a visual understanding of elicited gesture spaces. It further opens new directions, such as comparing elicitations across studies. We discuss implications for elicitation studies and research, and opportunities to extend our approach to additional tasks in gesture elicitation.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

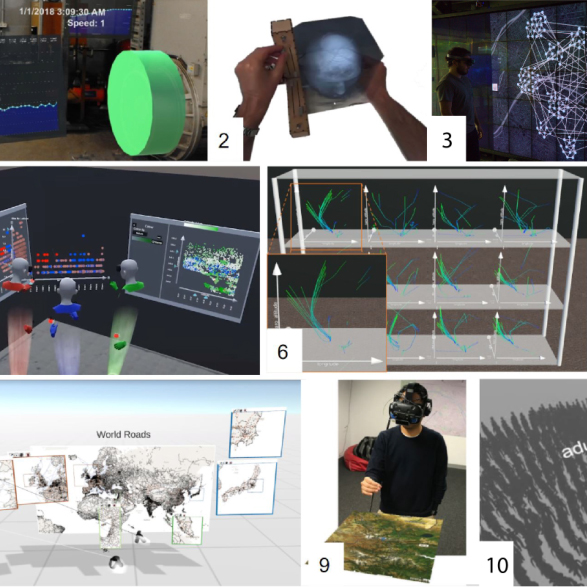

Grand Challenges in Immersive Analytics

Barrett Ens (Monash University), Benjamin Bach (University of Edinburgh), Maxime Cordeil (Monash University), Ulrich Engelke (CSIRO Data61), Marcos Serrano (IRIT - University of Toulouse), Wesley Willett (University of Calgary), Arnaud Prouzeau (Inria + Monash University), Christoph Anthes (HIVE, University of Applied Sciences Upper Austria), Wolfgang Büschel (Technische Universität Dresden), Cody Dunne (Northeastern University Khoury), Tim Dwyer (Monash University), Jens Grubert (Coburg University of Applied Sciences and Arts), Jason H. Haga (Digital Architecture Promotion Center, National Institute of Advanced Industrial Science and Technology), Nurit Kirshenbaum (LAVA, University of Hawaii at Manoa), Dylan Kobayashi (LAVA, University of Hawaii at Manoa), Tica Lin (Harvard University), Monsurat Olaosebikan (Tufts University), Fabian Pointecker (HIVE, University of Applied Sciences Upper Austria), David Saffo (Northeastern University Khoury), Nazmus Saquib (MIT Media Lab), Dieter Schmalstieg (Graz University of Technology), Danielle Albers Szafir (University of Colorado Boulder), Matthew Whitlock (University of Colorado Boulder), Yalong Yang (Harvard University)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021EnsGrand,

title = {Grand Challenges in Immersive Analytics},

author = {Barrett Ens (Monash University) and Benjamin Bach (University of Edinburgh) and Maxime Cordeil (Monash University) and Ulrich Engelke (CSIRO Data61) and Marcos Serrano (IRIT - University of Toulouse) and Wesley Willett (University of Calgary) and Arnaud Prouzeau (Inria + Monash University) and Christoph Anthes (HIVE, University of Applied Sciences Upper Austria) and Wolfgang Büschel (Technische Universität Dresden) and Cody Dunne (Northeastern University Khoury) and Tim Dwyer (Monash University) and Jens Grubert (Coburg University of Applied Sciences and Arts) and Jason H. Haga (Digital Architecture Promotion Center, National Institute of Advanced Industrial Science and Technology) and Nurit Kirshenbaum (LAVA, University of Hawaii at Manoa) and Dylan Kobayashi (LAVA, University of Hawaii at Manoa) and Tica Lin (Harvard University) and Monsurat Olaosebikan (Tufts University) and Fabian Pointecker (HIVE, University of Applied Sciences Upper Austria) and David Saffo (Northeastern University Khoury) and Nazmus Saquib (MIT Media Lab) and Dieter Schmalstieg (Graz University of Technology) and Danielle Albers Szafir (University of Colorado Boulder) and Matthew Whitlock (University of Colorado Boulder) and Yalong Yang (Harvard University)},

doi = {10.1145/3411764.3446866},

year = {2021},

date = {2021-05-01},

abstract = {Immersive Analytics is a quickly evolving field that unites several areas such as visualisation, immersive environments, and human-computer interaction to support human data analysis with emerging technologies. This research has thrived over the past years with multiple workshops, seminars, and a growing body of publications, spanning several conferences. Given the rapid advancement of interaction technologies and novel application domains, this paper aims toward a broader research agenda to enable widespread adoption. We present 17 key research challenges developed over multiple sessions by a diverse group of 24 international experts, initiated from a virtual scientific workshop at ACM CHI 2020. These challenges aim to coordinate future work by providing a systematic roadmap of current directions and impending hurdles to facilitate productive and effective applications for Immersive Analytics.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Hidden Interaction Techniques: Concealed Information Acquisition and Texting on Smartphones and Wearables

Ville Mäkelä (LMU Munich), Johannes Kleine (LMU Munich), Maxine Hood (Wellesley College), Florian Alt (Bundeswehr University Munich), Albrecht Schmidt (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021MakelaHidden,

title = {Hidden Interaction Techniques: Concealed Information Acquisition and Texting on Smartphones and Wearables},

author = {Ville Mäkelä (LMU Munich) and Johannes Kleine (LMU Munich) and Maxine Hood (Wellesley College) and Florian Alt (Bundeswehr University Munich) and Albrecht Schmidt (LMU Munich)},

url = {https://www.en.um.informatik.uni-muenchen.de, Website - Human Centered Ubiquitous Media (HCUM)

https://www.youtube.com/watch?v=hlybyJlJFxI, YouTube Teaser},

doi = {10.1145/3411764.3445504},

year = {2021},

date = {2021-05-01},

abstract = {There are many situations where using personal devices is not socially acceptable, or where nearby people present a privacy risk. For these situations, we explore the concept of hidden interaction techniques through two prototype applications. HiddenHaptics allows users to receive information through vibrotactile cues on a smartphone, and HideWrite allows users to write text messages by drawing on a dimmed smartwatch screen. We conducted three user studies to investigate whether, and how, these techniques can be used without being exposed. Our primary findings are (1) users can effectively hide their interactions while attending to a social situation, (2) users seek to interact when another person is speaking, and they also tend to hide the interaction using their body or furniture, and (3) users can sufficiently focus on the social situation despite their interaction, whereas non-users feel that observing the user hinders their ability to focus on the social activity.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

How WEIRD is CHI?

Sebastian Linxen (University of Basel), Christian Sturm (Hamm-Lippstadt University of Applied Sciences), Florian Brühlmann (University of Basel), Vincent Cassau (Hochschule Hamm-Lippstadt), Klaus Opwis (University of Basel), Katharina Reinecke (University of Washington)

Abstract | Tags: Full Paper | Links:

@inproceedings{Linxen2021Weird,

title = {How WEIRD is CHI?},

author = {Sebastian Linxen (University of Basel) and Christian Sturm (Hamm-Lippstadt University of Applied Sciences) and Florian Brühlmann (University of Basel) and Vincent Cassau (Hochschule Hamm-Lippstadt) and Klaus Opwis (University of Basel) and Katharina Reinecke (University of Washington)},

doi = {10.1145/3411764.3445488},

year = {2021},

date = {2021-05-07},

publisher = {ACM},

abstract = {Computer technology is often designed in technology hubs in Western countries, invariably making it "WEIRD", because it is based on the intuition, knowledge, and values of people who are Western, Educated, Industrialized, Rich, and Democratic. Developing technology that is universally useful and engaging requires knowledge about members of WEIRD and non-WEIRD societies alike. In other words, it requires us, the CHI community, to generate this knowledge by studying representative participant samples. To find out to what extent CHI participant samples are from Western societies, we analyzed papers published in the CHI proceedings between 2016 and 2020. Our findings show that 73% of CHI study findings are based on Western participant samples, representing less than 12% of the world's population. Furthermore, we show that most participant samples at CHI tend to come from industrialized, rich, and democratic countries with generally highly educated populations. Encouragingly, recent years have seen a slight increase in non-Western samples and those that include several countries. We discuss suggestions for further broadening the international representation of CHI participant samples.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Hummer: Text Entry by Gaze and Hum

Ramin Hedeshy (University of Stuttgart), Chandan Kumar (University of Stuttgart), Raphael Menges (University of Koblenz), Steffen Staab (University of Stuttgart, University of Southampton)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021HedeshyHummer,

title = {Hummer: Text Entry by Gaze and Hum},

author = {Ramin Hedeshy (University of Stuttgart) and Chandan Kumar (University of Stuttgart) and Raphael Menges (University of Koblenz) and Steffen Staab (University of Stuttgart and University of Southampton)},

url = {https://www.ipvs.uni-stuttgart.de/departments/ac/, Website - Analytic Computing

https://twitter.com/AnalyticComp, Twitter - Analytic Computing

https://youtu.be/UR2sX7zrXGw, YouTube - Teaser Video},

doi = {10.1145/3411764.3445501},

year = {2021},

date = {2021-05-01},

abstract = {Text entry by gaze is a useful means of hands-free interaction that is applicable in settings where dictation suffers from poor voice recognition or where spoken words and sentences jeopardize privacy or confidentiality. However, text entry by gaze still shows inferior performance and it quickly exhausts its users. We introduce text entry by gaze and hum as a novel hands-free text entry method. We review related literature to converge to word-level text entry by analysis of gaze paths that are temporally constrained by humming. We develop and evaluate two design choices: ``HumHum'' and ``Hummer.'' The first method requires short hums to indicate the start and end of a word. The second method interprets one continuous hum as an indication of the start and end of a word. In an experiment with 12 participants, Hummer achieved a commendable text entry rate of 20.45 words per minute, and outperformed HumHum and the gaze-only method EyeSwipe in both quantitative and qualitative measures.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Investigating the Impact of Real-World Environments on the Perception of 2D Visualizations in Augmented Reality

Marc Satkowski (Technische Universität Dresden), Raimund Dachselt (Technische Universität Dresden)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021SatkowskiInvestigating,

title = {Investigating the Impact of Real-World Environments on the Perception of 2D Visualizations in Augmented Reality},

author = {Marc Satkowski (Technische Universität Dresden) and Raimund Dachselt (Technische Universität Dresden)},

url = {http://www.imld.de, Website - Interactive Media Lab Technische Universität Dresden

https://www.twitter.com/imldresden, Twitter - Interactive Media Lab Technische Universität Dresden

https://youtu.be/W42kTaINqZ4, YouTube - Teaser

https://youtu.be/DNPhOJ6AyRE, YouTube - Video},

doi = {10.1145/3411764.3445330},

year = {2021},

date = {2021-05-01},

abstract = {In this work, we report on two comprehensive user studies investigating the perception of Augmented Reality (AR) visualizations influenced by real-world backgrounds. Since AR is an emerging technology, it is important to also consider productive use cases, which is why we chose an exemplary and challenging industry 4.0 environment. Our basic perceptual research focuses on both the visual complexity of backgrounds as well as the influence of a secondary task. Contrary to our expectations, data from our 34 study participants indicate that the background has far less influence on the perception of AR visualizations than anticipated. Moreover, we observed a mismatch between measured and subjectively reported performance. We discuss the importance of the background and recommendations for visual real-world augmentations. Overall, our results suggest that AR can be used in many visually challenging environments without losing the ability to productively work with the visualizations shown.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

It Takes More Than One Hand to Clap: On the Role of ‘Care’ in Maintaining Design Results

Max Krüger (Universität Siegen), Anne Weibert (Universität Siegen), Débora de Castro Leal (Universität Siegen), Dave Randall (Universität Siegen), Volker Wulf (Universität Siegen)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{Krueger2021CareRole,

title = {It Takes More Than One Hand to Clap: On the Role of ‘Care’ in Maintaining Design Results},

author = {Max Krüger (Universität Siegen) and Anne Weibert (Universität Siegen) and Débora de Castro Leal (Universität Siegen) and Dave Randall (Universität Siegen) and Volker Wulf (Universität Siegen)},

url = {https://www.wineme.uni-siegen.de/ (lab page)},

doi = {10.1145/3411764.3445389},

year = {2021},

date = {2021-05-07},

abstract = {Within Participatory- and Co-Design projects, the sustainability and maintenance of the co-designed artefacts is a crucial yet largely unresolved issue. In this paper, we look back on four years of work on co-designing tools that assist refugees and migrants in their efforts to settle in Germany, during the last of which the project has been independently maintained by our community collaborators. We reflect on the role of pre-existing care practices amongst our community collaborators, and a continued openness throughout the project, that allowed a complex constellation of actors to be involved in its ongoing maintenance and our own, often mundane activities which have contributed to the sustainability of the results. Situating our account within an HCI for Social Justice agenda, we thereby contribute to an ongoing discussion about the sustainability of such activities.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Itsy-Bits: Fabrication and Recognition of 3D-Printed Tangibles with Small Footprints on Capacitive Touchscreens

Martin Schmitz (Technical University of Darmstadt), Florian Müller (Technical University of Darmstadt), Max Mühlhäuser (Technical University of Darmstadt), Jan Riemann (Technical University of Darmstadt), Huy Viet Le (University of Stuttgart)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{2021SchmitzItsy,

title = {Itsy-Bits: Fabrication and Recognition of 3D-Printed Tangibles with Small Footprints on Capacitive Touchscreens},

author = {Martin Schmitz (Technical University of Darmstadt) and Florian Müller (Technical University of Darmstadt) and Max Mühlhäuser (Technical University of Darmstadt) and Jan Riemann (Technical University of Darmstadt) and Huy Viet Le (University of Stuttgart)},

url = {https://www.teamdarmstadt.de/, Website - Team Darmstadt

https://www.facebook.com/teamdarmstadt/, Facebook - Team Darmstadt

https://youtu.be/55vHxnOKl6k, YouTube - Teaser},

doi = {10.1145/3411764.3445502},

year = {2021},

date = {2021-05-01},

abstract = {Tangibles on capacitive touchscreens are a promising approach to overcome the limited expressiveness of touch input. While research has suggested many approaches to detect tangibles, the corresponding tangibles are either costly or have a considerable minimal size. This makes them bulky and unattractive for many applications. At the same time, they obscure valuable display space for interaction. To address these shortcomings, we contribute Itsy-Bits: a fabrication pipeline for 3D printing and recognition of tangibles on capacitive touchscreens with a footprint as small as a fingertip. Each Itsy-Bit consists of an enclosing 3D object and a unique conductive 2D shape on its bottom. Using only raw data of commodity capacitive touchscreens, Itsy-Bits reliably identifies and locates a variety of shapes in different sizes and estimates their orientation. Through example applications and a technical evaluation, we demonstrate the feasibility and applicability of Itsy-Bits for tangibles with small footprints.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

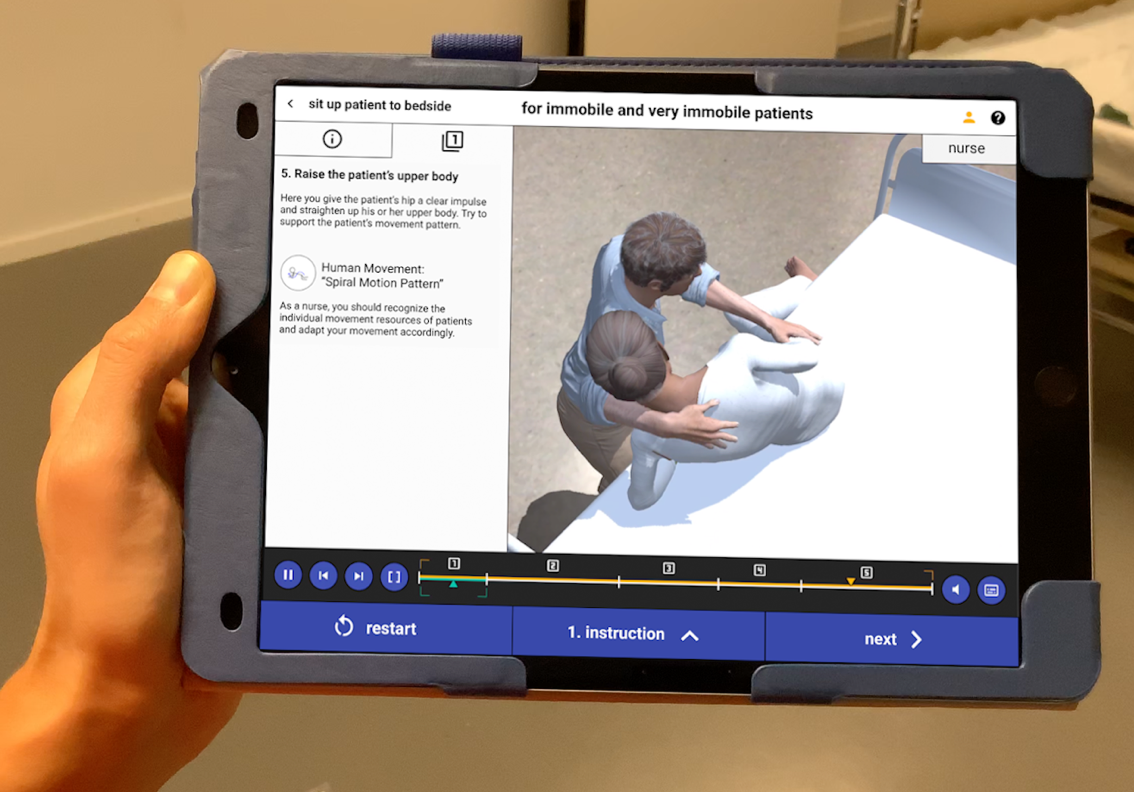

KiTT - The Kinaesthetics Transfer Teacher: Design and Evaluation of a Tablet-based System to Promote the Learning of Ergonomic Patient Transfers

Maximilian Dürr (University of Konstanz), Marcel Borowski (Aarhus University), Carla Gröschel (University of Konstanz), Ulrike Pfeil (University of Konstanz), Jens Müller (University of Konstanz), Harald Reiterer (University of Konstanz)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021DuerrKitt,

title = {KiTT - The Kinaesthetics Transfer Teacher: Design and Evaluation of a Tablet-based System to Promote the Learning of Ergonomic Patient Transfers},

author = {Maximilian Dürr (University of Konstanz) and Marcel Borowski (Aarhus University) and Carla Gröschel (University of Konstanz) and Ulrike Pfeil (University of Konstanz) and Jens Müller (University of Konstanz) and Harald Reiterer (University of Konstanz)},

url = {https://hci.uni-konstanz.de/en/, Website - HCI Group Konstanz

https://twitter.com/HCIGroupKN, Twitter - @HCIGroupKN

https://youtu.be/sIfW0Q3HGZI, YouTube - Teaser

https://www.youtube.com/watch?v=BtwAbytRrJQ, YouTube - Video},

doi = {10.1145/3411764.3445496},

year = {2021},

date = {2021-05-01},

abstract = {Nurses frequently transfer patients as part of their daily work. However, manual patient transfers pose a major risk to nurses’ health. Although the Kinaesthetics care conception can help address this issue, existing support for learning the concept is limited. We present KiTT, a tablet-based system, to promote the learning of ergonomic patient transfers based on the Kinaesthetics care conception. KiTT supports two nurses in training Kinaesthetics-based patient transfers together. The nurses are guided through three phases: (i) interactive instructions, (ii) training of transfer conduct, and (iii) feedback and reflection. We evaluated KiTT with 26 nursing-care students in a nursing-care school. Our results indicate that KiTT provides good subjective support for the learning of Kinaesthetics. Our results also suggest that KiTT can promote the ergonomically correct conduct of patient transfers while providing a good user experience adequate to the nursing-school context, and reveal how KiTT can extend existing practices.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

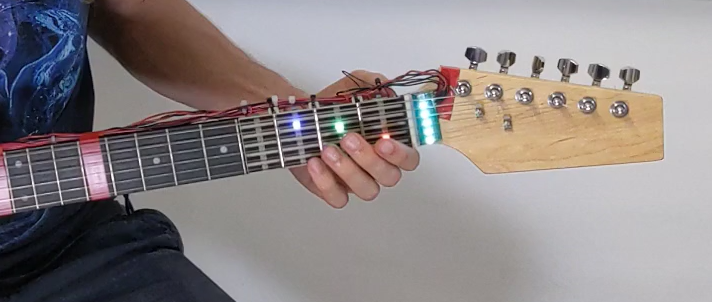

Let’s Frets! Assisting Guitar Students during Practice via Capacitive Sensing

Karola Marky (Technical University of Darmstadt), Andreas Weiß (Musical School Schallkultur), Andrii Matviienko (Technical University of Darmstadt), Florian Brandherm (Technical University of Darmstadt), Sebastian Wolf (Technical University of Darmstadt), Martin Schmitz (Technical University of Darmstadt), Florian Krell (Technical University of Darmstadt), Florian Müller (Technical University of Darmstadt), Max Mühlhäuser (Technical University of Darmstadt), Thomas Kosch (Technical University of Darmstadt)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021MarkyFretsPaper,

title = {Let’s Frets! Assisting Guitar Students during Practice via Capacitive Sensing},

author = {Karola Marky (Technical University of Darmstadt) and Andreas Weiß (Musical School Schallkultur) and Andrii Matviienko (Technical University of Darmstadt) and Florian Brandherm (Technical University of Darmstadt) and Sebastian Wolf (Technical University of Darmstadt) and Martin Schmitz (Technical University of Darmstadt) and Florian Krell (Technical University of Darmstadt) and Florian Müller (Technical University of Darmstadt) and Max Mühlhäuser (Technical University of Darmstadt) and Thomas Kosch (Technical University of Darmstadt)},

doi = {10.1145/3411764.3445595},

year = {2021},

date = {2021-05-01},

abstract = {Learning a musical instrument requires regular exercise. However, students are often on their own during their practice sessions due to the limited time with their teachers, which increases the likelihood of mislearning playing techniques. To address this issue, we present Let's Frets - a modular guitar learning system that provides visual indicators and captures finger positions on a 3D-printed capacitive guitar fretboard. We based the design of Let's Frets on requirements collected through in-depth interviews with professional guitarists and teachers. In a user study (N=24), we evaluated the feedback modules of Let's Frets against fretboard charts. Our results show that visual indicators require the least time to realize new finger positions, while a combination of visual indicators and position capturing yielded the highest playing accuracy. We conclude by discussing how Let's Frets enables independent practice sessions and how it can be transferred to other musical instruments.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Let’s Share a Ride into the Future: A Qualitative Study Comparing Hypothetical Implementation Scenarios of Automated Vehicles

Martina Schuß (Technische Hochschule Ingolstadt), Philipp Wintersberger (Technische Hochschule Ingolstadt), Andreas Riener (Technische Hochschule Ingolstadt)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021SchussShare,

title = {Let’s Share a Ride into the Future: A Qualitative Study Comparing Hypothetical Implementation Scenarios of Automated Vehicles},

author = {Martina Schuß (Technische Hochschule Ingolstadt) and Philipp Wintersberger (Technische Hochschule Ingolstadt) and Andreas Riener (Technische Hochschule Ingolstadt)},

url = {https://hcig.thi.de/, HCI Group Technische Hochschule Ingolstadt},

doi = {10.1145/3411764.3445609},

year = {2021},

date = {2021-05-01},

abstract = {Automated Vehicles (AVs) are expected to radically disrupt our mobility. Whereas much is speculated about how AVs will actually be implemented in the future, we argue that their advent should be taken as an opportunity to enhance all people’s mobility and improve their lives. Thus, it is important to focus on both the environment and the needs of target groups that have not been sufficiently considered in the past. In this paper, we present the findings from a qualitative study (N=11) of public attitudes toward hypothetical implementation scenarios for AVs. Our results indicate that people are aware of the benefits of shared mobility for the environment and society, and are generally open to using it. However, 1) emotional factors mitigate this openness and 2) security concerns were expressed by female participants. We recommend that the identified concerns be addressed to allow AVs to fully exploit their benefits for society and the environment.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Machine Learning Uncertainty as a Design Material: A Post-Phenomenological Inquiry into Pragmatic Concepts for Design Research

Jesse Josua Benjamin (UTwente), Arne Berger (HS Anhalt), Nick Merrill (UC Berkeley), James Pierce (University of Washington)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021BenjaminMachine,

title = {Machine Learning Uncertainty as a Design Material: A Post-Phenomenological Inquiry into Pragmatic Concepts for Design Research},

author = {Jesse Josua Benjamin (UTwente) and Arne Berger (HS Anhalt) and Nick Merrill (UC Berkeley) and James Pierce (University of Washington)},

url = {https://www.hs-anhalt.de/hochschule-anhalt/fachbereich-5/personen-kontakt/professorinnen-und-professoren.html#modal4780, Website - Arne Berger

https://twitter.com/arneberger, Twitter - Arne Berger},

doi = {10.1145/3411764.3445481},

year = {2021},

date = {2021-05-01},

abstract = {Design research is important for understanding and interrogating how emerging technologies shape human experience. However, design research with Machine Learning (ML) is relatively underdeveloped. Crucially, designers have not yet grasped ML uncertainty as a design opportunity rather than an obstacle. The technical literature points to data and model uncertainties as two main properties of ML. Through post-phenomenology, we position uncertainty as one defining material attribute of ML processes which mediate human experience. To understand ML uncertainty as a design material, we investigate four design research case studies involving ML. We derive three provocative concepts: thingly uncertainty: ML-driven artefacts have uncertain, variable relations to their environments; pattern leakage: ML uncertainty can lead to patterns shaping the world they are meant to represent; and futures creep: ML technologies texture human relations to time with uncertainty. Finally, we outline design research trajectories and sketch a post-phenomenological approach to human-ML relations.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Making Sense of Complex Running Metrics Using a Modified Running Shoe

Paweł W. Woźniak (Utrecht University), Monika Zbytniewska (ETH Zurich), Francisco Kiss (BETESO - Bürger Electronic GmbH), Jasmin Niess (University of Bremen)

Abstract | Tags: Full Paper | Links:

@inproceedings{Wozniak2021Running,

title = {Making Sense of Complex Running Metrics Using a Modified Running Shoe},

author = {Paweł W. Woźniak (Utrecht University) and Monika Zbytniewska (ETH Zurich) and Francisco Kiss (BETESO - Bürger Electronic GmbH) and Jasmin Niess (University of Bremen)},

url = {https://hci.uni-bremen.de/, Website - Lab Page

https://twitter.com/HCIBremen, Twitter - HCIBremen

https://www.youtube.com/watch?v=E5Y6wGDmbls, YouTube - Teaser},

doi = {10.1145/3411764.3445506},

year = {2021},

date = {2021-05-07},

publisher = {ACM},

abstract = {Running is a widely popular physical activity that offers many health benefits. As runners progress with their training, understanding one's own body becomes a key concern in achieving wellbeing through running. While extensive bodily sensing opportunities exist for runners, understanding complex sensor data is a challenge. In this paper, we investigate how data from shoe-worn sensors can be visualised to empower runners to improve their technique. We designed GraFeet, an augmented running shoe that visualises kinesiological data about the runner's feet and gait. We compared our prototype with a standard sensor dashboard in a user study where users ran with the sensor and analysed the generated data after the run. GraFeet was perceived as more usable, producing more insights and less confusion in the users. Based on our inquiry, we contribute findings about using data from body-worn sensors to support physically active individuals.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

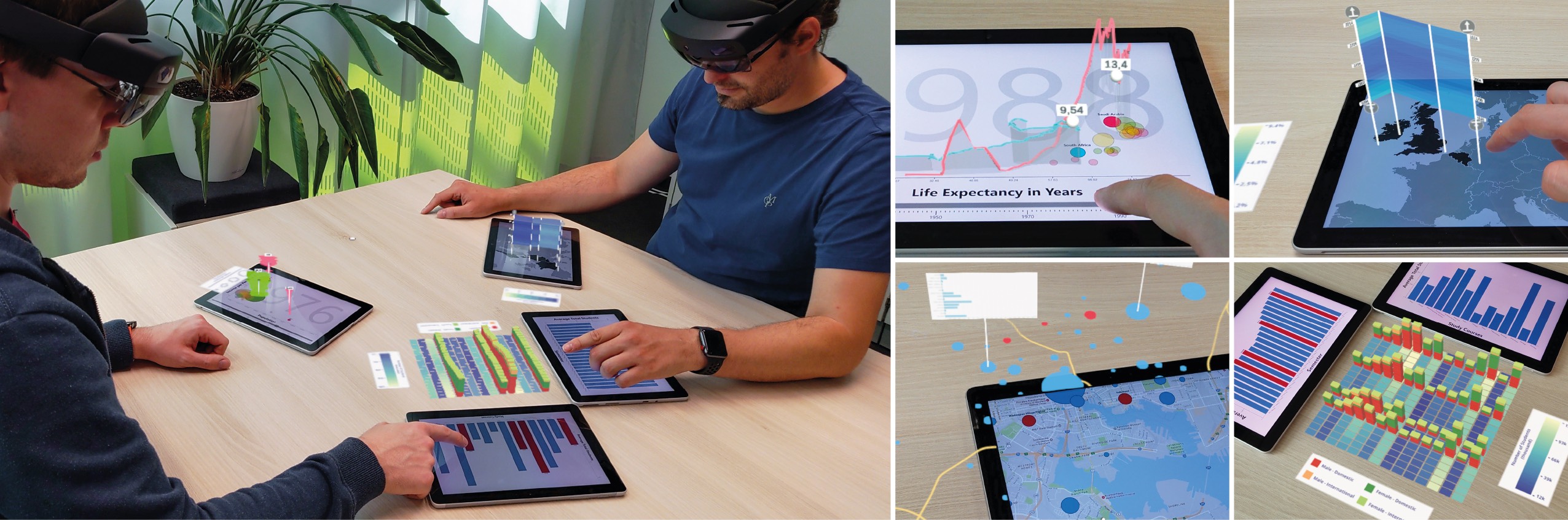

MARVIS: Combining Mobile Devices and Augmented Reality for Visual Data Analysis

Ricardo Langner (Technische Universität Dresden), Marc Satkowski (Technische Universität Dresden), Wolfgang Büschel (Technische Universität Dresden), Raimund Dachselt (Technische Universität Dresden)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021LangnerMarvis,

title = {MARVIS: Combining Mobile Devices and Augmented Reality for Visual Data Analysis},

author = {Ricardo Langner (Technische Universität Dresden) and Marc Satkowski (Technische Universität Dresden) and Wolfgang Büschel (Technische Universität Dresden) and Raimund Dachselt (Technische Universität Dresden)},

url = {https://imld.de/marvis, Website - Marvis @ IMLD

https://twitter.com/imldresden, Twitter - IMLD

https://youtu.be/DHvnkpmjUhw, YouTube - Teaser Video

https://youtu.be/bDS7kRFYgjY, YouTube - Video Figure},

doi = {10.1145/3411764.3445593},

year = {2021},

date = {2021-05-01},

abstract = {We present MARVIS, a conceptual framework that combines mobile devices and head-mounted Augmented Reality (AR) for visual data analysis. We propose novel concepts and techniques addressing visualization-specific challenges. By showing additional 2D and 3D information around and above displays, we extend their limited screen space. AR views between displays as well as linking and brushing are also supported, making relationships between separated visualizations plausible. We introduce the design process and rationale for our techniques. To validate MARVIS' concepts and show their versatility and widespread applicability, we describe six implemented example use cases. Finally, we discuss insights from expert hands-on reviews. As a result, we contribute to a better understanding of how the combination of one or more mobile devices with AR can benefit visual data analysis. By exploring this new type of visualization environment, we hope to provide a foundation and inspiration for future mobile data visualizations.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

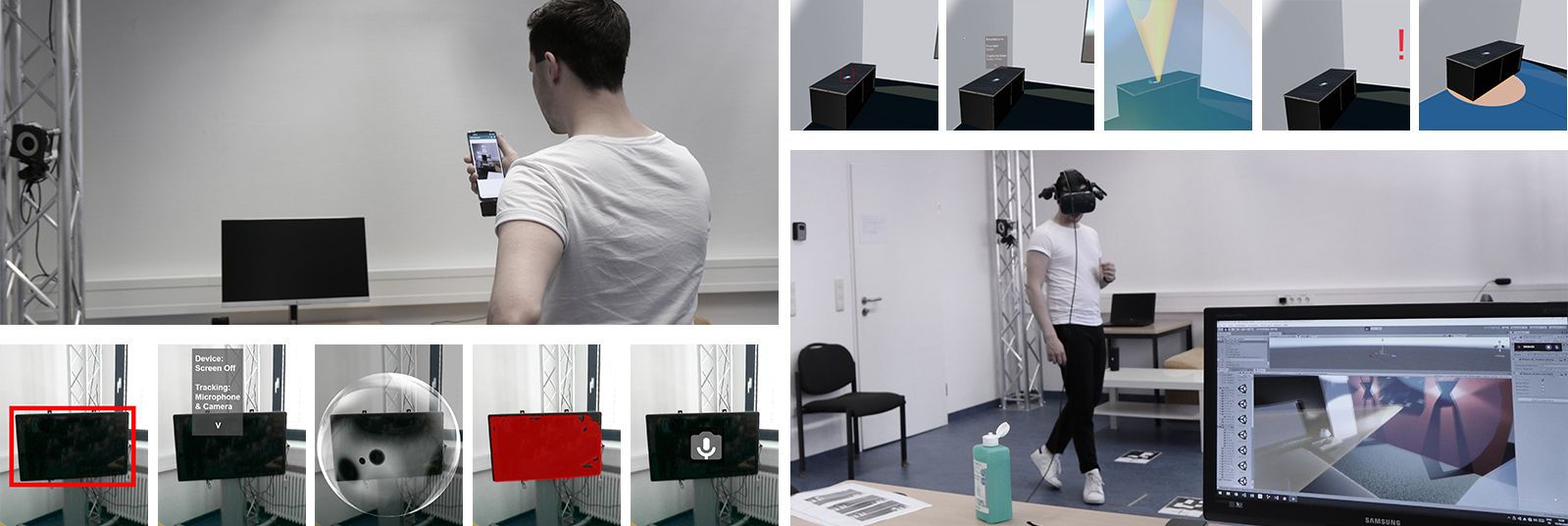

MIRIA: A Mixed Reality Toolkit for the In-Situ Visualization and Analysis of Spatio-Temporal Interaction Data

Wolfgang Büschel (Interactive Media Lab Dresden, Technische Universität Dresden), Anke Lehmann (Interactive Media Lab Dresden, Technische Universität Dresden), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021BueschelMiria,

title = {MIRIA: A Mixed Reality Toolkit for the In-Situ Visualization and Analysis of Spatio-Temporal Interaction Data},

author = {Wolfgang Büschel (Interactive Media Lab Dresden, Technische Universität Dresden) and Anke Lehmann (Interactive Media Lab Dresden, Technische Universität Dresden) and Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden)},

url = {https://imld.de, Website - Interactive Media Lab Dresden

https://twitter.com/imldresden, Twitter - Interactive Media Lab Dresden

https://youtu.be/TZUMpbExkds, YouTube - Teaser

https://youtu.be/mnFZ-skGH5s, YouTube - Video},

doi = {10.1145/3411764.3445651},

year = {2021},

date = {2021-05-01},

abstract = {In this paper, we present MIRIA, a Mixed Reality Interaction Analysis toolkit designed to support the in-situ visual analysis of user interaction in mixed reality and multi-display environments. So far, there are few options to effectively explore and analyze interaction patterns in such novel computing systems. With MIRIA, we address this gap by supporting the analysis of user movement, spatial interaction, and event data by multiple, co-located users directly in the original environment. Based on our own experiences and an analysis of the typical data, tasks, and visualizations used in existing approaches, we identify requirements for our system. We report on the design and prototypical implementation of MIRIA, which is informed by these requirements and offers various visualizations such as 3D movement trajectories, position heatmaps, and scatterplots. To demonstrate the value of MIRIA for real-world analysis tasks, we conducted expert feedback sessions using several use cases with authentic study data.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Oh, Snap! A Fabrication Pipeline to Magnetically Connect Conventional and 3D-Printed Electronics

Martin Schmitz (Technical University of Darmstadt), Jan Riemann (Technical University of Darmstadt), Florian Müller (Technical University of Darmstadt), Steffen Kreis (Technical University of Darmstadt), Max Mühlhäuser (Technical University of Darmstadt)

Abstract | Tags: Best Paper, Full Paper | Links:

@inproceedings{2021SchmitzSnap,

title = {Oh, Snap! A Fabrication Pipeline to Magnetically Connect Conventional and 3D-Printed Electronics},

author = {Martin Schmitz (Technical University of Darmstadt) and Jan Riemann (Technical University of Darmstadt) and Florian Müller (Technical University of Darmstadt) and Steffen Kreis (Technical University of Darmstadt) and Max Mühlhäuser (Technical University of Darmstadt)},

url = {https://www.teamdarmstadt.de, Website - Telecooperation Lab

https://www.facebook.com/teamdarmstadt/, Facebook - Team Darmstadt

https://youtu.be/ado4a_chzqo, YouTube - Teaser},

doi = {10.1145/3411764.3445641},

year = {2021},

date = {2021-05-01},

abstract = {3D printing has revolutionized rapid prototyping by speeding up the creation of custom-shaped objects. With the rise of multi-material 3D printers, these custom-shaped objects can now be made interactive in a single pass through passive conductive structures. However, connecting conventional electronics to these conductive structures often still requires time-consuming manual assembly involving many wires, soldering or gluing. To alleviate these shortcomings, we propose Oh, Snap!: a fabrication pipeline and interfacing concept to magnetically connect a 3D-printed object equipped with passive sensing structures to conventional sensing electronics. To this end, Oh, Snap! utilizes ferromagnetic and conductive 3D-printed structures, printable in a single pass on standard printers. We further present a proof-of-concept capacitive sensing board that enables easy and robust magnetic assembly to quickly create interactive 3D-printed objects. We evaluate Oh, Snap! by assessing the robustness and quality of the connection and demonstrate its broad applicability by a series of example applications.},

keywords = {Best Paper, Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

On Activism and Academia: Reflecting Together and Sharing Experiences Among Critical Friends

Debora de Castro Leal (University of Siegen), Angelika Strohmayer (Northumbria University), Max Krüger (University of Siegen)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021deCastroOn,

title = {On Activism and Academia: Reflecting Together and Sharing Experiences Among Critical Friends},

author = {Debora de Castro Leal (University of Siegen) and Angelika Strohmayer (Northumbria University) and Max Krüger (University of Siegen)},

url = {https://www.linkedin.com/in/deboracastroleal/, LinkedIn - Debora de Castro Leal},

doi = {10.1145/3411764.3445263},

year = {2021},

date = {2021-05-01},

abstract = {In recent years, HCI and CSCW work has increasingly begun to address complex social problems and issues of social justice worldwide. Such activist-leaning work is not without problems. Through the experiences and reflections of an activist becoming academic and an academic becoming an activist, we outline difficulties such as (1) the risk of perpetuating violence, oppression and exploitation when working with marginalised communities, (2) the reception of activist-academic work within our academic communities, and (3) problems of social justice that exist within our academic communities. Building on our own experiences, practices and existing literature from a variety of disciplines, we advocate for the possibility of an activist-academic practice, outline possible ways forward and formulate questions we need to answer for HCI to contribute to a more just world.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Physiological and Perceptual Responses to Athletic Avatars while Cycling in Virtual Reality

Martin Kocur (University of Regensburg), Florian Habler (University of Regensburg), Valentin Schwind (Frankfurt University of Applied Sciences), Paweł W. Woźniak (Utrecht University), Christian Wolff (University of Regensburg), Niels Henze (University of Regensburg)

Abstract | Tags: Full Paper | Links:

@inproceedings{2021KocurPhysiological,

title = {Physiological and Perceptual Responses to Athletic Avatars while Cycling in Virtual Reality},

author = {Martin Kocur (University of Regensburg) and Florian Habler (University of Regensburg) and Valentin Schwind (Frankfurt University of Applied Sciences) and Paweł W. Woźniak (Utrecht University) and Christian Wolff (University of Regensburg) and Niels Henze (University of Regensburg)},

url = {http://interactionlab.io, Website - InteractionLab.io

https://www.facebook.com/InteractionLab.io, Facebook - InteractionLab.io},

doi = {10.1145/3411764.3445160},

year = {2021},

date = {2021-05-01},