We are happy to present this year’s contributions from the German HCI labs to CHI 2021! Feel free to browse our list of publications: there are 53 full papers (including one Best Paper and five Honorable Mentions), 16 Late-Breaking Works (LBWs), and many demonstrations, workshops, and other publications. Please send us an email if your paper is missing or if an entry needs correcting.

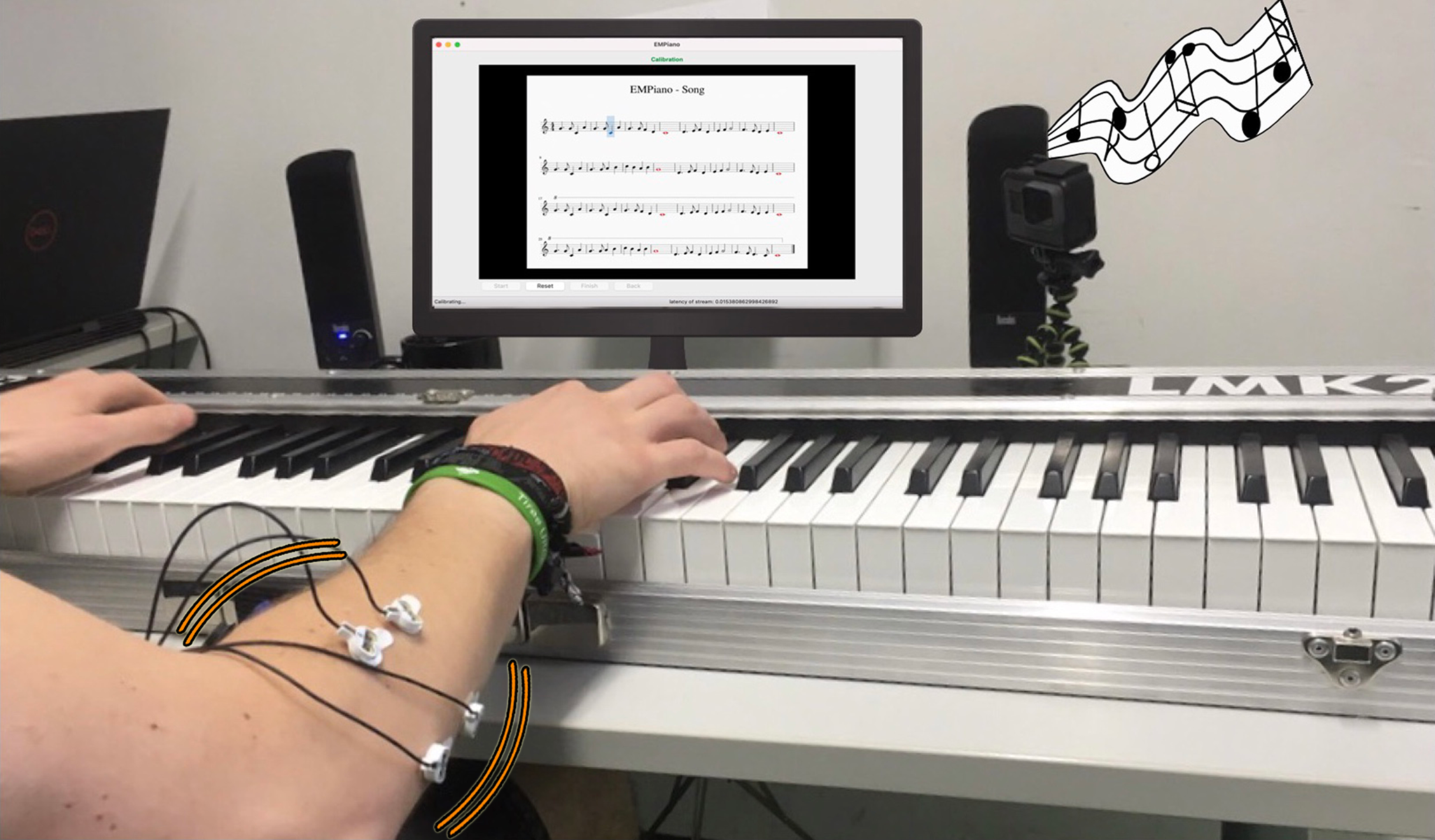

EMPiano: Electromyographic Pitch Control on the Piano Keyboard

Annika Kilian (LMU Munich), Jakob Karolus (LMU Munich), Thomas Kosch (TU Darmstadt), Albrecht Schmidt (LMU Munich), Paweł W. Woźniak (Utrecht University)

Tags: Interactivity/Demonstration

@inproceedings{2021KilianEMPiano,

title = {EMPiano: Electromyographic Pitch Control on the Piano Keyboard},

author = {Annika Kilian (LMU Munich) and Jakob Karolus (LMU Munich) and Thomas Kosch (TU Darmstadt) and Albrecht Schmidt (LMU Munich) and Paweł W. Woźniak (Utrecht University)},

url = {https://www.um.informatik.uni-muenchen.de/, Website - Human-Centered Ubiquitous Media},

doi = {10.1145/3411763.3451556},

year = {2021},

date = {2021-05-01},

abstract = {The piano keyboard offers a significant range and polyphony for well-trained pianists. Yet, apart from dynamics, the piano is incapable of translating expressive movements such as vibrato onto the played note. Adding sound effects requires additional modalities. A pitch wheel can be found on the side of most electric pianos. To add a vibrato or pitch bend, the pianist needs to actively operate the pitch wheel with their hand, which requires cognitive effort and may disrupt play. In this work, we present EMPiano, a system that allows pianists to incorporate a soft pitch vibrato into their play seamlessly. Vibrato can be triggered through muscle activity and is recognized via electromyography. This allows EMPiano to integrate into piano play. Our system offers new interaction opportunities with the piano to increase the player's potential for expressive play. In this paper, we contribute the open-source implementation and the workflow behind EMPiano.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

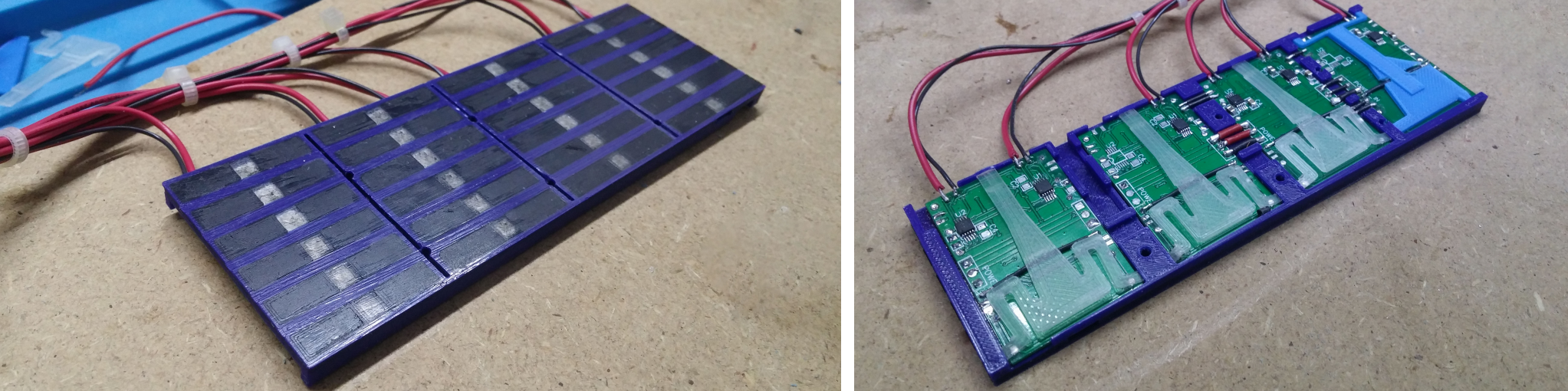

Let's Frets! Mastering Guitar Playing with Capacitive Sensing and Visual Guidance

Karola Marky (Technical University of Darmstadt), Andreas Weiß (Music School Schallkultur), Florian Müller (Technical University of Darmstadt), Martin Schmitz (Technical University of Darmstadt), Max Mühlhäuser (Technical University of Darmstadt), Thomas Kosch (Technical University of Darmstadt)

Tags: Interactivity/Demonstration

@inproceedings{2021MarkyFrets,

title = {Let's Frets! Mastering Guitar Playing with Capacitive Sensing and Visual Guidance},

author = {Karola Marky (Technical University of Darmstadt) and Andreas Weiß (Music School Schallkultur) and Florian Müller (Technical University of Darmstadt) and Martin Schmitz (Technical University of Darmstadt) and Max Mühlhäuser (Technical University of Darmstadt) and Thomas Kosch (Technical University of Darmstadt)},

url = {http://www.teamdarmstadt.de/, Website - Team Darmstadt},

doi = {10.1145/3411763.3451536},

year = {2021},

date = {2021-05-01},

abstract = {Mastering the guitar requires regular exercise to develop new skills and maintain existing abilities. We present Let's Frets - a modular guitar support system that provides visual guidance through LEDs that are integrated into a capacitive fretboard to support the practice of chords, scales, melodies, and exercises. Additional feedback is provided through a 3D-printed fretboard that senses the finger positions through capacitive sensing. We envision Let's Frets as an integrated guitar support system that raises the awareness of guitarists about their playing styles, their training progress, the composition of new pieces, and facilitating remote collaborations between teachers as well as guitar students. This interactivity demonstrates Let's Frets with an augmented fretboard and supporting software that runs on a mobile device.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

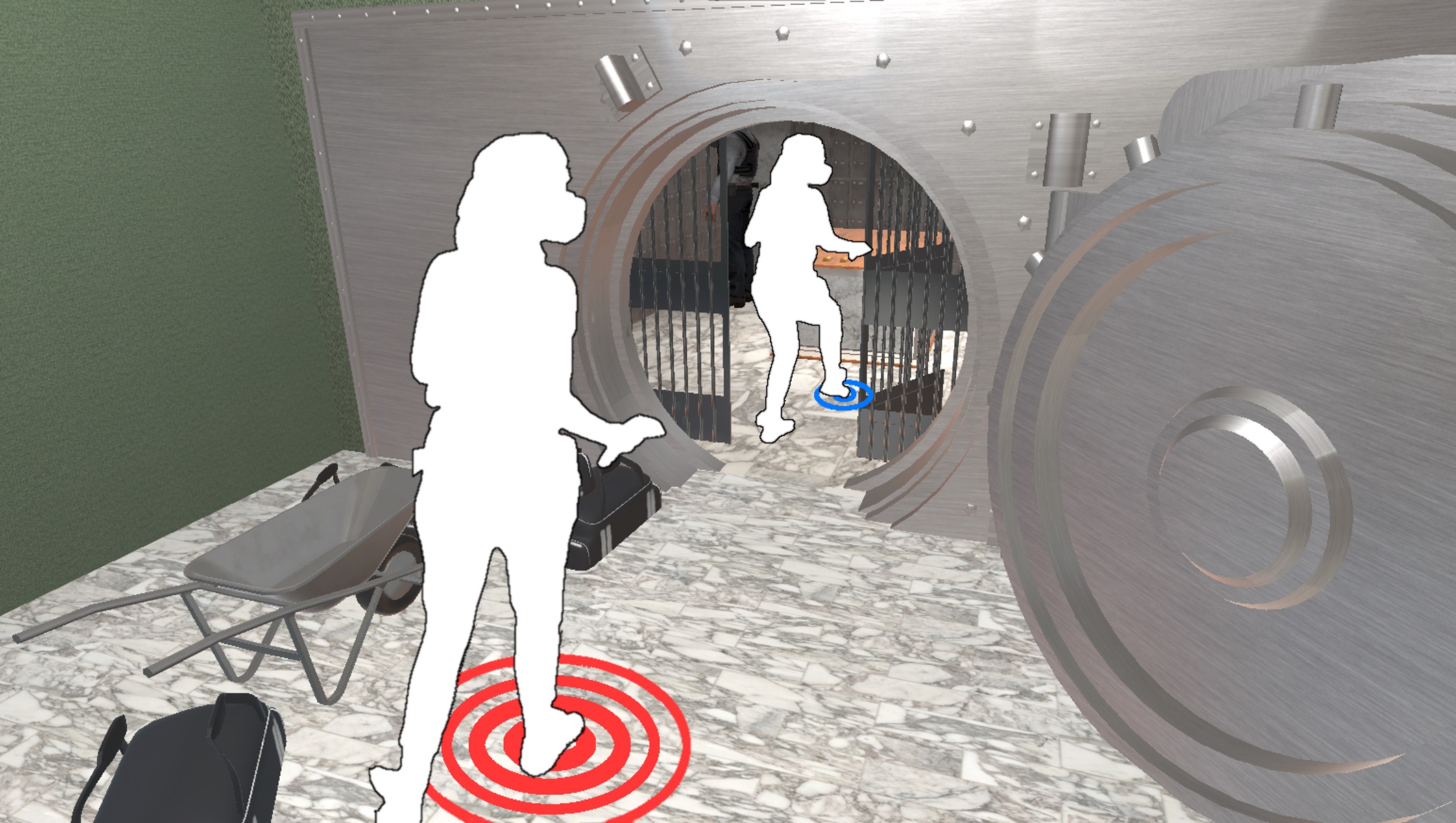

VRsneaky: Stepping into an Audible Virtual World with Gait-Aware Auditory Feedback

Felix Dietz (Bundeswehr University Munich), Matthias Hoppe (LMU Munich), Jakob Karolus (LMU Munich), Pawel Wozniak (Utrecht University), Albrecht Schmidt (LMU Munich), Tonja Machulla (LMU Munich)

Tags: Interactivity/Demonstration

@inproceedings{Dietz2021VRsneaky,

title = {VRsneaky: Stepping into an Audible Virtual World with Gait-Aware Auditory Feedback},

author = {Felix Dietz (Bundeswehr University Munich) and Matthias Hoppe (LMU Munich) and Jakob Karolus (LMU Munich) and Pawel Wozniak (Utrecht University) and Albrecht Schmidt (LMU Munich) and Tonja Machulla (LMU Munich)},

url = {https://www.unibw.de/usable-security-and-privacy, Website - Usable Security and Privacy Group, Research Institute CODE

http://www.medien.ifi.lmu.de, Media Informatics Group, LMU Munich

https://youtu.be/gSoe4i-aR_g, YouTube Video},

doi = {10.1145/3334480.3383168},

year = {2021},

date = {2021-05-01},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}