Below is a list of our publications at CHI 2022. There is also a schedule highlighting our talks. Is your publication missing? Send us an email: contact@germanhci.de

(Don't) stand by me: How trait psychopathy and NPC emotion influence player perceptions, verbal responses, and movement behaviours in a gaming task

Martin Johannes Dechant (University of Saskatchewan), Robin Welsch (LMU Munich), Julian Frommel (University of Saskatchewan), Regan Mandryk (University of Saskatchewan)

Tags: Full Paper

@inproceedings{2022DechantStandByMe,

title = {(Don't) stand by me: How trait psychopathy and NPC emotion influence player perceptions, verbal responses, and movement behaviours in a gaming task},

author = {Martin Johannes Dechant (University of Saskatchewan) and Robin Welsch (LMU Munich) and Julian Frommel (University of Saskatchewan) and Regan Mandryk (University of Saskatchewan)},

url = {hci.usask.ca/, Website Lab

https://youtu.be/Wi3Kg_UkJOk, YouTube - Teaser Video},

doi = {10.1145/3491102.3502014},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Social interactions are an essential part of many digital games, and provide benefits to players; however, problematic social interactions also lead to harm. To inform our understanding of the origins of harmful social behaviours in gaming contexts, we examine how trait psychopathy influences player perceptions and behaviours within a gaming task. After measuring participants’ (n=385) trait-level boldness, meanness, and disinhibition, we expose them to neutral and angry social interactions with a non-player character (NPC) in a gaming task and assess their perceptions, verbal responses, and movement behaviours. Our findings demonstrate that the traits significantly influence interpretation of NPC emotion, verbal responses to the NPC, and movement behaviours around the NPC. These insights can inform the design of social games and communities and can help designers and researchers better understand how social functioning translates into gaming contexts.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

"I Didn't Catch That, But I'll Try My Best": Anticipatory Error Handling in a Voice Controlled Game

Nima Zargham (Digital Media Lab, University of Bremen), Johannes Pfau (Digital Media Lab, University of Bremen), Tobias Schnackenberg (Digital Media Lab, University of Bremen), Rainer Malaka (Digital Media Lab, University of Bremen)

Tags: Full Paper

@inproceedings{2022ZarghamCatch,

title = {"I Didn't Catch That, But I'll Try My Best": Anticipatory Error Handling in a Voice Controlled Game},

author = {Nima Zargham (Digital Media Lab, University of Bremen) and Johannes Pfau (Digital Media Lab, University of Bremen) and Tobias Schnackenberg (Digital Media Lab, University of Bremen) and Rainer Malaka (Digital Media Lab, University of Bremen)},

url = {https://www.uni-bremen.de/dmlab/team, Website Lab

https://www.youtube.com/watch?v=9LSZK8TG7C4, YouTube - Video Figure},

doi = {10.1145/3491102.3502115},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Advances in speech recognition, language processing and natural interaction have led to an increased industrial and academic interest. While the robustness and usability of such systems are steadily increasing, speech-based systems are still susceptible to recognition errors. This makes intelligent error handling of utmost importance for the success of those systems. In this work, we integrated anticipatory error handling for a voice-controlled video game where the game would perform a locally optimized action in respect to goal completion and obstacle avoidance, when a command is not recognized. We evaluated the user experience of our approach versus traditional, repetition-based error handling (N=34). Our results indicate that implementing anticipatory error handling can improve the usability of a system, if it follows the intention of the user. Otherwise, it impairs the user experience, even when deciding for technically optimal decisions.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

"Your Eyes Tell You Have Used This Password Before": Identifying Password Reuse from Gaze and Keystroke Dynamics

Yasmeen Abdrabou (Bundeswehr University Munich, University of Glasgow), Johannes Schütte (Bundeswehr University Munich), Ahmed Shams (Fatura LLC Egypt), Ken Pfeuffer (Aarhus University, Bundeswehr University Munich), Daniel Buschek (University of Bayreuth), Mohamed Khamis (University of Glasgow), Florian Alt (Bundeswehr University Munich)

Tags: Full Paper

@inproceedings{2022AbdrabouPasswordReuse,

title = {"Your Eyes Tell You Have Used This Password Before": Identifying Password Reuse from Gaze and Keystroke Dynamics},

author = {Yasmeen Abdrabou (Bundeswehr University Munich, University of Glasgow) and Johannes Schütte (Bundeswehr University Munich) and Ahmed Shams (Fatura LLC Egypt) and Ken Pfeuffer (Aarhus University, Bundeswehr University Munich) and Daniel Buschek (University of Bayreuth) and Mohamed Khamis (University of Glasgow) and Florian Alt (Bundeswehr University Munich)},

url = {https://www.unibw.de/usable-security-and-privacy-en, Website Lab

https://youtu.be/22eRF52FLdo, YouTube - Teaser Video

https://youtu.be/_5UfR7GUoXk, YouTube - Video Figure},

doi = {10.1145/3491102.3517531},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {A significant drawback of text passwords for end-user authentication is password reuse. We propose a novel approach to detect password reuse by leveraging gaze as well as typing behavior and study its accuracy. We collected gaze and typing behavior from 49 users while creating accounts for 1) a webmail client and 2) a news website. While most participants came up with a new password, 32% reported having reused an old password when setting up their accounts. We then compared different ML models to detect password reuse from the collected data. Our models achieve an accuracy of up to 87.7% in detecting password reuse from gaze, 75.8% accuracy from typing, and 88.75% when considering both types of behavior. We demonstrate that using gaze, password reuse can already be detected during the registration process, before users entered their password. Our work paves the road for developing novel interventions to prevent password reuse.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

ADHD and Technology Research – Investigated by Neurodivergent Readers

Katta Spiel (Human-Computer Interaction Group, TU Wien), Eva Hornecker (Bauhaus-Universität Weimar), Rua Mae Williams (University of Florida), Judith Good (University of Sussex)

Tags: Full Paper

@inproceedings{2022SpielADHD,

title = {ADHD and Technology Research -- Investigated by Neurodivergent Readers},

author = {Katta Spiel (Human-Computer Interaction Group, TU Wien) and Eva Hornecker (Bauhaus-Universität Weimar) and Rua Mae Williams (University of Florida) and Judith Good (University of Sussex)},

url = {https://www.uni-weimar.de/en/media/chairs/computer-science-department/human-computer-interaction/, Website Lab},

doi = {10.1145/3411764.3445196},

year = {2022},

date = {2022-04-30},

abstract = {Technology research for neurodivergent conditions is largely shaped by research aims which privilege neuro-normative outcomes. As such, there is an epistemic imbalance in meaning making about these technologies. We conducted a critical literature review of technologies designed for people with ADHD, focusing on how ADHD is framed, the research aims and approaches, the role of people with ADHD within the research process, and the types of systems being developed within Computing and HCI. Our analysis and review is conducted explicitly from an insider perspective, bringing our perspectives as neurodivergent researchers to the topic of technologies in the context of ADHD. We found that 1) technologies are largely used to 'mitigate' the experiences of ADHD which are perceived as disruptive to neurotypical standards of behaviour; 2) little HCI research in the area invites this population to co-construct the technologies or to leverage neurodivergent experiences in the construction of research aims; and 3) participant resistance to deficit frames can be read within the researchers' own accounts of participant actions. We discuss the implications of this status quo for disabled people and technology researchers alike, and close with a set of recommendations for future work in this area.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

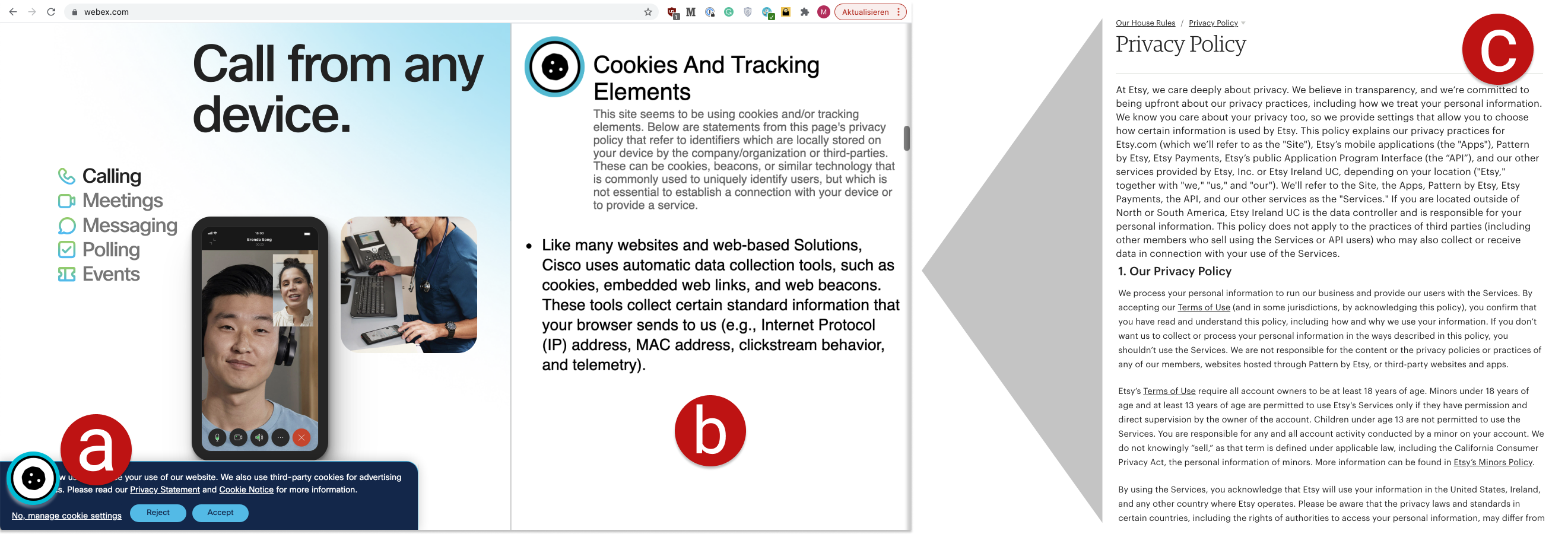

Automating Contextual Privacy Policies: Design and Evaluation of a Production Tool for Digital Consumer Privacy Awareness

Maximiliane Windl (LMU Munich), Niels Henze (University of Regensburg), Albrecht Schmidt (LMU Munich), Sebastian S. Feger (LMU Munich)

Tags: Full Paper

@inproceedings{2022WindlAutomating,

title = {Automating Contextual Privacy Policies: Design and Evaluation of a Production Tool for Digital Consumer Privacy Awareness},

author = {Maximiliane Windl (LMU Munich) and Niels Henze (University of Regensburg) and Albrecht Schmidt (LMU Munich) and Sebastian S. Feger (LMU Munich)},

url = {https://maximiliane-windl.com/, Website Author

https://www.facebook.com/maximiliane.pauline/, Facebook Author

https://www.linkedin.com/in/maximiliane-windl-8889b6195/, LinkedIn Author

https://youtu.be/uCFj_nBdzsk, YouTube - Teaser Video},

doi = {10.1145/3491102.3517688},

year = {2022},

date = {2022-04-30},

abstract = {Users avoid engaging with privacy policies because they are lengthy and complex, making it challenging to retrieve relevant information. In response, research proposed contextual privacy policies (CPPs) that embed relevant privacy information directly into their affiliated contexts. To date, CPPs are limited to concept showcases. This work evolves CPPs into a production tool that automatically extracts and displays concise policy information. We first evaluated the technical functionality on the US's 500 most visited websites with 59 participants. Based on our results, we further revised the tool to deploy it in the wild with 11 participants over ten days. We found that our tool is effective at embedding CPP information on websites. Moreover, we found that the tool's usage led to more reflective privacy behavior, making CPPs powerful in helping users understand the consequences of their online activities. We contribute design implications around CPP presentation to inform future systems design.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

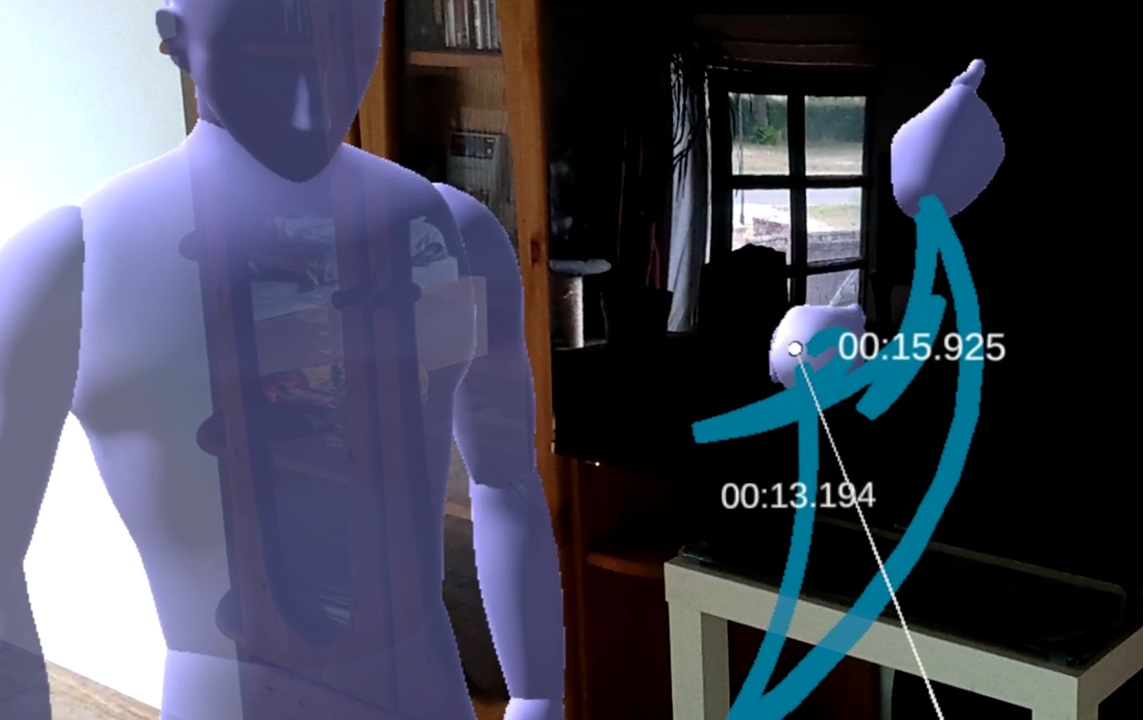

AvatAR: An Immersive Analysis Environment for Human Motion Data Combining Interactive 3D Avatars and Trajectories

Patrick Reipschläger (Autodesk Research, Toronto, Ontario, Canada; Technische Universität Dresden, Dresden, Germany), Frederik Brudy (Autodesk Research, Toronto, Ontario, Canada), Raimund Dachselt (Technische Universität Dresden, Dresden, Germany), Justin Matejka (Autodesk Research, Toronto, Ontario, Canada), George Fitzmaurice (Autodesk Research, Toronto, Ontario, Canada), Fraser Anderson (Autodesk Research, Toronto, Ontario, Canada)

Tags: Full Paper

@inproceedings{2022ReipschlaegerAvatAR,

title = {AvatAR: An Immersive Analysis Environment for Human Motion Data Combining Interactive 3D Avatars and Trajectories},

author = {Patrick Reipschläger (Autodesk Research, Toronto, Ontario, Canada; Technische Universität Dresden, Dresden, Germany) and Frederik Brudy (Autodesk Research, Toronto, Ontario, Canada) and Raimund Dachselt (Technische Universität Dresden, Dresden, Germany) and Justin Matejka (Autodesk Research, Toronto, Ontario, Canada) and George Fitzmaurice (Autodesk Research, Toronto, Ontario, Canada) and Fraser Anderson (Autodesk Research, Toronto, Ontario, Canada)},

url = {https://www.imld.de, Website Lab},

doi = {10.1145/3491102.3517676},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Analysis of human motion data can reveal valuable insights about the utilization of space and interaction of humans with their environment. To support this, we present AvatAR, an immersive analysis environment for the in-situ visualization of human motion data, that combines 3D trajectories, virtual avatars of people’s movement, and a detailed representation of their posture. Additionally, we describe how to embed visualizations directly into the environment, showing what a person looked at or what surfaces they touched, and how the avatar’s body parts can be used to access and manipulate those visualizations. AvatAR combines an AR HMD with a tablet to provide both mid-air and touch interaction for system control, as well as an additional overview to help users navigate the environment. We implemented a prototype and present several scenarios to show that AvatAR can enhance the analysis of human motion data by making data not only explorable, but experienceable.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

BikeAR: Understanding Cyclists’ Crossing Decision-Making at Uncontrolled Intersections using Augmented Reality

Andrii Matviienko (Technical University of Darmstadt), Florian Müller (LMU Munich), Dominik Schön (Technical University of Darmstadt), Paul Seesemann (Technical University of Darmstadt), Sebastian Günther (Technical University of Darmstadt), Max Mühlhäuser (Technical University of Darmstadt)

Tags: Full Paper

@inproceedings{2022MatviienkoBikeAR,

title = {BikeAR: Understanding Cyclists’ Crossing Decision-Making at Uncontrolled Intersections using Augmented Reality},

author = {Andrii Matviienko (Technical University of Darmstadt) and Florian Müller (LMU Munich) and Dominik Schön (Technical University of Darmstadt) and Paul Seesemann (Technical University of Darmstadt) and Sebastian Günther (Technical University of Darmstadt) and Max Mühlhäuser (Technical University of Darmstadt)},

url = {https://teamdarmstadt.de/, Website Lab

https://www.facebook.com/teamdarmstadt, Facebook Lab},

doi = {10.1145/3491102.3517560},

year = {2022},

date = {2022-04-30},

abstract = {Cycling has become increasingly popular as a means of transportation. However, cyclists remain a highly vulnerable group of road users. According to accident reports, one of the most dangerous situations for cyclists are uncontrolled intersections, where cars approach from both directions. To address this issue and assist cyclists in crossing decision-making at uncontrolled intersections, we designed two visualizations that: (1) highlight occluded cars through an X-ray vision and (2) depict the remaining time the intersection is safe to cross via a Countdown. To investigate the efficiency of these visualizations, we proposed an Augmented Reality simulation as a novel evaluation method, in which the above visualizations are represented as AR, and conducted a controlled experiment with 24 participants indoors. We found that the X-ray ensures a fast selection of shorter gaps between cars, while the Countdown facilitates a feeling of safety and provides a better intersection overview.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Care Workers Making Use of Robots: Results of a Three-Month Study on Human-Robot Interaction within a Care Home

Felix Carros (Information Systems and New Media, University of Siegen, Siegen, Germany), Isabel Schwaninger (HCI Group, Faculty of Informatics, TU Wien, Vienna, Austria), Adrian Preussner (Information Systems and New Media, University of Siegen, Siegen, Germany), Dave Randall (Information Systems and New Media, University of Siegen, Siegen, Germany), Rainer Wieching (Information Systems and New Media, University of Siegen, Siegen, Germany), Geraldine Fitzpatrick (TU Wien, Vienna, Austria), Volker Wulf (Institute of Information Systems and New Media, Siegen, Germany)

Tags: Full Paper

@inproceedings{2022CarrosCareWorkers,

title = {Care Workers Making Use of Robots: Results of a Three-Month Study on Human-Robot Interaction within a Care Home},

author = {Felix Carros (Information Systems and New Media, University of Siegen, Siegen, Germany) and Isabel Schwaninger (HCI Group, Faculty of Informatics, TU Wien, Vienna, Austria) and Adrian Preussner (Information Systems and New Media, University of Siegen, Siegen, Germany) and Dave Randall (Information Systems and New Media, University of Siegen, Siegen, Germany) and Rainer Wieching (Information Systems and New Media, University of Siegen, Siegen, Germany) and Geraldine Fitzpatrick (TU Wien, Vienna, Austria) and Volker Wulf (Institute of Information Systems and New Media, Siegen, Germany)},

url = {https://www.wineme.uni-siegen.de, Website Lab

https://twitter.com/sozioinformativ, Twitter Lab},

doi = {10.1145/3491102.3517435},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Research on social robots in care has often focused on either the care recipients or the technology itself, neglecting the care workers who, in and through their collaborative and coordinative practices, will need to work with the robots. To better understand these interactions with a social robot (Pepper), we undertook a 3 month long-term study within a care home to gain empirical insights into the way the robot was used. We observed how care workers learned to use the device, applied it to their daily work life, and encountered obstacles. Our findings show that the care workers used the robot regularly (1:07 hours/day) mostly in one-to-one interactions with residents. While the robot had a limited effect on reducing the workload of care workers, it had other positive effects, demonstrating the potential to enhance the quality of care.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Consent in the Age of AR: Investigating The Comfort With Displaying Personal Information in Augmented Reality

Jan Ole Rixen (Institute of Media Informatics, Ulm University), Mark Colley (Institute of Media Informatics, Ulm University), Ali Askari (Institute of Media Informatics, Ulm University), Jan Gugenheimer (Télécom Paris - LTCI, Institut Polytechnique de Paris), Enrico Rukzio (Institute of Media Informatics, Ulm University)

Tags: Full Paper

@inproceedings{2022RixenPersonalInformationAR,

title = {Consent in the Age of AR: Investigating The Comfort With Displaying Personal Information in Augmented Reality},

author = {Jan Ole Rixen (Institute of Media Informatics, Ulm University) and Mark Colley (Institute of Media Informatics, Ulm University) and Ali Askari (Institute of Media Informatics, Ulm University) and Jan Gugenheimer (Télécom Paris - LTCI, Institut Polytechnique de Paris) and Enrico Rukzio (Institute of Media Informatics, Ulm University)},

doi = {10.1145/3491102.3502140},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Social Media (SM) has shown that we adapt our communication and disclosure behaviors to available technological opportunities. Head-mounted Augmented Reality (AR) will soon allow to effortlessly display the information we disclosed not isolated from our physical presence (e.g., on a smartphone) but visually attached to the human body. In this work, we explore how the medium (AR vs. Smartphone), our role (being augmented vs. augmenting), and characteristics of information types (e.g., level of intimacy, self-disclosed vs. non-self-disclosed) impact the users’ comfort when displaying personal information. Conducting an online survey (N=148), we found that AR technology and being augmented negatively impacted this comfort. Additionally, we report that AR mitigated the effects of information characteristics compared to those they had on smartphones. In light of our results, we discuss that information augmentation should be built on consent and openness, focusing more on the comfort of the augmented rather than the technological possibilities.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

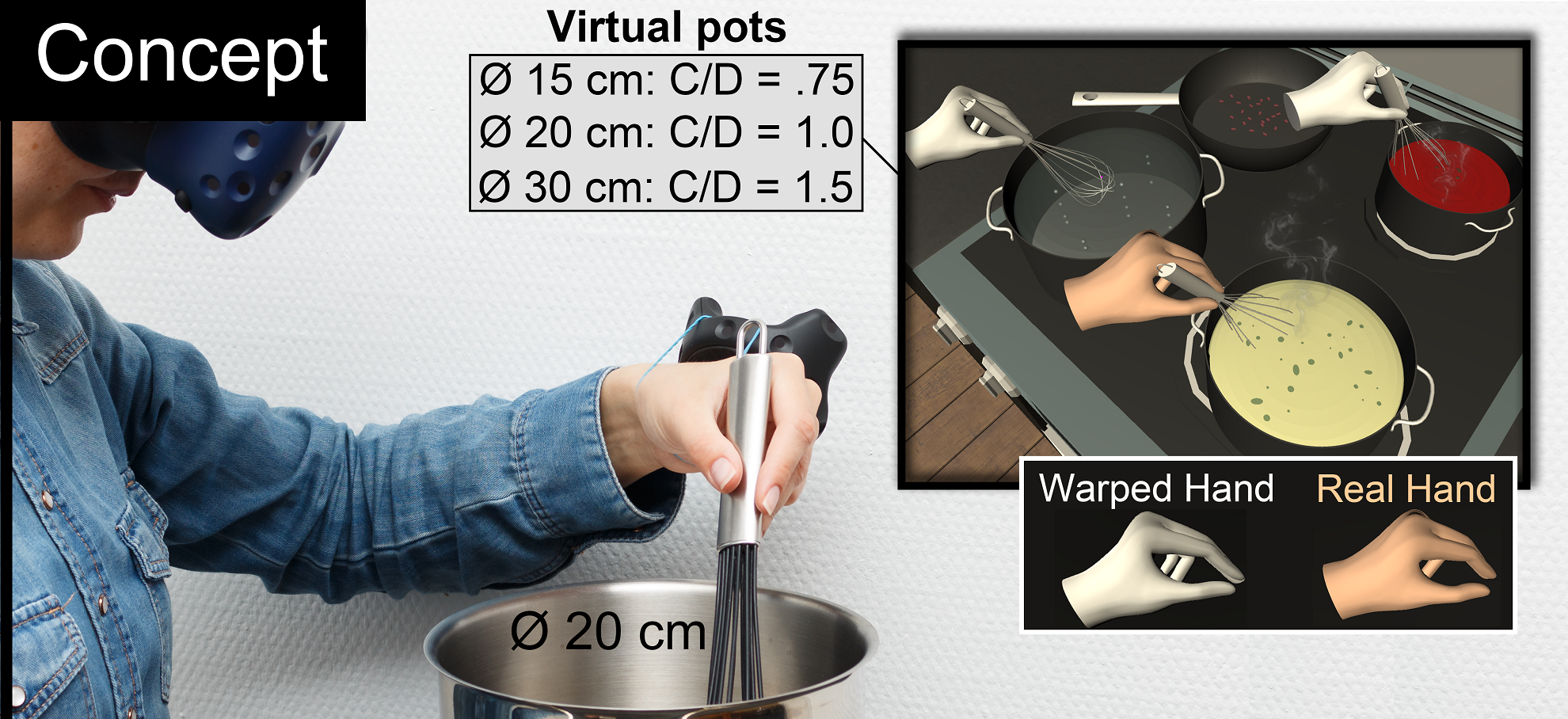

Designing Visuo-Haptic Illusions with Proxies in Virtual Reality: Exploration of Grasp, Movement Trajectory and Object Mass

Martin Feick (DFKI, Saarland Informatics Campus), Kora P. Regitz (DFKI, Saarland Informatics Campus), Anthony Tang (University of Toronto), Antonio Krüger (DFKI, Saarland Informatics Campus)

Tags: Full Paper

@inproceedings{2022FeickVisuoHapticIllusions,

title = {Designing Visuo-Haptic Illusions with Proxies in Virtual Reality: Exploration of Grasp, Movement Trajectory and Object Mass},

author = {Martin Feick (DFKI, Saarland Informatics Campus) and Kora P. Regitz (DFKI, Saarland Informatics Campus) and Anthony Tang (University of Toronto) and Antonio Krüger (DFKI, Saarland Informatics Campus)},

url = {https://umtl.cs.uni-saarland.de/, Website Lab

https://twitter.com/mafeick, Twitter Author

https://www.youtube.com/watch?v=Xp_2tldYA7Q, YouTube - Teaser Video

https://www.youtube.com/watch?v=NnTAEs97an0, YouTube - Video Figure},

doi = {10.1145/3491102.3517671},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Visuo-haptic illusions are a method to expand proxy-based interactions in VR by introducing unnoticeable discrepancies between the virtual and real world. Yet how different design variables affect the illusions with proxies is still unclear. To unpack a subset of variables, we conducted two user studies with 48 participants to explore the impact of (1) different grasping types and movement trajectories, and (2) different grasping types and object masses on the discrepancy which may be introduced. Our Bayes analysis suggests that grasping types and object masses (≤ 500 g) did not noticeably affect the discrepancy, but for movement trajectory, results were inconclusive. Further, we identified a significant difference between (un)restricted movement trajectories. Our data shows considerable differences in participants’ proprioceptive accuracy, which seem to correlate with their prior VR experience. Finally, we illustrate the impact of our key findings on the visuo-haptic illusion design process by showcasing a new design workflow.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Effects of Pedestrian Behavior, Time Pressure, and Repeated Exposure on Crossing Decisions in Front of Automated Vehicles Equipped with External Communication

Mark Colley (Institute of Media Informatics, Ulm University), Elvedin Bajrovic (Institute of Media Informatics, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University)

Tags: Full Paper

@inproceedings{2022ColleyEffectsAutomatedVehicles,

title = {Effects of Pedestrian Behavior, Time Pressure, and Repeated Exposure on Crossing Decisions in Front of Automated Vehicles Equipped with External Communication},

author = {Mark Colley (Institute of Media Informatics, Ulm University) and Elvedin Bajrovic (Institute of Media Informatics, Ulm University) and Enrico Rukzio (Institute of Media Informatics, Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, Website Lab

https://twitter.com/mi_uulm, Twitter Lab},

doi = {10.1145/3491102.3517571},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Automated vehicles are expected to substitute driver-pedestrian communication via LED strips or displays. This communication is expected to improve trust and the crossing process in general. However, numerous factors such as other pedestrians' behavior, perceived time pressure, or previous experience influence crossing decisions. Therefore, we report the results of a triply subdivided Virtual Reality study (N=18) evaluating these. Results show that external communication was perceived as hedonically pleasing, increased perceived safety and trust, and also that pedestrians' behavior affected participants' behavior. A timer did not alter crossing behavior, however, repeated exposure increased trust and reduced crossing times, showing a habituation effect. Our work helps better to integrate research on external communication in ecologically valid settings.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Elements of XR Prototyping: Characterizing the Role and Use of Prototypes in Augmented and Virtual Reality Design

Veronika Krauß (Universität Siegen), Michael Nebeling (University of Michigan), Florian Jasche (Universität Siegen), Alexander Boden (Hochschule Bonn-Rhein-Sieg, Fraunhofer FIT)

Tags: Full Paper

@inproceedings{2022KraussXRPrototyping,

title = {Elements of XR Prototyping: Characterizing the Role and Use of Prototypes in Augmented and Virtual Reality Design},

author = {Veronika Krauß (Universität Siegen) and Michael Nebeling (University of Michigan) and Florian Jasche (Universität Siegen) and Alexander Boden (Hochschule Bonn-Rhein-Sieg, Fraunhofer FIT)},

url = {www.verbraucherinformatik.de, Website Lab},

doi = {10.1145/3491102.3517714},

year = {2022},

date = {2022-04-30},

abstract = {Current research in augmented, virtual, and mixed reality (XR) reveals a lack of tool support for designing and, in particular, prototyping XR applications. While recent tools research is often motivated by studying the requirements of non-technical designers and end-user developers, the perspective of industry practitioners is less well understood. In an interview study with 17 practitioners from different industry sectors working on professional XR projects, we establish the design practices in industry, from early project stages to the final product. To better understand XR design challenges, we characterize the different methods and tools used for prototyping and describe the role and use of key prototypes in the different projects. We extract common elements of XR prototyping, elaborating on the tools and materials used for prototyping and establishing different views on the notion of fidelity. Finally, we highlight key issues for future XR tools research.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

FoolProofJoint: Reducing Assembly Errors of Laser Cut 3D Models by Means of Custom Joint Patterns

Keunwoo Park (Hasso Plattner Institute, Potsdam, Germany), Conrad Lempert (Hasso Plattner Institute, Potsdam, Germany), Muhammad Abdullah (Hasso Plattner Institute, Potsdam, Germany), Shohei Katakura (Hasso Plattner Institute, Potsdam, Germany), Jotaro Shigeyama (Hasso Plattner Institute, Potsdam, Germany), Thijs Roumen (Hasso Plattner Institute, Potsdam, Germany), Patrick Baudisch (Hasso Plattner Institute, Potsdam, Germany)

Tags: Full Paper

@inproceedings{2022ParkFoolProofJoint,

title = {FoolProofJoint: Reducing Assembly Errors of Laser Cut 3D Models by Means of Custom Joint Patterns},

author = {Keunwoo Park (Hasso Plattner Institute, Potsdam, Germany) and Conrad Lempert (Hasso Plattner Institute, Potsdam, Germany) and Muhammad Abdullah (Hasso Plattner Institute, Potsdam, Germany) and Shohei Katakura (Hasso Plattner Institute, Potsdam, Germany) and Jotaro Shigeyama (Hasso Plattner Institute, Potsdam, Germany) and Thijs Roumen (Hasso Plattner Institute, Potsdam, Germany) and Patrick Baudisch (Hasso Plattner Institute, Potsdam, Germany)},

url = {https://hpi.de/baudisch/home.html, Website HCI Lab Potsdam},

doi = {10.1145/3491102.3501919},

year = {2022},

date = {2022-04-30},

abstract = {We present FoolProofJoint, a software tool that simplifies the assembly of laser-cut 3D models and reduces the risk of erroneous assembly. FoolProofJoint achieves this by modifying finger joint patterns. Wherever possible, FoolProofJoint makes similar looking pieces fully interchangeable, thereby speeding up the user’s visual search for a matching piece. When that is not possible, FoolProofJoint gives finger joints a unique pattern of individual fingers widths so as to fit only with the correct joint on the correct piece, thereby preventing erroneous assembly. In our benchmark set of 217 laser-cut 3D models downloaded from kyub.com, FoolProofJoint made groups of similar looking pieces fully interchangeable for 65% of all groups of similar pieces; FoolProofJoint fully prevented assembly mistakes for 97% of all models.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

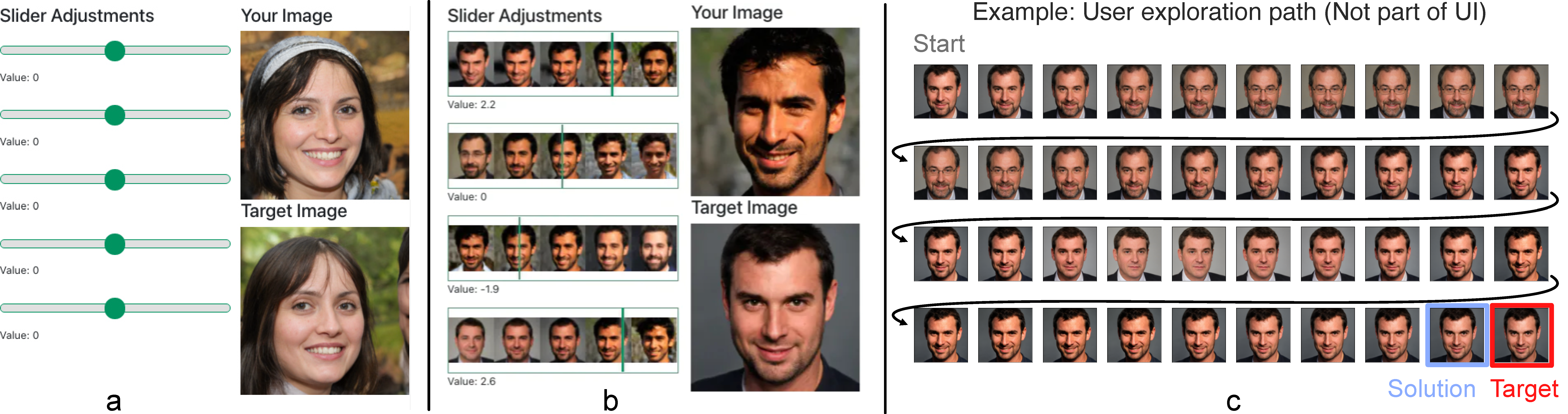

GANSlider: How Users Control Generative Models for Images using Multiple Sliders with and without Feedforward Information

Hai Dang (University of Bayreuth), Lukas Mecke (LMU Munich, UniBW Munich), Daniel Buschek (University of Bayreuth)

Tags: Full Paper

@inproceedings{2022DangGANSlider,

title = {GANSlider: How Users Control Generative Models for Images using Multiple Sliders with and without Feedforward Information},

author = {Hai Dang (University of Bayreuth) and Lukas Mecke (LMU Munich, UniBW Munich) and Daniel Buschek (University of Bayreuth)},

url = {https://www.hciai.uni-bayreuth.de/, Website Lab},

doi = {10.1145/3491102.3502141},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {We investigate how multiple sliders with and without feedforward visualizations influence users' control of generative models. In an online study (N=138), we collected a dataset of people interacting with a generative adversarial network (StyleGAN2) in an image reconstruction task. We found that more control dimensions (sliders) significantly increase task difficulty and user actions. Visual feedforward partly mitigates this by enabling more goal-directed interaction. However, we found no evidence of faster or more accurate task performance. This indicates a tradeoff between feedforward detail and implied cognitive costs, such as attention. Moreover, we found that visualizations alone are not always sufficient for users to understand individual control dimensions. Our study quantifies fundamental UI design factors and resulting interaction behavior in this context, revealing opportunities for improvement in the UI design for interactive applications of generative models. We close by discussing design directions and further aspects.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Haptic Fidelity Framework: Defining the Factors of Realistic Haptic Feedback for Virtual Reality

Thomas Muender (University of Bremen), Michael Bonfert (University of Bremen), Anke V. Reinschluessel (University of Bremen), Rainer Malaka (University of Bremen), Tanja Döring (University of Bremen)

Tags: Full Paper

@inproceedings{2022MuenderHapticFidelity,

title = {Haptic Fidelity Framework: Defining the Factors of Realistic Haptic Feedback for Virtual Reality},

author = {Thomas Muender (University of Bremen) and Michael Bonfert (University of Bremen) and Anke V. Reinschluessel (University of Bremen) and Rainer Malaka (University of Bremen) and Tanja Döring (University of Bremen)},

url = {http://dm.tzi.de/, Website Lab

https://twitter.com/dmlabbremen, Twitter Lab

https://youtu.be/chEEus4K2Gs, YouTube - Teaser Video},

doi = {10.1145/3491102.3501953},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Providing haptic feedback in virtual reality to make the experience more realistic has become a strong focus of research in recent years. The resulting haptic feedback systems differ greatly in their technologies, feedback possibilities, and overall realism making it challenging to compare different systems. We propose the Haptic Fidelity Framework providing the means to describe, understand and compare haptic feedback systems. The framework locates a system in the spectrum of providing realistic or abstract haptic feedback using the Haptic Fidelity dimension. It comprises 14 criteria that either describe foundational or limiting factors. A second Versatility dimension captures the current trade-off between highly realistic but application-specific and more abstract but widely applicable feedback. To validate the framework, we compared the Haptic Fidelity score to the perceived feedback realism of evaluations from 38 papers and found a strong correlation suggesting the framework accurately describes the realism of haptic feedback.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

It Is Not Always Discovery Time: Four Pragmatic Approaches in Designing AI Systems

Maximiliane Windl (LMU Munich), Sebastian S. Feger (LMU Munich), Lara Zijlstra (Utrecht University), Albrecht Schmidt (LMU Munich), Paweł W. Woźniak (Chalmers University of Technology)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022WindlDiscovery,

title = {It Is Not Always Discovery Time: Four Pragmatic Approaches in Designing AI Systems},

author = {Maximiliane Windl (LMU Munich) and Sebastian S. Feger (LMU Munich) and Lara Zijlstra (Utrecht University) and Albrecht Schmidt (LMU Munich) and Paweł W. Woźniak (Chalmers University of Technology)},

url = {https://maximiliane-windl.com/, Website Author

https://www.facebook.com/maximiliane.pauline/, Facebook Author

https://www.linkedin.com/in/maximiliane-windl-8889b6195/, LinkedIn Author

https://www.youtube.com/watch?v=BlU4IuoCn-s, YouTube - Teaser Video},

doi = {10.1145/3491102.3501943},

year = {2022},

date = {2022-04-30},

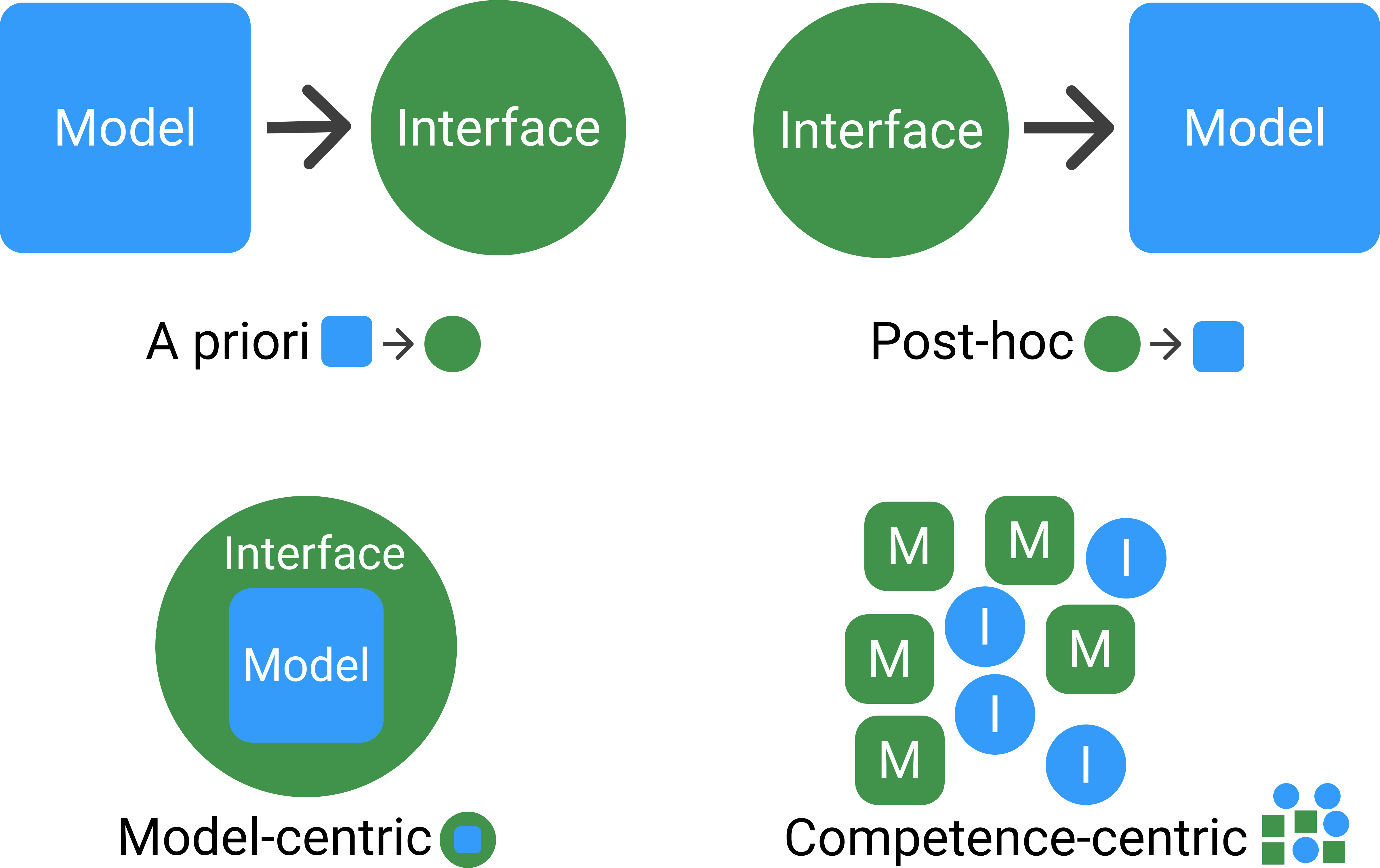

abstract = {While systems that use Artificial Intelligence (AI) are increasingly becoming part of everyday technology use, we do not fully understand how AI changes design processes. A structured understanding of how designers work with AI is needed to improve the design process and educate future designers. To that end, we conducted interviews with designers who participated in projects which used AI. While past work focused on AI systems created by experienced designers, we focus on the perspectives of a diverse sample of interaction designers. Our results show that the design process of an interactive system is affected when AI is integrated and that design teams adapt their processes to accommodate AI. Based on our data, we contribute four approaches adopted by interaction designers working with AI: a priori, post-hoc, model-centric, and competence-centric. Our work contributes a pragmatic account of how design processes for AI systems are enacted.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Keep it Short: A Comparison of Voice Assistants' Response Behavior

Gabriel Haas (Ulm University), Michael Rietzler (Institute of Media Informatics, Ulm University), Matt Jones (Swansea University), Enrico Rukzio (Ulm University)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022HaasKeepItShort,

title = {Keep it Short: A Comparison of Voice Assistants' Response Behavior},

author = {Gabriel Haas (Ulm University) and Michael Rietzler (Institute of Media Informatics, Ulm University) and Matt Jones (Swansea University) and Enrico Rukzio (Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, Website Lab},

doi = {10.1145/3491102.3517684},

year = {2022},

date = {2022-04-30},

abstract = {Voice assistants (VAs) are present in homes, smartphones, and cars. They allow users to perform tasks without graphical or tactile user interfaces, as they are designed for natural language interaction. However, we found that currently, VAs are emulating human behavior by responding in complete sentences, limiting the design options, and preventing VAs from meeting their full potential as a utilitarian tool. We implemented a VA that handles requests in three response styles: two differing short keyword-based response styles and a full-sentence baseline. In a user study, 72 participants interacted with our VA by issuing eight requests. Results show that the short responses were perceived similarly useful and likable while being perceived as more efficient, especially for commands, and sometimes better to comprehend than the baseline. To achieve widespread adoption, we argue that VAs should be customizable and adapt to users instead of always responding in full sentences.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

MoodWorlds: A Virtual Environment for Autonomous Emotional Expression

Nadine Wagener (University of Bremen), Jasmin Niess (University of St. Gallen), Yvonne Rogers (UCL Interaction Centre), Johannes Schöning (University of St. Gallen)

Abstract | Tags: Full Paper | Links:

@inproceedings{Wagner2022Mood,

title = {MoodWorlds: A Virtual Environment for Autonomous Emotional Expression},

author = {Nadine Wagener (University of Bremen) and Jasmin Niess (University of St. Gallen) and Yvonne Rogers (UCL Interaction Centre) and Johannes Schöning (University of St. Gallen)},

url = {https://hci.uni-bremen.de/

https://twitter.com/HCIBremen},

doi = {10.1145/3491102.350186},

year = {2022},

date = {2022-04-29},

publisher = {ACM},

abstract = {Immersive interactive technologies such as virtual reality (VR) have the potential to foster well-being. While VR applications have been successfully used to evoke positive emotions through the presetting of light, colour and scenery, the experiential potential of allowing users to independently create a virtual environment (VE) has not yet been sufficiently addressed. To that end, we explore how the autonomous design of a VE can affect emotional engagement and well-being. We present Mood Worlds -- a VR application allowing users to visualise their emotions by self-creating a VE. In an exploratory evaluation (N=16), we found that Mood Worlds is an effective tool supporting emotional engagement. Additionally, we found that an autonomous creation process in VR increases positive emotions and well-being. Our work shows that VR can be an effective tool to visualise emotions, thereby increasing positive affect. We discuss opportunities and design requirements for VR as positive technology.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Next Steps in Epidermal Computing: Opportunities and Challenges for Soft On-Skin Devices

Aditya Shekhar Nittala (Saarland University), Jürgen Steimle (Saarland University)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022NittalaStepsOnSkinDevices,

title = {Next Steps in Epidermal Computing: Opportunities and Challenges for Soft On-Skin Devices},

author = {Aditya Shekhar Nittala (Saarland University) and Jürgen Steimle (Saarland University)},

url = {https://hci.cs.uni-saarland.de/, Website Lab},

doi = {10.1145/3491102.3517668},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

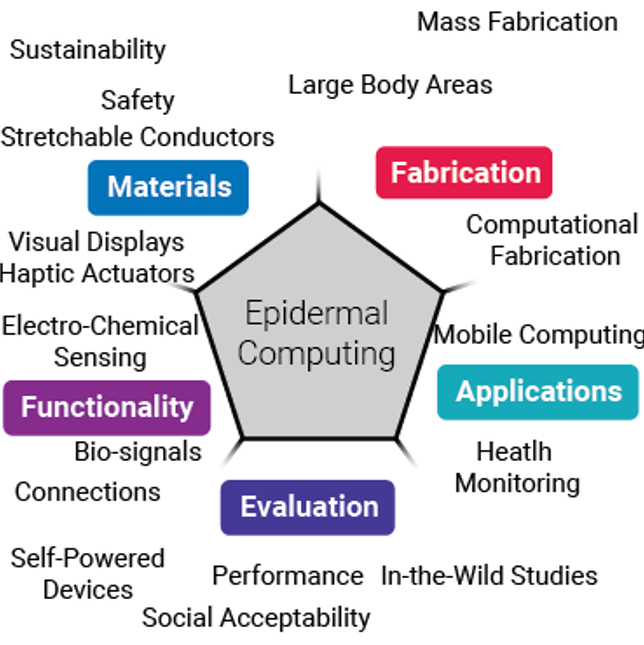

publisher = {ACM},

abstract = {Skin is a promising interaction medium and has been widely explored for mobile, and expressive interaction. Recent research in HCI has seen the development of Epidermal Computing Devices: ultra-thin and non-invasive devices which reside on the user's skin, offering intimate integration with the curved surfaces of the body, while having physical and mechanical properties that are akin to skin, expanding the horizon of on-body interaction. However, with rapid technological advancements in multiple disciplines, we see a need to synthesize the main open research questions and opportunities for the HCI community to advance future research in this area. By systematically analyzing Epidermal Devices contributed in the HCI community, physical sciences research and from our experiences in designing and building Epidermal Devices, we identify opportunities and challenges for advancing research across five themes. This multi-disciplinary synthesis enables multiple research communities to facilitate progression towards more coordinated endeavors for advancing Epidermal Computing.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Pandemic Displays: Considering Hygiene on Public Touchscreens in the Post-Pandemic Era

Ville Mäkelä (University of Waterloo), Jonas Winter (LMU Munich), Jasmin Schwab (Bundeswehr University Munich), Michael Koch (Bundeswehr University Munich), Florian Alt (Bundeswehr University Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022MaekelaePandemicDisplays,

title = {Pandemic Displays: Considering Hygiene on Public Touchscreens in the Post-Pandemic Era},

author = {Ville Mäkelä (University of Waterloo) and Jonas Winter (LMU Munich) and Jasmin Schwab (Bundeswehr University Munich) and Michael Koch (Bundeswehr University Munich) and Florian Alt (Bundeswehr University Munich)},

url = {https://www.youtube.com/watch?v=YXUghIhwD5o, YouTube - Video Figure},

doi = {10.1145/3491102.3501937},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {The COVID-19 pandemic created unprecedented questions for touch-based public displays regarding hygiene, risks, and general awareness. We study how people perceive and consider hygiene on shared touchscreens, and how touchscreens could be improved through hygiene-related functions. First, we report the results from an online survey (n = 286). Second, we present a hygiene concept for touchscreens that visualizes prior touches and provides information about the cleaning of the display and number of prior users. Third, we report the feedback for our hygiene concept from 77 participants. We find that there is demand for improved awareness of public displays' hygiene status, especially among those with stronger concerns about COVID-19. A particularly desired detail is when the display has been cleaned. For visualizing prior touches, fingerprints worked best. We present further considerations for designing for hygiene on public displays.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Pet-Robot or Appliance? Care Home Residents with Dementia Respond to a Zoomorphic Floor Washing Robot

Emanuela Marchetti (SDU Syddansk Universitet, Odense, Denmark), Sophie Grimme (OFFIS Oldenburg, Bauhaus-Universität Weimar), Eva Hornecker (Bauhaus-Universität Weimar), Avgi Kollakidou (Mærsk Mc-Kinney Møller Institute, University of Southern Denmark, Odense, Denmark), Philipp Graf (TU Chemnitz)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022MarchettiPetRobot,

title = {Pet-Robot or Appliance? Care Home Residents with Dementia Respond to a Zoomorphic Floor Washing Robot},

author = {Emanuela Marchetti (SDU Syddansk Universitet, Odense, Denmark) and Sophie Grimme (OFFIS Oldenburg, Bauhaus-Universität Weimar) and Eva Hornecker (Bauhaus-Universität Weimar) and Avgi Kollakidou (Mærsk Mc-Kinney Møller Institute, University of Southern Denmark, Odense, Denmark) and Philipp Graf (TU Chemnitz)},

url = {https://www.uni-weimar.de/en/media/chairs/computer-science-department/human-computer-interaction/, Website Lab},

doi = {10.1145/3491102.3517463},

year = {2022},

date = {2022-04-30},

abstract = {Any active entity that shares space with people is interpreted as a social actor. Based on this notion, we explore how robots that integrate functional utility with a social role and character can integrate meaningfully into daily practice. Informed by interviews and observations, we designed a zoomorphic floor cleaning robot which playfully interacts with care home residents affected by dementia. A field study shows that playful interaction can facilitate the introduction of utilitarian robots in care homes, being nonthreatening and easy to make sense of. Residents previously reacted with distress to a Roomba robot, but were now amused by and played with our cartoonish cat robot or simply tolerated its presence. They showed awareness of the machine-nature of the robot, even while engaging in pretend-play. A playful approach to the design of functional robots can thus explicitly conceptualize such robots as social actors in their context of use.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Print-A-Sketch: A Handheld Printer for Physical Sketching of Circuits and Sensors on Everyday Surfaces

Narjes Pourjafarian (Saarland University, Saarland Informatics Campus, Saarbrücken, Germany), Marion Koelle (Saarland University, Saarland Informatics Campus, Saarbrücken, Germany), Fjolla Mjaku (Saarland University, Saarland Informatics Campus, Saarbrücken, Germany), Paul Strohmeier (Max Planck Institute for Informatics, Saarland University, Saarland Informatics Campus, Saarbrücken, Germany), Jürgen Steimle

Abstract | Tags: Full Paper | Links:

@inproceedings{2022PourjafarianSurfacesPrinter,

title = {Print-A-Sketch: A Handheld Printer for Physical Sketching of Circuits and Sensors on Everyday Surfaces},

author = {Narjes Pourjafarian (Saarland University, Saarland Informatics Campus, Saarbrücken, Germany) and Marion Koelle (Saarland University, Saarland Informatics Campus, Saarbrücken, Germany) and Fjolla Mjaku (Saarland University, Saarland Informatics Campus, Saarbrücken, Germany) and Paul Strohmeier (Max Planck Institute for Informatics, Saarland University, Saarland Informatics Campus, Saarbrücken, Germany) and Jürgen Steimle},

url = {https://hci.cs.uni-saarland.de/, Website Lab

https://youtu.be/GbC9pKPzhF4, YouTube - Teaser Video

https://youtu.be/nnvOC-OtINw, YouTube - Video Figure},

doi = {10.1145/3491102.3502074},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {We present Print-A-Sketch, an open-source handheld printer prototype for sketching circuits and sensors. Print-A-Sketch combines desirable properties from free-hand sketching and functional electronic printing. Manual human control of large strokes is augmented with computer control of fine detail. Shared control of Print-A-Sketch supports sketching interactive interfaces on everyday objects -- including many objects with materials or sizes which otherwise are difficult to print on. We present an overview of challenges involved in such a system and show how these can be addressed using context-aware, dynamic printing. Continuous sensing ensures quality prints by adjusting inking-rate to hand movement and material properties. Continuous sensing also enables the print to adapt to previously printed traces to support incremental and iterative sketching. Results show good conductivity on many materials and high spatial precision, supporting on-the-fly creation of functional interfaces.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Proxemics for Human-Agent Interaction in Augmented Reality

Ann Huang (LMU Munich), Pascal Knierim (LMU Munich), Francesco Chiossi (LMU Munich), Lewis Chuang (Chair for Humans and Technology, Chemnitz University of Technology), Robin Welsch (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022HuangProxemics,

title = {Proxemics for Human-Agent Interaction in Augmented Reality},

author = {Ann Huang (LMU Munich) and Pascal Knierim (LMU Munich) and Francesco Chiossi (LMU Munich) and Lewis Chuang (Chair for Humans and Technology, Chemnitz University of Technology) and Robin Welsch (LMU Munich)},

url = {https://www.um.informatik.uni-muenchen.de/index.html, Website Lab

https://twitter.com/annnhuang, Twitter Author

https://youtu.be/1ULnYIqRqTE, YouTube - Teaser Video},

doi = {10.1145/3491102.3517593},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Augmented Reality (AR) embeds virtual content in physical spaces, including virtual agents that are known to exert a social presence on users. Existing design guidelines for AR rarely consider the social implications of an agent's personal space (PS) and that it can impact user behavior and arousal. We report an experiment (N=54) where participants interacted with agents in an AR art gallery scenario. When participants approached six virtual agents (i.e., two males, two females, a humanoid robot, and a pillar) to ask for directions, we found that participants respected the agents' PS and modulated interpersonal distances according to the human-like agents' perceived gender. When participants were instructed to walk through the agents, we observed heightened skin-conductance levels that indicate physiological arousal. These results are discussed in terms of proxemic theory that result in design recommendations for implementing pervasive AR experiences with virtual agents.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Reducing Virtual Reality Sickness for Cyclists in VR Bicycle Simulators

Andrii Matviienko (Technical University of Darmstadt), Florian Müller (LMU Munich), Marcel Zickler (Technical University of Darmstadt), Lisa Gasche (Technical University of Darmstadt), Julia Abels (Technical University of Darmstadt), Till Steinert (Technical University of Darmstadt), Max Mühlhäuser (Technical University of Darmstadt)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022MatviienkoVRSickness,

title = {Reducing Virtual Reality Sickness for Cyclists in VR Bicycle Simulators},

author = {Andrii Matviienko (Technical University of Darmstadt) and Florian Müller (LMU Munich) and Marcel Zickler (Technical University of Darmstadt) and Lisa Gasche (Technical University of Darmstadt) and Julia Abels (Technical University of Darmstadt) and Till Steinert (Technical University of Darmstadt) and Max Mühlhäuser (Technical University of Darmstadt)},

url = {https://teamdarmstadt.de/, Website Lab

https://www.facebook.com/teamdarmstadt, Facebook Lab},

doi = {10.1145/3491102.3501959},

year = {2022},

date = {2022-04-30},

abstract = {Virtual Reality (VR) bicycle simulations aim to recreate the feeling of riding a bicycle and are commonly used in many application areas. However, current solutions still create mismatches between the visuals and physical movement, which causes VR sickness and diminishes the cycling experience. To reduce VR sickness in bicycle simulators, we conducted two controlled lab experiments addressing two main causes of VR sickness: (1) steering methods and (2) cycling trajectory. In the first experiment (N = 18) we compared handlebar, HMD, and upper-body steering methods. In the second experiment (N = 24) we explored three types of movement in VR (1D, 2D, and 3D trajectories) and three countermeasures (airflow, vibration, and dynamic Field-of-View) to reduce VR sickness. We found that handlebar steering leads to the lowest VR sickness without decreasing cycling performance and airflow appears to be the most promising method to reduce VR sickness for all three types of trajectories.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

ReLive: Bridging In-Situ and Ex-Situ Visual Analytics for Analyzing Mixed Reality User Studies

Sebastian Hubenschmid (University of Konstanz), Jonathan Wieland (University of Konstanz), Daniel Immanuel Fink (University of Konstanz), Andrea Batch (University of Maryland), Johannes Zagermann (University of Konstanz), Niklas Elmqvist (University of Maryland), Harald Reiterer (University of Konstanz)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022HubenschmidReLive,

title = {ReLive: Bridging In-Situ and Ex-Situ Visual Analytics for Analyzing Mixed Reality User Studies},

author = {Sebastian Hubenschmid (University of Konstanz) and Jonathan Wieland (University of Konstanz) and Daniel Immanuel Fink (University of Konstanz) and Andrea Batch (University of Maryland) and Johannes Zagermann (University of Konstanz) and Niklas Elmqvist (University of Maryland) and Harald Reiterer (University of Konstanz)},

url = {https://hci.uni-konstanz.de/, Website Lab

https://twitter.com/HCIGroupKN, Twitter Lab

https://youtu.be/I2goaoFOSjY, YouTube - Teaser Video

https://youtu.be/BaNZ02QkZ_k, YouTube - Video Figure

https://youtu.be/As3i9rzliF4, YouTube - Talk},

doi = {10.1145/3491102.3517550},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {The nascent field of mixed reality is seeing an ever-increasing need for user studies and field evaluation, which are particularly challenging given device heterogeneity, diversity of use, and mobile deployment. Immersive analytics tools have recently emerged to support such analysis in situ, yet the complexity of the data also warrants an ex-situ analysis using more traditional non-immersive visual analytics setups. To bridge the gap between both approaches, we introduce ReLive: a mixed-immersion visual analytics framework for exploring and analyzing mixed reality user studies. ReLive combines an in-situ virtual reality view with a complementary ex-situ desktop view. While the virtual reality view allows users to relive interactive spatial recordings replicating the original study, the synchronized desktop view provides a familiar interface for analyzing aggregated data. We validated our concepts in a two-step evaluation consisting of a design walkthrough and an empirical expert user study.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

ShapeFindAR: Exploring In-Situ Spatial Search for Physical Artifact Retrieval using Mixed Reality

Evgeny Stemasov (Institute of Media Informatics, Ulm University), Tobias Wagner (Institute of Media Informatics, Ulm University), Jan Gugenheimer (Télécom Paris - LTCI, Institut Polytechnique de Paris), Enrico Rukzio (Institute of Media Informatics, Ulm University)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022StemasovShapeFindAR,

title = {ShapeFindAR: Exploring In-Situ Spatial Search for Physical Artifact Retrieval using Mixed Reality},

author = {Evgeny Stemasov (Institute of Media Informatics, Ulm University) and Tobias Wagner (Institute of Media Informatics, Ulm University) and Jan Gugenheimer (Télécom Paris - LTCI, Institut Polytechnique de Paris) and Enrico Rukzio (Institute of Media Informatics, Ulm University)},

url = {https://www.uni-ulm.de/in/mi/hci/, Website Lab

https://twitter.com/mi_uulm, Twitter Lab

https://youtu.be/mVcBzLaWuPs, YouTube - Teaser Video

https://youtu.be/rc2JNFkAHx0, YouTube - Video Figure},

doi = {10.1145/3491102.3517682},

year = {2022},

date = {2022-04-30},

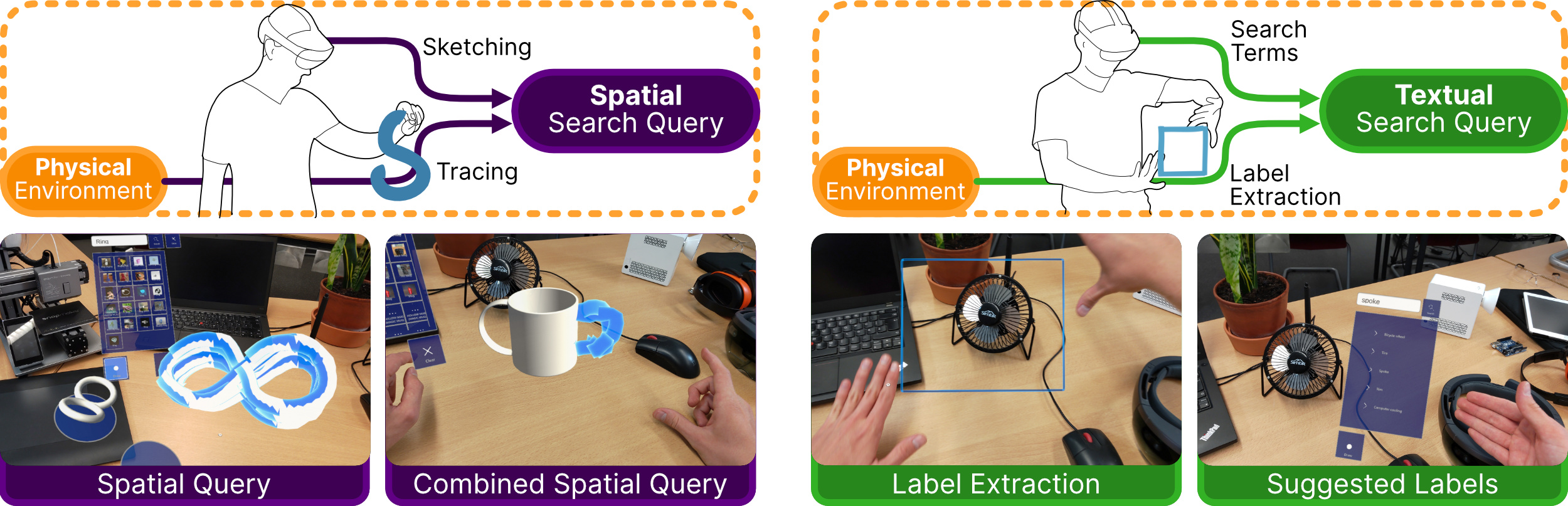

abstract = {Personal fabrication is made more accessible through repositories like Thingiverse, as they replace modeling with retrieval. However, they require users to translate spatial requirements to keywords, which paints an incomplete picture of physical artifacts: proportions or morphology are non-trivially encoded through text only. We explore a vision of in-situ spatial search for (future) physical artifacts, and present ShapeFindAR, a mixed-reality tool to search for 3D-models using in-situ sketches blended with textual queries. With ShapeFindAR, users search for geometry, and not necessarily precise labels, while coupling the search process to the physical environment (e.g., by sketching in-situ, extracting search terms from objects present, or tracing them). We developed ShapeFindAR for HoloLens 2, connected to a database of 3D-printable artifacts. We specify in-situ spatial search, describe its advantages, and present walkthroughs using ShapeFindAR, which highlight novel ways for users to articulate their wishes, without requiring complex modeling tools or profound domain knowledge.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Shaping Textile Sliders: An Evaluation of Form Factors and Tick Marks for Textile Sliders

Oliver Nowak (RWTH Aachen University), René Schäfer (RWTH Aachen University), Anke Brocker (RWTH Aachen University), Philipp Wacker (RWTH Aachen University), Jan Borchers (RWTH Aachen University)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022NowakTextileSliders,

title = {Shaping Textile Sliders: An Evaluation of Form Factors and Tick Marks for Textile Sliders},

author = {Oliver Nowak (RWTH Aachen University) and René Schäfer (RWTH Aachen University) and Anke Brocker (RWTH Aachen University) and Philipp Wacker (RWTH Aachen University) and Jan Borchers (RWTH Aachen University)},

url = {https://hci.rwth-aachen.de, Website Lab

https://www.youtube.com/watch?v=2XikxwFNWhU, YouTube - Teaser Video},

doi = {10.1145/3491102.3517473},

year = {2022},

date = {2022-04-30},

abstract = {Textile interfaces enable designers to integrate unobtrusive media and smart home controls into furniture such as sofas. While the technical aspects of such controllers have been the subject of numerous research projects, the physical form factor of these controls has received little attention so far. This work investigates how general design properties, such as overall slider shape, raised vs. recessed sliders, and number and layout of tick marks, affect users' preferences and performance. Our first user study identified a preference for certain design combinations, such as recessed, closed-shaped sliders. Our second user study included performance measurements on variations of the preferred designs from study 1, and took a closer look at tick marks. Tick marks supported orientation better than slider shape. Sliders with at least three tick marks were preferred, and performed well. Non-uniform, equally distributed tick marks reduced the movements users needed to orient themselves on the slider.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

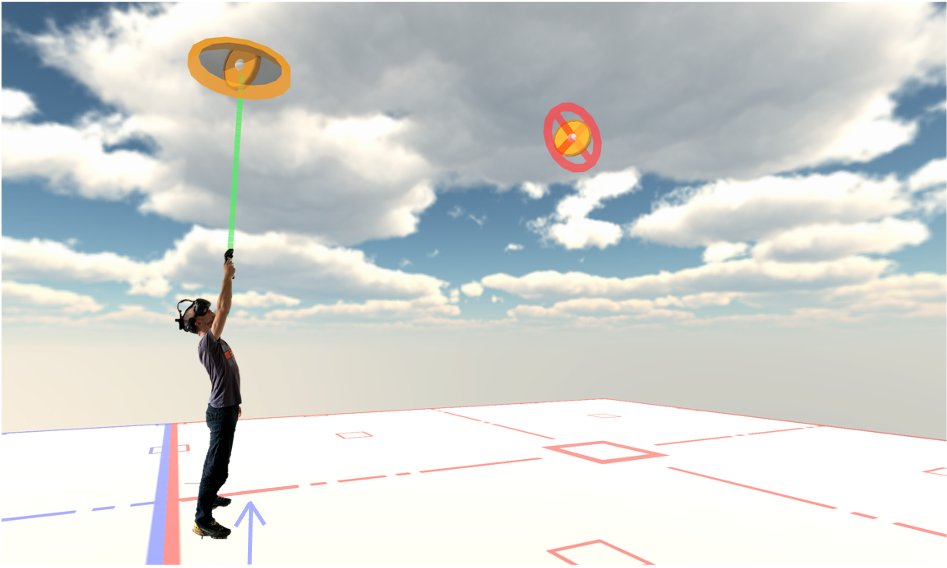

SkyPort: Investigating 3D Teleportation Methods in Virtual Environments

Andrii Matviienko (Technical University of Darmstadt), Florian Müller (LMU Munich), Martin Schmitz (Technical University of Darmstadt), Marco Fendrich (Technical University of Darmstadt), Max Mühlhäuser (Technical University of Darmstadt)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{2022MatviienkoSkyPort,

title = {SkyPort: Investigating 3D Teleportation Methods in Virtual Environments},

author = {Andrii Matviienko (Technical University of Darmstadt) and Florian Müller (LMU Munich) and Martin Schmitz (Technical University of Darmstadt) and Marco Fendrich (Technical University of Darmstadt) and Max Mühlhäuser (Technical University of Darmstadt)},

url = {https://teamdarmstadt.de/, Website Lab

https://www.facebook.com/teamdarmstadt, Facebook Lab},

doi = {10.1145/3491102.3501983},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Teleportation has become the de facto standard of locomotion in Virtual Reality (VR) environments. However, teleportation with parabolic and linear target aiming methods is restricted to horizontal 2D planes and it is unknown how they transfer to the 3D space. In this paper, we propose six 3D teleportation methods in virtual environments based on the combination of two existing aiming methods (linear and parabolic) and three types of transitioning to a target (instant, interpolated and continuous). To investigate the performance of the proposed teleportation methods, we conducted a controlled lab experiment (N = 24) with a mid-air coin collection task to assess accuracy, efficiency and VR sickness. We discovered that the linear aiming method leads to faster and more accurate target selection. Moreover, a combination of linear aiming and instant transitioning leads to the highest efficiency and accuracy without increasing VR sickness.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

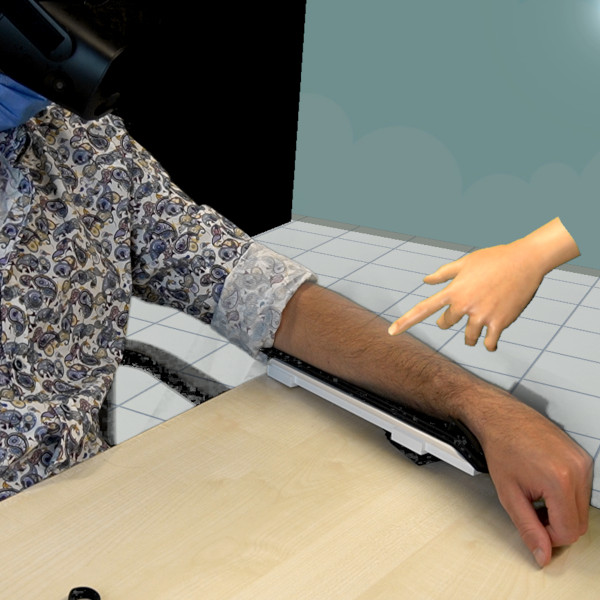

Smooth as Steel Wool: Effects of Visual Stimuli on the Haptic Perception of Roughness in Virtual Reality

Sebastian Günther (TU Darmstadt), Julian Rasch (LMU München), Dominik Schön (TU Darmstadt), Florian Müller (LMU München), Martin Schmitz (TU Darmstadt), Jan Riemann (TU Darmstadt), Andrii Matviienko (TU Darmstadt), Max Mühlhäuser (LMU München)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022GuentherSmooth,

title = {Smooth as Steel Wool: Effects of Visual Stimuli on the Haptic Perception of Roughness in Virtual Reality},

author = {Sebastian Günther (TU Darmstadt) and Julian Rasch (LMU München) and Dominik Schön (TU Darmstadt) and Florian Müller (LMU München) and Martin Schmitz (TU Darmstadt) and Jan Riemann (TU Darmstadt) and Andrii Matviienko (TU Darmstadt) and Max Mühlhäuser (LMU München)},

url = {https://sebastian-guenther.com/, Website Author

https://www.teamdarmstadt.de, Website - Telecooperation Lab Darmstadt

https://www.youtube.com/watch?v=glEOP48qVCE, YouTube - Teaser Video

https://www.youtube.com/watch?v=9q6zZCJ9rLg, YouTube - Video Figure},

doi = {10.1145/3491102.3517454},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

publisher = {ACM},

abstract = {Haptic Feedback is essential for lifelike Virtual Reality (VR) experiences. To provide a wide range of matching sensations of being touched or stroked, current approaches typically need large numbers of different physical textures. However, even advanced devices can only accommodate a limited number of textures to remain wearable. Therefore, a better understanding is necessary of how expectations elicited by different visualizations affect haptic perception, to achieve a balance between physical constraints and great variety of matching physical textures.

In this work, we conducted an experiment (N=31) assessing how the perception of roughness is affected within VR. We designed a prototype for arm stroking and compared the effects of different visualizations on the perception of physical textures with distinct roughnesses. Additionally, we used the visualizations' real-world materials, no-haptics and vibrotactile feedback as baselines. As one result, we found that two levels of roughness can be sufficient to convey a realistic illusion.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

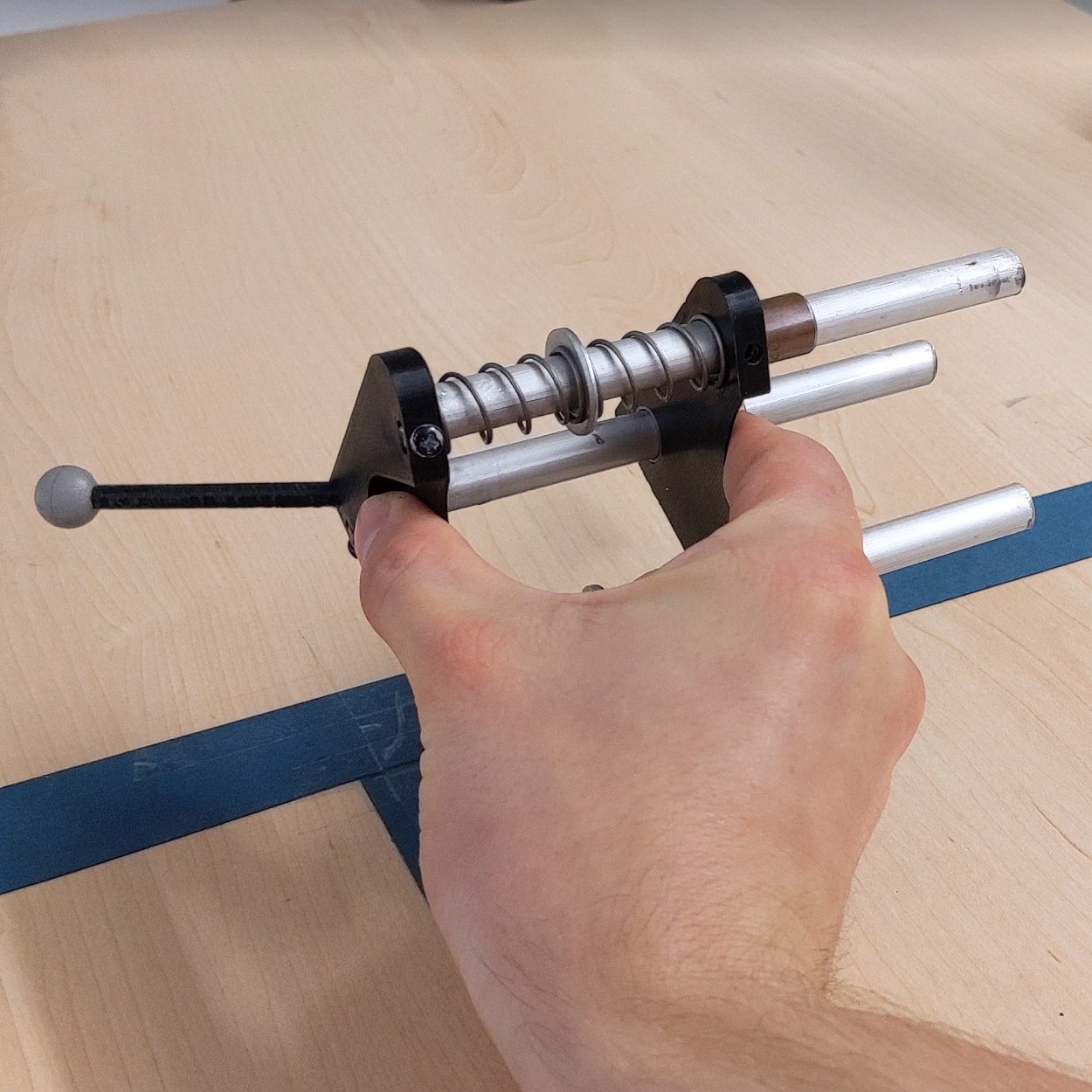

Squeezy-Feely: Investigating Lateral Thumb-Index Pinching as an Input Modality

Martin Schmitz (TU Darmstadt), Sebastian Günther (TU Darmstadt), Dominik Schön (TU Darmstadt), Florian Müller (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{2022SchmitzSqueezy,

title = {Squeezy-Feely: Investigating Lateral Thumb-Index Pinching as an Input Modality},

author = {Martin Schmitz (TU Darmstadt) and Sebastian Günther (TU Darmstadt) and Dominik Schön (TU Darmstadt) and Florian Müller (LMU Munich)},

url = {https://www.teamdarmstadt.de/, Website Lab

https://mschmitz.org/misc/SqueezyFeely-CHI22-teaser.mp4, Teaser Video},

doi = {10.1145/3491102.3501981},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {From zooming on smartphones and mid-air gestures to deformable user interfaces, thumb-index pinching grips are used in many interaction techniques. However, there is still a lack of systematic understanding of how the accuracy and efficiency of such grips are affected by various factors such as counterforce, grip span, and grip direction. Therefore, in this paper, we contribute an evaluation (N = 18) of thumb-index pinching performance in a visual targeting task using scales up to 75 items. As part of our findings, we conclude that the pinching interaction between the thumb and index finger is a promising modality also for one-dimensional input on higher scales. Furthermore, we discuss and outline implications for future user interfaces that benefit from pinching as an additional and complementary interaction modality.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

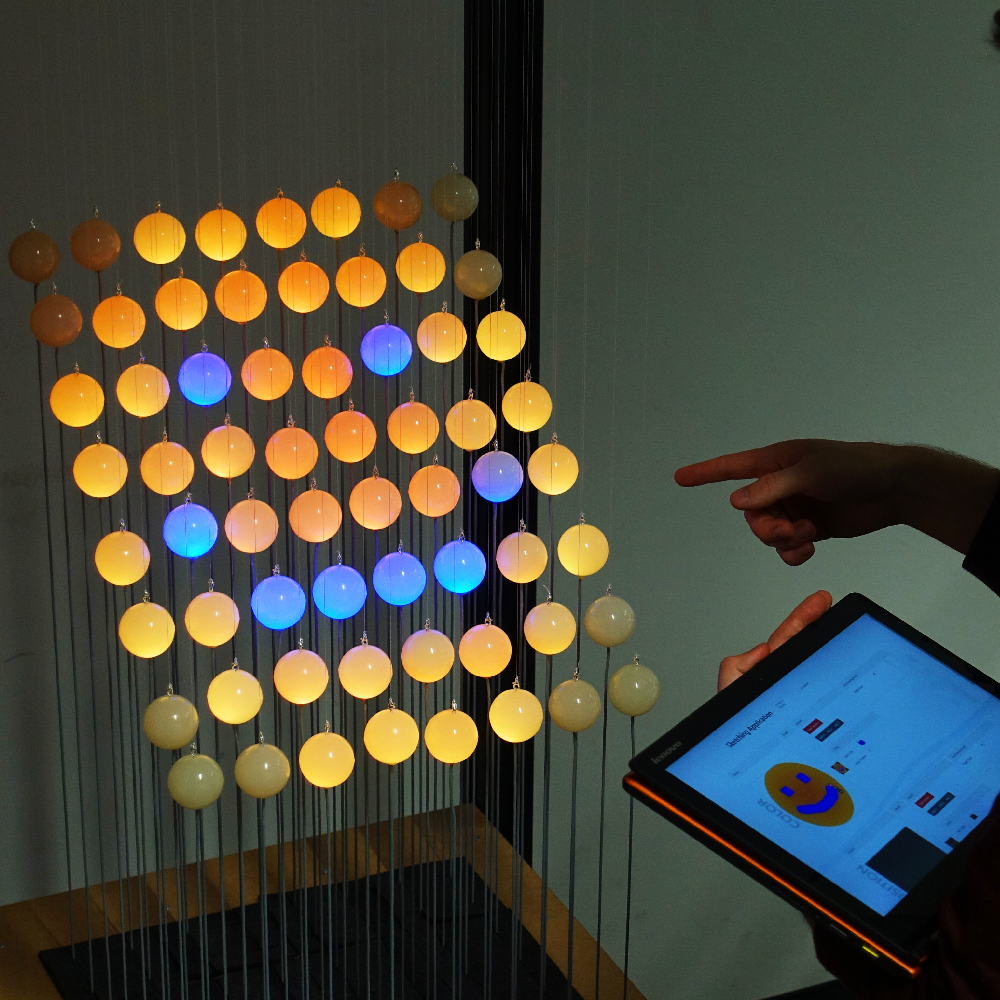

STRAIDE: A Research Platform for Shape-Changing Spatial Displays based on Actuated Strings

Severin Engert (Technische Universität Dresden), Konstantin Klamka (Technische Universität Dresden), Andreas Peetz (Technische Universität Dresden), Raimund Dachselt (Technische Universität Dresden)


@inproceedings{2022EngertStraide,

title = {STRAIDE: A Research Platform for Shape-Changing Spatial Displays based on Actuated Strings},

author = {Severin Engert (Technische Universität Dresden) and Konstantin Klamka (Technische Universität Dresden) and Andreas Peetz (Technische Universität Dresden) and Raimund Dachselt (Technische Universität Dresden)},

url = {https://imld.de, Website Interactive Media Lab Dresden

https://twitter.com/imldresden, Twitter

https://www.youtube.com/watch?v=AQRS6ko4Z8s, YouTube - Teaser Video

https://www.youtube.com/watch?v=t-R04SkK6vs, YouTube - Video Figure},

doi = {10.1145/3491102.3517462},

year = {2022},

date = {2022-04-30},

abstract = {We present STRAIDE, a string-actuated interactive display environment that allows to explore the promising potential of shape-changing interfaces for casual visualizations. At the core, we envision a platform that spatially levitates elements to create dynamic visual shapes in space. We conceptualize this type of tangible mid-air display and discuss its multifaceted design dimensions. Through a design exploration, we realize a physical research platform with adjustable parameters and modular components. For conveniently designing and implementing novel applications, we provide developer tools ranging from graphical emulators to in-situ augmented reality representations. To demonstrate STRAIDE's reconfigurability, we further introduce three representative physical setups as a basis for situated applications including ambient notifications, personal smart home controls, and entertainment. They serve as a technical validation, lay the foundations for a discussion with developers that provided valuable insights, and encourage ideas for future usage of this type of appealing interactive installation.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

There Is No First- or Third-Person View in Virtual Reality: Understanding the Perspective Continuum

Matthias Hoppe (LMU Munich), Andrea Baumann (LMU Munich), Patrick Tamunjoh (LMU Munich), Tonja-Katrin Machulla (TU Dortmund), Paweł W. Woźniak (Chalmers University of Technology), Albrecht Schmidt (LMU Munich), Robin Welsch (LMU Munich)


@inproceedings{2022HoppeFirstThirdPersonVR,

title = {There Is No First- or Third-Person View in Virtual Reality: Understanding the Perspective Continuum},

author = {Matthias Hoppe (LMU Munich) and Andrea Baumann (LMU Munich) and Patrick Tamunjoh (LMU Munich) and Tonja-Katrin Machulla (TU Dortmund) and Paweł W. Woźniak (Chalmers University of Technology) and Albrecht Schmidt (LMU Munich) and Robin Welsch (LMU Munich)},

url = {https://www.um.informatik.uni-muenchen.de/personen/mitarbeiter/hoppe/index.html, Website Author

https://www.researchgate.net/profile/Matthias-Hoppe-3, ResearchGate Author},

doi = {10.1145/3491102.3517447},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Modern games make creative use of First- and Third-person perspectives (FPP and TPP) to allow the player to explore virtual worlds. Traditionally, FPP and TPP perspectives are seen as distinct concepts. Yet, Virtual Reality (VR) allows for flexibility in choosing perspectives. We introduce the notion of a perspective continuum in VR, which is technically related to the camera position and conceptually to how users perceive their environment in VR. A perspective continuum enables adapting and manipulating the sense of agency and involvement in the virtual world. This flexibility of perspectives broadens the design space of VR experiences through deliberately manipulating perception. In a study, we explore users' attitudes, experiences and perceptions while controlling a virtual character from the two known perspectives. Statistical analysis of the empirical results shows the existence of a perspective continuum in VR. Our findings can be used to design experiences based on shifts of perception.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

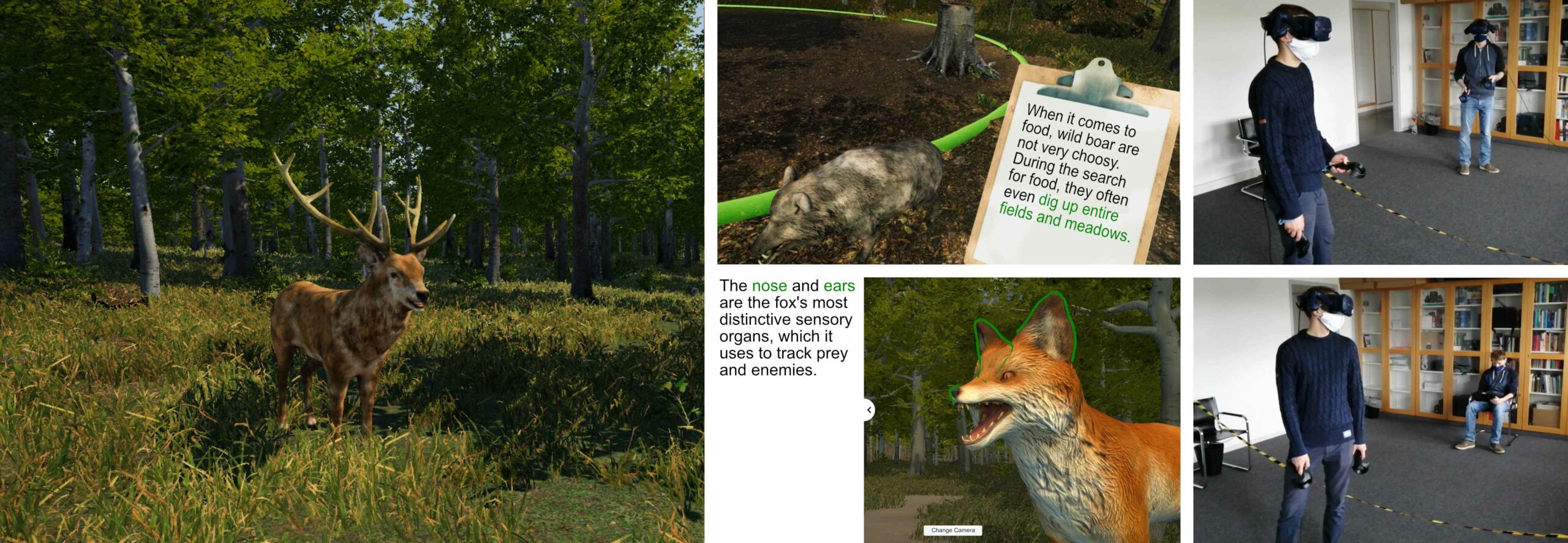

Towards Collaborative Learning in Virtual Reality: A Comparison of Co-Located Symmetric and Asymmetric Pair-Learning

Tobias Drey (Institute of Media Informatics, Ulm University), Patrick Albus (Institute of Psychology + Education, Ulm University), Simon der Kinderen (Institute of Media Informatics, Ulm University), Maximilian Milo (Institute of Media Informatics, Ulm University), Thilo Segschneider (Institute of Media Informatics, Ulm University), Linda Chanzab (Institute of Media Informatics, Ulm University), Michael Rietzler (Institute of Media Informatics, Ulm University), Tina Seufert (Institute of Psychology + Education, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University)


@inproceedings{2022DreyCollaborative,

title = {Towards Collaborative Learning in Virtual Reality: A Comparison of Co-Located Symmetric and Asymmetric Pair-Learning},

author = {Tobias Drey (Institute of Media Informatics, Ulm University) and Patrick Albus (Institute of Psychology + Education, Ulm University) and Simon der Kinderen (Institute of Media Informatics, Ulm University) and Maximilian Milo (Institute of Media Informatics, Ulm University) and Thilo Segschneider (Institute of Media Informatics, Ulm University) and Linda Chanzab (Institute of Media Informatics, Ulm University) and Michael Rietzler (Institute of Media Informatics, Ulm University) and Tina Seufert (Institute of Psychology + Education, Ulm University) and Enrico Rukzio (Institute of Media Informatics, Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/institute/staff/tobias-drey/, Website Author

https://twitter.com/TobiasDrey, Twitter Author

https://www.linkedin.com/in/tobias-drey-ulm/, LinkedIn Author

https://www.youtube.com/watch?v=6og_BFyI-gM, YouTube - Teaser Video

https://www.youtube.com/watch?v=_m32zfsjWcY, YouTube - Video Figure},

doi = {10.1145/3491102.3517641},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Pair-learning is beneficial for learning outcome, motivation, and social presence, and so is virtual reality (VR) by increasing immersion, engagement, motivation, and interest of students. Nevertheless, there is a research gap if the benefits of pair-learning and VR can be combined. Furthermore, it is not clear which influence it has if only one or both peers use VR. To investigate these aspects, we implemented two types of VR pair-learning systems, a symmetric system with both peers using VR and an asymmetric system with one using a tablet. In a user study (N=46), the symmetric system statistically significantly provided higher presence, immersion, player experience, and lower intrinsic cognitive load, which are all important for learning. Symmetric and asymmetric systems performed equally well regarding learning outcome, highlighting that both are valuable learning systems. We used these findings to define guidelines on how to design co-located VR pair-learning applications, including characteristics for symmetric and asymmetric systems.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

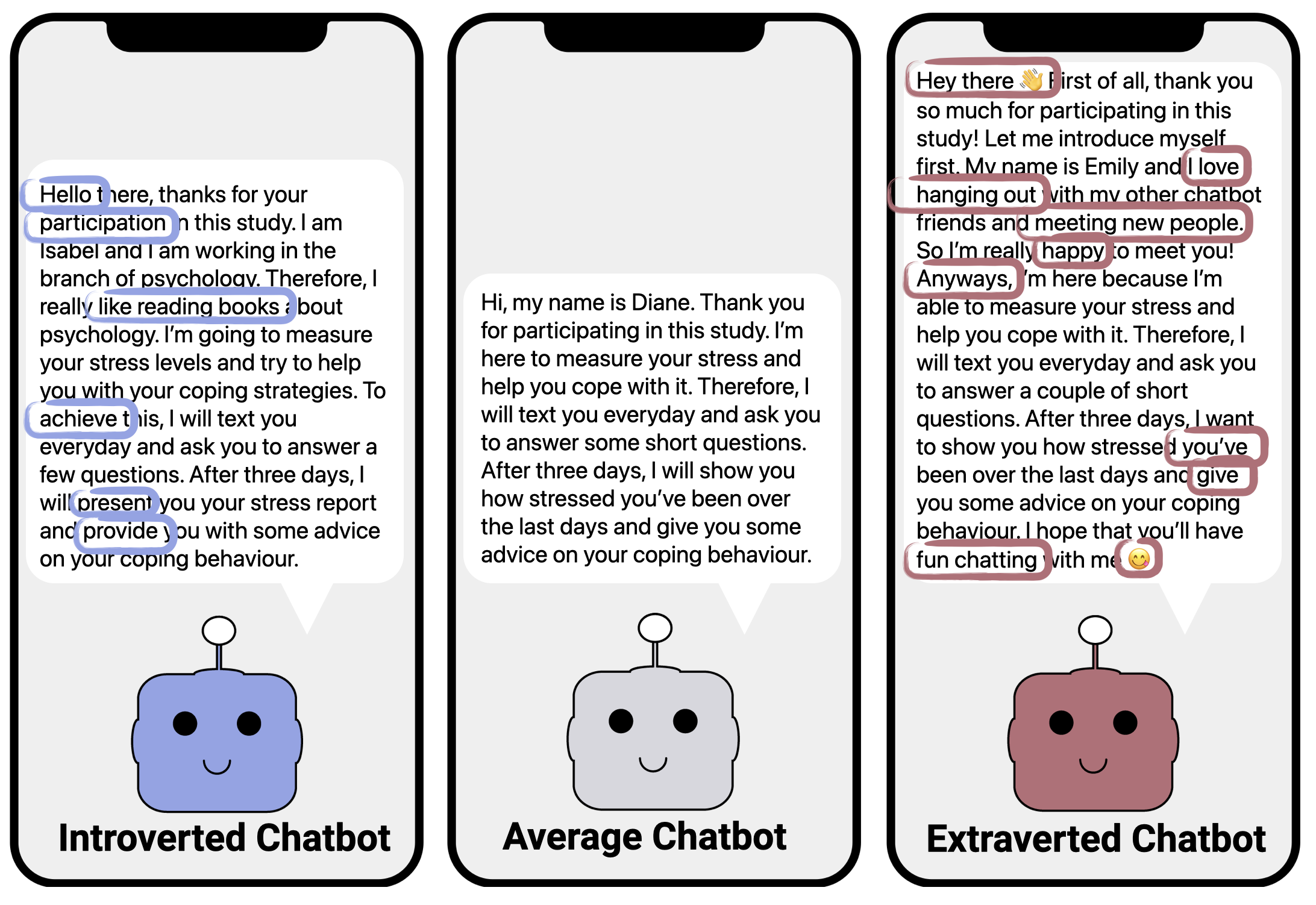

User Perceptions of Extraversion in Chatbots after Repeated Use

Sarah Theres Völkel (LMU Munich), Ramona Schoedel (LMU Munich), Lale Kaya (LMU Munich), Sven Mayer (LMU Munich)


@inproceedings{2022VoelkelChatbots,

title = {User Perceptions of Extraversion in Chatbots after Repeated Use},

author = {Sarah Theres Völkel (LMU Munich) and Ramona Schoedel (LMU Munich) and Lale Kaya (LMU Munich) and Sven Mayer (LMU Munich)},

url = {http://www.medien.ifi.lmu.de, Website Lab

https://youtu.be/brDKyt56_iU, YouTube - Teaser Video},

doi = {10.1145/3491102.3502058},

year = {2022},

date = {2022-04-30},

abstract = {Whilst imbuing robots and voice assistants with personality has been found to positively impact user experience, little is known about user perceptions of personality in purely text-based chatbots. In a within-subjects study, we asked N=34 participants to interact with three chatbots with different levels of Extraversion (extraverted, average, introverted), each over the course of four days. We systematically varied the chatbots' responses to manipulate Extraversion based on work in the psycholinguistics of human behaviour. Our results show that participants perceived the extraverted and average chatbots as such, whereas verbal cues transferred from human behaviour were insufficient to create an introverted chatbot. Whilst most participants preferred interacting with the extraverted chatbot, participants engaged significantly more with the introverted chatbot as indicated by the users' average number of written words. We discuss implications for researchers and practitioners on how to design chatbot personalities that can adapt to user preferences.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

VRception: Rapid Prototyping of Cross-Reality Systems in Virtual Reality

Uwe Gruenefeld (University of Duisburg-Essen), Jonas Auda (University of Duisburg-Essen), Florian Mathis (University of Glasgow, University of Edinburgh), Stefan Schneegass (University of Duisburg-Essen), Mohamed Khamis (University of Glasgow), Jan Gugenheimer (Institut Polytechnique de Paris, Télécom Paris, LTCI), Sven Mayer (LMU Munich)


@inproceedings{2022GruenefeldVRception,

title = {VRception: Rapid Prototyping of Cross-Reality Systems in Virtual Reality},

author = {Uwe Gruenefeld (University of Duisburg-Essen) and Jonas Auda (University of Duisburg-Essen) and Florian Mathis (University of Glasgow, University of Edinburgh) and Stefan Schneegass (University of Duisburg-Essen) and Mohamed Khamis (University of Glasgow) and Jan Gugenheimer (Institut Polytechnique de Paris, Télécom Paris, LTCI) and Sven Mayer (LMU Munich)},

url = {https://www.hci.wiwi.uni-due.de/, Website Lab

https://www.facebook.com/HCIEssen, Facebook Lab

https://1drv.ms/v/s!Avx0fb46Z9TNxK8sGhhe-VUt-QDIZA?e=Slw4GB, Teaser Video

https://1drv.ms/v/s!Avx0fb46Z9TNxK8klmFf1sh0avlT0A?e=AnLhgc, Video Figure},

doi = {10.1145/3491102.3501821},

year = {2022},

date = {2022-04-30},

urldate = {2022-04-30},

abstract = {Cross-reality systems empower users to transition along the reality-virtuality continuum or collaborate with others experiencing different manifestations of it. However, prototyping these systems is challenging, as it requires sophisticated technical skills, time, and often expensive hardware. We present VRception, a concept and toolkit for quick and easy prototyping of cross-reality systems. By simulating all levels of the reality-virtuality continuum entirely in Virtual Reality, our concept overcomes the asynchronicity of realities, eliminating technical obstacles. Our VRception toolkit leverages this concept to allow rapid prototyping of cross-reality systems and easy remixing of elements from all continuum levels. We replicated six cross-reality papers using our toolkit and presented them to their authors. Interviews with them revealed that our toolkit sufficiently replicates their core functionalities and allows quick iterations. Additionally, remote participants used our toolkit in pairs to collaboratively implement prototypes in about eight minutes that they would have otherwise expected to take days.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Where Should We Put It? Layout and Placement Strategies of Documents in Augmented Reality for Collaborative Sensemaking

Weizhou Luo (Interactive Media Lab Dresden, Technische Universität Dresden), Anke Lehmann (Interactive Media Lab Dresden, Technische Universität Dresden), Hjalmar Widengren (Interactive Media Lab Dresden, Technische Universität Dresden), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden; Cluster of Excellence Physics of Life, Technische Universität Dresden; Centre for Tactile Internet with Human-in-the-Loop (CeTI), Technische Universität Dresden)


@inproceedings{2022LuoPlacement,

title = {Where Should We Put It? Layout and Placement Strategies of Documents in Augmented Reality for Collaborative Sensemaking},