We curated a list of this year’s publications, including links to social media, lab websites, and supplemental material. This year's contributions comprise six journal articles, 67 full papers, 30 Late-Breaking Works (LBWs), eleven interactivities, one alt.chi paper, and one DC paper; we also lead three workshops and give two courses. Four papers received a best paper award, and seven received an honourable mention.

The papers for the contributing labs were also curated in a PDF booklet by Michael Chamunorwa, and it can be downloaded here: Booklet 2023

Your publication is missing? Send us an email: contact@germanhci.de

“In Your Face!”: Visualizing Fitness Tracker Data in Augmented Reality

Sebastian Rigling (University of Stuttgart), Xingyao Yu (University of Stuttgart), Michael Sedlmair (University of Stuttgart)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Rigling2023Face,

title = {“In Your Face!”: Visualizing Fitness Tracker Data in Augmented Reality},

author = {Sebastian Rigling (University of Stuttgart), Xingyao Yu (University of Stuttgart), Michael Sedlmair (University of Stuttgart)},

url = {https://www.vis.uni-stuttgart.de/en/institute/research_group/augmented_reality_and_virtual_reality/, Website},

doi = {10.1145/3544549.3585912},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {The benefits of augmented reality (AR) have been demonstrated in both medicine and fitness, while its application in areas where these two fields overlap has been barely explored. We argue that AR opens up new opportunities to interact with, understand and share personal health data. To this end, we developed an app prototype that uses a Snapchat-like face filter to visualize personal health data from a fitness tracker in AR. We tested this prototype in two pilot studies and found that AR does have potential in this type of application. We suggest that AR cannot replace the current interfaces of smartwatches and mobile apps, but it can pick up where current technology falls short in creating intrinsic motivation and personal health awareness. We also provide ideas for future work in this direction.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

A Database for Kitchen Objects: Investigating Danger Perception in the Context of Human-Robot Interaction

Jan Leusmann (LMU Munich), Carl Oechsner (LMU Munich), Johanna Prinz (LMU Munich), Robin Welsch (Aalto University), Sven Mayer (LMU Munich)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Leusmann2023Kitchen,

title = {A Database for Kitchen Objects: Investigating Danger Perception in the Context of Human-Robot Interaction},

author = {Jan Leusmann (LMU Munich), Carl Oechsner (LMU Munich), Johanna Prinz (LMU Munich), Robin Welsch (Aalto University), Sven Mayer (LMU Munich)},

url = {https://www.en.um.informatik.uni-muenchen.de/index.html, Website

https://twitter.com/mimuc, twitter

https://twitter.com/janleusmann, twitter},

doi = {10.1145/3544549.3585884},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {In the future, humans collaborating closely with cobots in everyday tasks will require handing each other objects. So far, researchers have optimized human-robot collaboration concerning measures such as trust, safety, and enjoyment. However, as the objects themselves influence these measures, we need to investigate how humans perceive the danger level of objects. Thus, we created a database of 153 kitchen objects and conducted an online survey (N=300) investigating their perceived danger level. We found that (1) humans perceive kitchen objects vastly differently, (2) the object-holder has a strong effect on the danger perception, and (3) prior user knowledge increases the perceived danger of robots handling those objects. This shows that future human-robot collaboration studies must investigate different objects for a holistic image. We contribute a wiki-like open-source database to allow others to study predefined danger scenarios and eventually build object-aware systems: https://hri-objects.leusmann.io/.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Augmented Reality-based Indoor Positioning for Smart Home Automations

Marius Schenkluhn (Karlsruhe Institute of Technology & Robert Bosch GmbH), Christian Peukert (Karlsruhe Institute of Technology), Christof Weinhardt (Karlsruhe Institute of Technology)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Schenkluhn2023Smart,

title = {Augmented Reality-based Indoor Positioning for Smart Home Automations},

author = {Marius Schenkluhn (Karlsruhe Institute of Technology & Robert Bosch GmbH), Christian Peukert (Karlsruhe Institute of Technology), Christof Weinhardt (Karlsruhe Institute of Technology)},

url = {https://im.iism.kit.edu/english/1175.php, Website

https://www.youtube.com/watch?v=wCBtQS6J5mM, Teaser Video

https://www.youtube.com/watch?v=-FubByC25N8, Full Video

},

doi = {10.1145/3544549.3585745},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Ambient Assisted Living (AAL) has been discussed for some time; however, many systems are not considered as interoperable or user-friendly. This fact is an even more important issue as every interaction is more costly in terms of time and effort for people with disabilities or senior citizens. Therefore, this paper examines the potential of automations that can substitute typical daily interactions in AAL or Smart Home settings in general based on the users’ location. Particularly, we suggest the novel approach of using the indoor positioning capabilities of Augmented Reality (AR) head-mounted displays (HMD) to detect, track, and identify residents for the purpose of automatically controlling various Internet of Things (IoT) devices in Smart Homes. An implementation of this feature on an off-the-shelf AR HMD without additional external trackers is demonstrated and the results of an initial feasibility study are presented.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Autonomy and Safety: A Quantitative Study with Control Room Operators on Affinity for Technology Interaction and Wish for Pervasive Computing Solutions

Nadine Flegel (Trier University of Applied Sciences), Daniel Wessel (University of Lübeck), Jonas Pöhler (University of Siegen), Kristof Van Laerhoven (University of Siegen), Tilo Mentler (Trier University of Applied Sciences)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Flegel2023Autonomy,

title = {Autonomy and Safety: A Quantitative Study with Control Room Operators on Affinity for Technology Interaction and Wish for Pervasive Computing Solutions},

author = {Nadine Flegel (Trier University of Applied Sciences), Daniel Wessel (University of Lübeck), Jonas Pöhler (University of Siegen), Kristof Van Laerhoven (University of Siegen), Tilo Mentler (Trier University of Applied Sciences)},

doi = {10.1145/3544549.3585822},

year = {2023},

date = {2023-04-20},

publisher = {ACM},

abstract = {Control rooms are central to the well-being of many people. In terms of human computer interaction (HCI), they are characterized by complex IT infrastructures providing numerous graphical user interfaces. More modern approaches have been researched for decades. However, they are rarely used. What role does the attitude of operators towards novel solutions play? In one of the first quantitative cross-domain studies in safety-related HCI research (N = 155), we gained insight into affinity for technology interaction (ATI) and wish for pervasive computing solutions of operators in three domains (emergency response, public utilities, maritime traffic). Results show that ATI values were rather high, with broader range only in maritime traffic operators. Furthermore, the assessment of autonomy is more strongly related to the desire for novel solutions than perceived added safety value. These findings can provide guidance for the design of pervasive computing solutions, not only but especially for users in safety-critical contexts.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Balancing Power Relations in Participatory Design: The Importance of Initiative and External Factors

Torben Volkmann (Universität zu Lübeck), Markus Dresel (Universität zu Lübeck), Nicole Jochems (Universität zu Lübeck)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Volkmann2023Participatory,

title = {Balancing Power Relations in Participatory Design: The Importance of Initiative and External Factors},

author = {Torben Volkmann (Universität zu Lübeck), Markus Dresel (Universität zu Lübeck), Nicole Jochems (Universität zu Lübeck)},

url = {https://www.imis.uni-luebeck.de, Website},

doi = {10.1145/3544549.3585864},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Power imbalances between users and designers impede the intent to equal contributions in a participatory process. Recent years have shown that unforeseen external factors pose a risk of exacerbating this power imbalance by limiting in-person meetings and communication in general. This study evaluated a project's power relations using a three-part reflection-on-action approach. Results show that external factors can act as an actor in the power relationship model and that the change of initiative can change the power relations. Thus, we propose a power relation triangle for Participatory Design processes, including participants, designers and external conditions as actors and the decision-making at the center based on the combination of the actors' relations. This framework can help to better understand and address power imbalances in Participatory Design.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Bees, Birds and Butterflies: Investigating the Influence of Distractors on Visual Attention Guidance Techniques

Nina Doerr (University of Stuttgart), Katrin Angerbauer (University of Stuttgart), Melissa Reinelt (University of Stuttgart), Michael Sedlmair (University of Stuttgart)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Doerr2023Bees,

title = {Bees, Birds and Butterflies: Investigating the Influence of Distractors on Visual Attention Guidance Techniques},

author = {Nina Doerr (University of Stuttgart), Katrin Angerbauer (University of Stuttgart), Melissa Reinelt (University of Stuttgart), Michael Sedlmair (University of Stuttgart)},

url = {https://github.com/visvar/, Website},

doi = {10.1145/3544549.3585816},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Visual attention guidance methods direct the viewer's gaze in immersive environments by visually highlighting elements of interest. The highlighting can be done, for instance, by adding a colored circle around elements, adding animated swarms (HiveFive), or removing objects from one eye in a stereoscopic display (Deadeye). We contribute a controlled user experiment (N=30) comparing these three techniques under the influence of visual distractors, such as bees flying by. Our results show that Circle and HiveFive performed best in terms of task performance and qualitative feedback, and were largely robust against different levels of distractions. Furthermore, we discovered a high mental demand for Deadeye.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Blended Interaction: Communication and Collaboration Between Two Users Across the Reality-Virtuality Continuum

Lucie Kruse (Universität Hamburg), Joel Wittig (Universität Hamburg), Sebastian Finnern (Universität Hamburg), Melvin Gundlach (Universität Hamburg), Niclas Iserlohe (Universität Hamburg), Dr. Oscar Ariza (Universität Hamburg), Prof. Dr. Frank Steinicke (Universität Hamburg)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Kruse2023Blended,

title = {Blended Interaction: Communication and Collaboration Between Two Users Across the Reality-Virtuality Continuum},

author = {Lucie Kruse (Universität Hamburg), Joel Wittig (Universität Hamburg), Sebastian Finnern (Universität Hamburg), Melvin Gundlach (Universität Hamburg), Niclas Iserlohe (Universität Hamburg), Dr. Oscar Ariza (Universität Hamburg), Prof. Dr. Frank Steinicke (Universität Hamburg)},

url = {https://www.inf.uni-hamburg.de/en/inst/ab/hci.html, Website

},

doi = {10.1145/3544549.3585881},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Mixed reality (MR) technologies provide enormous potential for collaboration between multiple users across the Reality–Virtuality continuum. We evaluate communication in an MR-based two-user collaboration task, in which the users have to move an object through an obstacle without collision. We used a blended reality environment, in which one user is immersed in virtual reality, whereas the other uses mobile augmented reality. Both users have different abilities and information and mutually depend on each other for successful completion of the task. Communication consensus can either be achieved by using speech, visual widgets, or a combination of both. The results indicate that speech plays a fundamental role. The usage of widgets served as an extension rather than a replacement of language. However, the combination of speech and widgets improved the clearness of communication with less miscommunication. These results provide important indications about how to design blended collaboration across the Reality–Virtuality continuum.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring Gesture and Gaze Proxies to Communicate Instructor's Nonverbal Cues in Lecture Videos

Tobias Wagner (Institute of Media Informatics, Ulm University), Teresa Hirzle (Department of Computer Science, University of Copenhagen), Anke Huckauf (Institute of Psychology and Education, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Wagner2023Proxies,

title = {Exploring Gesture and Gaze Proxies to Communicate Instructor's Nonverbal Cues in Lecture Videos},

author = {Tobias Wagner (Institute of Media Informatics, Ulm University), Teresa Hirzle (Department of Computer Science, University of Copenhagen), Anke Huckauf (Institute of Psychology and Education, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University)},

url = {https://wgnrto.github.io/, Website

https://twitter.com/wgnrto, twitter},

doi = {10.1145/3544549.3585842},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Teaching via lecture video has become the de facto standard for remote education, but videos make it difficult to interpret instructors' nonverbal referencing to the content. This is problematic, as nonverbal cues are essential for students to follow and understand a lecture. As a remedy, we explored different proxies representing instructors' pointing gestures and gaze to provide students a point of reference in a lecture video: no proxy, gesture proxy, gaze proxy, alternating proxy, and concurrent proxies. In an online study with 100 students, we evaluated the proxies' effects on mental effort, cognitive load, learning performance, and user experience. Our results show that the proxies had no significant effect on learning-directed aspects and that the gesture and alternating proxy achieved the highest pragmatic quality. Furthermore, we found that alternating between proxies is a promising approach providing students with information about instructors' pointing and gaze position in a lecture video.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring Mixed Reality in General Aviation to Support Pilot Workload

Christopher Katins (HU Berlin), Sebastian S. Feger (LMU Munich), Thomas Kosch (HU Berlin)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Katins2023Pilot,

title = {Exploring Mixed Reality in General Aviation to Support Pilot Workload},

author = {Christopher Katins (HU Berlin), Sebastian S. Feger (LMU Munich), Thomas Kosch (HU Berlin)},

url = {cognisens.group, Website},

doi = {10.1145/3544549.3585742},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Pilots in non-commercial aviation have minimal access to digital support tools. Equipping aircraft with modern technologies introduces high costs and is labor intensive. Hence, wearable or mobile support, such as common 2D maps displayed on standard tablets, are often the only digital information source supporting pilot workload. Yet, they fail to adequately capture the 3D airspace and its surroundings, challenging the pilot's workload in return. In this work, we explore how mixed reality can support pilots by projecting supportive elements into their fields of view. Considering the design of a preliminary mixed reality prototype, we conducted a user study with twelve pilots in a full-sized flight simulator. Our quantitative measures show that the perceived pilot workload when handling surrounding aircraft reduced while improving the overall landing routine efficiency at the same time. This work shows the utility of mixed reality technologies while simultaneously emphasizing future research directions in general aviation.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring Mobility Behavior Around Ambient Displays Using Clusters of Multi-dimensional Walking Trajectories

Jan Schwarzer (Hamburg University of Applied Sciences), Julian Fietkau (University of the Bundeswehr Munich), Laurenz Fuchs (University of the Bundeswehr Munich), Susanne Draheim (Hamburg University of Applied Sciences), Kai von Luck (Hamburg University of Applied Sciences), Michael Koch (University of the Bundeswehr Munich)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{mobility23schwarzer,

title = {Exploring Mobility Behavior Around Ambient Displays Using Clusters of Multi-dimensional Walking Trajectories},

author = {Jan Schwarzer (Hamburg University of Applied Sciences), Julian Fietkau (University of the Bundeswehr Munich), Laurenz Fuchs (University of the Bundeswehr Munich), Susanne Draheim (Hamburg University of Applied Sciences), Kai von Luck (Hamburg University of Applied Sciences), Michael Koch (University of the Bundeswehr Munich)},

url = {https://csti.haw-hamburg.de/, Website

https://twitter.com/csti_hamburg, Twitter},

doi = {10.1145/3544549.3585661},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Spatial information has become crucial in ambient display research and helps to better understand how people behave in a display’s vicinity. Walking trajectories have long been used to uncover such information and tools have been developed to capture them anonymously and automatically. However, more research is needed on the level of automation during mobility behavior analyses. Particularly, working with depth-based skeletal data still requires significant manual effort to, for instance, determine walking trajectories similar in shape. To advance on this situation, we adopt both agglomerative hierarchical clustering and dynamic time warping in this research. To the best of our knowledge, both algorithms have so far not found application in our field. Using a multi-dimensional data set obtained from a longitudinal, real-world deployment, we demonstrate here the applicability and usefulness of this approach. In doing so, we contribute insightful ideas for future discussions on the methodological development in ambient display research.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

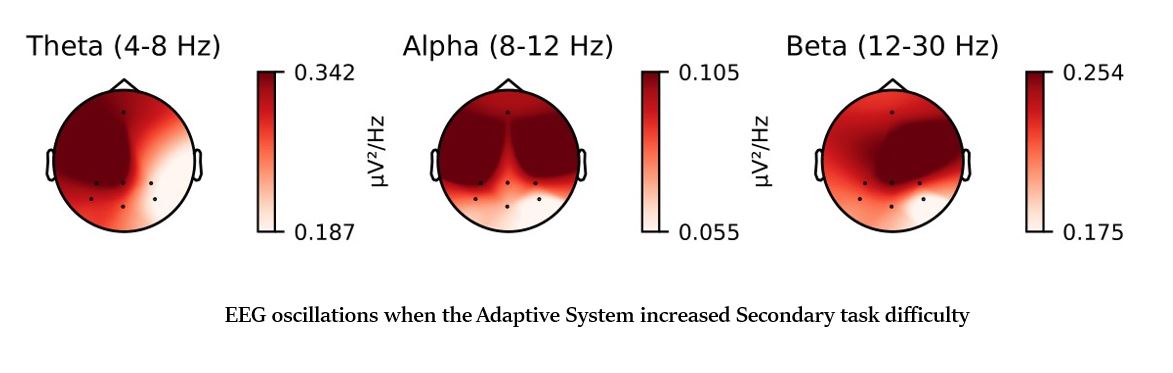

Exploring Physiological Correlates of Visual Complexity Adaptation: Insights from EDA, ECG, and EEG Data for Adaptation Evaluation in VR Adaptive Systems

Francesco Chiossi (LMU Munich), Changkun Ou (LMU Munich), Sven Mayer (LMU Munich)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Chiossi2023Physiological,

title = {Exploring Physiological Correlates of Visual Complexity Adaptation: Insights from EDA, ECG, and EEG Data for Adaptation Evaluation in VR Adaptive Systems},

author = {Francesco Chiossi (LMU Munich), Changkun Ou (LMU Munich), Sven Mayer (LMU Munich)},

url = {https://www.um.informatik.uni-muenchen.de/index.html, Website

https://twitter.com/mimuc, twitter},

doi = {10.1145/3544549.3585624},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Physiologically-adaptive Virtual Reality can drive interactions and adjust virtual content to better fit users' needs and support specific goals. However, the complexity of psychophysiological inference hinders efficient adaptation as the relationship between cognitive and physiological features rarely shows one-to-one correspondence. Therefore, it is necessary to employ multimodal approaches to evaluate the effect of adaptations. In this work, we analyzed a multimodal dataset (EEG, ECG, and EDA) acquired during interaction with a VR-adaptive system that employed EDA as input for adaptation of secondary task difficulty. We evaluated the effect of dynamic adjustments on different physiological features and their correlation. Our results show that when the adaptive system increased the secondary task difficulty, theta, beta, and phasic EDA features increased. Moreover, we found a high correlation between theta, alpha, and beta oscillations during difficulty adjustments. Our results show how specific EEG and EDA features can be employed for evaluating VR adaptive systems.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

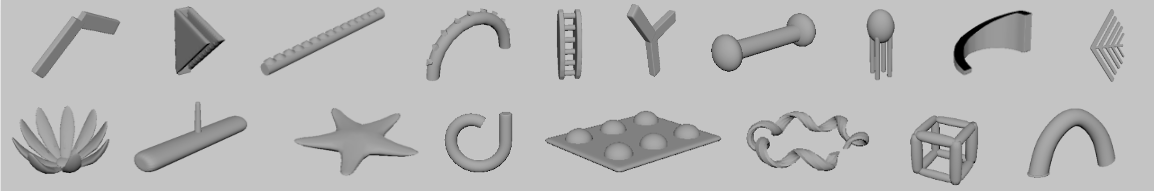

Exploring Shape Designs for Soft Robotics and Users’ Associations with Them

Anke Brocker (RWTH Aachen University), Ekaterina Nedorubkova (RWTH Aachen University), Simon Voelker (RWTH Aachen University), Jan Borchers (RWTH Aachen University)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{shape23Brocker,

title = {Exploring Shape Designs for Soft Robotics and Users’ Associations with Them},

author = {Anke Brocker (RWTH Aachen University), Ekaterina Nedorubkova (RWTH Aachen University), Simon Voelker (RWTH Aachen University), Jan Borchers (RWTH Aachen University)},

url = {https://hci.rwth-aachen.de, Website

https://youtu.be/iT0n5njua-8, preview

https://youtu.be/p8PDfKvYmlU, full},

doi = {10.1145/3544549.3585606},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Soft robotics provides flexible structures and materials that move in natural and organic ways. They facilitate creating safe and tolerant mechanisms for human–machine interaction. This makes soft robotics attractive for tasks that rigid robots are unable to carry out. Users may also display a higher acceptance of soft robots compared to rigid robots because their natural way of movement helps users to relate to scenarios they know from everyday life, making the interaction with the soft robot feel more intuitive. However, the variety of soft robotics shape designs, and how to integrate them into applications, have not been explored fully yet.

In a user study, we investigated users' associations and ideas for application areas for 36 soft robotics shape designs, brainstormed with users beforehand. We derived first design recommendations for soft robotics designs such as clear signifiers indicating the possible motion.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring the Perception of Pain in Virtual Reality using Perceptual Manipulations

Gaëlle Clavelin (Telecom Paris, IP Paris), Mickael Bouhier (Telecom Paris, IP Paris), Wen-Jie Tseng (Telecom Paris, IP Paris), Jan Gugenheimer (TU Darmstadt)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Clavelin2023Pain,

title = {Exploring the Perception of Pain in Virtual Reality using Perceptual Manipulations},

author = {Gaëlle Clavelin (Telecom Paris, IP Paris), Mickael Bouhier (Telecom Paris, IP Paris), Wen-Jie Tseng (Telecom Paris, IP Paris), Jan Gugenheimer (TU Darmstadt)},

doi = {10.1145/3544549.3585674},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Perceptual manipulations (PMs) in Virtual Reality (VR) can steer users’ actions (e.g., redirection techniques) and amplify haptic perceptions (e.g., weight). However, their ability to amplify or induce negative perceptions such as physical pain is not well understood. In this work, we explore if PMs can be leveraged to induce the perception of pain, without modifying the physical stimulus. We implemented a VR experience combined with a haptic prototype, simulating the dislocation of a finger. A user study (n=18) compared three conditions (visual-only, haptic-only and combined) on the perception of physical pain and physical discomfort. We observed that using PMs with a haptic device resulted in a significantly higher perception of physical discomfort and an increase in the perception of pain compared to the unmodified sensation (haptic-only). Finally, we discuss how perception of pain can be leveraged in future VR applications and reflect on ethical concerns.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring the Use of Electromagnets to Influence Key Targeting on Physical Keyboards

Lukas Mecke (University of the Bundeswehr Munich, LMU Munich), Ismael Prieto Romero (University of the Bundeswehr Munich), Sarah Delgado Rodriguez (University of the Bundeswehr Munich), Florian Alt (University of the Bundeswehr Munich)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Mecke2023Electromagnets,

title = {Exploring the Use of Electromagnets to Influence Key Targeting on Physical Keyboards},

author = {Lukas Mecke (University of the Bundeswehr Munich, LMU Munich), Ismael Prieto Romero (University of the Bundeswehr Munich), Sarah Delgado Rodriguez (University of the Bundeswehr Munich), Florian Alt (University of the Bundeswehr Munich)},

url = {https://www.unibw.de/usable-security-and-privacy, Website},

doi = {10.1145/3544549.3585703},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {In this work we explore the use of force induced through electromagnets to influence finger movement while using a keyboard. To achieve this we generate a magnetic field below a keyboard and place a permanent magnet on the user's finger as a minimally invasive approach to dynamically induce variable force. Contrary to other approaches our setup can thus generate forces even at a distance from the keyboard.

We explore this concept by building a prototype and analysing different configurations of electromagnets (i.e., attraction and repulsion) and placements of a permanent magnet on the user's fingers in a preliminary study (N=4). Our force measurements show that we can induce 3.56 N at a distance of 10 mm. Placing the magnet on the index finger allowed for influencing key press times and was perceived as comfortable. Finally we discuss implications and potential application areas like mid-air feedback and guidance.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

EyesOnMe: Investigating Haptic and Visual User Guidance for Near-Eye Positioning of Mobile Phones for Self-Eye-Examinations

Luca-Maxim Meinhardt (Ulm University), Kristof Van Laerhoven (University of Siegen), David Dobbelstein (Carl Zeiss AG)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{eyes23Meinhardt,

title = {EyesOnMe: Investigating Haptic and Visual User Guidance for Near-Eye Positioning of Mobile Phones for Self-Eye-Examinations},

author = {Luca-Maxim Meinhardt (Ulm University), Kristof Van Laerhoven (University of Siegen), David Dobbelstein (Carl Zeiss AG)},

url = {https://www.uni-ulm.de/in/mi/hci/, Website

https://twitter.com/mi_uulm?t=CT2R8HlG-ubglCLz49fu8Q&s=09, Twitter

},

doi = {10.1145/3544549.3585799},

year = {2023},

date = {2023-04-28},

publisher = {ACM},

abstract = {The scarcity of professional ophthalmic equipment in rural areas and during exceptional situations such as the COVID-19 pandemic highlights the need for tele-ophthalmology. This late-breaking work presents a novel method for guiding users to a specific pose (3D position and 3D orientation) near the eye for mobile self-eye examinations using a smartphone. The user guidance is implemented utilizing haptic and visual modalities to guide the user and subsequently capture a close-up photo of the user's eyes. In a within-subject user study (n=24), the required time, success rate, and perceived demand for the visual and haptic feedback conditions were examined. The results indicate that haptic feedback was the most efficient and least cognitively demanding in the positioning task near the eye, whereas relying on only visual feedback can be more difficult due to the near focus point or refractive errors.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Fabric Faces: Combining Textiles and 3D Printing for Maker-Friendly Folding-Based Assembly

Adrian Wagner (RWTH Aachen University), Paul Miles Preuschoff (RWTH Aachen University), Philipp Wacker (RWTH Aachen University), Simon Voelker (RWTH Aachen University), Jan Borchers (RWTH Aachen University)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{faces23Wagner,

title = {Fabric Faces: Combining Textiles and 3D Printing for Maker-Friendly Folding-Based Assembly},

author = {Adrian Wagner (RWTH Aachen University), Paul Miles Preuschoff (RWTH Aachen University), Philipp Wacker (RWTH Aachen University), Simon Voelker (RWTH Aachen University), Jan Borchers (RWTH Aachen University)},

url = {https://hci.rwth-aachen.de, Website

https://youtu.be/rDh9PL4iqbQ, Preview

https://youtu.be/MQHgeCfUCTY, Full},

doi = {10.1145/3544549.3585854},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

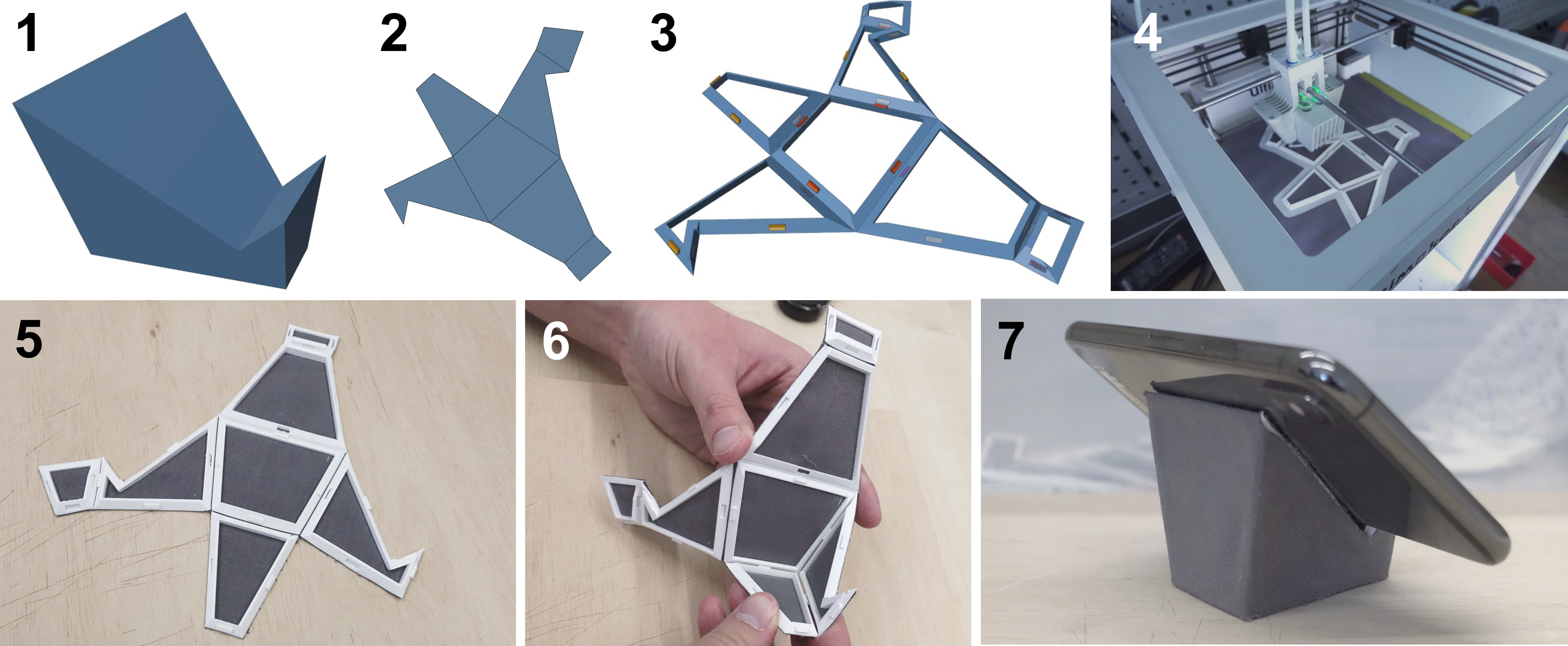

abstract = {We introduce a Personal Fabrication workflow to easily create feature-rich 3D objects with textile-covered surfaces. Our approach unfolds a 3D model into a series of flat frames with connectors, which are then 3D-printed onto a piece of fabric, and folded manually into the shape of the original model. This opens up an accessible way to incorporate established 2D textile workflows, such as embroidery, using color patterns, and combining different fabrics, when creating 3D objects. FabricFaces objects can also be flattened again easily for transport and storage. We provide an open-source plugin for the common 3D tool Blender. It enables a one-click workflow to turn a user-provided model into 3D printer instructions, textile cut patterns, and connector support. Generated frames can be refined quickly and iteratively through previews and extensive options for manual intervention. We present example objects illustrating a variety of use cases.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

HaptiX: Vibrotactile Haptic Feedback for Communication of 3D Directional Cues

Max Pascher (Westphalian University of Applied Sciences & University of Duisburg-Essen), Til Franzen (Westphalian University of Applied Sciences), Kirill Kronhardt (Westphalian University of Applied Sciences), Uwe Gruenefeld (University of Duisburg-Essen), Stefan Schneegass (University of Duisburg-Essen), Jens Gerken (Westphalian University of Applied Sciences)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Pascher2023HaptiX,

title = {HaptiX: Vibrotactile Haptic Feedback for Communication of 3D Directional Cues},

author = {Max Pascher (Westphalian University of Applied Sciences & University of Duisburg-Essen), Til Franzen (Westphalian University of Applied Sciences), Kirill Kronhardt (Westphalian University of Applied Sciences), Uwe Gruenefeld (University of Duisburg-Essen), Stefan Schneegass (University of Duisburg-Essen), Jens Gerken (Westphalian University of Applied Sciences)},

url = {https://hci.w-hs.de/, Website Westphalian University of Applied Sciences

https://www.hci.wiwi.uni-due.de, Website University of Duisburg-Essen},

doi = {10.1145/3544549.3585601},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {In Human-Computer-Interaction, vibrotactile haptic feedback offers the advantage of being independent of the visual perception of the environment. For example, the user's field of view is not obscured by user interface elements, and the visual sense is not unnecessarily strained. This is especially advantageous when the visual channel is already busy or the visual sense is limited. We developed three design variants based on different vibrotactile illusions to communicate 3D directional cues. In particular, we explored two variants based on the vibrotactile illusion of the cutaneous rabbit and one based on apparent vibrotactile motion. To communicate gradient information, we combined these with pulse-based and intensity-based mapping. A subsequent study showed that the pulse-based variants based on the vibrotactile illusion of the cutaneous rabbit are suitable for communicating both directional and gradient characteristics. The results further show that a representation of 3D directions via vibrations can be effective and satisfactory.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

“I want to be able to change the speed and size of the avatar”: Assessing User Requirements for Animated Sign Language Translation Interfaces

Amelie Nolte (University of Luebeck), Barbara Gleissl (Ergosign GmbH), Jule Heckmann (Ergosign GmbH), Dieter Wallach (Ergosign GmbH), Nicole Jochems (University of Luebeck)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Nolte2023Sign,

title = {“I want to be able to change the speed and size of the avatar”: Assessing User Requirements for Animated Sign Language Translation Interfaces},

author = {Amelie Nolte (University of Luebeck), Barbara Gleissl (Ergosign GmbH), Jule Heckmann (Ergosign GmbH), Dieter Wallach (Ergosign GmbH), Nicole Jochems (University of Luebeck)},

url = {https://www.imis.uni-luebeck.de/en, Website},

doi = {10.1145/3544549.3585675},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Despite research having shown that signing users vary in their preferences and needs of distinct sign language (SL) parameters, current research on SL avatars lacks consideration of the UI context's options for individualization or configuration of such parameters. Our paper addresses this gap as it presents our ability-oriented online survey, in which we asked native signers about parameters they would like to configure, as well as content types that should be offered for translation within travel contexts. Our results indicate that parameters with a direct influence on the understandability of the avatar are most important for individual adjustments and situations with high time-pressure are valued highly for translation within travelling contexts. Also, our study design revealed that assessing the language background of participants in the form of an example-based self-assessment can help achieve more sensitive results, emphasizing the need for adequate research approaches.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Immersive Reading: Comparison of Performance and User Experience for Reading Long Texts in Virtual Reality

Jenny Gabel (Understanding Written Artefacts, Human Computer Interaction, Universität Hamburg), Melanie Ludwig (Human Computer Interaction, Universität Hamburg), Frank Steinicke (Human Computer Interaction, Understanding Written Artefacts, Universität Hamburg)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Gabel2023Reading,

title = {Immersive Reading: Comparison of Performance and User Experience for Reading Long Texts in Virtual Reality},

author = {Jenny Gabel (Understanding Written Artefacts, Human Computer Interaction, Universität Hamburg), Melanie Ludwig (Human Computer Interaction, Universität Hamburg), Frank Steinicke (Human Computer Interaction, Understanding Written Artefacts, Universität Hamburg)},

url = {https://www.inf.uni-hamburg.de/en/inst/ab/hci.html, Website

https://twitter.com/uhhhci, twitter},

doi = {10.1145/3544549.3585895},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Specific use cases in virtual reality (VR) require users to read long texts on their headsets. We use VR to support researchers in the humanities, which includes the display of long text descriptions in VR. However, research on suitable user interface (UI) patterns for displaying and interacting with long texts in VR is scarce. To address this gap, we designed four text panel variants and conducted a within-participants study (N=24) to evaluate user experience (UX) and reading performance. Our findings suggest that there are no significant differences between conditions regarding reading performance. Yet, there are significant effects of conditions on some aspects of UX. Our work offers initial insights and future directions for research on the design of suitable UI patterns and the UX for reading long texts in VR.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

InterFlowCeption: Foundations for Technological Enhancement of Interoception to Foster Flow States during Mental Work: About the potential of technologically supported body awareness to promote flow experiences during mental work

Christoph Berger (Karlsruhe Institute of Technology), Michael Thomas Knierim (Karlsruhe Institute of Technology), Ivo Benke (Karlsruhe Institute of Technology), Karen Bartholomeyczik (Karlsruhe Institute of Technology), Christof Weinhardt (Karlsruhe Institute of Technology)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Berger2023Inter,

title = {InterFlowCeption: Foundations for Technological Enhancement of Interoception to Foster Flow States during Mental Work: About the potential of technologically supported body awareness to promote flow experiences during mental work},

author = {Christoph Berger (Karlsruhe Institute of Technology), Michael Thomas Knierim (Karlsruhe Institute of Technology), Ivo Benke (Karlsruhe Institute of Technology), Karen Bartholomeyczik (Karlsruhe Institute of Technology), Christof Weinhardt (Karlsruhe Institute of Technology)},

doi = {10.1145/3544549.3585833},

year = {2023},

date = {2023-04-23},

abstract = {Conducting mental work by interacting with digital technology increases productivity, but strains attentional capacities and mental well-being. In consequence, many mental workers try to cultivate their flow experience. However, this is complex and difficult to achieve. Nevertheless, current technological systems do not yet provide this support in mental work. As interoception, the individual bodily awareness, is an underlying mechanism of numerous flow correlates, it might offer a new approach for flow-supporting systems in these scenarios. Results from a survey study with 176 digital workers show that adaptive regulation of interoceptive sensations correlates with higher levels of flow and engagement. Additionally, regular mindfulness practices improved workers’ adaptive regulation of bodily signals. Based on these results and integrating the current literature, this work conceptualizes three future technological support systems, such as interoceptive biofeedback, and electrical or auditory stimulation to enhance interoceptive awareness and foster flow in mental work.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

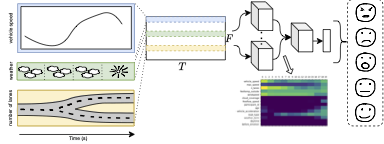

Interpretable Time-Dependent Convolutional Emotion Recognition with Contextual Data Streams

David Bethge (LMU Munich, Dr. Ing. h.c. F. Porsche AG), Constantin Patsch (Dr. Ing. h.c. F. Porsche AG), Philipp Hallgarten (Dr. Ing. h.c. F. Porsche AG, Universität Tübingen), Thomas Kosch (HU Berlin)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Bethge2023Emotion,

title = {Interpretable Time-Dependent Convolutional Emotion Recognition with Contextual Data Streams},

author = {David Bethge (LMU Munich, Dr. Ing. h.c. F. Porsche AG), Constantin Patsch (Dr. Ing. h.c. F. Porsche AG), Philipp Hallgarten (Dr. Ing. h.c. F. Porsche AG, Universität Tübingen), Thomas Kosch (HU Berlin)},

url = {https://ubicomp.net/wp/, Website},

doi = {10.1145/3544549.3585672},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Emotion prediction is important when interacting with computers. However, emotions are complex, difficult to assess, understand, and hard to classify. Current emotion classification strategies skip why a specific emotion was predicted, complicating the user's understanding of affective and empathic interface behaviors. Advances in deep learning showed that transformer networks can learn powerful time-series patterns while showing classification decisions and feature importances. We present a novel transformer-based model that classifies emotions robustly. Our model not only offers high emotion-prediction performance but also enables transparency on the model decisions. Our solution thereby provides a time-aware feature interpretation of classification decisions using saliency maps. We evaluate the system on a contextual, real-world driving dataset involving twelve participants. Our model achieves a mean accuracy of 70% in 5-class emotion classification on unknown roads and outperforms in-car facial expression recognition by 14%. We conclude how emotion prediction can be improved by incorporating emotion sensing into interactive computing systems.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

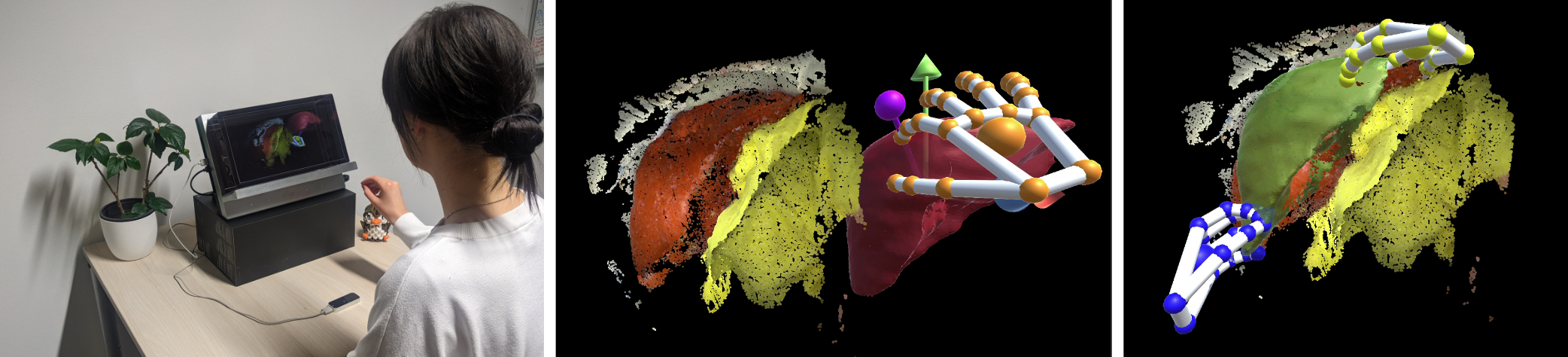

Point Cloud Alignment through Mid-Air Gestures on a Stereoscopic Display

Katja Krug (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Marc Satkowski (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Reuben Docea (Translational Surgical Oncology, National Center for Tumor Diseases Dresden, Germany), Tzu-Yu Ku (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden, Germany)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Krug2023Point,

title = {Point Cloud Alignment through Mid-Air Gestures on a Stereoscopic Display},

author = {Katja Krug (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Marc Satkowski (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Reuben Docea (Translational Surgical Oncology, National Center for Tumor Diseases Dresden, Germany), Tzu-Yu Ku (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden, Germany)},

url = {https://imld.de/, Website

https://twitter.com/imldresden, twitter

https://youtu.be/RdS1TzleUXQ, Teaser Video

https://youtu.be/9hxx3NPbL8M, Full Video},

doi = {10.1145/3544549.3585862},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Manual point cloud registration is often a crucial step during the mapping of 3D point clouds and usually performed on a conventional desktop setup with mouse interaction. Since 3D point clouds are inherently spatial, these 2D applications suffer from impaired depth perception and inconvenient interaction. Nonetheless, there are few efforts to improve the usability of these applications. To address this, we propose an alternative setup, consisting of a stereoscopic display and an external hand tracker, allowing for enhanced depth perception and natural interaction without the need for body-worn devices or handheld controllers. We developed interaction techniques for point cloud alignment in 3D space, including visual feedback during alignment, and implemented a proof-of-concept prototype in the context of a surgical use case. We describe the use case, design and implementation of our concepts and outline future work. Herewith we provide a user-centered alternative to desktop applications for manual point cloud registration.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Reach Prediction using Finger Motion Dynamics

Dimitar Valkov (Saarland University, Germany; DFKI, Saarland Informatics Campus, Germany), Pascal Kockwelp (Computer Science Department, University of Münster, Germany), Florian Daiber (DFKI, Saarland Informatics Campus, Germany), Antonio Krüger (DFKI, Saarland Informatics Campus, Germany)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Valkov2023Reach,

title = {Reach Prediction using Finger Motion Dynamics},

author = {Dimitar Valkov (Saarland University, Germany; DFKI, Saarland Informatics Campus, Germany), Pascal Kockwelp (Computer Science Department, University of Münster, Germany), Florian Daiber (DFKI, Saarland Informatics Campus, Germany), Antonio Krüger (DFKI, Saarland Informatics Campus, Germany)},

url = {https://umtl.cs.uni-saarland.de/, Website},

doi = {10.1145/3544549.3585773},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {The ability to predict the object the user intends to grasp or to recognize the one she is already holding offers essential contextual information and may help to leverage the effects of point-to-point latency in interactive environments. This paper investigates the feasibility and accuracy of recognizing un-instrumented objects based on hand kinematics during reach-to-grasp and transport actions. In a data collection study, we recorded the hand motions of 16 participants while reaching out to grasp and then moving real and synthetic objects.

Our results demonstrate that even a simple LSTM network can predict the time point at which the user grasps an object with 23 ms precision and the current distance to it with a precision better than 1 cm. The target's size can be determined in advance with an accuracy better than 97%.

Our results have implications for designing adaptive and fine-grained interactive user interfaces in ubiquitous and mixed-reality environments.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

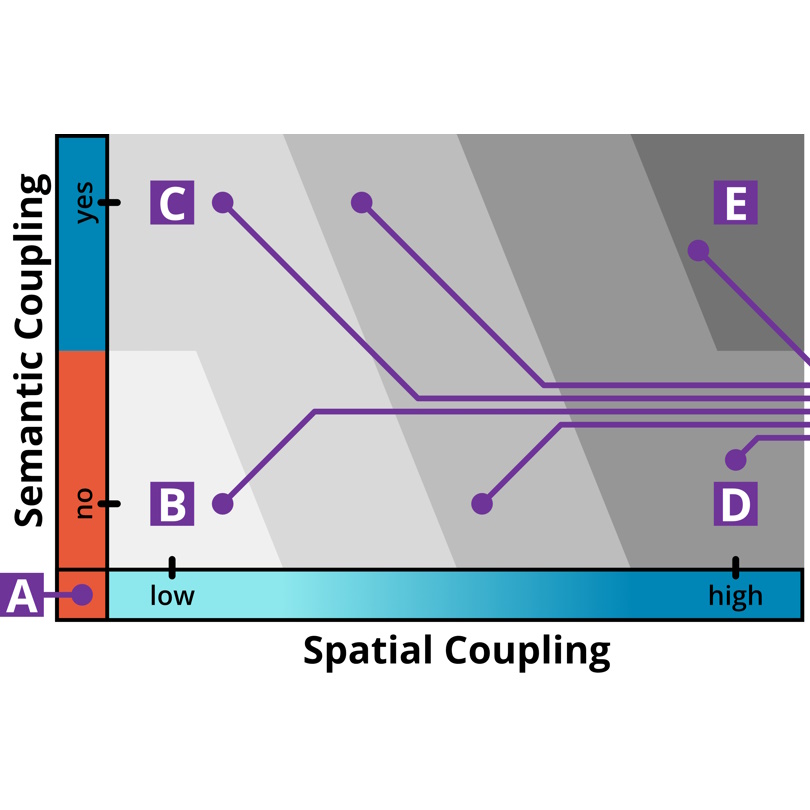

Spatiality and Semantics - Towards Understanding Content Placement in Mixed Reality

Mats Ole Ellenberg (Interactive Media Lab Dresden, Technische Universität Dresden; Centre for Tactile Internet with Human-in-the-Loop (CeTI), Technische Universität Dresden), Marc Satkowski (Interactive Media Lab Dresden, Technische Universität Dresden; Centre for Scalable Data Analytics and Artificial Intelligence (ScaDS.AI), Dresden/Leipzig), Weizhou Luo (Interactive Media Lab Dresden, Technische Universität Dresden), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden; Centre for Tactile Internet with Human-in-the-Loop (CeTI), Technische Universität Dresden; Centre for Scalable Data Analytics and Artificial Intelligence (ScaDS.AI), Dresden/Leipzig; Cluster of Excellence Physics of Life, Technische Universität Dresden)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Ellenberg2023Spatiality,

title = {Spatiality and Semantics - Towards Understanding Content Placement in Mixed Reality},

author = {Mats Ole Ellenberg (Interactive Media Lab Dresden, Technische Universität Dresden; Centre for Tactile Internet with Human-in-the-Loop (CeTI), Technische Universität Dresden), Marc Satkowski (Interactive Media Lab Dresden, Technische Universität Dresden; Centre for Scalable Data Analytics and Artificial Intelligence (ScaDS.AI), Dresden/Leipzig), Weizhou Luo (Interactive Media Lab Dresden, Technische Universität Dresden), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden; Centre for Tactile Internet with Human-in-the-Loop (CeTI), Technische Universität Dresden; Centre for Scalable Data Analytics and Artificial Intelligence (ScaDS.AI), Dresden/Leipzig; Cluster of Excellence Physics of Life, Technische Universität Dresden)},

url = {https://imld.de/en/, Website

https://twitter.com/imldresden, twitter

https://www.youtube.com/watch?v=GQ_qMIB-6jY, Teaser Video},

doi = {10.1145/3544549.3585853},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Mixed Reality (MR) popularizes numerous situated applications where virtual content is spatially integrated into our physical environment. However, we only know little about what properties of an environment influence the way how people place digital content and perceive the resulting layout. We thus conducted a preliminary study (N = 8) examining how physical surfaces affect organizing virtual content like documents or charts, focusing on user perception and experience. We found, among others, that the situated layout of virtual content in its environment can be characterized by the level of spatial as well as semantic coupling. Consequently, we propose a two-dimensional design space to establish the vocabularies and detail their parameters for content organization. With our work, we aim to facilitate communication between designers or researchers, inform general MR interface design, and provide a first step towards future MR workspaces empowered by blending digital content and its real-world context.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Statistically Controlling for Processing Fluency Reduces the Aesthetic-Usability Effect

Jan Preßler (Julius-Maximilians-Universität), Lukas Schmid (Julius-Maximilians-Universität), Jörn Hurtienne (Julius-Maximilians-Universität)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Pressler2023Statistically,

title = {Statistically Controlling for Processing Fluency Reduces the Aesthetic-Usability Effect},

author = {Jan Preßler (Julius-Maximilians-Universität), Lukas Schmid (Julius-Maximilians-Universität), Jörn Hurtienne (Julius-Maximilians-Universität)},

url = {https://www.mcm.uni-wuerzburg.de/psyergo/startseite/, Website},

doi = {10.1145/3544549.3585739},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {The aesthetic-usability effect asserts that user interfaces that appear aesthetic also appear easier to use. Most explanations for the effect see aesthetics in a causal role. In contrast, we propose that processing fluency as a third variable causes both judgements of aesthetics and usability. Processing fluency refers to the subjective ease of information processing and has been shown to influence, among others, judgements of aesthetics and usability. We tested our proposition in an experiment in which users rated screenshots of city websites. The aesthetic-usability effect was replicated by our data: aesthetics and usability correlated .79. When controlling for fluency, however, the aesthetic-usability effect was considerably diminished; the correlation decreased to .34. Future research will address the limitations of this study by investigating a wider range of website designs and adding interactivity to the interfaces.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Text Me if You Can: Investigating Text Input Methods for Cyclists

Andrii Matviienko (KTH Royal Institute of Technology, Sweden), Jean-Baptiste Durand-Pierre (Technical University of Darmstadt, Germany), Jona Cvancar (Technical University of Darmstadt, Germany), Max Mühlhäuser (Technical University of Darmstadt, Germany)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Matviienko2023Text,

title = {Text Me if You Can: Investigating Text Input Methods for Cyclists},

author = {Andrii Matviienko (KTH Royal Institute of Technology, Sweden), Jean-Baptiste Durand-Pierre (Technical University of Darmstadt, Germany), Jona Cvancar (Technical University of Darmstadt, Germany), Max Mühlhäuser (Technical University of Darmstadt, Germany)},

url = {https://teamdarmstadt.de/, Website},

doi = {10.1145/3544549.3585734},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Cycling is emerging as a relevant alternative to cars. However, the more people commute by bicycle, the higher the number of cyclists who use their smartphones on the go and endanger road safety. To better understand input while cycling, in this paper, we present the design and evaluation of three text input methods for cyclists: (1) touch input using smartphones, (2) midair input using a Microsoft Hololens 2, and (3) a set of ten physical buttons placed on both sides of the handlebar. We conducted a controlled indoor experiment (N = 12) on a bicycle simulator to evaluate these input methods. We found that text input via touch input was faster and less mentally demanding than input with midair gestures and physical buttons. However, the midair gestures were the least error-prone, and the physical buttons facilitated keeping both hands on the handlebars and were more intuitive and less distracting.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Up, Up and Away - Investigating Information Needs for Helicopter Pilots in future Urban Air Mobility

Luca-Maxim Meinhardt (Ulm University), Mark Colley (Ulm University), Alexander Fassbender (Ulm University), Michael Rietzler (Ulm University), Enrico Rukzio (Ulm University)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{pilots23meinhardt,

title = {Up, Up and Away - Investigating Information Needs for Helicopter Pilots in future Urban Air Mobility},

author = {Luca-Maxim Meinhardt (Ulm University), Mark Colley (Ulm University), Alexander Fassbender (Ulm University), Michael Rietzler (Ulm University), Enrico Rukzio (Ulm University)},

url = {https://www.uni-ulm.de/in/mi/hci/, Website

https://twitter.com/mi_uulm?t=CT2R8HlG-ubglCLz49fu8Q&s=09, Twitter},

doi = {10.1145/3544549.3585643},

year = {2023},

date = {2023-04-28},

publisher = {ACM},

abstract = {This qualitative work aims to address the emerging challenges and opportunities through advanced automation and visualization capabilities in the field of helicopter piloting for future Urban Air Mobility. A workshop was conducted with N=6 professional helicopter pilots to gather insights on these topics. The participants discussed key themes, including information needs, user interfaces, and automation. The results unveiled novel opportunities and highlighted challenges for research on aiding helicopter pilots in the fields of obstacle avoidance, map visualization, and air traffic visualization, such as augmenting flight paths to increase their situation awareness.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Virtual Tourism, Real Experience: A Motive-Oriented Approach to Virtual Tourism

Sara Wolf (Institute Human-Computer-Media, Julius-Maximilians-Universität, Würzburg, Germany), Michael Weber (Institute Human-Computer-Media, Julius-Maximilians-Universität, Würzburg, Germany), Jörn Hurtienne (Chair of Psychological Ergonomics, Julius-Maximilians-Universität, Würzburg, Germany)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Wolf2023Tourism,

title = {Virtual Tourism, Real Experience: A Motive-Oriented Approach to Virtual Tourism},

author = {Sara Wolf (Institute Human-Computer-Media, Julius-Maximilians-Universität, Würzburg, Germany), Michael Weber (Institute Human-Computer-Media, Julius-Maximilians-Universität, Würzburg, Germany), Jörn Hurtienne (Chair of Psychological Ergonomics, Julius-Maximilians-Universität, Würzburg, Germany)},

url = {https://www.mcm.uni-wuerzburg.de/psyergo/startseite/, Website},

doi = {10.1145/3544549.3585594},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Virtual tourism products promise to combine the best of two worlds: Staying in the safety of one’s home while having engaging tourism experiences. Previous tourism research has emphasised that tourism experiences involve more than just seeing other places. They address cultural motives such as novelty and education and socio-psychological motives like relaxation, escape from a mundane environment or facilitation of social interaction. We suggest applying the motive-oriented perspective in HCI research on virtual tourism and report on a corresponding analysis of 21 virtual tourism products. Our findings show that current virtual tourism products neglect the breadth of tourist motives. They mainly focus on cultural motives while rarely addressing socio-psychological motives, especially kinship relationships and prestige. Our findings demonstrate the usefulness of the motive-oriented perspective for HCI and inspired conceptual ideas for addressing motives in virtual tourism products that may be useful for future research and design in this area.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

VR, Gaze, and Visual Impairment: An Exploratory Study of the Perception of Eye Contact across different Sensory Modalities for People with Visual Impairments in Virtual Reality

Markus Wieland (University of Stuttgart), Michael Sedlmair (University of Stuttgart), Tonja-Katrin Machulla (TU Chemnitz)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Wieland2023Impairment,

title = {VR, Gaze, and Visual Impairment: An Exploratory Study of the Perception of Eye Contact across different Sensory Modalities for People with Visual Impairments in Virtual Reality},

author = {Markus Wieland (University of Stuttgart), Michael Sedlmair (University of Stuttgart), Tonja-Katrin Machulla (TU Chemnitz)},

url = {https://visvar.github.io/, Website},

doi = {10.1145/3544549.3585726},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {As social virtual reality (VR) becomes more popular, avatars are being designed with realistic behaviors incorporating non-verbal cues like eye contact. However, for people with visual impairments, perceiving eye contact during a conversation can be challenging. VR presents an opportunity to display eye contact cues in alternative ways, making them perceivable for people with visual impairments. We performed an exploratory study to gain initial insights on designing eye contact cues for people with visual impairments, including a focus group for a deeper understanding of the topic. We implemented eye contact cues via visual, auditory, and tactile sensory modalities in VR and tested these approaches with eleven participants with visual impairments and collected qualitative feedback. The results show that visual cues indicating the direction of gaze were preferred, but auditory and tactile cues were also prevalent as they do not superimpose additional visual information.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

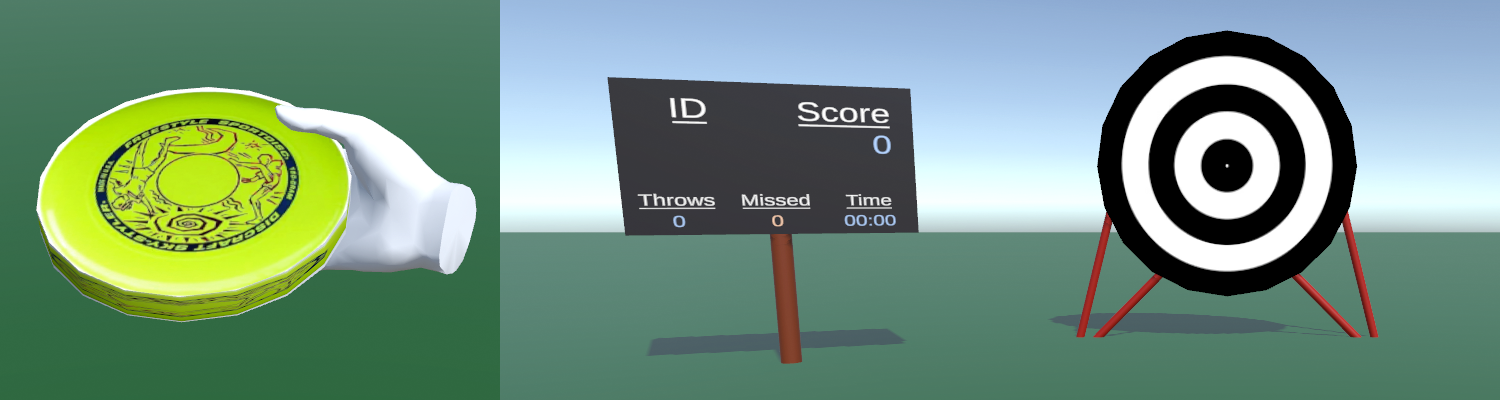

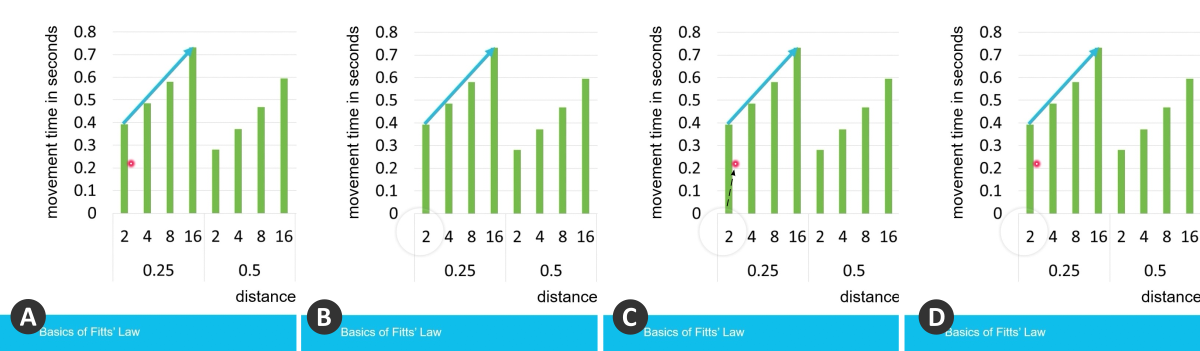

VRisbee: How Hand Visibility Impacts Throwing Accuracy and Experience in Virtual Reality

Malte Borgwardt (University of Bremen), Jonas Boueke (University of Bremen), María Fernanda Sanabria (University of Bremen), Michael Bonfert (Digital Media Lab, University of Bremen), Robert Porzel (Digital Media Lab, University of Bremen)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Borgwardt2023VRisbee,

title = {VRisbee: How Hand Visibility Impacts Throwing Accuracy and Experience in Virtual Reality},

author = {Malte Borgwardt (University of Bremen), Jonas Boueke (University of Bremen), María Fernanda Sanabria (University of Bremen), Michael Bonfert (Digital Media Lab, University of Bremen), Robert Porzel (Digital Media Lab, University of Bremen)},

url = {https://www.uni-bremen.de/dmlab, Website

https://twitter.com/dmlabbremen, twitter},

doi = {10.1145/3544549.3585868},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Hand interaction plays a key role in virtual reality (VR) sports. While in reality, athletes mostly rely on haptic perception when holding and throwing objects, these sensational cues can be missing or differ in virtual environments. In this work, we investigated how the visibility of a virtual hand can support players when throwing and what impact it has on the overall experience. We developed a Frisbee simulation in VR and asked 29 study participants to hit a target. We measured the throwing accuracy and self-reports of presence, disc control, and body ownership. The results show a subtle advantage of hand visibility in terms of accuracy. Visible hands further improved the subjective impression of realism, body ownership and subjective control over the disc.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}