We curated a list of this year’s publications, including links to social media, lab websites, and supplemental material. We have six journal articles, 67 full papers, 30 LBWs, eleven interactivities, one alt.chi paper, and one DC paper; we also lead three workshops and give two courses. Four papers won a best paper award, and seven received an honourable mention.

The papers for the contributing labs were also curated in a PDF booklet by Michael Chamunorwa, and it can be downloaded here: Booklet 2023

Your publication is missing? Send us an email: contact@germanhci.de

Demonstrating CleAR Sight: Transparent Interaction Panels for Augmented Reality

Wolfgang Büschel (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Katja Krug (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Konstantin Klamka (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden, Germany)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Bueschel2023CleARb,

title = {Demonstrating CleAR Sight: Transparent Interaction Panels for Augmented Reality},

author = {Wolfgang Büschel (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Katja Krug (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Konstantin Klamka (Interactive Media Lab Dresden, Technische Universität Dresden, Germany), Raimund Dachselt (Interactive Media Lab Dresden, Technische Universität Dresden, Germany)},

url = {https://imld.de/clear-sight, Website

https://twitter.com/imldresden, twitter

https://www.youtube.com/watch?v=-SffqiCInh0, Teaser Video

https://www.youtube.com/watch?v=tRR8htyfeX0, Full Video},

doi = {10.1145/3544549.3583891},

year = {2023},

date = {2023-04-23},

publisher = {ACM},

abstract = {In this work, we demonstrate our concepts for transparent interaction panels in augmented-reality environments. Mobile devices can support interaction with head-mounted displays by providing additional input channels, such as touch & pen input and spatial device input, and also an additional, personal display. However, occlusion of the physical context, other people, or the virtual content can be problematic. To address this, we previously introduced CleAR Sight, a concept and research platform for transparent interaction panels to support interaction in HMD-based mixed reality. Here, we will demonstrate the different interaction and visualization techniques supported in CleAR Sight that facilitate basic manipulation, data exploration, and sketching & annotation for various use cases such as 3D volume visualization, collaborative data analysis, and smart home control.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

Demonstrating Trusscillator: A System for Fabricating Human-Scale Human-Powered Oscillating Devices

Robert Kovacs (Hasso Plattner Institute, Potsdam, Germany), Lukas Rambold (Hasso Plattner Institute, Potsdam, Germany), Lukas Fritzsche (Hasso Plattner Institute, Potsdam, Germany), Dominik Meier (Hasso Plattner Institute, Potsdam, Germany), Jotaro Shigeyama (Hasso Plattner Institute, Potsdam, Germany), Shohei Katakura (Hasso Plattner Institute, Potsdam, Germany), Ran Zhang HCI (Hasso Plattner Institute, Potsdam, Germany), Patrick Baudisch (Hasso Plattner Institute, Potsdam, Germany)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Kovacs2023Trusscillator,

title = {Demonstrating Trusscillator: A System for Fabricating Human-Scale Human-Powered Oscillating Devices},

author = {Robert Kovacs (Hasso Plattner Institute, Potsdam, Germany), Lukas Rambold (Hasso Plattner Institute, Potsdam, Germany), Lukas Fritzsche (Hasso Plattner Institute, Potsdam, Germany), Dominik Meier (Hasso Plattner Institute, Potsdam, Germany), Jotaro Shigeyama (Hasso Plattner Institute, Potsdam, Germany), Shohei Katakura (Hasso Plattner Institute, Potsdam, Germany), Ran Zhang HCI (Hasso Plattner Institute, Potsdam, Germany), Patrick Baudisch (Hasso Plattner Institute, Potsdam, Germany)},

url = {https://hpi.de/baudisch/home.html, Website

https://www.youtube.com/@HassoPlattnerInstituteHCI, Youtube

https://www.youtube.com/watch?v=kyaL_wG0rJI, Teaser Video

https://www.youtube.com/watch?v=urW4iWfA-nQ&t=2s, Full Video},

doi = {10.1145/3472749.3474807},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

abstract = {Trusscillator is an end-to-end system that allows non-engineers to create human-scale human-powered devices that perform oscillatory movements, such as playground equipment, workout devices, and interactive kinetic installations. While recent research has been focusing on generating mechanisms that produce specific movement-path, without considering the required energy for the motion (kinematic approach), Trusscillator supports users in designing mechanisms that recycle energy in the system in the form of oscillating mechanisms (dynamic approach), specifically with the help of coil-springs. The presented system features a novel set of tools tailored for designing the dynamic experience of the motion. These tools allow designers to focus on user experience-specific aspects, such as motion range, tempo, and effort while abstracting away the underlying technicalities of eigenfrequencies, spring constants, and energy. Since the forces involved in the resulting devices can be high, Trusscillator helps users to fabricate from steel by picking out appropriate steel springs, generating part lists, and producing stencils and welding jigs that help weld with precision. To validate our system, we designed, built, and tested a series of unique playground equipment featuring 2-4 degrees of movement.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

Design and Fabrication of Body-Based Interfaces (Demo of Saarland HCI Lab)

Jürgen Steimle (Saarland University), Marie Muehlhaus (Saarland University), Madalina Nicolae (Saarland University), Aditya Nittala (Saarland University), Narges Pourjafarian (Saarland University), Adwait Sharma (Saarland University), Marc Teyssier (Saarland University), Marion Koelle (Saarland University), Bruno Fruchard (Saarland University), Paul Strohmeier (Saarland University)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Steimle2023Design,

title = {Design and Fabrication of Body-Based Interfaces (Demo of Saarland HCI Lab)},

author = {Jürgen Steimle (Saarland University), Marie Muehlhaus (Saarland University), Madalina Nicolae (Saarland University), Aditya Nittala (Saarland University), Narges Pourjafarian (Saarland University), Adwait Sharma (Saarland University), Marc Teyssier (Saarland University), Marion Koelle (Saarland University), Bruno Fruchard (Saarland University), Paul Strohmeier (Saarland University)},

url = {https://hci.cs.uni-saarland.de/, Website

https://twitter.com/HCI_Saarland, twitter},

doi = {10.1145/3544549.3583916},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

abstract = {This Interactivity shows live demonstrations of our lab's most recent work on body-based interfaces. The soft, curved and deformable surface of the human body presents unique opportunities and challenges for interfaces. New form factors, materials and interaction techniques are required that move past the conventional rigid, planar and rectangular devices and the corresponding interaction styles. We highlight three themes of challenges for soft body-based interfaces: 1) How to design interfaces that are optimized for the body? We demonstrate how interactive computational design tools can help novices and experts to create better device designs. 2) Once they are designed, how to physically prototype and fabricate soft interfaces? We show accessible DIY fabrication methods for soft devices made of functional materials that make use of biomaterials. 3) How to leverage the richness of interacting on the body? We demonstrate on-body and off-body interactions that leverage the soft properties of the interface. },

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

Interacting with Neural Radiance Fields in Immersive Virtual Reality

Ke Li (Universität Hamburg, Deutsches Elektronen-Synchrotron DESY), Tim Rolff (Universität Hamburg), Susanne Schmidt (Universität Hamburg), Reinhard Bacher (Deutsches Elektronen-Synchrotron DESY), Wim Leemans (Deutsches Elektronen-Synchrotron DESY), Frank Steinicke (Universität Hamburg)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Li2023neural,

title = {Interacting with Neural Radiance Fields in Immersive Virtual Reality},

author = {Ke Li (Universität Hamburg, Deutsches Elektronen-Synchrotron DESY), Tim Rolff (Universität Hamburg), Susanne Schmidt (Universität Hamburg), Reinhard Bacher (Deutsches Elektronen-Synchrotron DESY), Wim Leemans (Deutsches Elektronen-Synchrotron DESY), Frank Steinicke (Universität Hamburg)},

url = {https://www.inf.uni-hamburg.de/en/inst/ab/hci.html, Website},

doi = {10.1145/3544549.3583920},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Recent advancements in the neural radiance field (NeRF) technology, in particular its extension by instant neural graphics primitives, provide tremendous opportunities for the use of real-time immersive virtual reality (VR) applications. Moreover, the recent release of an immersive neural graphics primitives framework (immersive-ngp) brings real-time, stereoscopic NeRF rendering to the Unity game engine. However, the system and application research combining NeRF and human-computer interaction in VR is still at the very beginning. In this demo, we present multiple interactive system features for immersive-ngp with design principles focusing on improving the usability and interactivity of the framework for small to medium-scale NeRF scenes. We demonstrate that these new feature implementations such as exocentric manipulation, VR tunneling effects, and immersive scene appearance editing enable novel VR-NeRF experiences, for example, for customized experiences in inspecting a particle accelerator environment.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}


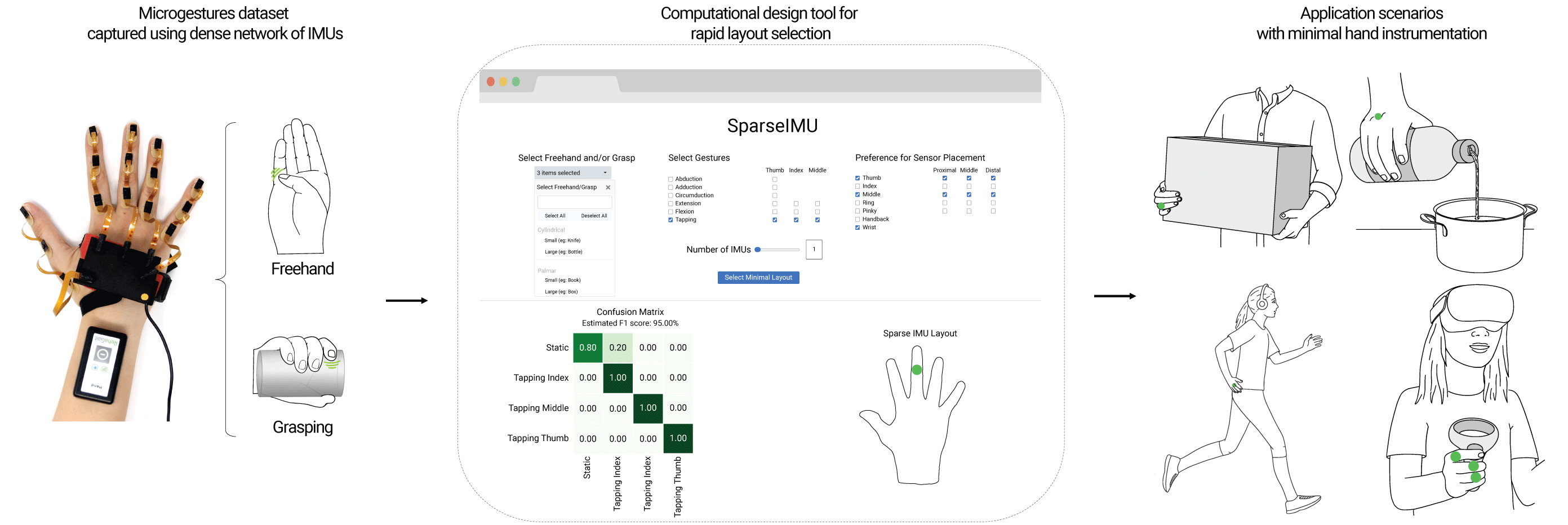

SparseIMU: Computational Design of Sparse IMU Layouts for Sensing Fine-Grained Finger Microgestures

Adwait Sharma (Saarland University, Saarland Informatics Campus, Germany), Christina Salchow-Hömmen (Department of Neurology, Charité-Universitätsmedizin Berlin, Germany & Control Systems Group, Technische Universität Berlin, Germany), Vimal Suresh Mollyn (Saarland University, Saarland Informatics Campus, Germany), Aditya Shekhar Nittala (Saarland University, Saarland Informatics Campus, Germany), Michael A. Hedderich (Saarland University, Saarland Informatics Campus, Germany), Marion Koelle (Saarland University, Saarland Informatics Campus, Germany), Thomas Seel (Control Systems Group, Technische Universität Berlin, Germany & Department of AI in Biomedical Engineering, FAU Erlangen-Nürnberg, Germany), Jürgen Steimle (Saarland University, Saarland Informatics Campus, Germany)

Abstract | Tags: Interactivity/Demonstration, Journal | Links:

@inproceedings{Sharma2023SparseIMU,

title = {SparseIMU: Computational Design of Sparse IMU Layouts for Sensing Fine-Grained Finger Microgestures},

author = {Adwait Sharma (Saarland University, Saarland Informatics Campus, Germany), Christina Salchow-Hömmen (Department of Neurology, Charité-Universitätsmedizin Berlin, Germany & Control Systems Group, Technische Universität Berlin, Germany), Vimal Suresh Mollyn (Saarland University, Saarland Informatics Campus, Germany), Aditya Shekhar Nittala (Saarland University, Saarland Informatics Campus, Germany), Michael A. Hedderich (Saarland University, Saarland Informatics Campus, Germany), Marion Koelle (Saarland University, Saarland Informatics Campus, Germany), Thomas Seel (Control Systems Group, Technische Universität Berlin, Germany & Department of AI in Biomedical Engineering, FAU Erlangen-Nürnberg, Germany), Jürgen Steimle (Saarland University, Saarland Informatics Campus, Germany)},

url = {https://hci.cs.uni-saarland.de/, Website

https://twitter.com/HCI_Saarland, twitter},

doi = {10.1145/3569894},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Gestural interaction with freehands and while grasping an everyday object enables always-available input. To sense such gestures, minimal instrumentation of the user's hand is desirable. However, the choice of an effective but minimal IMU layout remains challenging, due to the complexity of the multi-factorial space that comprises diverse finger gestures, objects and grasps. We present SparseIMU, a rapid method for selecting minimal inertial sensor-based layouts for effective gesture recognition. Furthermore, we contribute a computational tool to guide designers with optimal sensor placement. Our approach builds on an extensive microgestures dataset that we collected with a dense network of 17 inertial measurement units (IMUs). We performed a series of analyses, including an evaluation of the entire combinatorial space for freehand and grasping microgestures (393K layouts), and quantified the performance across different layout choices, revealing new gesture detection opportunities with IMUs. Finally, we demonstrate the versatility of our method with four scenarios.},

keywords = {Interactivity/Demonstration, Journal},

pubstate = {published},

tppubtype = {inproceedings}

}

The Aachen Lab Demo: From Fundamental Perception to Design Tools

Jan Borchers (RWTH Aachen University), Anke Brocker (RWTH Aachen University), Sebastian Hueber (RWTH Aachen University), Oliver Nowak (RWTH Aachen University), René Schäfer (RWTH Aachen University), Adrian Wagner (RWTH Aachen University), Paul Preuschoff (RWTH Aachen University), Lea Schirp (RWTH Aachen University)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Borchers2023Demo,

title = {The Aachen Lab Demo: From Fundamental Perception to Design Tools},

author = {Jan Borchers (RWTH Aachen University), Anke Brocker (RWTH Aachen University), Sebastian Hueber (RWTH Aachen University), Oliver Nowak (RWTH Aachen University), René Schäfer (RWTH Aachen University), Adrian Wagner (RWTH Aachen University), Paul Preuschoff (RWTH Aachen University), Lea Schirp (RWTH Aachen University)},

url = {https://hci.rwth-aachen.de, Website

https://youtu.be/t5btQe8GECs, Teaser Video

https://youtu.be/26fHE-nev4g, Full Video},

doi = {10.1145/3544549.3583937},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

abstract = {This year, the Media Computing Group at RWTH Aachen University turns 20. We celebrate this anniversary with a Lab Interactivity Demo at CHI that showcases not past achievements, but the range of currently ongoing research at the lab. It features hands-on interactive demos ranging from fundamental research in perception and cognition with traditional devices, such as experiencing input latency and Dark Patterns, to new input and output techniques beyond the desktop, such as user-perspective rendering in handheld AR and interaction with time-based media through conducting, to physical interfaces and the tools and processes for their design and fabrication, such as textile icons and sliders, soft robotics, and 3D printing fabric-covered objects.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

The Art of Privacy - A Theatrical Privacy Installation in Virtual Reality

Frederike Jung (OFFIS - Institute for Information Technology), Jonah-Noël Kaiser (University of Oldenburg), Kai von Holdt (OFFIS - Institute for Information Technology), Wilko Heuten (OFFIS - Institute for Information Technology), Jochen Meyer (OFFIS - Institute for Information Technology)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{jung2023privacy,

title = {The Art of Privacy - A Theatrical Privacy Installation in Virtual Reality},

author = {Frederike Jung (OFFIS - Institute for Information Technology), Jonah-Noël Kaiser (University of Oldenburg), Kai von Holdt (OFFIS - Institute for Information Technology), Wilko Heuten (OFFIS - Institute for Information Technology), Jochen Meyer (OFFIS - Institute for Information Technology)},

url = {https://bit.ly/3xmjnJF, Teaser Video},

doi = {10.1145/3544549.3583893},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {In a digitized world, matters of (online) privacy become increasingly immanent in people’s lives. Paradoxically, while consumers claim they care about what happens to their personal data, they undertake little to protect it. Awareness is a crucial step towards making informed privacy decisions. Therefore, we present the Art of Privacy, a Virtual Reality (VR) installation, generated in collaboration with theater artists. This Interactivity immerses viewers into the world of data and unfolds possible consequences of clicking ‘accept’, without reading the terms and conditions or privacy policies. With this work, we contribute an artistic VR installation, designed to shed new light on digital privacy, spark discussions and encourage self-reflection of personal privacy behaviors.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

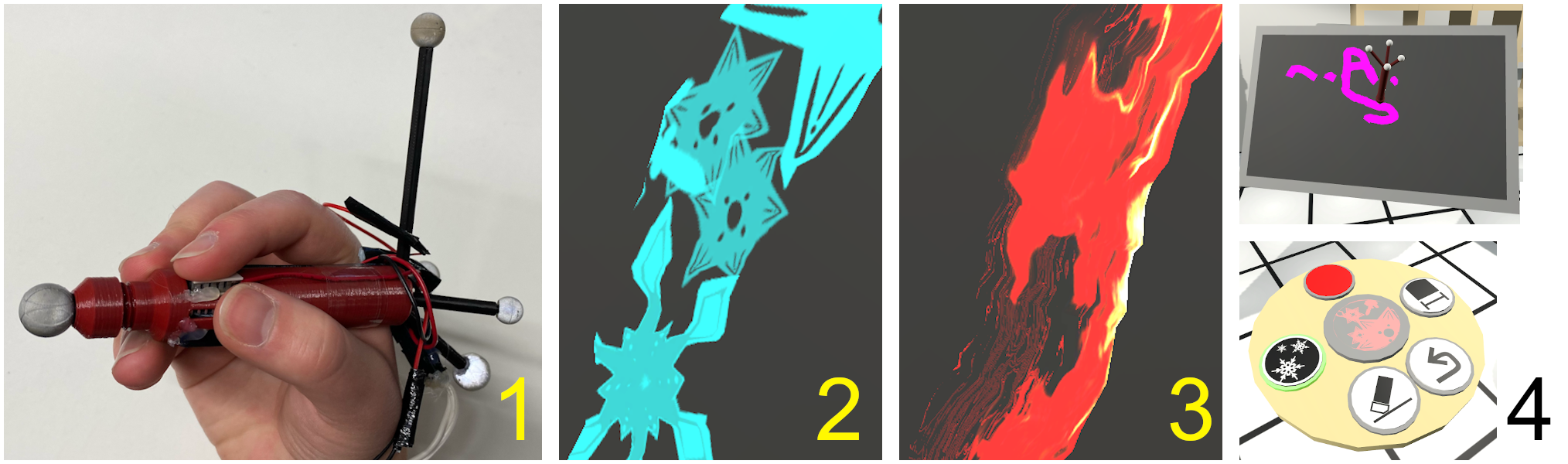

ThermalPen: Adding Thermal Haptic Feedback to 3D Sketching

Philipp Pascal Hoffmann (TU Darmstadt), Hesham Elsayed (TU Darmstadt), Max Mühlhäuser (TU Darmstadt), Rina Wehbe (Dalhousie University), Mayra D. Barrera Machuca (Dalhousie University)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Hoffmann2023ThemalPen,

title = {ThermalPen: Adding Thermal Haptic Feedback to 3D Sketching},

author = {Philipp Pascal Hoffmann (TU Darmstadt), Hesham Elsayed (TU Darmstadt), Max Mühlhäuser (TU Darmstadt), Rina Wehbe (Dalhousie University), Mayra D. Barrera Machuca (Dalhousie University)},

url = {https://www.teamdarmstadt.de/, Website

https://www.facebook.com/teamdarmstadt/, Facebook

https://www.instagram.com/teamdarmstadt/, instagram

https://www.youtube.com/user/TKLabs, Youtube

https://drive.google.com/file/d/1K7Ad7xZRQ1zHB16rlXldPJ2i3QvPV9VA/view, Teaser Video

https://drive.google.com/file/d/16toXSiT1crEn7oOHhmoGg2gN7ncZyMGt/view, Full Video},

doi = {10.1145/3544549.3583901},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

abstract = {Sketching in virtual 3D environments has enabled new forms of artistic expression and a variety of novel design use-cases. However, the lack of haptic feedback proves to be one of the main challenges in this field. While prior work has investigated vibrotactile and force-feedback devices, this paper proposes the addition of thermal feedback. We present ThermalPen, a novel pen for 3D sketching that associates the texture and colour of strokes with different thermal properties. For example, a fire texture elicits an increase in temperature, while an ice texture causes a temperature drop in the pen. Our goal with ThermalPen is to enhance the 3D sketching experience and allow users to use this tool to increase their creativity while sketching. We plan on evaluating the influence of thermal feedback on the 3D sketching experience, with a focus on user creativity in the future.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

Towards More Inclusive and Accessible Virtual Reality: Conducting Large-scale Studies in the Wild

Thereza Schmelter (Berlin University of Applied Sciences and Technologies), Lucie Kruse (Human-Computer Interaction, Universität Hamburg), Sukran Karaosmanoglu (Human-Computer Interaction, Universität Hamburg), Sebastian Rings (Human-Computer Interaction, Universität Hamburg), Frank Steinicke (Human-Computer Interaction, Universität Hamburg), Kristian Hildebrand (Berlin University of Applied Sciences and Technologies)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Schmelter2023Virtual,

title = {Towards More Inclusive and Accessible Virtual Reality: Conducting Large-scale Studies in the Wild},

author = {Thereza Schmelter (Berlin University of Applied Sciences and Technologies), Lucie Kruse (Human-Computer Interaction, Universität Hamburg), Sukran Karaosmanoglu (Human-Computer Interaction, Universität Hamburg), Sebastian Rings (Human-Computer Interaction, Universität Hamburg), Frank Steinicke (Human-Computer Interaction, Universität Hamburg), Kristian Hildebrand (Berlin University of Applied Sciences and Technologies)},

url = {http://hildebrand.beuth-hochschule.de/, Website

https://www.inf.uni-hamburg.de/en/inst/ab/hci.html, Website

https://twitter.com/uhhhci, twitter

},

doi = {10.1145/3544549.3583888},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {In this work, we demonstrate a mobile laboratory with virtual and augmented reality (VR/AR) technology housed in a truck that enables large-scale VR/AR studies and therapies in real-world environments. This project aims to improve accessibility and inclusiveness in human-computer interaction (HCI) methods, providing a platform for researchers, medical professionals, and patients to utilize laboratory hardware and space. The mobile laboratory is equipped with motion tracking technology and other hardware to allow for a range of user groups to participate in VR studies and therapies that could otherwise never partake or benefit from these services. Our findings, applications, and experiences will be presented at the CHI interactivity track, with the goal of fostering future research opportunities.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

Versatile Immersive Virtual and Augmented Tangible OR - Using VR, AR and Tangibles to Support Surgical Practice

Anke V. Reinschluessel (Digital Media Lab, University of Bremen), Thomas Muender (Digital Media Lab, University of Bremen), Roland Fischer (CGVR, University of Bremen), Valentin Kraft (Fraunhofer MEVIS, Bremen), Verena N. Uslar (University Hospital for Visceral Surgery, University of Oldenburg), Dirk Weyhe (University Hospital for Visceral Surgery, Pius-Hospital Oldenburg), Andrea Schenk (Fraunhofer Institute for Digital Medicine MEVIS), Gabriel Zachmann (Mathematics and Computer Science, University of Bremen), Tanja Döring (Digital Media Lab / HCI Group, University of Bremen), Rainer Malaka (Digital Media Lab, University of Bremen)

Abstract | Tags: Interactivity/Demonstration | Links:

@inproceedings{Reinschluessel2023Versatile,

title = {Versatile Immersive Virtual and Augmented Tangible OR - Using VR, AR and Tangibles to Support Surgical Practice },

author = {Anke V. Reinschluessel (Digital Media Lab, University of Bremen), Thomas Muender (Digital Media Lab, University of Bremen), Roland Fischer (CGVR, University of Bremen), Valentin Kraft (Fraunhofer MEVIS, Bremen), Verena N. Uslar (University Hospital for Visceral Surgery, University of Oldenburg), Dirk Weyhe (University Hospital for Visceral Surgery, Pius-Hospital Oldenburg), Andrea Schenk (Fraunhofer Institute for Digital Medicine MEVIS), Gabriel Zachmann (Mathematics and Computer Science, University of Bremen), Tanja Döring (Digital Media Lab / HCI Group, University of Bremen), Rainer Malaka (Digital Media Lab, University of Bremen)

},

url = {https://www.uni-bremen.de/dmlab/, Website

https://twitter.com/dmlabbremen, twitter

https://youtu.be/NlxaAM7Gexw, Video Figure

},

doi = {10.1145/3544549.3583895},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

abstract = {Immersive technologies such as virtual reality (VR) and augmented reality (AR), in combination with advanced image segmentation and visualization, have considerable potential to improve and support a surgeon's work. We demonstrate a solution to help surgeons plan and perform surgeries and educate future medical staff using VR, AR, and tangibles. A VR planning tool improves spatial understanding of an individual's anatomy, a tangible organ model allows for intuitive interaction, and AR gives contactless access to medical images in the operating room. Additionally, we present improvements regarding point cloud representations to provide detailed visual information to a remote expert and about the remote expert. Therefore, we give an exemplary setup showing how recent interaction techniques and modalities benefit an area that can positively change the life of patients.},

keywords = {Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}

What's That Shape? Investigating Eyes-Free Recognition of Textile Icons

René Schäfer (RWTH Aachen University), Oliver Nowak (RWTH Aachen University), Lovis Suchmann (RWTH Aachen University), Sören Schröder (RWTH Aachen University), Jan Borchers (RWTH Aachen University)

Abstract | Tags: Full Paper, Interactivity/Demonstration | Links:

@inproceedings{Schaefer2023Shape,

title = {What's That Shape? Investigating Eyes-Free Recognition of Textile Icons},

author = {René Schäfer (RWTH Aachen University), Oliver Nowak (RWTH Aachen University), Lovis Suchmann (RWTH Aachen University), Sören Schröder (RWTH Aachen University), Jan Borchers (RWTH Aachen University)

},

url = {https://hci.rwth-aachen.de, Website

https://youtu.be/7EgvDO3Ny44, Teaser Video

https://youtu.be/PFCvn-KhxbQ, Full Video},

doi = {10.1145/3544548.3580920},

year = {2023},

date = {2023-04-28},

urldate = {2023-04-28},

publisher = {ACM},

abstract = {Textile surfaces, such as on sofas, cushions, and clothes, offer promising alternative locations to place controls for digital devices. Textiles are a natural, even abundant part of living spaces, and support unobtrusive input. While there is solid work on technical implementations of textile interfaces, there is little guidance regarding their design—especially their haptic cues, which are essential for eyes-free use. In particular, icons easily communicate information visually in a compact fashion, but it is unclear how to adapt them to the haptics-centric textile interface experience. Therefore, we investigated the recognizability of 84 haptic icons on fabrics. Each combines a shape, height profile (raised, recessed, or flat), and affected area (filled or outline). Our participants clearly preferred raised icons, and identified them with the highest accuracy and at competitive speeds. We also provide insights into icons that look very different, but are hard to distinguish via touch alone.},

keywords = {Full Paper, Interactivity/Demonstration},

pubstate = {published},

tppubtype = {inproceedings}

}