We curated a list of this year’s publications — including links to social media, lab websites, and supplemental material. We have 58 full papers, 13 LBWs, one DC paper, and one Student Game Competition, and we lead five workshops. Two papers were awarded a best paper award, and four papers received an honourable mention.

Is your publication missing? Send us an email: contact@germanhci.de

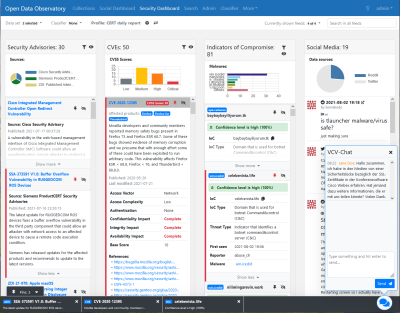

‘We Do Not Have the Capacity to Monitor All Media’: A Design Case Study on Cyber Situational Awareness in Computer Emergency Response Teams

Marc-André Kaufhold (TU Darmstadt), Thea Riebe (TU Darmstadt), Markus Bayer (TU Darmstadt), Christian Reuter (TU Darmstadt)

Abstract | Tags: Best Paper, Full Paper | Links:

@inproceedings{Kaufhold2024DoNot,

title = {‘We Do Not Have the Capacity to Monitor All Media’: A Design Case Study on Cyber Situational Awareness in Computer Emergency Response Teams},

author = {Marc-André Kaufhold (TU Darmstadt), Thea Riebe (TU Darmstadt), Markus Bayer (TU Darmstadt), Christian Reuter (TU Darmstadt)},

url = {www.peasec.de, website},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

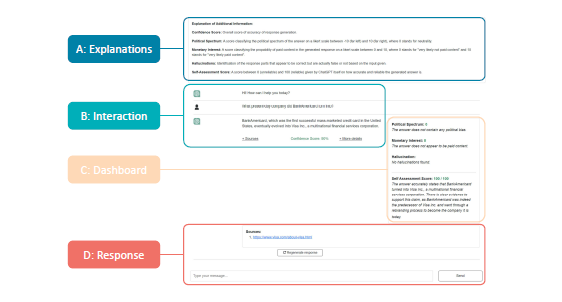

abstract = {Computer Emergency Response Teams (CERTs) have been established in the public sector globally to provide advisory, preventive and reactive cybersecurity services for government agencies, citizens, and businesses. Nevertheless, their responsibility of monitoring, analyzing, and communicating cyber threats and security vulnerabilities have become increasingly challenging due to the growing volume and varying quality of information disseminated through public and social channels. Based on a design case study conducted from 2021 to 2023, this paper combines three iterations of expert interviews (N=25), design workshops (N=4) and cognitive walkthroughs (N=25) to design an automated, cross-platform and real-time cybersecurity dashboard. By adopting the notion of cyber situational awareness, the study further extracts user requirements and design heuristics for enhanced threat intelligence and mission awareness in CERTs, discussing the aspects of source integration, data management, customizable visualization, relationship awareness, information assessment, software integration, (inter-)organizational collaboration, and communication of stakeholder warnings.},

keywords = {Best Paper, Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

"AI enhances our performance, I have no doubt this one will do the same": The Placebo effect is robust to negative descriptions of AI

Agnes Mercedes Kloft (Aalto University), Robin Welsch (Aalto University), Thomas Kosch (HU Berlin), Steeven Villa (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{Kloft2024AiEnhances,

title = {"AI enhances our performance, I have no doubt this one will do the same": The Placebo effect is robust to negative descriptions of AI},

author = {Agnes Mercedes Kloft (Aalto University), Robin Welsch (Aalto University), Thomas Kosch (HU Berlin), Steeven Villa (LMU Munich)},

doi = {10.1145/3613904.3642633},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {effects. In a letter discrimination task, we informed participants that an AI would either increase or decrease their performance by adapting the interface, but in reality, no AI was present in any condition. A Bayesian analysis showed that participants had high expectations and performed descriptively better irrespective of the AI description when a sham-AI was present. Using cognitive modeling, we could trace this advantage back to participants gathering more information. A replication study verified that negative AI descriptions do not alter expectations, suggesting that performance expectations with AI are biased and robust to negative verbal descriptions. We discuss the impact of user expectations on AI interactions and evaluation.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

"If the Machine Is As Good As Me, Then What Use Am I?" – How the Use of ChatGPT Changes Young Professionals' Perception of Productivity and Accomplishment

Charlotte Kobiella (Center for Digital Technology and Management (CDTM)), Yarhy Said Flores López (Center for Digital Technology and Management (CDTM)), Franz Waltenberger (Center for Digital Technology and Management (CDTM), Technical University of Munich), Fiona Draxler (University of Mannheim, LMU Munich), Albrecht Schmidt (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{Kobiella2024IfMachine,

title = {"If the Machine Is As Good As Me, Then What Use Am I?" – How the Use of ChatGPT Changes Young Professionals' Perception of Productivity and Accomplishment},

author = {Charlotte Kobiella (Center for Digital Technology and Management (CDTM)), Yarhy Said Flores López (Center for Digital Technology and Management (CDTM)), Franz Waltenberger (Center for Digital Technology and Management (CDTM), Technical University of Munich), Fiona Draxler (University of Mannheim, LMU Munich), Albrecht Schmidt (LMU Munich)},

url = {http://www.medien.ifi.lmu.de, website

https://twitter.com/mimuc, social media},

doi = {10.1145/3613904.3641964},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Large language models (LLMs) like ChatGPT have been widely adopted in work contexts. We explore the impact of ChatGPT on young professionals' perception of productivity and sense of accomplishment. We collected LLMs' main use cases in knowledge work through a preliminary study, which served as the basis for a two-week diary study with 21 young professionals reflecting on their ChatGPT use. Findings indicate that ChatGPT enhanced some participants' perceptions of productivity and accomplishment by enabling greater creative output and satisfaction from efficient tool utilization. Others experienced decreased perceived productivity and accomplishment, driven by a diminished sense of ownership, perceived lack of challenge, and mediocre results. We found that the suitability of task delegation to ChatGPT varies strongly depending on the task nature. It's especially suitable for comprehending broad subject domains, generating creative solutions, and uncovering new information. It's less suitable for research tasks due to hallucinations, which necessitate extensive validation.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

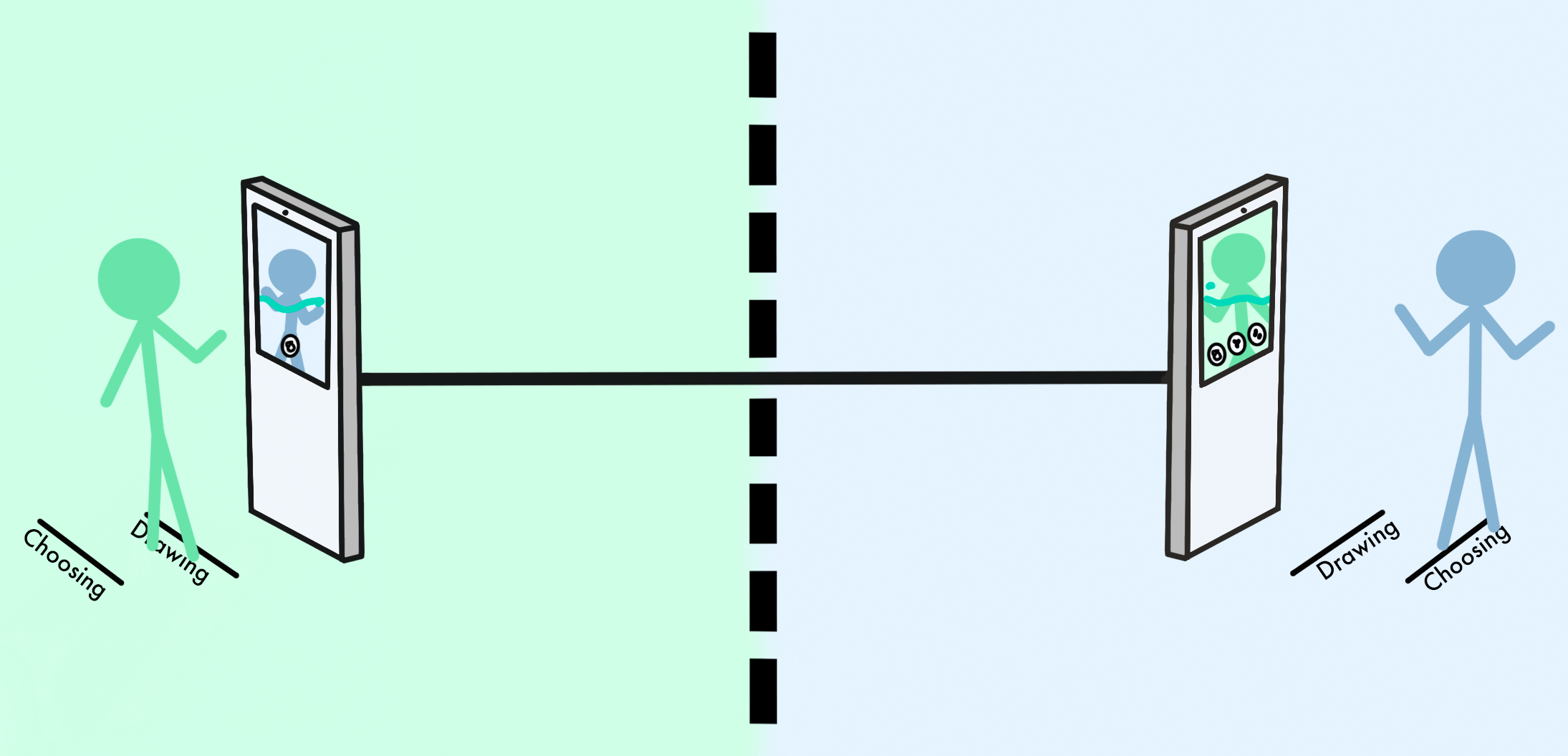

“Tele” Me More: Using Telepresence Charades to Connect Strangers and Exhibits in Different Museums

Clara Sayffaerth (LMU Munich), Julian Rasch (LMU Munich), Florian Müller (LMU Munich)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Sayffaerth2024TeleMeMoreb,

title = {“Tele” Me More: Using Telepresence Charades to Connect Strangers and Exhibits in Different Museums},

author = {Clara Sayffaerth (LMU Munich), Julian Rasch (LMU Munich), Florian Müller (LMU Munich)},

url = {http://www.medien.ifi.lmu.de, website

https://twitter.com/mimuc, twitter},

doi = {10.1145/3613905.3650834},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {The museum is changing from a place of passive consumption to a place of interactive experiences, opening up new ways of engaging with exhibits and others. As a promising direction, this paper explores the potential of telepresence stations in the museum context to enhance social connectedness among visitors over distance. Emphasizing the significance of social exchange, our research focuses on studying telepresence to foster interactions between strangers, share knowledge, and promote social connectedness. To do so, we first observe exhibitions and then interview individual visitors of a technical museum about their experiences and needs. Based on the results, we design appropriate voiceless and touchless communication channels and test them in a study. The findings of our in-situ user study with 24 visitors unfamiliar with each other in the museum provide insights into behaviors and perceptions, contributing valuable knowledge on seamlessly integrating telepresence technology in exhibitions, with a focus on enhancing learning, social connections, and the museum experience in general.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

"I Know What You Mean": Context-Aware Recognition to Enhance Speech-Based Games

Nima Zargham (Digital Media Lab, University of Bremen), Mohamed Lamine Fetni (Digital Media Lab, University of Bremen), Laura Spillner (Digital Media Lab, University of Bremen), Thomas Münder (Digital Media Lab, University of Bremen), Rainer Malaka (Digital Media Lab, University of Bremen)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{Zargham2024KnowWhat,

title = {"I Know What You Mean": Context-Aware Recognition to Enhance Speech-Based Games},

author = {Nima Zargham (Digital Media Lab, University of Bremen), Mohamed Lamine Fetni (Digital Media Lab, University of Bremen), Laura Spillner (Digital Media Lab, University of Bremen), Thomas Münder (Digital Media Lab, University of Bremen), Rainer Malaka (Digital Media Lab, University of Bremen)},

url = {https://www.uni-bremen.de/dmlab, website},

doi = {10.1145/3613904.3642426},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Recent advances in language processing and speech recognition open up a large opportunity for video game companies to embrace voice interaction as an intuitive feature and appealing game mechanics. However, speech-based systems still remain liable to recognition errors. These add a layer of challenge on top of the game's existing obstacles, preventing players from reaching their goals and thus often resulting in player frustration. This work investigates a novel method called context-aware speech recognition, where the game environment and actions are used as supplementary information to enhance recognition in a speech-based game. In a between-subject user study (N=40), we compared our proposed method with a standard method in which recognition is based only on the voice input without taking context into account. Our results indicate that our proposed method could improve the player experience and the usability of the speech system.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

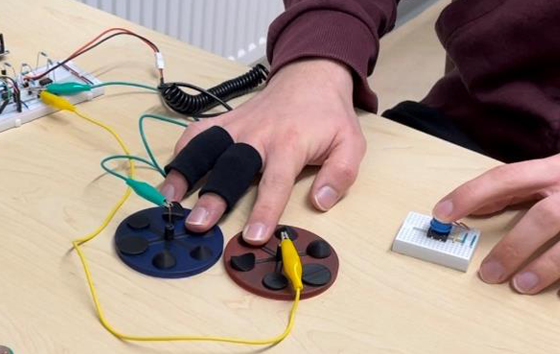

3DA: Assessing 3D-Printed Electrodes for Measuring Electrodermal Activity

Martin Schmitz (Saarland University), Dominik Schön (Technical University of Darmstadt), Henning Klagemann (Technical University of Darmstadt), Thomas Kosch (HU Berlin)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Schmitz20243Da,

title = {3DA: Assessing 3D-Printed Electrodes for Measuring Electrodermal Activity},

author = {Martin Schmitz (Saarland University), Dominik Schön (Technical University of Darmstadt), Henning Klagemann (Technical University of Darmstadt), Thomas Kosch (HU Berlin)},

url = {https://hcistudio.org, website},

doi = {10.1145/3613905.3650938},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Electrodermal activity (EDA) reflects changes in skin conductance, which are closely tied to human psychophysiological states. For example, EDA sensors can assess stress, cognitive workload, arousal, or other measures tied to the sympathetic nervous system for interactive human-centered applications. Yet, current limitations involve the complex attachment and proper skin contact with EDA sensors. This paper explores the concept of 3D printing electrodes for EDA measurements, integrating sensors into arbitrary 3D-printed objects, alleviating the need for complex assembly and attachment. We examine the adaptation of conventional EDA circuits for 3D-printed electrodes, assessing different electrode shapes and their impact on the sensing accuracy. A user study (N=6) revealed that 3D-printed electrodes can measure EDA with similar accuracy, suggesting larger contact areas for improved precision. We derive design implications to facilitate the integration of EDA sensors into 3D-printed devices to foster diverse integration into everyday objects for prototyping physiological interfaces.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

A Design Space for Intelligent and Interactive Writing Assistants

Mina Lee (Microsoft Research, United States), Katy Ilonka Gero (Harvard University, United States), John Joon Young Chung (Midjourney, United States), Simon Buckingham Shum (Connected Intelligence Centre, University of Technology Sydney, Australia), Vipul Raheja (Grammarly, United States), Hua Shen (University of Michigan, United States), Subhashini Venugopalan (Google, United States), Dr. Thiemo Wambsganss (Bern University of Applied Sciences, Switzerland), David Zhou (University of Illinois Urbana-Champaign, United States), Emad A. Alghamdi (King Abdulaziz University, Saudi Arabia), Tal August (University of Washington, United States), Avinash Bhat (McGill University, Canada), Madiha Zahrah (Cornell Tech, United States), Senjuti Dutta (University of Tennessee, United States), Jin L.C. Guo (McGill University, Canada), Md Naimul Hoque (University of Maryland, United States), Yewon Kim (KAIST, Republic of Korea), Simon Knight (University of Technology Sydney, Australia), Seyed Parsa Neshaei (EPFL, Switzerland), Dr Antonette Shibani (University of Technology Sydney, Australia), Disha Shrivastava (Google DeepMind, United Kingdom), Lila Shroff (Stanford University, United States), Agnia Sergeyuk (JetBrains Research, Serbia and Montenegro), Jessi Stark (University of Toronto, Canada), Sarah Sterman (University of Illinois, United States), Sitong Wang (Columbia University, United States), Antoine Bosselut (EPFL, Switzerland), Daniel Buschek (University of Bayreuth, Germany), Joseph Chee Chang (Allen Institute for AI, United States), Sherol Chen (Google, United States), Max Kreminski (Midjourney, United States), Joonsuk Park (University of Richmond, United States), Roy Pea (Stanford University, United States), Eugenia H Rho (Virginia Tech, United States), Zejiang Shen (Massachusetts Institute of Technology, United States), Pao Siangliulue (B12, United States)

Abstract | Tags: Full Paper | Links:

@inproceedings{Lee2024DesignSpace,

title = {A Design Space for Intelligent and Interactive Writing Assistants},

author = {Mina Lee (Microsoft Research, United States),

Katy Ilonka Gero (Harvard University, United States),

John Joon Young Chung (Midjourney, United States),

Simon Buckingham Shum (Connected Intelligence Centre, University of Technology Sydney, Australia),

Vipul Raheja (Grammarly, United States),

Hua Shen (University of Michigan, United States),

Subhashini Venugopalan (Google, United States),

Dr. Thiemo Wambsganss (Bern University of Applied Sciences, Switzerland),

David Zhou (University of Illinois Urbana-Champaign, United States),

Emad A. Alghamdi (King Abdulaziz University, Saudi Arabia),

Tal August (University of Washington, United States),

Avinash Bhat (McGill University, Canada),

Madiha Zahrah (Cornell Tech, United States),

Senjuti Dutta (University of Tennessee, United States),

Jin L.C. Guo (McGill University, Canada),

Md Naimul Hoque (University of Maryland, United States),

Yewon Kim (KAIST, Republic of Korea),

Simon Knight (University of Technology Sydney, Australia),

Seyed Parsa Neshaei (EPFL, Switzerland),

Dr Antonette Shibani (University of Technology Sydney, Australia),

Disha Shrivastava (Google DeepMind, United Kingdom),

Lila Shroff (Stanford University, United States),

Agnia Sergeyuk (JetBrains Research, Serbia and Montenegro),

Jessi Stark (University of Toronto, Canada),

Sarah Sterman (University of Illinois, United States),

Sitong Wang (Columbia University, United States),

Antoine Bosselut (EPFL, Switzerland),

Daniel Buschek (University of Bayreuth, Germany),

Joseph Chee Chang (Allen Institute for AI, United States),

Sherol Chen (Google, United States),

Max Kreminski (Midjourney, United States),

Joonsuk Park (University of Richmond, United States),

Roy Pea (Stanford University, United States),

Eugenia H Rho (Virginia Tech, United States),

Zejiang Shen (Massachusetts Institute of Technology, United States),

Pao Siangliulue (B12, United States)},

doi = {10.1145/3613904.3642697},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {In our era of rapid technological advancement, the research landscape for writing assistants has become increasingly fragmented across various research communities. We seek to address this challenge by proposing a design space as a structured way to examine and explore the multidimensional space of intelligent and interactive writing assistants. Through community collaboration, we explore five aspects of writing assistants: task, user, technology, interaction, and ecosystem. Within each aspect, we define dimensions and codes by systematically reviewing 115 papers while leveraging the expertise of researchers in various disciplines. Our design space aims to offer researchers and designers a practical tool to navigate, comprehend, and compare the various possibilities of writing assistants, and aid in the design of new writing assistants.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A Longitudinal In-the-Wild Investigation of Design Frictions to Prevent Smartphone Overuse

Luke Haliburton (LMU Munich), David J Grüning (Heidelberg University), Frederik Riedel (riedel.wtf GmbH), Albrecht Schmidt (LMU Munich), Nađa Terzimehić (LMU Munich)

Abstract | Tags: Full Paper | Links:

@inproceedings{Haliburton2024LongitudinalInthewild,

title = {A Longitudinal In-the-Wild Investigation of Design Frictions to Prevent Smartphone Overuse},

author = {Luke Haliburton (LMU Munich), David J Grüning (Heidelberg University), Frederik Riedel (riedel.wtf GmbH), Albrecht Schmidt (LMU Munich), Nađa Terzimehić (LMU Munich)},

url = {https://www.medien.ifi.lmu.de/, website

https://twitter.com/mimuc, social media

https://www.instagram.com/mediagroup.lmu/, social media},

doi = {10.1145/3613904.3642370},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Smartphone overuse is hyper-prevalent in society, and developing tools to prevent this overuse has become a focus of HCI. However, there is a lack of work investigating smartphone overuse interventions over the long term. We collected usage data from N=1,039 users of one sec over an average of 13.4 weeks and qualitative insights from 249 of the users through an online survey. We found that users overwhelmingly choose to target Social Media apps. We found that the short design frictions introduced by one sec effectively reduce how often users attempt to open target apps and lead to more intentional app-openings over time. Additionally, we found that users take periodic breaks from one sec interventions, and quickly rebound from a pattern of overuse when returning from breaks. Overall, we contribute findings from a longitudinal investigation of design frictions in the wild and identify usage patterns from real users in practice.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A Meta-Bayesian Approach for Rapid Online Parametric Optimization for Wrist-based Interactions

Yi-Chi Liao (Aalto University & Saarland University), Ruta Desai (Fundamental AI Research, Meta), Alec M. Pierce (Reality Labs Research, Meta), Krista Taylor (Reality Labs Research, Meta), Hrvoje Benko (Reality Labs Research, Meta), Tanya Jonker (Reality Labs Research, Meta), Aakar Gupta (Reality Labs Research, Meta & Fujitsu Research America)

Abstract | Tags: Full Paper | Links:

@inproceedings{Liao2024MetabayesianApproach,

title = {A Meta-Bayesian Approach for Rapid Online Parametric Optimization for Wrist-based Interactions},

author = {Yi-Chi Liao (Aalto University & Saarland University), Ruta Desai (Fundamental AI Research, Meta), Alec M. Pierce (Reality Labs Research, Meta), Krista Taylor (Reality Labs Research, Meta), Hrvoje Benko (Reality Labs Research, Meta), Tanya Jonker (Reality Labs Research, Meta), Aakar Gupta (Reality Labs Research, Meta & Fujitsu Research America)},

url = {https://hci.cs.uni-saarland.de/, website

https://cix.cs.uni-saarland.de/, website},

doi = {10.1145/3613904.3642071},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {-},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

A Systematic Review of Ability-diverse Collaboration through Ability-based Lens in HCI

Lan Xiao (Global Disability Innovation Hub, University College London), Maryam Bandukda (Global Disability Innovation Hub, University College London), Katrin Angerbauer (VISUS, University of Stuttgart), Weiyue Lin (Peking University), Tigmanshu Bhatnagar (Global Disability Innovation Hub, University College London), Michael Sedlmair (VISUS, University of Stuttgart), Catherine Holloway (Global Disability Innovation Hub, University College London)

Abstract | Tags: Full Paper | Links:

@inproceedings{Xiao2024SystematicReview,

title = {A Systematic Review of Ability-diverse Collaboration through Ability-based Lens in HCI},

author = {Lan Xiao (Global Disability Innovation Hub, University College London), Maryam Bandukda (Global Disability Innovation Hub, University College London), Katrin Angerbauer (VISUS, University of Stuttgart), Weiyue Lin (Peking University), Tigmanshu Bhatnagar (Global Disability Innovation Hub, University College London), Michael Sedlmair (VISUS, University of Stuttgart), Catherine Holloway (Global Disability Innovation Hub, University College London)},

doi = {10.1145/3613904.3641930},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {In a world where diversity is increasingly recognised and celebrated, it is important for HCI to embrace the evolving methods and theories for technologies to reflect the diversity of its users and be ability-centric. Interdependence Theory, an example of this evolution, highlights the interpersonal relationships between humans and technologies and how technologies should be designed to meet shared goals and outcomes for people, regardless of their abilities. This necessitates a contemporary understanding of "ability-diverse collaboration," which motivated this review. In this review, we offer an analysis of 117 papers sourced from the ACM Digital Library spanning the last two decades. We contribute (1) a unified taxonomy and the Ability-Diverse Collaboration Framework, (2) a reflective discussion and mapping of the current design space, and (3) future research opportunities and challenges. Finally, we have released our data and analysis tool to encourage the HCI research community to contribute to this ongoing effort.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

An Ontology of Dark Patterns Knowledge: Foundations, Definitions, and a Pathway for Shared Knowledge-Building

Colin M. Gray (Indiana University, Bloomington), Cristiana Teixeira Santos (Utrecht University), Nataliia Bielova (Inria Sophia Antipolis), Thomas Mildner (University of Bremen)

Abstract | Tags: Full Paper | Links:

@inproceedings{gray2024ontology,

title = {An Ontology of Dark Patterns Knowledge: Foundations, Definitions, and a Pathway for Shared Knowledge-Building},

author = {Colin M. Gray (Indiana University, Bloomington), Cristiana Teixeira Santos (Utrecht University), Nataliia Bielova (Inria Sophia Antipolis), Thomas Mildner (University of Bremen)},

url = {https://www.uni-bremen.de/dmlab/},

doi = {10.1145/3613904.3642436},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Deceptive and coercive design practices are increasingly used by companies to extract profit, harvest data, and limit consumer choice. Dark patterns represent the most common contemporary amalgamation of these problematic practices, connecting designers, technologists, scholars, regulators, and legal professionals in transdisciplinary dialogue. However, a lack of universally accepted definitions across the academic, legislative, practitioner, and regulatory space has likely limited the impact that scholarship on dark patterns might have in supporting sanctions and evolved design practices. In this paper, we seek to support the development of a shared language of dark patterns, harmonizing ten existing regulatory and academic taxonomies of dark patterns and proposing a three-level ontology with standardized definitions for 64 synthesized dark pattern types across low-, meso-, and high-level patterns. We illustrate how this ontology can support translational research and regulatory action, including transdisciplinary pathways to extend our initial types through new empirical work across application and technology domains.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Assessing User Apprehensions About Mixed Reality Artifacts and Applications: The Mixed Reality Concerns (MRC) Questionnaire

Christopher Katins (HU Berlin), Paweł W. Woźniak (Chalmers University of Technology), Aodi Chen (HU Berlin), Ihsan Tumay (HU Berlin), Luu Viet Trinh Le (HU Berlin), John Uschold (HU Berlin), Thomas Kosch (HU Berlin)

Abstract | Tags: Full Paper | Links:

@inproceedings{Katins2024AssessingUser,

title = {Assessing User Apprehensions About Mixed Reality Artifacts and Applications: The Mixed Reality Concerns (MRC) Questionnaire},

author = {Christopher Katins (HU Berlin), Paweł W. Woźniak (Chalmers University of Technology), Aodi Chen (HU Berlin), Ihsan Tumay (HU Berlin), Luu Viet Trinh Le (HU Berlin), John Uschold (HU Berlin), Thomas Kosch (HU Berlin)},

url = {hcistudio.org, website},

doi = {10.1145/3613904.3642631},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Current research in Mixed Reality (MR) presents a wide range of novel use cases for blending virtual elements with the real world. This yet-to-be-ubiquitous technology challenges how users currently work and interact with digital content. While offering many potential advantages, MR technologies introduce new security, safety, and privacy challenges. Thus, it is relevant to understand users' apprehensions towards MR technologies, ranging from security concerns to social acceptance. To address this challenge, we present the Mixed Reality Concerns (MRC) Questionnaire, designed to assess users' concerns towards MR artifacts and applications systematically. The development followed a structured process considering previous work, expert interviews, iterative refinements, and confirmatory tests to analytically validate the questionnaire. The MRC Questionnaire offers a new method of assessing users' critical opinions to compare and assess novel MR artifacts and applications regarding security, privacy, social implications, and trust.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Augmented Reality Cues Facilitate Task Resumption after Interruptions in Computer-Based and Physical Tasks

Kilian L. Bahnsen (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg), Lucas Tiemann (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg), Lucas Plabst (Chair for Human-Computer Interaction, Julius-Maximilians-Universität Würzburg), Tobias Grundgeiger (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg)

Abstract | Tags: Full Paper | Links:

@inproceedings{Bahnsen2024AugmentedReality,

title = {Augmented Reality Cues Facilitate Task Resumption after Interruptions in Computer-Based and Physical Tasks},

author = {Kilian L. Bahnsen (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg), Lucas Tiemann (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg), Lucas Plabst (Chair for Human-Computer Interaction, Julius-Maximilians-Universität Würzburg), Tobias Grundgeiger (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg)},

url = {https://www.mcm.uni-wuerzburg.de/psyergo/, website},

doi = {10.1145/3613904.3642666},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Many work domains include numerous interruptions, which can contribute to errors. We investigated the potential of augmented reality (AR) cues to facilitate primary task resumption after interruptions of varying lengths. Experiment 1 (N = 83) involved a computer-based primary task with a red AR arrow at the to-be-resumed task step which was placed via a gesture by the participants or automatically. Compared to no cue, both cues significantly reduced the resumption lag (i.e., the time between the end of the interruption and the resumption of the primary task) following long but not short interruptions. Experiment 2 (N = 38) involved a tangible sorting task, utilizing only the automatic cue. The AR cue facilitated task resumption compared to no cue after both short and long interruptions. We demonstrated the potential of AR cues in mitigating the negative effects of interruptions and make suggestions for integrating AR technologies for task resumption.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

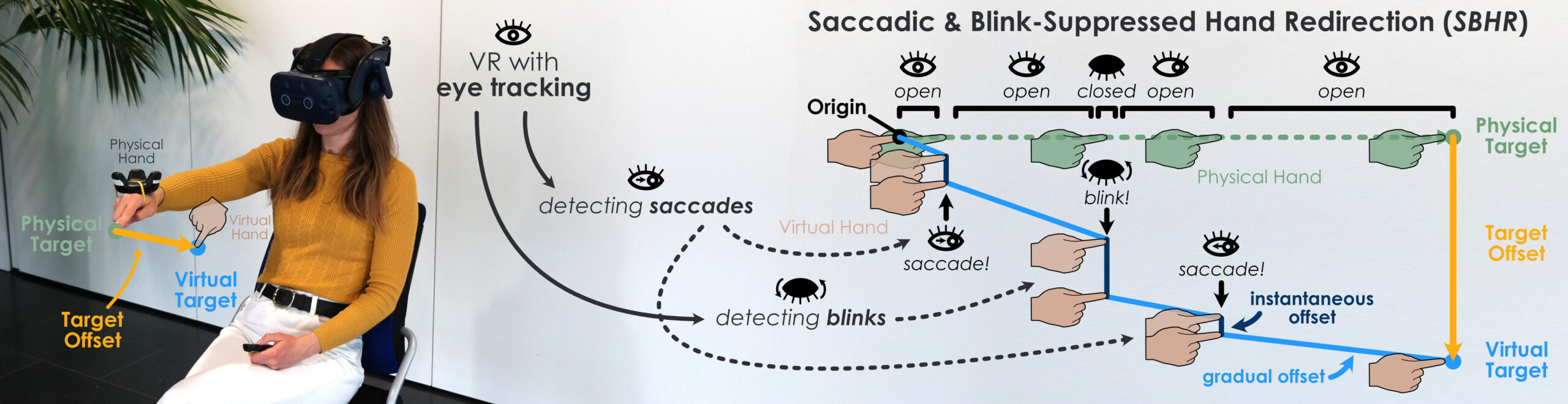

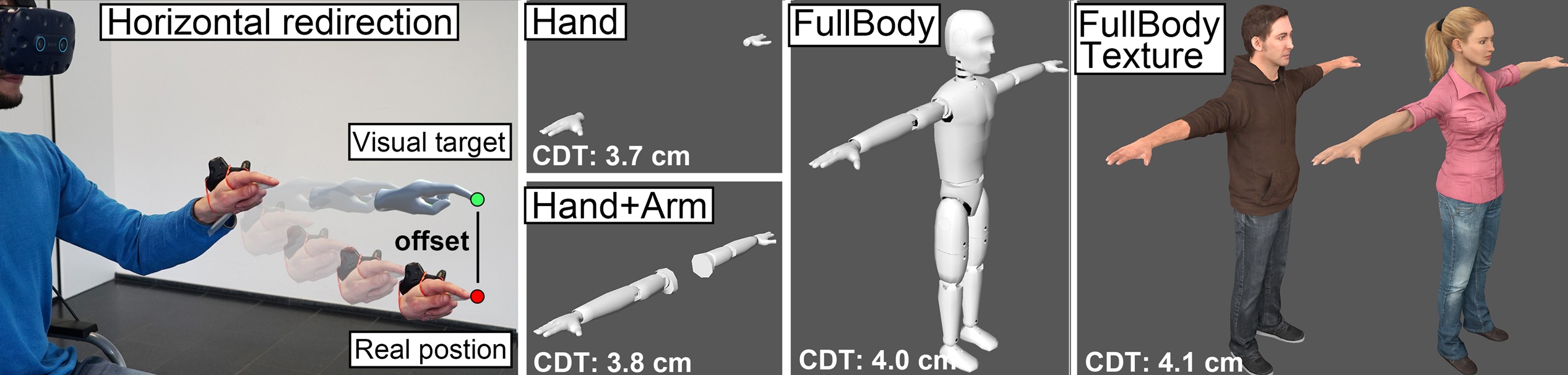

Beyond the Blink: Investigating Combined Saccadic & Blink-Suppressed Hand Redirection in Virtual Reality

André Zenner (Saarland University & DFKI), Chiara Karr (Saarland University), Martin Feick (DFKI & Saarland University), Oscar Ariza (Universität Hamburg), Antonio Krüger (Saarland University & DFKI)

Abstract | Tags: Full Paper | Links:

@inproceedings{Zenner2024BeyondBlink,

title = {Beyond the Blink: Investigating Combined Saccadic & Blink-Suppressed Hand Redirection in Virtual Reality},

author = {André Zenner (Saarland University & DFKI), Chiara Karr (Saarland University), Martin Feick (DFKI & Saarland University), Oscar Ariza (Universität Hamburg), Antonio Krüger (Saarland University & DFKI)},

url = {https://umtl.cs.uni-saarland.de/, website},

doi = {10.1145/3613904.3642073},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {In pursuit of hand redirection techniques that are ever more tailored to human perception, we propose the first algorithm for hand redirection in virtual reality that makes use of saccades, i.e., fast ballistic eye movements that are accompanied by the perceptual phenomenon of change blindness. Our technique combines the previously proposed approaches of gradual hand warping and blink-suppressed hand redirection with the novel approach of saccadic redirection in one unified yet simple algorithm. We compare three variants of the proposed Saccadic & Blink-Suppressed Hand Redirection (SBHR) technique with the conventional approach to redirection in a psychophysical study (N=25). Our results highlight the great potential of our proposed technique for comfortable redirection by showing that SBHR allows for significantly greater magnitudes of unnoticeable redirection while being perceived as significantly less intrusive and less noticeable than commonly employed techniques that only use gradual hand warping.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Born to Run, Programmed to Play: Mapping the Extended Reality Exergames Landscape

Sukran Karaosmanoglu (Human-Computer Interaction, Universität Hamburg), Sebastian Cmentowski (HCI Games Group, Stratford School of Interaction Design and Business, University of Waterloo), Lennart E. Nacke (HCI Games Group, Stratford School of Interaction Design and Business, University of Waterloo), Frank Steinicke (Human-Computer Interaction, Universität Hamburg)

Abstract | Tags: Full Paper | Links:

@inproceedings{Karaosmanoglu2024BornRun,

title = {Born to Run, Programmed to Play: Mapping the Extended Reality Exergames Landscape},

author = {Sukran Karaosmanoglu (Human-Computer Interaction, Universität Hamburg), Sebastian Cmentowski (HCI Games Group, Stratford School of Interaction Design and Business, University of Waterloo), Lennart E. Nacke (HCI Games Group, Stratford School of Interaction Design and Business, University of Waterloo), Frank Steinicke (Human-Computer Interaction, Universität Hamburg)},

url = {https://www.inf.uni-hamburg.de/en/inst/ab/hci, website

https://twitter.com/uhhhci, social media},

doi = {10.1145/3613904.3642124},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Many people struggle to exercise regularly, raising the risk of serious health-related issues. Extended reality (XR) exergames address these hurdles by combining physical exercises with enjoyable, immersive gameplay. While a growing body of research explores XR exergames, no previous review has structured this rapidly expanding research landscape. We conducted a scoping review of the current state of XR exergame research to (i) provide a structured overview, (ii) highlight trends, and (iii) uncover knowledge gaps. After identifying 1318 papers in human-computer interaction and medical databases, we ultimately included 186 papers in our analysis. We provide a quantitative and qualitative summary of XR exergame research, showing current trends and potential future considerations. Finally, we provide a taxonomy of XR exergames to help future design and methodological investigation and reporting.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Closing the Loop: The Effects of Biofeedback Awareness on Physiological Stress Response Using Electrodermal Activity in Virtual Reality

Jessica Sehrt (Frankfurt University of Applied Sciences), Ugur Yilmaz (Frankfurt University of Applied Sciences), Thomas Kosch (HU Berlin), Valentin Schwind (Frankfurt University of Applied Sciences)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Sehrt2024ClosingLoop,

title = {Closing the Loop: The Effects of Biofeedback Awareness on Physiological Stress Response Using Electrodermal Activity in Virtual Reality},

author = {Jessica Sehrt (Frankfurt University of Applied Sciences), Ugur Yilmaz (Frankfurt University of Applied Sciences), Thomas Kosch (HU Berlin), Valentin Schwind (Frankfurt University of Applied Sciences)},

doi = {10.1145/3613905.3650830},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {This paper presents the results of a user study examining the impact of biofeedback awareness on the effectiveness of stress management, utilizing Electrodermal Activity (EDA) as the primary metric within an immersive Virtual Reality (VR) environment. Employing a between-subjects design (N=30), we probed whether informing individuals of their capacity to manipulate the VR environment's weather impacts their physiological stress responses. Our results indicate lower EDA levels in participants who were informed of their biofeedback control than in those who were not. Interestingly, the participants who were informed about the control over the environment also manifested variations in their EDA responses. Participants who were not informed of their ability to control the weather showed decreased EDA measures until the end of the biofeedback phase. This study enhances our comprehension of the significance of awareness in biofeedback in immersive settings and its potential to augment stress management techniques.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Controlling the Rooms: How People Prefer Using Gestures to Control Their Smart Homes

Masoumehsadat Hosseini (University of Oldenburg), Heiko Müller (University of Oldenburg), Susanne Boll (University of Oldenburg)

Abstract | Tags: Full Paper | Links:

@inproceedings{Masoumehsadat2024controlling,

title = {Controlling the Rooms: How People Prefer Using Gestures to Control Their Smart Homes},

author = {Masoumehsadat Hosseini (University of Oldenburg), Heiko Müller (University of Oldenburg), Susanne Boll (University of Oldenburg)},

url = {https://hci.uni-oldenburg.de/, website

https://twitter.com/hcioldenburg, social media},

doi = {10.1145/3613904.3642687},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Gesture interactions have become ubiquitous, and with increasingly reliable sensing technology we can anticipate their use in everyday environments such as smart homes. Gestures must meet users' needs and constraints in diverse scenarios to gain widespread acceptance. Although mid-air gestures have been proposed in various user contexts, it is still unclear to what extent users want to integrate them into different scenarios in their smart homes, along with the motivations driving this desire. Furthermore, it is uncertain whether users will remain consistent in their suggestions when transitioning to alternative scenarios within a smart home.

This study contributes methodologically by adapting a bottom-up frame-based design process. We offer insights into preferred devices and commands in different smart home scenarios. Using our results, we can assist in designing gestures in the smart home that are consistent with individual needs across devices and scenarios, while maximizing the reuse and transferability of gestural knowledge.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Cross-Country Examination of People’s Experience with Targeted Advertising on Social Media

Smirity Kaushik (School of Information Sciences, University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Tanusree Sharma (Information Sciences, University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Yaman Yu (School of Information Sciences, University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Amna F Ali (UIUC, Champaign, Illinois, United States), Yang Wang (University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Yixin Zou (Max Planck Institute for Security and Privacy, Bochum, Germany)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Kaushik2024CrosscountryExamination,

title = {Cross-Country Examination of People’s Experience with Targeted Advertising on Social Media},

author = {Smirity Kaushik (School of Information Sciences, University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Tanusree Sharma (Information Sciences, University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Yaman Yu (School of Information Sciences, University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Amna F Ali (UIUC, Champaign, Illinois, United States), Yang Wang (University of Illinois at Urbana-Champaign, Champaign, Illinois, United States), Yixin Zou (Max Planck Institute for Security and Privacy, Bochum, Germany)},

url = {https://yixinzou.github.io/, website

https://youtu.be/aJ2xmuFk0DM, full video

https://twitter.com/yixinzouu, social media},

doi = {10.1145/3613905.3650780},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Social media effectively connects businesses with diverse audiences. However, research related to targeted advertising and social media is rarely done beyond Western contexts. Through an online survey with 412 participants in the United States and three South Asian countries (Bangladesh, India, and Pakistan), we found significant differences in participants' ad preferences, perceptions, and coping behaviors that correlate with individuals' country of origin, culture, religion, and other demographic factors. For instance, Indian and Pakistani participants preferred video ads to those in the US. Participants relying on themselves (horizontal individualism) also expressed more concerns about the security and privacy issues of targeted ads. Muslim participants were more likely to hide ads as a coping strategy than other religious groups. Our findings highlight that people's experiences with targeted advertising are rooted in their national, cultural, and religious backgrounds—an important lesson for the design of ad explanations and settings, user education, and platform governance.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

CUI@CHI 2024: Building Trust in CUIs—From Design to Deployment

Smit Desai (School of Information Sciences, University of Illinois), Christina Ziying Wei (University of Toronto), Jaisie Sin (University of British Columbia), Mateusz Dubiel (University of Luxembourg), Nima Zargham (Digital Media Lab, University of Bremen), Shashank Ahire (Leibniz University Hannover), Martin Porcheron (Bold Insight, London), Anastasia Kuzminykh (University of Toronto), Minha Lee (Eindhoven University of Technology), Heloisa Candello (IBM Research), Joel E Fischer (Mixed Reality Laboratory, University of Nottingham), Cosmin Munteanu (University of Waterloo), Benjamin R. Cowan (University College Dublin)

Abstract | Tags: Workshop | Links:

@inproceedings{Desai2024Cuichi2024,

title = {CUI@CHI 2024: Building Trust in CUIs—From Design to Deployment},

author = {Smit Desai (School of Information Sciences, University of Illinois), Christina Ziying Wei (University of Toronto), Jaisie Sin (University of British Columbia), Mateusz Dubiel (University of Luxembourg), Nima Zargham (Digital Media Lab, University of Bremen), Shashank Ahire (Leibniz University Hannover), Martin Porcheron (Bold Insight, London), Anastasia Kuzminykh (University of Toronto), Minha Lee (Eindhoven University of Technology), Heloisa Candello (IBM Research), Joel E Fischer (Mixed Reality Laboratory, University of Nottingham), Cosmin Munteanu (University of Waterloo), Benjamin R. Cowan (University College Dublin)},

url = {https://www.uni-bremen.de/dmlab, website},

doi = {10.1145/3613905.3636287},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Conversational user interfaces (CUIs) have become an everyday technology for people the world over, as well as a booming area of research. Advances in voice synthesis and the emergence of chatbots powered by large language models (LLMs), notably ChatGPT, have pushed CUIs to the forefront of human-computer interaction (HCI) research and practice. Now that these technologies enable an elemental level of usability and user experience (UX), we must turn our attention to higher-order human factors: trust and reliance. In this workshop, we aim to bring together a multidisciplinary group of researchers and practitioners invested in the next phase of CUI design. Through keynotes, presentations, and breakout sessions, we will share our knowledge, identify cutting-edge resources, and fortify an international network of CUI scholars. In particular, we will engage with the complexity of trust and reliance as attitudes and behaviours that emerge when people interact with conversational agents.},

keywords = {Workshop},

pubstate = {published},

tppubtype = {inproceedings}

}

Decide Yourself or Delegate - User Preferences Regarding the Autonomy of Personal Privacy Assistants in Private IoT-Equipped Environments

Karola Marky (Ruhr University Bochum), Alina Stöver (Technical University of Darmstadt), Sarah Prange (University of the Bundeswehr), Kira Bleck (Technical University of Darmstadt), Paul Gerber (Technical University of Darmstadt), Verena Zimmermann (ETH Zürich), Florian Müller (LMU Munich), Florian Alt (University of the Bundeswehr), Max Mühlhäuser (Technical University of Darmstadt)

Abstract | Tags: Full Paper | Links:

@inproceedings{Marky2024DecideYourself,

title = {Decide Yourself or Delegate - User Preferences Regarding the Autonomy of Personal Privacy Assistants in Private IoT-Equipped Environments},

author = {Karola Marky (Ruhr University Bochum), Alina Stöver (Technical University of Darmstadt), Sarah Prange (University of the Bundeswehr), Kira Bleck (Technical University of Darmstadt), Paul Gerber (Technical University of Darmstadt), Verena Zimmermann (ETH Zürich), Florian Müller (LMU Munich), Florian Alt (University of the Bundeswehr), Max Mühlhäuser (Technical University of Darmstadt)},

url = {https://informatik.rub.de/digisoul/personen/marky/, website},

doi = {10.1145/3613904.3642591},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Personalized privacy assistants (PPAs) communicate privacy-related decisions of their users to Internet of Things (IoT) devices. There are different ways to implement PPAs by varying the degree of autonomy or decision model. This paper investigates user perceptions of PPA autonomy models and privacy profiles – archetypes of individual privacy needs – as a basis for PPA decisions in private environments (e.g., a friend's home). We first explore how privacy profiles can be assigned to users and propose an assignment method. Next, we investigate user perceptions in 18 usage scenarios with varying contexts, data types and number of decisions in a study with 1126 participants. We found considerable differences between the profiles in settings with few decisions. If the number of decisions gets high (> 1/h), participants exclusively preferred fully autonomous PPAs. Finally, we discuss implications and recommendations for designing scalable PPAs that serve as privacy interfaces for future IoT devices.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Design Space of Visual Feedforward And Corrective Feedback in XR-Based Motion Guidance Systems

Xingyao Yu (VISUS, University of Stuttgart), Benjamin Lee (VISUS, University of Stuttgart), Michael Sedlmair (VISUS, University of Stuttgart)

Abstract | Tags: Full Paper | Links:

@inproceedings{Yu2024DesignSpace,

title = {Design Space of Visual Feedforward And Corrective Feedback in XR-Based Motion Guidance Systems},

author = {Xingyao Yu (VISUS, University of Stuttgart), Benjamin Lee (VISUS, University of Stuttgart), Michael Sedlmair (VISUS, University of Stuttgart)},

url = {https://www.visus.uni-stuttgart.de/en/, website},

doi = {10.1145/3613904.3642143},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Extended reality (XR) technologies are highly suited to assisting individuals in learning motor skills and movements, referred to as motion guidance. In motion guidance, the "feedforward" provides instructional cues of the motions that are to be performed, whereas the "feedback" provides cues which help correct mistakes and minimize errors. Designing synergistic feedforward and feedback is vital to providing an effective learning experience, but this interplay between the two has not yet been adequately explored. Based on a survey of the literature, we propose design spaces for both motion feedforward and corrective feedback in XR, and describe the interaction effects between them. We identify common design approaches of XR-based motion guidance found in our literature corpus, and discuss them through the lens of our design dimensions. We then discuss additional contextual factors and considerations that influence this design, together with future research opportunities of motion guidance in XR.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

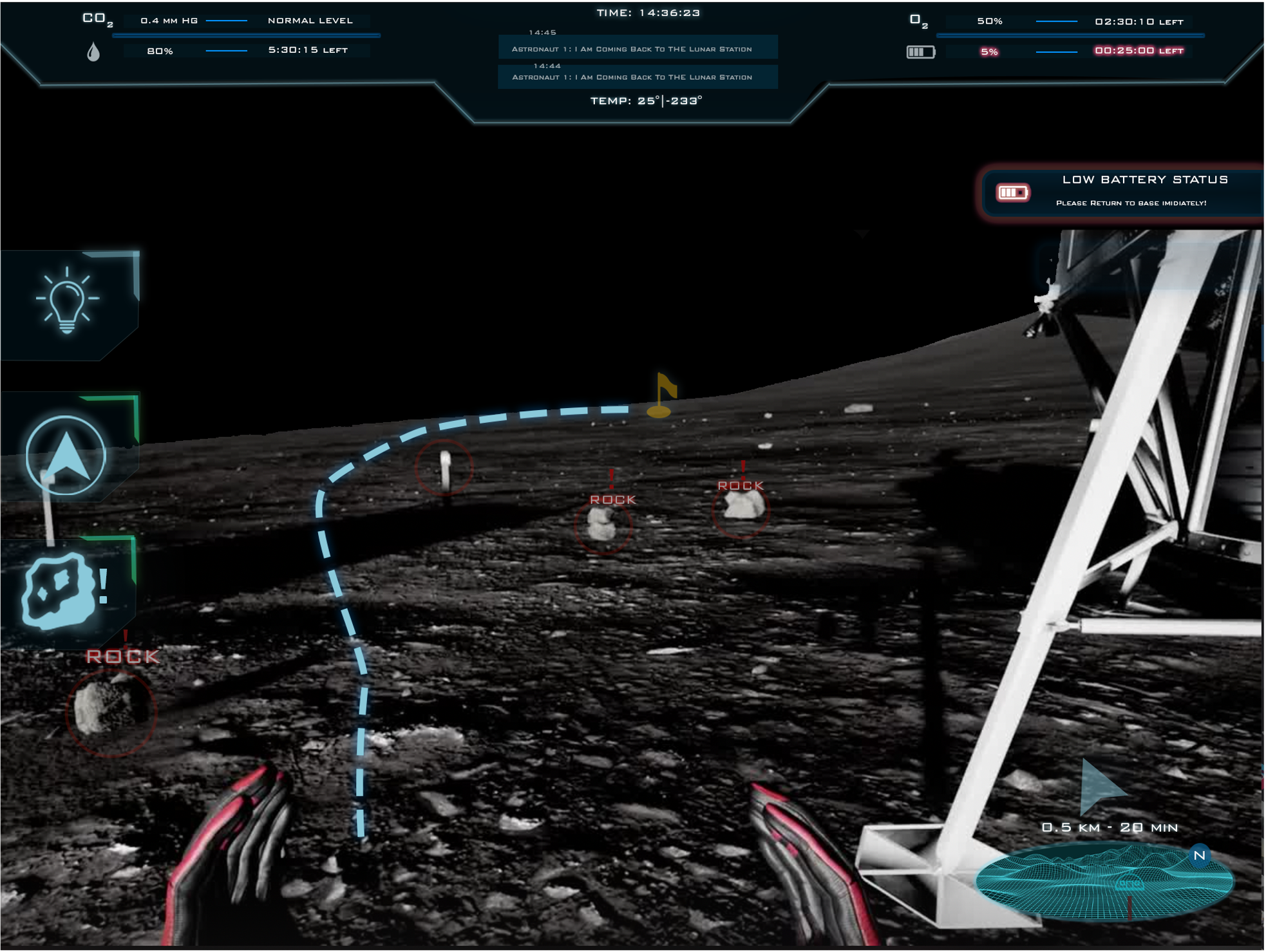

Designing for Human Operations on the Moon: Challenges and Opportunities of Navigational HUD Interfaces

Leonie Bensch (German Aerospace Center), Tommy Nilsson (European Space Agency), Jan Wulkop (German Aerospace Center), Paul de Medeiros (European Space Agency), Nicolas Daniel Herzberger (RWTH Aachen), Michael Preutenborbeck (RWTH Aachen), Andreas Gerndt (German Aerospace Center), Frank Flemisch (RWTH Aachen), Florian Dufresne (Arts et Métiers Institute of Technology), Georgia Albuquerque (German Aerospace Center), Aidan Cowley (European Space Agency)

Abstract | Tags: Full Paper | Links:

@inproceedings{Bensch2024DesigningHuman,

title = {Designing for Human Operations on the Moon: Challenges and Opportunities of Navigational HUD Interfaces},

author = {Leonie Bensch (German Aerospace Center), Tommy Nilsson (European Space Agency), Jan Wulkop (German Aerospace Center), Paul de Medeiros (European Space Agency), Nicolas Daniel Herzberger (RWTH Aachen), Michael Preutenborbeck (RWTH Aachen), Andreas Gerndt (German Aerospace Center), Frank Flemisch (RWTH Aachen), Florian Dufresne (Arts et Métiers Institute of Technology), Georgia Albuquerque (German Aerospace Center), Aidan Cowley (European Space Agency)},

url = {https://www.dlr.de/sc/en/desktopdefault.aspx/tabid-1200/1659_read-3101/, website

https://drive.google.com/file/d/1SqQRF5YqhHsy0J9vFQsiaN3bHOP_p9i_/view?usp=sharing, teaser video

https://drive.google.com/file/d/1Q1CMuReXr9lPCTuSyxbNvg4GLVeAeJTC/view?usp=sharing, full video},

doi = {10.1145/3613904.3642859},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Future crewed missions to the Moon will face significant environmental and operational challenges, posing risks to the safety and performance of astronauts navigating its inhospitable surface. Whilst head-up displays (HUDs) have proven effective in providing intuitive navigational support on Earth, the design of novel human-spaceflight solutions typically relies on costly and time-consuming analogue deployments, leaving the potential use of lunar HUDs largely under-explored. This paper explores an alternative approach by simulating navigational HUD concepts in a high-fidelity Virtual Reality (VR) representation of the lunar environment. In evaluating these concepts with astronauts and other aerospace experts (n=25), our mixed methods study demonstrates the efficacy of simulated analogues in facilitating rapid design assessments of early-stage HUD solutions. We illustrate this by elaborating key design challenges and guidelines for future lunar HUDs. In reflecting on the limitations of our approach, we propose directions for future design exploration of human-machine interfaces for the Moon.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Development and Validation of the Collision Anxiety Questionnaire for VR Applications

Patrizia Ring (Universität Duisburg-Essen), Julius Tietenberg (Universität Duisburg-Essen), Katharina Emmerich (Universität Duisburg-Essen), Maic Masuch (Universität Duisburg-Essen)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{Ring2024DevelopmentValidation,

title = {Development and Validation of the Collision Anxiety Questionnaire for VR Applications},

author = {Patrizia Ring (Universität Duisburg-Essen), Julius Tietenberg (Universität Duisburg-Essen), Katharina Emmerich (Universität Duisburg-Essen), Maic Masuch (Universität Duisburg-Essen)},

url = {https://www.ecg.uni-due.de/home/home.html, website

https://www.instagram.com/ecg.ude/, social media},

doi = {10.1145/3613904.3642408},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {The high degree of sensory immersion is a distinctive feature of head-mounted virtual reality (VR) systems. While the visual detachment from the real world enables unique immersive experiences, users risk collisions due to their inability to perceive physical obstacles in their environment. Even the mere anticipation of a collision can adversely affect the overall experience and erode user confidence in the VR system. However, there are currently no valid tools for assessing collision anxiety. We present the iterative development and validation of the Collision Anxiety Questionnaire (CAQ), involving an exploratory and a confirmatory factor analysis with a total of 159 participants. The results provide evidence for both discriminant and convergent validity and a good model fit for the final CAQ with three subscales: general collision anxiety, orientation, and interpersonal collision anxiety. By utilizing the CAQ, researchers can examine potential confounding effects of collision anxiety and evaluate methods for its mitigation.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

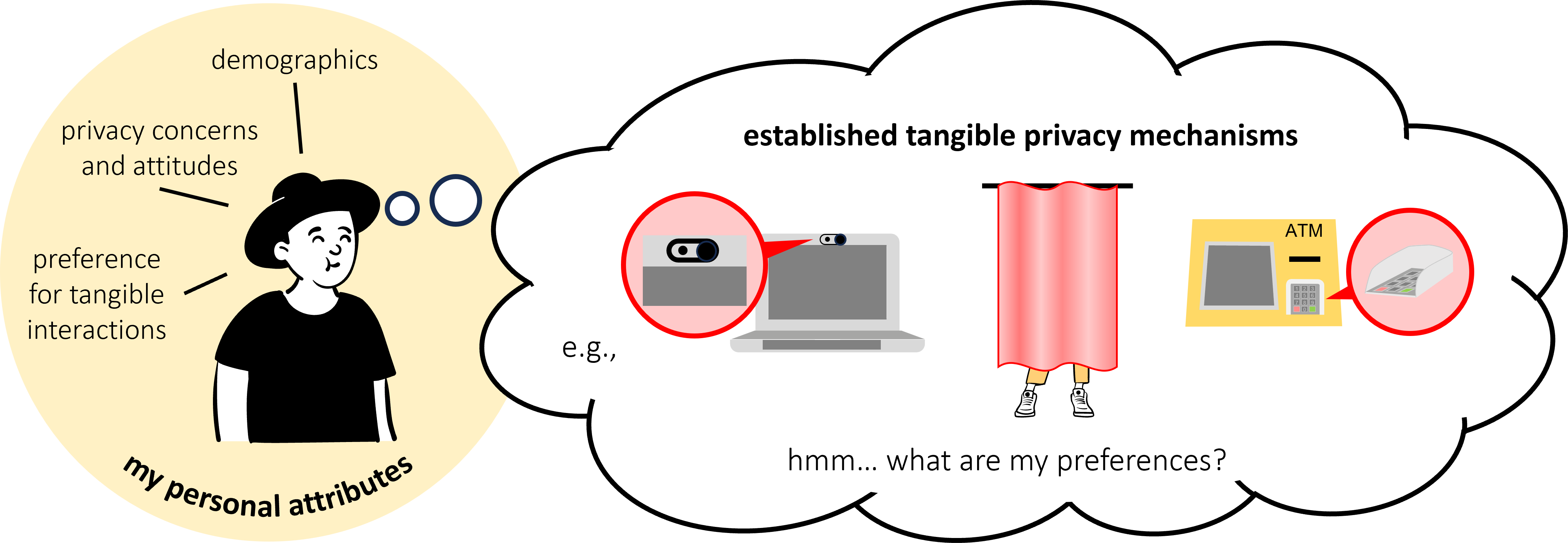

Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms

Sarah Delgado Rodriguez (University of the Bundeswehr Munich), Priyasha Chatterjee (Ruhr-University Bochum), Anh Dao Phuong (LMU Munich), Florian Alt (University of the Bundeswehr Munich), Karola Marky (Ruhr-University Bochum)

Abstract | Tags: Full Paper, Honorable Mention | Links:

@inproceedings{Rodriguez2024DoNeed,

title = {Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms},

author = {Sarah Delgado Rodriguez (University of the Bundeswehr Munich), Priyasha Chatterjee (Ruhr-University Bochum), Anh Dao Phuong (LMU Munich), Florian Alt (University of the Bundeswehr Munich), Karola Marky (Ruhr-University Bochum)},

url = {https://www.unibw.de/usable-security-and-privacy, website

https://youtu.be/EpthiyvegeI, teaser video

https://youtu.be/NkgLIxpVils, full video

https://twitter.com/USECUnibwM, social media},

doi = {10.1145/3613904.3642863},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {This paper explores how personal attributes, such as age, gender, technological expertise, or "need for touch", correlate with people's preferences for properties of tangible privacy protection mechanisms, for example, physically covering a camera. For this, we conducted an online survey (N = 444) where we captured participants' preferences of eight established tangible privacy mechanisms well-known in daily life, their perceptions of effective privacy protection, and personal attributes. We found that the attributes that correlated most strongly with participants' perceptions of the established tangible privacy mechanisms were their "need for touch" and previous experiences with the mechanisms. We use our findings to identify desirable characteristics of tangible mechanisms to better inform future tangible, digital, and mixed privacy protections. We also show which individuals benefit most from tangibles, ultimately motivating a more individual and effective approach to privacy protection in the future.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

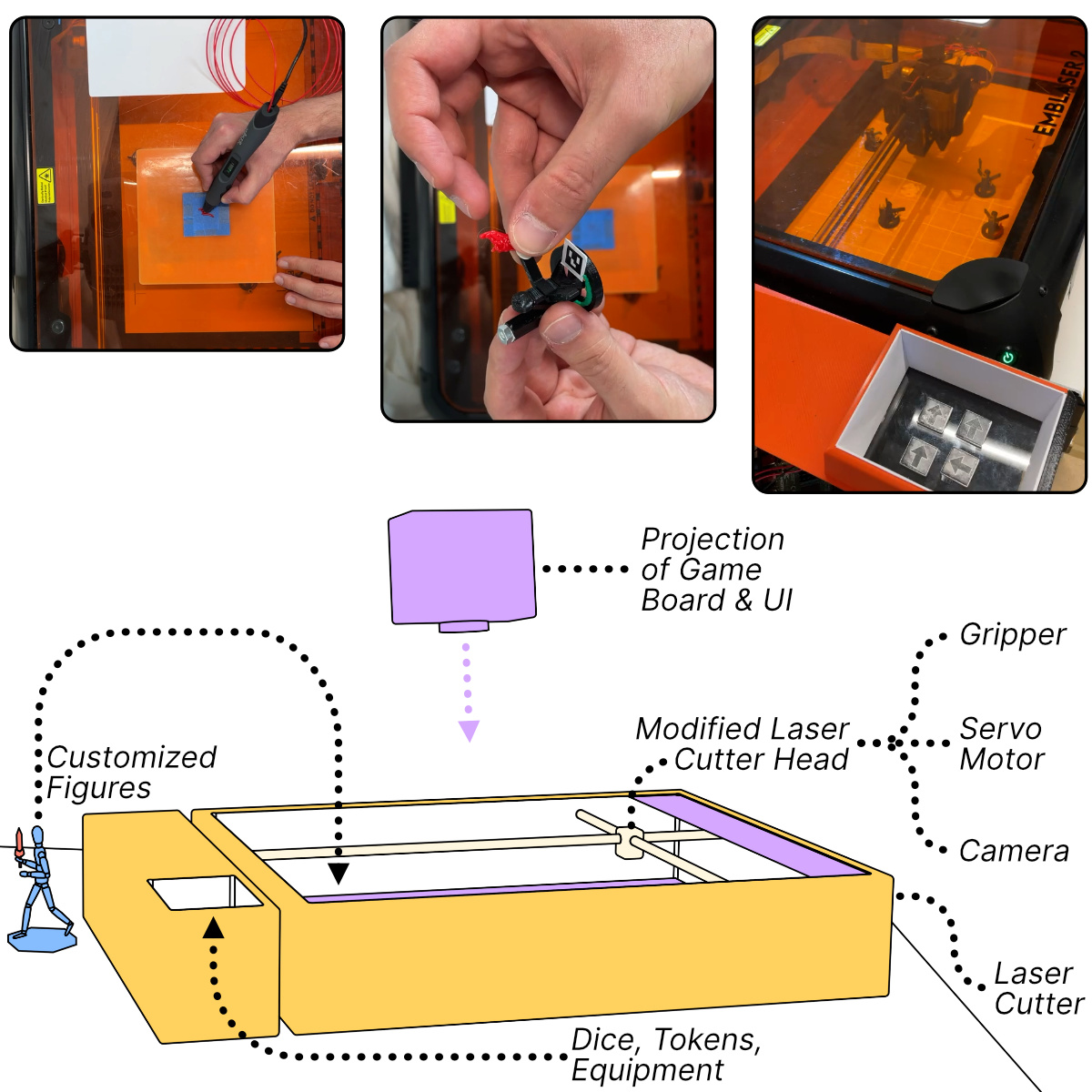

DungeonMaker: Embedding Tangible Creation and Destruction in Hybrid Board Games through Personal Fabrication Technology

Evgeny Stemasov (Institute of Media Informatics, Ulm University), Tobias Wagner (Institute of Media Informatics, Ulm University), Ali Askari (Institute of Media Informatics, Ulm University), Jessica Janek (Institute of Media Informatics, Ulm University), Omid Rajabi (Institute of Media Informatics, Ulm University), Anja Schikorr (Institute of Media Informatics, Ulm University), Julian Frommel (Utrecht University), Jan Gugenheimer (TU-Darmstadt and Institut Polytechnique de Paris), Enrico Rukzio (Institute of Media Informatics, Ulm University)

Abstract | Tags: Full Paper | Links:

@inproceedings{Stemasov2024Dungeonmaker,

title = {DungeonMaker: Embedding Tangible Creation and Destruction in Hybrid Board Games through Personal Fabrication Technology},

author = {Evgeny Stemasov (Institute of Media Informatics, Ulm University), Tobias Wagner (Institute of Media Informatics, Ulm University), Ali Askari (Institute of Media Informatics, Ulm University), Jessica Janek (Institute of Media Informatics, Ulm University), Omid Rajabi (Institute of Media Informatics, Ulm University), Anja Schikorr (Institute of Media Informatics, Ulm University), Julian Frommel (Utrecht University), Jan Gugenheimer (TU-Darmstadt and Institut Polytechnique de Paris), Enrico Rukzio (Institute of Media Informatics, Ulm University)},

url = {https://www.uni-ulm.de/in/mi/hci/, website

https://youtu.be/kKJD8Nv33qI, teaser video

https://youtu.be/NbIc-sOfT5Y, full video

https://twitter.com/mi_uulm, social media},

doi = {10.1145/3613904.3642243},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Hybrid board games (HBGs) augment their analog origins digitally (e.g., through apps) and are an increasingly popular pastime activity. Continuous world and character development and customization, known to facilitate engagement in video games, remain rare in HBGs. If present, they happen digitally or imaginarily, often leaving physical aspects generic. We developed DungeonMaker, a fabrication-augmented HBG bridging physical and digital game elements: 1) the setup narrates a story and projects a digital game board onto a laser cutter; 2) DungeonMaker assesses player-crafted artifacts; 3) DungeonMaker's modified laser head senses and moves player- and non-player figures, and 4) can physically damage figures. An evaluation (n=4x3) indicated that DungeonMaker provides an engaging experience, may support players' connection to their figures, and potentially spark novices' interest in fabrication. DungeonMaker provides a rich constellation to play HBGs by blending aspects of craft and automation to couple the physical and digital elements of an HBG tightly.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Effects of a Gaze-Based 2D Platform Game on User Enjoyment, Perceived Competence, and Digital Eye Strain

Mark Colley (Institute of Media Informatics, Ulm University and Cornell Tech, New York City, New York, United States), Beate Wanner (Institute of Media Informatics, Ulm University), Max Rädler (Institute of Media Informatics, Ulm University), Marcel Rötzer (Institute of Media Informatics, Ulm University), Julian Frommel (Utrecht University), Teresa Hirzle (Department of Computer Science, University of Copenhagen), Pascal Jansen (Institute of Media Informatics, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University)

Abstract | Tags: Full Paper | Links:

@inproceedings{Colley2024EffectsGazebased,

title = {Effects of a Gaze-Based 2D Platform Game on User Enjoyment, Perceived Competence, and Digital Eye Strain},

author = {Mark Colley (Institute of Media Informatics, Ulm University and Cornell Tech, New York City, New York, United States), Beate Wanner (Institute of Media Informatics, Ulm University), Max Rädler (Institute of Media Informatics, Ulm University), Marcel Rötzer (Institute of Media Informatics, Ulm University), Julian Frommel (Utrecht University), Teresa Hirzle (Department of Computer Science, University of Copenhagen), Pascal Jansen (Institute of Media Informatics, Ulm University), Enrico Rukzio (Institute of Media Informatics, Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, website

https://twitter.com/mi_uulm?lang=de, social media},

doi = {10.1145/3613904.3641909},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Gaze interaction is a promising interaction method to increase variety, challenge, and fun in games. We present “Shed Some Fear”, a 2D platform game including numerous eye-gaze-based interactions. “Shed Some Fear” includes control with eye-gaze and traditional keyboard input. The eye-gaze interactions are partially based on eye exercises reducing digital eye strain but also on employing peripheral vision. By employing eye-gaze as a necessary input mechanism, we explore the effects on and tradeoffs between user enjoyment and digital eye strain in a five-day longitudinal between-subject study (N=17) compared to interaction with a traditional mouse. We found that both perceived competence and internal eye strain were significantly higher with eye-gaze interaction. With this work, we contribute to the not straightforward inclusion of eye tracking as a useful and fun input method for games.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Enhancing Online Meeting Experience through Shared Gaze-Attention

Chandan Kumar (Fraunhofer IAO), Bhupender Kumar Saini (Fraunhofer IAO & University of Stuttgart), Steffen Staab (University of Stuttgart & University of Southampton)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Kumar2024EnhancingOnline,

title = {Enhancing Online Meeting Experience through Shared Gaze-Attention},

author = {Chandan Kumar (Fraunhofer IAO), Bhupender Kumar Saini (Fraunhofer IAO & University of Stuttgart), Steffen Staab (University of Stuttgart & University of Southampton)},

url = {https://www.ki.uni-stuttgart.de/departments/ac/, website @AnalyticComp, twitter},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Eye contact represents a fundamental element of human social interactions, providing essential non-verbal signals. Traditionally, it has played a crucial role in fostering social bonds during in-person gatherings. However, in the realm of virtual and online meetings, the capacity for meaningful eye contact is often compromised by the limitations of the platforms we use. In response to this challenge, we present an application framework that leverages webcams to detect and share eye gaze attention among participants. Through the framework, we organized 13 group meetings involving a total of 43 participants. The results highlight that the inclusion of gaze attention can enrich interactive experiences and elevate engagement levels in online meetings. Additionally, our evaluation of two levels of gaze sharing schemes indicates that users predominantly favor viewing gaze attention directed toward themselves, as opposed to visualizing detailed attention, which tends to lead to distraction and information overload.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Evaluating Interactive AI: Understanding and Controlling Placebo Effects in Human-AI Interaction

Steeven Villa (LMU Munich), Robin Welsch (Aalto University), Alena Denisova (University of York), Thomas Kosch (HU Berlin)

Abstract | Tags: Workshop | Links:

@inproceedings{Villa2024EvaluatingInteractive,

title = {Evaluating Interactive AI: Understanding and Controlling Placebo Effects in Human-AI Interaction},

author = {Steeven Villa (LMU Munich), Robin Welsch (Aalto University), Alena Denisova (University of York), Thomas Kosch (HU Berlin)},

url = {www.hcistudio.org, website},

doi = {10.1145/3613905.3636304},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {In the medical field, patients often experience tangible benefits from treatments they expect will improve their condition, even if the treatment has no mechanism of effect. This phenomenon, which often obscures the scientific evaluation of human treatment, is termed the "placebo effect." Recent research in human-computer interaction has shown that using cutting-edge technologies similarly raises expectations of improvement, culminating in placebo effects that undermine evaluation efforts for user studies. This workshop delves into the role of placebo effects in human-computer interaction for cutting-edge technologies such as artificial intelligence, examines their influence as a confounding factor in user studies, and identifies methods that researchers can adopt to reduce their impact on study findings. By the end of this workshop, attendees will be equipped to incorporate placebo control measures in their experimental designs.},

keywords = {Workshop},

pubstate = {published},

tppubtype = {inproceedings}

}

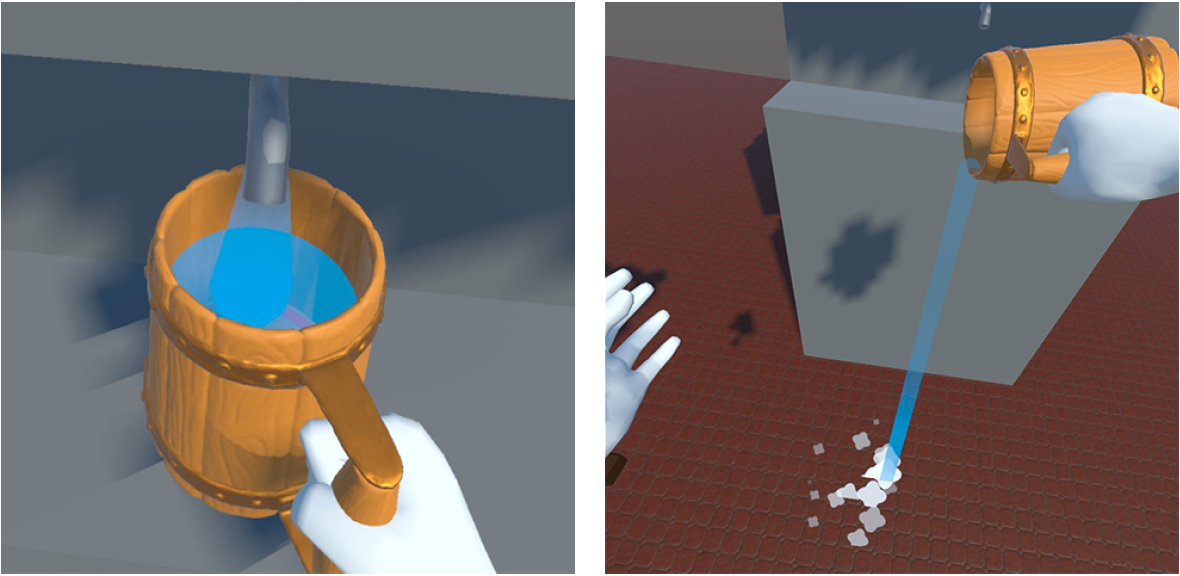

Experiencing Dynamic Weight Changes in Virtual Reality Through Pseudo-Haptics and Vibrotactile Feedback

Carolin Stellmacher (University of Bremen), Feri Irsanto Pujianto (Technical University of Berlin), Tanja Kojić (Technical University of Berlin), Jan-Niklas Voigt-Antons (Hamm-Lippstadt University of Applied Sciences), Johannes Schöning (University of St. Gallen)

Abstract | Tags: Full Paper | Links:

@inproceedings{Stellmacher2024ExperiencingDynamic,

title = {Experiencing Dynamic Weight Changes in Virtual Reality Through Pseudo-Haptics and Vibrotactile Feedback},

author = {Carolin Stellmacher (University of Bremen), Feri Irsanto Pujianto (Technical University of Berlin), Tanja Kojić (Technical University of Berlin), Jan-Niklas Voigt-Antons (Hamm-Lippstadt University of Applied Sciences), Johannes Schöning (University of St. Gallen)},

url = {https://www.uni-bremen.de/dmlab, website

https://youtu.be/ygxuu-a0oRc, teaser video

https://www.youtube.com/watch?v=57Wwr6cmvgQ, full video},

doi = {10.1145/3613904.3642552},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Virtual reality (VR) objects react dynamically to users' touch interactions in real-time. However, experiencing changes in weight through the haptic sense remains challenging with consumer VR controllers due to their limited vibrotactile feedback. While prior works successfully applied pseudo-haptics to perceive absolute weight by manipulating the control-display (C/D) ratio, we continuously adjusted the C/D ratio to mimic weight changes. Vibrotactile feedback additionally emphasises the modulation in the virtual object's physicality. In a study (N=18), we compared our multimodal technique with pseudo-haptics alone and a baseline condition to assess participants' experiences of weight changes. Our findings demonstrate that participants perceived varying degrees of weight change when the C/D ratio was adjusted, validating its effectiveness for simulating dynamic weight in VR. However, the additional vibrotactile feedback did not improve weight change perception. This work extends the understanding of designing haptic experiences for lightweight VR systems by leveraging perceptual mechanisms.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

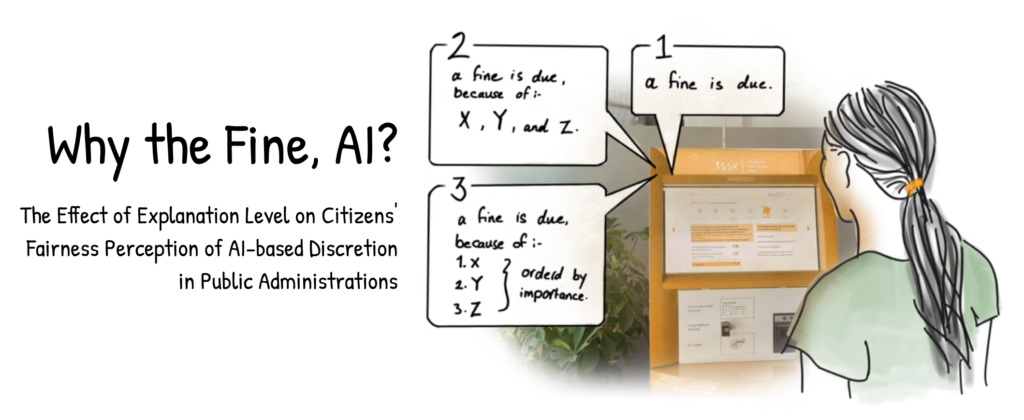

Explaining It Your Way - Findings from a Co-Creative Design Workshop on Designing XAI Applications with AI End-Users from the Public Sector

Katharina Weitz (University of Augsburg), Ruben Schlagowski (University of Augsburg), Elisabeth André (University of Augsburg), Maris Männiste (University of Tartu), Ceenu George (TU Berlin)

Abstract | Tags: Full Paper | Links:

@inproceedings{Weitz2024ExplainingIt,

title = {Explaining It Your Way - Findings from a Co-Creative Design Workshop on Designing XAI Applications with AI End-Users from the Public Sector},

author = {Katharina Weitz (University of Augsburg), Ruben Schlagowski (University of Augsburg), Elisabeth André (University of Augsburg), Maris Männiste (University of Tartu), Ceenu George (TU Berlin)},

url = {hcai.eu, website},

doi = {10.1145/3613904.3642563},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Human-Centered AI prioritizes end-users' needs like transparency and usability. This is vital for applications that affect people's everyday lives, such as social assessment tasks in the public sector. This paper discusses our pioneering effort to involve public sector AI users in XAI application design through a co-creative workshop with unemployment consultants from Estonia. The workshop's objectives were identifying user needs and creating novel XAI interfaces for the used AI system. As a result of our user-centered design approach, consultants were able to develop AI interface prototypes that would support them in creating success stories for their clients by getting detailed feedback and suggestions. We present a discussion on the value of co-creative design methods with end-users working in the public sector to improve AI application design and provide a summary of recommendations for practitioners and researchers working on AI systems in the public sector.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring Mobile Devices as Haptic Interfaces for Mixed Reality

Carolin Stellmacher (University of Bremen), Florian Mathis (University of St. Gallen), Yannick Weiss (LMU Munich), Meagan B. Loerakker (Chalmers University of Technology), Nadine Wagener (University of Bremen), Johannes Schöning (University of St. Gallen)

Abstract | Tags: Full Paper | Links:

@inproceedings{Stellmacher2024ExploringMobile,

title = {Exploring Mobile Devices as Haptic Interfaces for Mixed Reality},

author = {Carolin Stellmacher (University of Bremen), Florian Mathis (University of St. Gallen), Yannick Weiss (LMU Munich), Meagan B. Loerakker (Chalmers University of Technology), Nadine Wagener (University of Bremen), Johannes Schöning (University of St. Gallen)},

url = {https://www.uni-bremen.de/dmlab, website

https://www.youtube.com/watch?v=SBaaCeTH3BM, full video},

doi = {10.1145/3613904.3642176},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

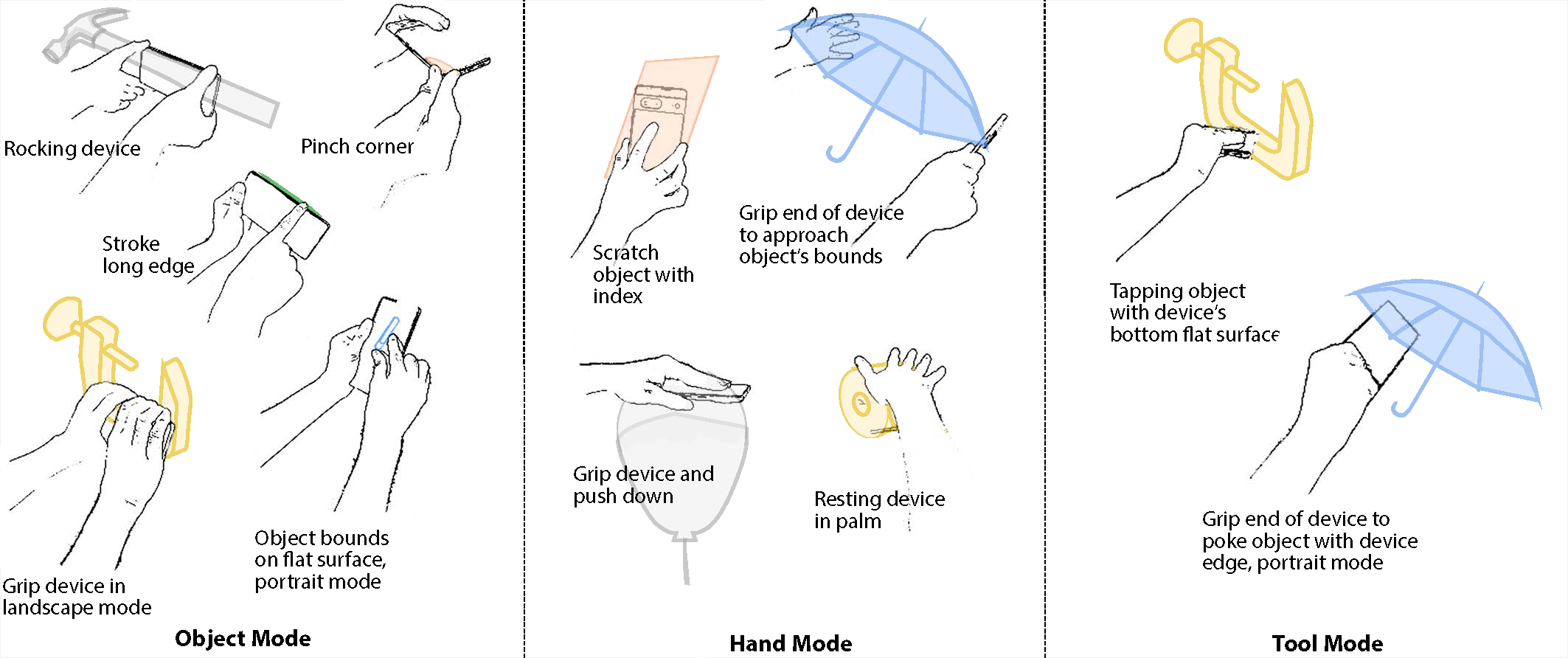

abstract = {Dedicated handheld controllers facilitate haptic experiences of virtual objects in mixed reality (MR). However, as mobile MR becomes more prevalent, we observe the emergence of controller-free MR interactions. To retain immersive haptic experiences, we explore the use of mobile devices as a substitute for specialised MR controllers. In an exploratory gesture elicitation study (n = 18), we examined users' (1) intuitive hand gestures performed with prospective mobile devices and (2) preferences for real-time haptic feedback when exploring haptic object properties. Our results reveal three haptic exploration modes for the mobile device, as an object, hand substitute, or as an additional tool, and emphasise the benefits of incorporating the device's unique physical features into the object interaction. This work expands the design possibilities using mobile devices for tangible object interaction, guiding the future design of mobile devices for haptic MR experiences.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring Spatial Organization Strategies for Virtual Content in Mixed Reality Environments

Weizhou Luo (Interactive Media Lab Dresden, Technische Universität Dresden)

Abstract | Tags: Doctoral Consortium | Links:

@inproceedings{Luo2024ExploringSpatial,

title = {Exploring Spatial Organization Strategies for Virtual Content in Mixed Reality Environments},

author = {Weizhou Luo (Interactive Media Lab Dresden, Technische Universität Dresden)},

url = {https://imld.de/en/, website

https://twitter.com/imldresden, social media},

doi = {10.1145/3613905.3638181},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

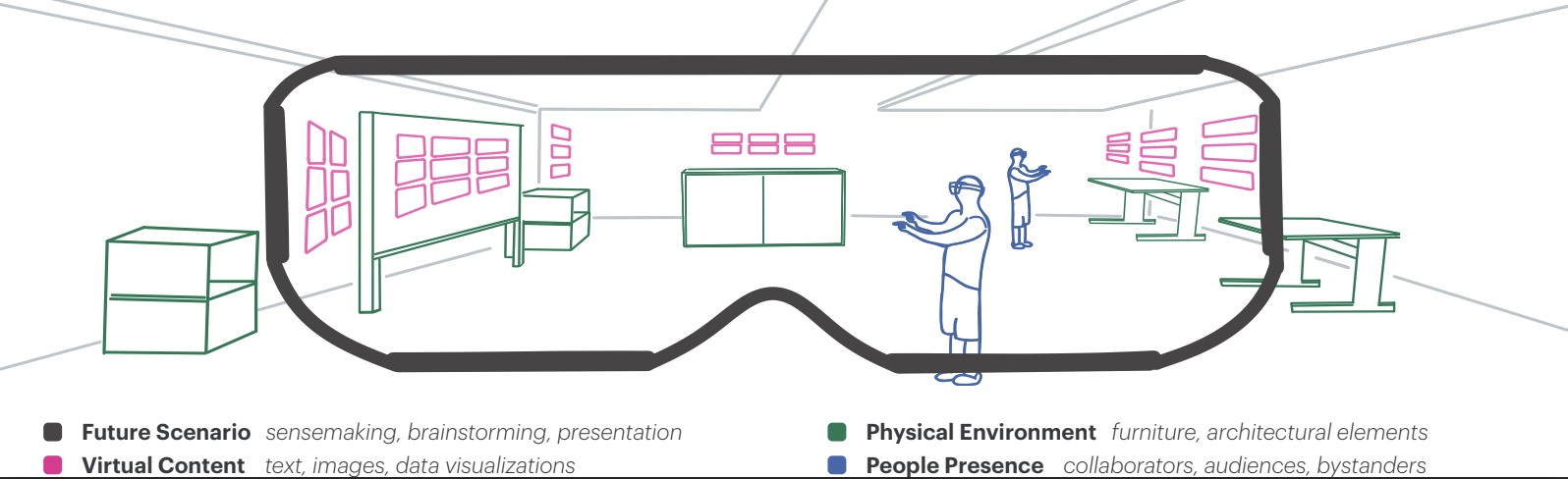

abstract = {Our future will likely be reshaped by Mixed Reality (MR) offering boundless display space while preserving the context of real-world surroundings. However, to fully leverage the spatial capabilities of MR technology, a better understanding of how and where to place virtual content like documents is required, particularly considering the situated context. I aim to explore spatial organization strategies for virtual content in MR environments. For that, we conducted empirical studies investigating users' strategies for document layout and placement and examined two real-world factors: physical environments and people present. With this knowledge, we proposed a mixed-reality approach for the in-situ exploration and analysis of human movement data utilizing physical objects in the original space as referents. My next steps include exploring arrangement strategies, designing techniques empowering spatial organization, and extending understandings for multi-user scenarios. My dissertation will enrich the immersive interface repertoire and contribute to the design of future MR systems.},

keywords = {Doctoral Consortium},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring the Association Between Engagement With Location-Based Game Features and Getting Inspired About Environmental Issues and Nature

Bastian Kordyaka (University of Bremen), Samuli Laato (Tampere University), Sebastian Weber (University of Bremen), Juho Hamari (Tampere University), Bjoern Niehaves (University of Bremen)

Abstract | Tags: Full Paper | Links:

@inproceedings{Kordyaka2024ExploringAssociation,

title = {Exploring the Association Between Engagement With Location-Based Game Features and Getting Inspired About Environmental Issues and Nature},

author = {Bastian Kordyaka (University of Bremen), Samuli Laato (Tampere University), Sebastian Weber (University of Bremen), Juho Hamari (Tampere University), Bjoern Niehaves (University of Bremen)},

url = {https://www.uni-bremen.de/digital-public, website},

doi = {10.1145/3613904.3642786},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Today, millions worldwide play popular location-based games (LBGs) such as Pokémon GO. LBGs are designed to be played outdoors, and past research has shown that they can incentivize players to travel to nature. To further explore this nature-connection, we investigated via a mixed-methods approach the connections between engagement with LBGs, inspiration and environmental awareness as follows. First, we identified relevant gamification features in Study 1. Based on the insights, we built a survey that we sent to Pokémon GO players (N=311) in Study 2. The results showed that (a) social networking features, reminders, and virtual objects were the most relevant gamification features to explain inspired by playing Pokémon GO and that (b) inspired to outdoor engagement partially mediated the relationship between inspired by playing Pokémon GO and environmental awareness. These results warrant further investigations into whether LBGs could motivate pro-environment attitudes and inspire people to care for nature.},

keywords = {Full Paper},

pubstate = {published},

tppubtype = {inproceedings}

}

Field Notes on Deploying Research Robots in Public Spaces

Fanjun Bu (Cornell Tech), Alexandra W.D. Bremers (Cornell Tech), Mark Colley (Institute of Media Informatics, Ulm University and Cornell Tech), Wendy Ju (Cornell Tech)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Bu2024FieldNotes,

title = {Field Notes on Deploying Research Robots in Public Spaces},

author = {Fanjun Bu (Cornell Tech), Alexandra W.D. Bremers (Cornell Tech), Mark Colley (Institute of Media Informatics, Ulm University and Cornell Tech), Wendy Ju (Cornell Tech)},

doi = {10.1145/3613905.3651044},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Human-robot interaction needs to be studied in the wild. In the summers of 2022 and 2023, we deployed two trash barrel service robots through the wizard-of-oz protocol in public spaces to study human-robot interactions in urban settings. We deployed the robots at two different public plazas in downtown Manhattan and Brooklyn for a collective 20 hours of field time. To date, relatively few long-term human-robot interaction studies have been conducted in shared public spaces. To support researchers aiming to fill this gap, we would like to share some of our insights and lessons learned that would benefit both researchers and practitioners on how to deploy robots in public spaces. We share best practices and lessons learned with the HRI research community to encourage more in-the-wild research of robots in public spaces and call for the community to share their lessons learned to a GitHub repository.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Fighting Malicious Designs: Towards Visual Countermeasures Against Dark Patterns

René Schäfer (RWTH Aachen University), Paul Preuschoff (RWTH Aachen University), René Röpke (RWTH Aachen University), Sarah Sahabi (RWTH Aachen University), Jan Borchers (RWTH Aachen University)

Abstract | Tags: Full Paper | Links:

@inproceedings{Schäfer2024FightingMalicious,

title = {Fighting Malicious Designs: Towards Visual Countermeasures Against Dark Patterns},

author = {René Schäfer (RWTH Aachen University), Paul Preuschoff (RWTH Aachen University), René Röpke (RWTH Aachen University), Sarah Sahabi (RWTH Aachen University), Jan Borchers (RWTH Aachen University)},

url = {https://hci.rwth-aachen.de, website},

doi = {10.1145/3613904.3642661},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},