We curated a list of this year’s publications, including links to social media, lab websites, and supplemental material. We have 58 full papers, 13 LBWs, one DC paper, and one Student Game Competition entry, and we lead five workshops. Two papers received a Best Paper Award, and four papers received an Honourable Mention.

Is your publication missing? Send us an email: contact@germanhci.de

"I Know What You Mean": Context-Aware Recognition to Enhance Speech-Based Games

Nima Zargham (Digital Media Lab, University of Bremen), Mohamed Lamine Fetni (Digital Media Lab, University of Bremen), Laura Spillner (Digital Media Lab, University of Bremen), Thomas Münder (Digital Media Lab, University of Bremen), Rainer Malaka (Digital Media Lab, University of Bremen)

Tags: Full Paper, Honorable Mention

@inproceedings{Zargham2024KnowWhat,

title = {"I Know What You Mean": Context-Aware Recognition to Enhance Speech-Based Games},

author = {Nima Zargham and Mohamed Lamine Fetni and Laura Spillner and Thomas Münder and Rainer Malaka},

url = {https://www.uni-bremen.de/dmlab, website},

doi = {10.1145/3613904.3642426},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Recent advances in language processing and speech recognition open up a large opportunity for video game companies to embrace voice interaction as an intuitive feature and appealing game mechanics. However, speech-based systems still remain liable to recognition errors. These add a layer of challenge on top of the game's existing obstacles, preventing players from reaching their goals and thus often resulting in player frustration. This work investigates a novel method called context-aware speech recognition, where the game environment and actions are used as supplementary information to enhance recognition in a speech-based game. In a between-subject user study (N=40), we compared our proposed method with a standard method in which recognition is based only on the voice input without taking context into account. Our results indicate that our proposed method could improve the player experience and the usability of the speech system.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Development and Validation of the Collision Anxiety Questionnaire for VR Applications

Patrizia Ring (Universität Duisburg-Essen), Julius Tietenberg (Universität Duisburg-Essen), Katharina Emmerich (Universität Duisburg-Essen), Maic Masuch (Universität Duisburg-Essen)

Tags: Full Paper, Honorable Mention

@inproceedings{Ring2024DevelopmentValidation,

title = {Development and Validation of the Collision Anxiety Questionnaire for VR Applications},

author = {Patrizia Ring and Julius Tietenberg and Katharina Emmerich and Maic Masuch},

url = {https://www.ecg.uni-due.de/home/home.html, website

https://www.instagram.com/ecg.ude/, social media},

doi = {10.1145/3613904.3642408},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {The high degree of sensory immersion is a distinctive feature of head-mounted virtual reality (VR) systems. While the visual detachment from the real world enables unique immersive experiences, users risk collisions due to their inability to perceive physical obstacles in their environment. Even the mere anticipation of a collision can adversely affect the overall experience and erode user confidence in the VR system. However, there are currently no valid tools for assessing collision anxiety. We present the iterative development and validation of the Collision Anxiety Questionnaire (CAQ), involving an exploratory and a confirmatory factor analysis with a total of 159 participants. The results provide evidence for both discriminant and convergent validity and a good model fit for the final CAQ with three subscales: general collision anxiety, orientation, and interpersonal collision anxiety. By utilizing the CAQ, researchers can examine potential confounding effects of collision anxiety and evaluate methods for its mitigation.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

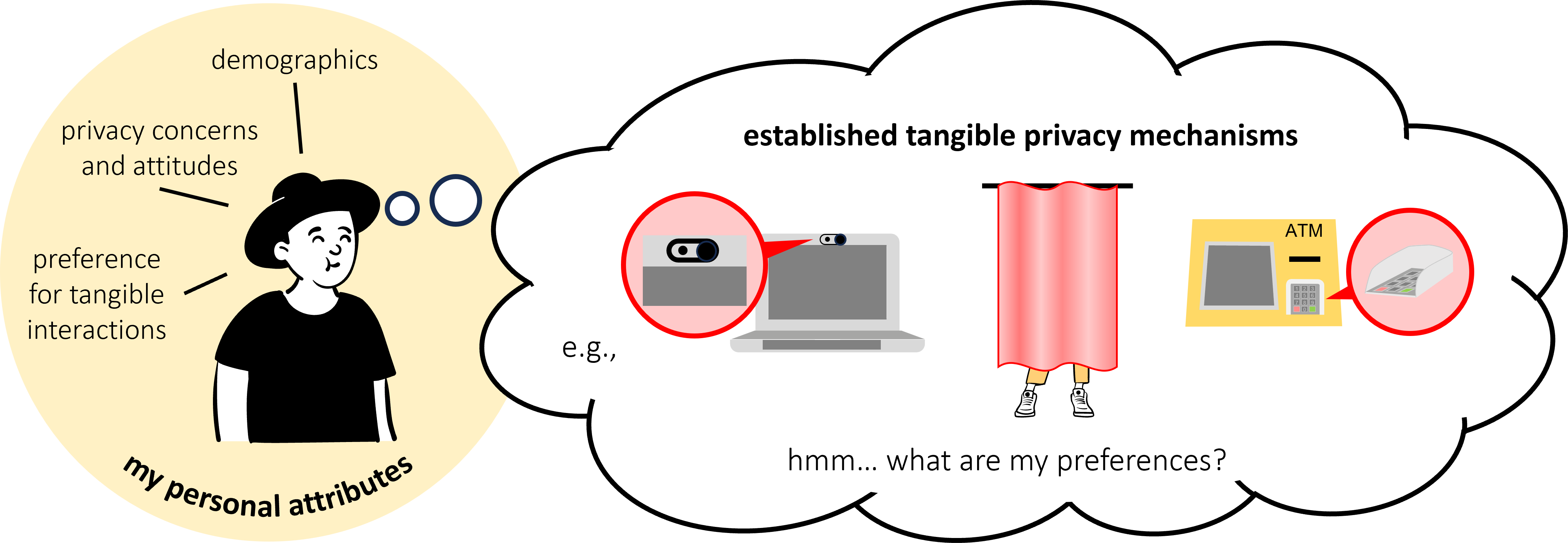

Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms

Sarah Delgado Rodriguez (University of the Bundeswehr Munich), Priyasha Chatterjee (Ruhr-University Bochum), Anh Dao Phuong (LMU Munich), Florian Alt (University of the Bundeswehr Munich), Karola Marky (Ruhr-University Bochum)

Tags: Full Paper, Honorable Mention

@inproceedings{Rodriguez2024DoNeed,

title = {Do You Need to Touch? Exploring Correlations between Personal Attributes and Preferences for Tangible Privacy Mechanisms},

author = {Sarah Delgado Rodriguez and Priyasha Chatterjee and Anh Dao Phuong and Florian Alt and Karola Marky},

url = {https://www.unibw.de/usable-security-and-privacy, website

https://youtu.be/EpthiyvegeI, teaser video

https://youtu.be/NkgLIxpVils, full video

https://twitter.com/USECUnibwM, social media},

doi = {10.1145/3613904.3642863},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {This paper explores how personal attributes, such as age, gender, technological expertise, or "need for touch", correlate with people's preferences for properties of tangible privacy protection mechanisms, for example, physically covering a camera. For this, we conducted an online survey (N = 444) where we captured participants' preferences of eight established tangible privacy mechanisms well-known in daily life, their perceptions of effective privacy protection, and personal attributes. We found that the attributes that correlated most strongly with participants' perceptions of the established tangible privacy mechanisms were their "need for touch" and previous experiences with the mechanisms. We use our findings to identify desirable characteristics of tangible mechanisms to better inform future tangible, digital, and mixed privacy protections. We also show which individuals benefit most from tangibles, ultimately motivating a more individual and effective approach to privacy protection in the future.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Motionless Movement: Towards Vibrotactile Kinesthetic Displays

Yuran Ding (Max Planck Institute for Informatics, University of Maryland College Park), Nihar Sabnis (Max Planck Institute for Informatics), Paul Strohmeier (Max Planck Institute for Informatics)

Tags: Full Paper, Honorable Mention

@inproceedings{Ding2024MotionlessMovement,

title = {Motionless Movement: Towards Vibrotactile Kinesthetic Displays},

author = {Yuran Ding and Nihar Sabnis and Paul Strohmeier},

url = {https://sensint.mpi-inf.mpg.de/, website

https://x.com/sensintgroup?s=21&t=Wwo5g9aG_rw4oGszZiCMfQ, social media},

doi = {10.1145/3613904.3642499},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Beyond visual and auditory displays, tactile displays and grounded force feedback devices have become more common. Other sensory modalities are also catered to by a broad range of display devices, including temperature, taste, and olfaction. However, one sensory modality remains challenging to represent: kinesthesia – the sense of movement. Inspired by grain-based compliance illusions, we investigate how vibrotactile cues can evoke kinesthetic experiences, even when no movement is performed. We examine the effects of vibrotactile mappings and granularity on the magnitude of perceived motion; distance-based mappings provided the greatest sense of movement. Using an implementation that combines visual feedback and our prototype kinesthetic display, we demonstrate that action-coupled vibrotactile cues are significantly better at conveying an embodied sense of movement than the corresponding visual stimulus, and that combining vibrotactile and visual feedback is best. These results point towards a future where kinesthetic displays will be used in rehabilitation, sports, virtual-reality and beyond.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}

Perceived Empathy of Technology Scale (PETS): Measuring Empathy of Systems Toward the User

Matthias Schmidmaier (LMU Munich), Jonathan Rupp (University of Innsbruck), Darina Cvetanova (LMU Munich), Sven Mayer (LMU Munich)

Tags: Full Paper, Honorable Mention

@inproceedings{Schmidmaier2024PerceivedEmpathy,

title = {Perceived Empathy of Technology Scale (PETS): Measuring Empathy of Systems Toward the User},

author = {Matthias Schmidmaier and Jonathan Rupp and Darina Cvetanova and Sven Mayer},

url = {https://www.medien.ifi.lmu.de/, website},

doi = {10.1145/3613904.3642035},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

abstract = {Affective computing improves rapidly, allowing systems to process human emotions. This enables systems such as conversational agents or social robots to show empathy toward users. While there are various established methods to measure the empathy of humans, there is no reliable and validated instrument to quantify the perceived empathy of interactive systems. Thus, we developed the Perceived Empathy of Technology Scale (PETS) to assess and compare how empathic users perceive technology. We followed a standardized multi-phase process of developing and validating scales. In total, we invited 30 experts for item generation, 324 participants for item selection, and 396 additional participants for scale validation. We developed our scale using 22 scenarios with opposing empathy levels, ensuring the scale is universally applicable. This resulted in the PETS, a 10-item, 2-factor scale. The PETS allows designers and researchers to evaluate and compare the perceived empathy of interactive systems rapidly.},

keywords = {Full Paper, Honorable Mention},

pubstate = {published},

tppubtype = {inproceedings}

}