We are in the process of curating a list of this year’s publications, including links to social media, lab websites, and supplemental material. Currently, we have 68 full papers, 23 LBWs, three journal papers, one alt.chi paper, two SIGs, two Case Studies, one Interactivity, and one Student Game Competition entry, and we lead three workshops. One paper received a Best Paper award and 13 papers received an Honorable Mention.

Disclaimer: This list is not yet complete, and some DOIs may not resolve yet.

Is your 2025 publication missing? Please enter the details in this Google Form and send us an email at contact@germanhci.de to let us know you added a publication.

"This could save us months of work" - Use Cases of AI and Automation Support in Investigative Journalism

Besjon Cifliku (Center for Advanced Internet Studies), Hendrik Heuer (Center for Advanced Internet Studies, University of Wuppertal)


@inproceedings{Cifliku2025ThisCould,

title = {"This could save us months of work" - Use Cases of AI and Automation Support in Investigative Journalism},

author = {Besjon Cifliku (Center for Advanced Internet Studies), Hendrik Heuer (Center for Advanced Internet Studies and University of Wuppertal)},

url = {https://www.cais-research.de/forschungsprogramm-vertrauenswurdige-intelligenz/, website

https://www.linkedin.com/company/center-for-advanced-internet-studies/posts/?feedView=all, lab's linkedin

https://www.linkedin.com/in/hendrikheuer/, author's linkedin

https://bsky.app/profile/cais-research.bsky.social, bluesky},

doi = {10.1145/3706599.3719856},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {As the capabilities of Large Language Models (LLMs) expand, more researchers are studying their adoption in newsrooms. However, much of the research focus remains broad and does not address the specific technical needs of investigative journalists. Therefore, this paper presents several applied use cases where automation and AI intersect with investigative journalism. We conducted a within-subjects user study with eight investigative journalists. In interviews, we elicited practical use cases using a speculative design approach by having journalists react to a prototype of a system that combines LLMs and Programming-by-Demonstration (PbD) to simplify data collection on numerous websites. Based on user reports, we classified the journalistic processes into data collection and reporting. Participants indicated they utilize automation to handle repetitive tasks like content monitoring, web scraping, summarization, and preliminary data exploration. Following these insights, we provide guidelines on how investigative journalism can benefit from AI and automation.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Beyond the Self-Driven: Understanding User Acceptance of Cooperative Intelligent Transportation Systems in Automated Driving

Annika Stampf (Ulm University), Felix Reize (Ulm University), Mark Colley (Ulm University), Enrico Rukzio (Ulm University)


@inproceedings{Stampf2025BeyondSelfdriven,

title = {Beyond the Self-Driven: Understanding User Acceptance of Cooperative Intelligent Transportation Systems in Automated Driving},

author = {Annika Stampf (Ulm University), Felix Reize (Ulm University), Mark Colley (Ulm University), Enrico Rukzio (Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, website

https://www.linkedin.com/in/annika-stampf/, author's linkedin},

doi = {10.1145/3706599.3720260},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Cooperative Intelligent Transportation Systems (C-ITS) leverage communication between intelligent vehicles and infrastructure (V2X) to address urban challenges, including congestion, delays, and safety, through advanced traffic planning. However, user acceptance, which is essential for real-world adoption, remains underexplored. Through an online survey (N=49), we investigated how Traffic Scenarios, Personal Outcomes, and Information Levels influence user acceptance. Our findings reveal that rerouting scenarios are perceived more positively than yielding scenarios, such as granting the right of way, while consequential delays increase conflict and frustration. Additionally, users showed similar acceptance ratings for the automated vehicle and the overarching C-ITS, with differences emerging under consequential delays. We discuss implications for the design of user-centered C-ITS.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Do You See What I See? Evaluating Relative Depth Judgments Between Real and Virtual Projections

Florian Heinrich (University of Magdeburg), Danny Schott (University of Magdeburg), Lovis Schwenderling (University of Magdeburg), Christian Hansen (University of Magdeburg)


@inproceedings{Heinrich2025SeeWhat,

title = {Do You See What I See? Evaluating Relative Depth Judgments Between Real and Virtual Projections},

author = {Florian Heinrich (University of Magdeburg), Danny Schott (University of Magdeburg), Lovis Schwenderling (University of Magdeburg), Christian Hansen (University of Magdeburg)},

url = {https://www.var.ovgu.de/, website

https://www.linkedin.com/company/virtual-and-augmented-reality-group/, lab's linkedin

https://www.linkedin.com/in/florian-w-heinrich/, author's linkedin},

doi = {10.1145/3706599.3720157},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Projector-based augmented reality (AR) is promising in different domains, with fewer issues regarding discomfort or lack of space. However, due to limitations like high costs and cumbersome calibration, this AR modality remains underused. To address this problem, a stereoscopic projector-based AR simulation was implemented for a cost-effective video see-through AR headset. To evaluate the validity of this simulation, a relative depth judgment experiment was conducted to compare this method with a physical projection system. Consistent results suggest that a known interaction effect between visualization and disparity mode could be successfully reproduced using both the physical projection and the virtual simulation. In addition, initial findings indicate that there are no significant differences between these projection modalities. The results indicate that other perception-related effects observed for projector-based AR may also apply to virtual projection simulations and that future findings determined using only these simulations may also apply to real projections.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

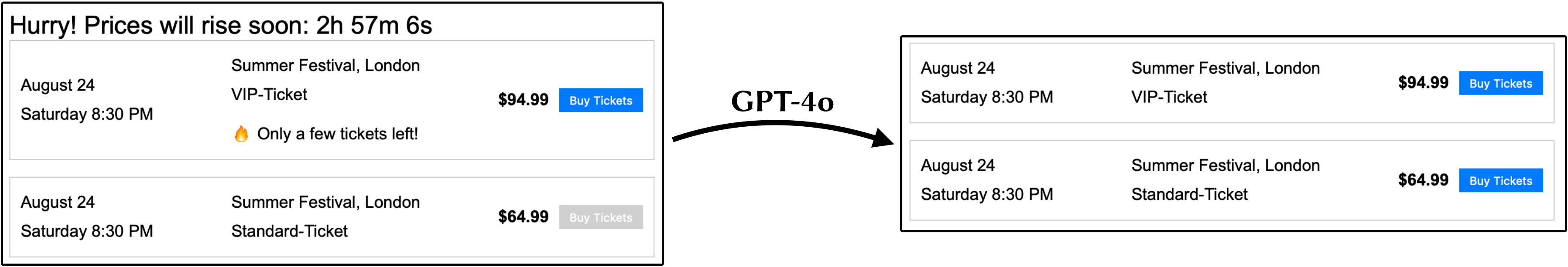

Don't Detect, Just Correct: Can LLMs Defuse Deceptive Patterns Directly?

René Schäfer (RWTH Aachen University), Paul Preuschoff (RWTH Aachen University), Rene Niewianda (Independent Researcher), Sophie Hahn (RWTH Aachen University), Kevin Fiedler (RWTH Aachen University), Jan Borchers (RWTH Aachen University)


@inproceedings{Schaefer2025DontDetect,

title = {Don't Detect, Just Correct: Can LLMs Defuse Deceptive Patterns Directly?},

author = {René Schäfer (RWTH Aachen University), Paul Preuschoff (RWTH Aachen University), Rene Niewianda (Independent Researcher), Sophie Hahn (RWTH Aachen University), Kevin Fiedler (RWTH Aachen University), Jan Borchers (RWTH Aachen University)},

url = {https://hci.rwth-aachen.de, website

https://www.linkedin.com/in/schaefer-rene, linkedin},

doi = {10.1145/3706599.3719683},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Deceptive patterns, UI design strategies manipulating users against their best interests, have become widespread. We introduce an idea for technical countermeasures against such patterns. It feeds the HTML code of web elements that may contain deceptive patterns into a large language model (LLM) and iteratively prompts it to make these elements less manipulative. We evaluated our approach with GPT-4o and self-created web elements. After three iterations, 91% of deceptive elements were less manipulative and 96% were not more manipulative than the originals. We contribute our minimal and improved prompts and a labeled dataset of all 2,600 redesigns with the LLM's justifications for its changes. We also performed preliminary tests on real websites to show and discuss the feasibility of our approach in the field. Our findings suggest that LLMs can defuse certain deceptive patterns without prior model training, promising a major advance in fighting these manipulations.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Drone Teleoperation Interfaces: Challenges and Opportunities with XR Integration

Luljeta Sinani (German Aerospace Center (DLR)), Anna Bahnmüller (German Aerospace Center (DLR)), Frank Steinicke (University of Hamburg), Andreas Gerndt (German Aerospace Center (DLR)), Georgia Albuquerque (German Aerospace Center (DLR))


@inproceedings{Sinani2025DroneTeleoperation,

title = {Drone Teleoperation Interfaces: Challenges and Opportunities with XR Integration},

author = {Luljeta Sinani (German Aerospace Center (DLR)), Anna Bahnmüller (German Aerospace Center (DLR)), Frank Steinicke (University of Hamburg), Andreas Gerndt (German Aerospace Center (DLR)), Georgia Albuquerque (German Aerospace Center (DLR))},

url = {https://www.dlr.de/en/sc/about-us/departments/visual-computing-and-engineering, website},

doi = {10.1145/3706599.3719777},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Drones are piloted remotely through teleoperation interfaces. These interfaces typically employ 2D displays, which can limit Situation Awareness (SA) due to a narrow field-of-view (FoV) and a lack of depth perception. Extended Reality (XR) has the potential to enhance these systems by providing 3D environments that improve spatial understanding and expand the FoV. However, designing drone teleoperation interfaces requires a thorough understanding of remote pilots' SA needs and usability challenges to ensure safety and facilitate decision-making. This makes a user-centered design (UCD) approach essential. To this end, we present findings from qualitative interviews with professional drone pilots (n=8), designed to (1) identify key challenges in current teleoperation interfaces and, based on these challenges, (2) provide an outlook on how XR could address these issues. This work aims to guide future research and design of XR-based teleoperation interfaces to improve SA and safety in drone operations.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Enriched Embodiment Environments for Healthcare Spaces: Exploration through the Design of a Cancer Treatment Facility

Iddo Yehoshua Wald (The Digital Media Lab, University of Bremen, Bremen, Germany; Reichman University, Herzliya, Israel), Amber Maimon (The University of Haifa, Haifa, Israel; Ben Gurion University, Be’er Sheva, Israel; Reichman University, Herzliya, Israel), Adi Snir (Reichman University, Herzliya, Israel), Oran Goral (Reichman University, Herzliya, Israel), Avital Radosher (Reichman University, Herzliya, Israel), Gizem Ozdemir (Reichman University, Herzliya, Israel), Amir Amedi (Reichman University, Herzliya, Israel)


@inproceedings{Wald2025EnrichedEmbodiment,

title = {Enriched Embodiment Environments for Healthcare Spaces: Exploration through the Design of a Cancer Treatment Facility},

author = {Iddo Yehoshua Wald (The Digital Media Lab, University of Bremen, Bremen, Germany; Reichman University, Herzliya, Israel), Amber Maimon (The University of Haifa, Haifa, Israel; Ben Gurion University, Be’er Sheva, Israel; Reichman University, Herzliya, Israel), Adi Snir (Reichman University, Herzliya, Israel), Oran Goral (Reichman University, Herzliya, Israel), Avital Radosher (Reichman University, Herzliya, Israel), Gizem Ozdemir (Reichman University, Herzliya, Israel), Amir Amedi (Reichman University, Herzliya, Israel)},

url = {https://www.uni-bremen.de/dmlab, website

https://linkedin.com/company/dml-bremen, lab's linkedin

https://linkedin.com/in/iddo-wald, author's linkedin},

doi = {10.1145/3706599.3719733},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {The increasing understanding that a patient's experience and mental well-being influence their physical condition, treatment outcomes, and even prognosis is changing the design of healthcare environments. A gap remains in establishing scientifically grounded methodologies, design guidelines, and best practices for designing real-world healthcare settings that address newly recognized needs. Drawing on principles from neuroscience and human-computer interaction, we explore the concept of enriched multisensory healthcare environments that employ embodied interaction. This work reviews the principles underlying the design of such spaces and presents an implementation of such an environment at a treatment facility within a newly constructed cancer treatment center. Two interaction prototypes were developed, employing embodiment to leverage the benefits of multisensory enriched environments. We detail the design process, decisions, implementation choices, and the rationale applied. Finally, we discuss future directions for this work and for the enriched embodiment environment approach in healthcare.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

ERP Markers of Visual and Semantic Processing in AI-Generated Images: From Perception to Meaning

Teodora Mitrevska (LMU Munich), Francesco Chiossi (LMU Munich), Sven Mayer (LMU Munich, Munich Center for Machine Learning (MCML))


@inproceedings{Mitrevska2025ErpMarkers,

title = {ERP Markers of Visual and Semantic Processing in AI-Generated Images: From Perception to Meaning},

author = {Teodora Mitrevska (LMU Munich), Francesco Chiossi (LMU Munich), Sven Mayer (LMU Munich, Munich Center for Machine Learning (MCML))},

url = {https://www.linkedin.com/company/93636029/, lab's linkedin

https://www.linkedin.com/in/teodora-mitrevska-b444a810a/, author's linkedin},

doi = {10.1145/3706599.3719907},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Perceptual similarity assessment plays an important role in processing visual information and is often employed in Human-AI interaction tasks such as object recognition or content generation. Understanding how humans perceive and evaluate visual similarity is important for iteratively generating outputs that increasingly meet users' expectations. By leveraging physiological signals, systems can rely on users' EEG responses to support the similarity assessment process. We conducted a study (N=20), presenting diverse AI-generated images as stimuli and evaluating their semantic similarity to a target image while recording event-related potentials (ERPs). Our results show that the P2 and N400 components distinguish medium- and high-similarity images, while low-similarity images did not show a significant effect. Thus, we demonstrate that ERPs allow us to assess users' perceived visual similarity to support rapid interactions with human-AI systems.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring the Use of Augmented Reality for Multi-human-robot Collaboration with Industry Users in Timber Construction

Xiliu Yang (Institute for Computational Design and Construction, University of Stuttgart), Nelusa Pathmanathan (Visualization Research Center, University of Stuttgart), Sarah Zabel (Department of Sustainability and Change, University of Hohenheim), Felix Amtsberg (Institute for Computational Design and Construction, University of Stuttgart), Siegmar Otto (Department of Sustainability and Change, University of Hohenheim), Kuno Kurzhals (Visualization Research Center, University of Stuttgart), Michael Sedlmair (Visualization Research Center, University of Stuttgart), Achim Menges (Institute for Computational Design and Construction, University of Stuttgart)


@inproceedings{Yang2025ExploringUse,

title = {Exploring the Use of Augmented Reality for Multi-human-robot Collaboration with Industry Users in Timber Construction},

author = {Xiliu Yang (Institute for Computational Design and Construction, University of Stuttgart), Nelusa Pathmanathan (Visualization Research Center, University of Stuttgart), Sarah Zabel (Department of Sustainability and Change, University of Hohenheim), Felix Amtsberg (Institute for Computational Design and Construction, University of Stuttgart), Siegmar Otto (Department of Sustainability and Change, University of Hohenheim), Kuno Kurzhals (Visualization Research Center, University of Stuttgart), Michael Sedlmair (Visualization Research Center, University of Stuttgart), Achim Menges (Institute for Computational Design and Construction, University of Stuttgart)},

doi = {10.1145/3706599.3720104},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {As robots are introduced into construction environments, situations may arise where construction workers without programming expertise need to interact with robotic operations to ensure smooth and successful task execution. We designed a head-mounted augmented reality (AR) system that allowed control of the robot's tasks and motions during human-robot collaboration (HRC) in timber assembly tasks. To explore workers' feedback and attitudes towards HRC with this system, we conducted a user study with 10 carpenters. The workers collaborated in pairs with a heavy-payload industrial robot to construct a 2 x 3 m timber panel. The study contributes an evaluation of multi-human-robot collaboration along with qualitative feedback from the workers. Exploratory data analysis revealed the influence of asymmetrical user roles in multi-user collaborative construction, providing research directions for future work.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Exploring Visual Prompts: Refining Images with Scribbles and Annotations in Generative AI Image Tools

Hyerim Park (BMW Group, University of Stuttgart), Malin Eiband (BMW Group), Andre Luckow (BMW Group), Michael Sedlmair (University of Stuttgart)


@inproceedings{Park2025ExploringVisual,

title = {Exploring Visual Prompts: Refining Images with Scribbles and Annotations in Generative AI Image Tools},

author = {Hyerim Park (BMW Group, University of Stuttgart), Malin Eiband (BMW Group), Andre Luckow (BMW Group), Michael Sedlmair (University of Stuttgart)},

url = {https://visvar.github.io/, website},

doi = {10.1145/3706599.3719802},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Generative AI (GenAI) tools are increasingly integrated into design workflows. While text prompts remain the primary input method for GenAI image tools, designers often struggle to craft effective ones. Moreover, research has primarily focused on input methods for ideation, with limited attention to refinement tasks. This study explores designers' preferences for three input methods—text prompts, annotations, and scribbles—through a preliminary digital paper-based study with seven professional designers. Designers preferred annotations for spatial adjustments and referencing in-image elements, while scribbles were favored for specifying attributes such as shape, size, and position, often combined with other methods. Text prompts excelled at providing detailed descriptions or when designers sought greater GenAI creativity. However, designers expressed concerns about AI misinterpreting annotations and scribbles and the effort needed to create effective text prompts. These insights inform GenAI interface design to better support refinement tasks, align with workflows, and enhance communication with AI systems.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Groups vs. Booking Websites: Investigating Collaborative Strategies Against Deceptive Patterns

Paul Miles Preuschoff (RWTH Aachen University, Aachen, Germany), Sarah Sahabi (RWTH Aachen University, Aachen, Germany), René Schäfer (RWTH Aachen University, Aachen, Germany), Lea Emilia Schirp (RWTH Aachen University, Aachen, Germany), Marcel Lahaye (RWTH Aachen University, Aachen, Germany), Jan Borchers (RWTH Aachen University, Aachen, Germany)


@inproceedings{Preuschoff2025GroupsVs,

title = {Groups vs. Booking Websites: Investigating Collaborative Strategies Against Deceptive Patterns},

author = {Paul Miles Preuschoff (RWTH Aachen University, Aachen, Germany), Sarah Sahabi (RWTH Aachen University, Aachen, Germany), René Schäfer (RWTH Aachen University, Aachen, Germany), Lea Emilia Schirp (RWTH Aachen University, Aachen, Germany), Marcel Lahaye (RWTH Aachen University, Aachen, Germany), Jan Borchers (RWTH Aachen University, Aachen, Germany)},

url = {https://hci.rwth-aachen.de, website

https://www.linkedin.com/in/paul-miles-preuschoff-6b2662238, linkedin},

doi = {10.1145/3706599.3720225},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Deceptive (or dark) patterns are interface design strategies widely used in apps and online services that manipulate users into making decisions against their best interests. Prior work has explored their effects on individuals and potential countermeasures. However, it remains unclear how groups of users handle deceptive patterns when they encounter them collectively, which is what we aimed to investigate. To this end, we observed seven groups of three users booking flights and rental cars together by browsing the same real websites on separate devices in the same room. We found users collaborating to address deceptive patterns by synchronizing, helping others, and making mutual comparisons. We provide first observations and insights into the strategies that emerge in groups dealing with deceptive patterns in social scenarios.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

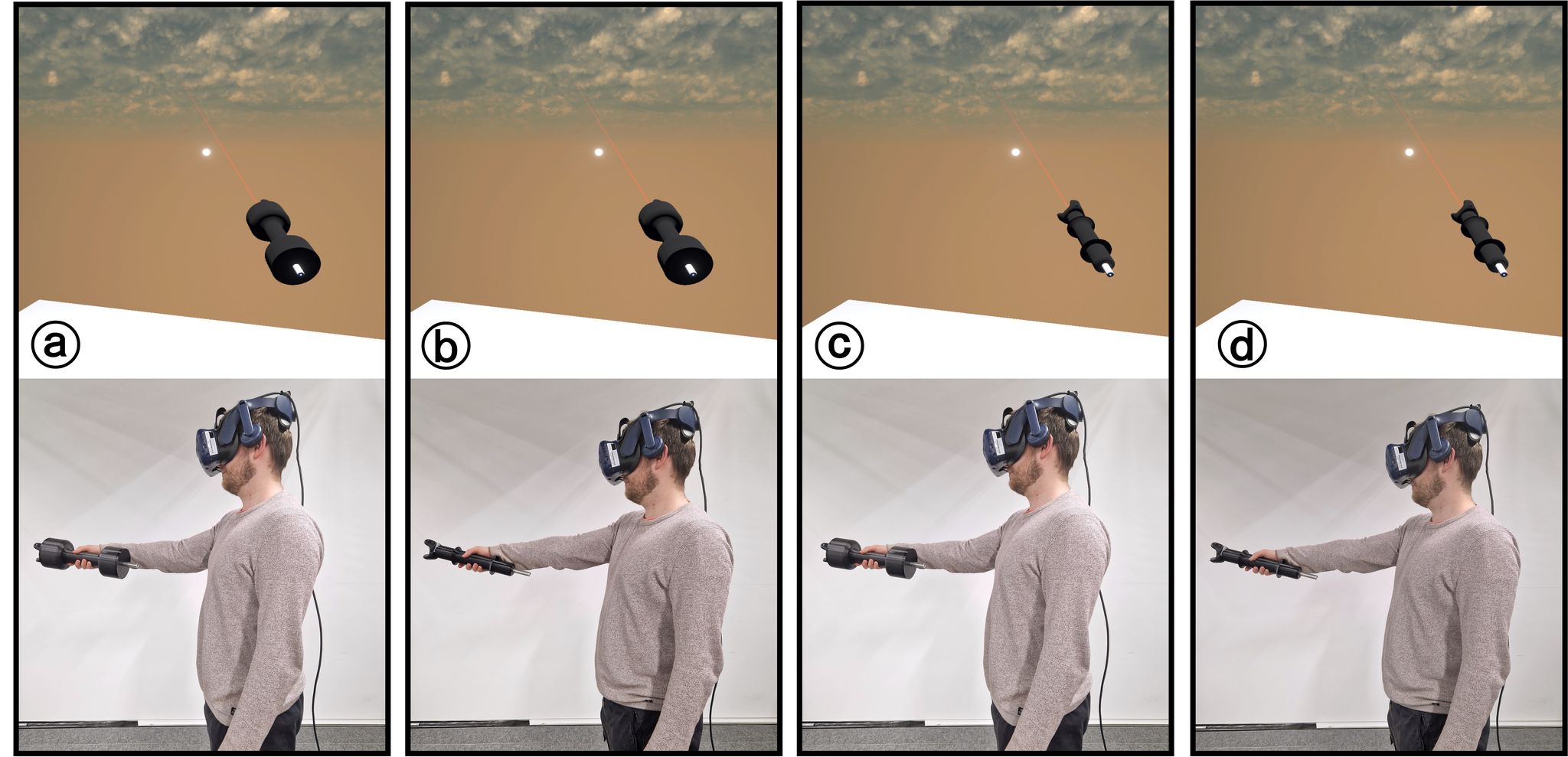

Heavy Looks, Slower Moves: Effects of Physical and Visual Object Weight on Pointing in Virtual Reality

Alexander Kalus (Berlin University of Applied Sciences and Technology), Michelle Lanzinger (University of Regensburg), Laurin Rolny (University of Regensburg), Benedikt Strasser (University of Regensburg), Katrin Wolf (Berlin University of Applied Sciences and Technology), Niels Henze (University of Regensburg), Johanna Bogon (University of Regensburg)


@inproceedings{Kalus2025HeavyLooks,

title = {Heavy Looks, Slower Moves: Effects of Physical and Visual Object Weight on Pointing in Virtual Reality},

author = {Alexander Kalus (Berlin University of Applied Sciences and Technology), Michelle Lanzinger (University of Regensburg), Laurin Rolny (University of Regensburg), Benedikt Strasser (University of Regensburg), Katrin Wolf (Berlin University of Applied Sciences and Technology), Niels Henze (University of Regensburg), Johanna Bogon (University of Regensburg)},

doi = {10.1145/3706599.3720211},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {In Virtual Reality (VR), users frequently interact through pointing movements. The accuracy and speed of such goal-directed movements depend on their estimated energy and time costs. In VR, vision is stimulated separately from tactile and kinesthetic sensory modalities. For instance, a virtual pointing device can be visually presented as heavy while being physically lightweight. Yet, it is unknown how visual and physical weight cues contribute to our strategy to optimize movements in terms of accuracy and speed. In a study with 32 participants, we found that physical weight (additional weight of the controller) increases precision and movement time when pointing in VR. Interestingly, we found that visually conveyed weight (additional volume of the controller's 3D model) also increases movement time, suggesting that adjusting the appearance of virtually held objects can affect the performance of pointing movements. We interpret our findings in light of motor-cognitive models and discuss implications for VR designers.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Mapping the Tool Landscape for Stakeholder Involvement in Participatory AI: Strengths, Gaps, and Future Directions

Emma Kallina (University of Cambridge; RC-Trust, UA Ruhr), Jat Singh (University of Cambridge; RC-Trust, UA Ruhr)


@inproceedings{Kallina2025MappingTool,

title = {Mapping the Tool Landscape for Stakeholder Involvement in Participatory AI: Strengths, Gaps, and Future Directions},

author = {Emma Kallina (University of Cambridge; RC-Trust, UA Ruhr), Jat Singh (University of Cambridge; RC-Trust, UA Ruhr)},

url = {https://rc-trust.ai/groups/compliant-and-accountable-systems, website

https://www.linkedin.com/company/rc-trust/posts/?feedView=all, lab's linkedin

https://www.linkedin.com/in/emma-kallina/, author's linkedin},

doi = {10.1145/3706599.3719726},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Stakeholder Involvement (SHI) during technology development is increasingly promoted in responsible AI (rAI) guidance. However, the lack of concrete, actionable tools to realise this is a well-known issue. To inform the future development of such SHI-supporting tools, this study presents an analysis of the existing tool landscape. We reviewed 216 rAI tools (covering seven meta-reviews, 2020-2024), revealing that only 18% provide actionable support for SHI. Mapping these tools to both the SHI process and the AI lifecycle, we found a strong focus on support when communicating with stakeholders, especially during early ideation stages. Tools supporting many other practical aspects of SHI were rare (e.g., recruiting stakeholders or storing gained insights), as were tools supporting reactions to gained insights or SHI during the testing stage. We point practitioners towards existing tool support and discuss the identified imbalances. To scope future work on the exposed gaps, we recommend concrete actions and immediate research agendas to facilitate rAI-aligned SHI.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

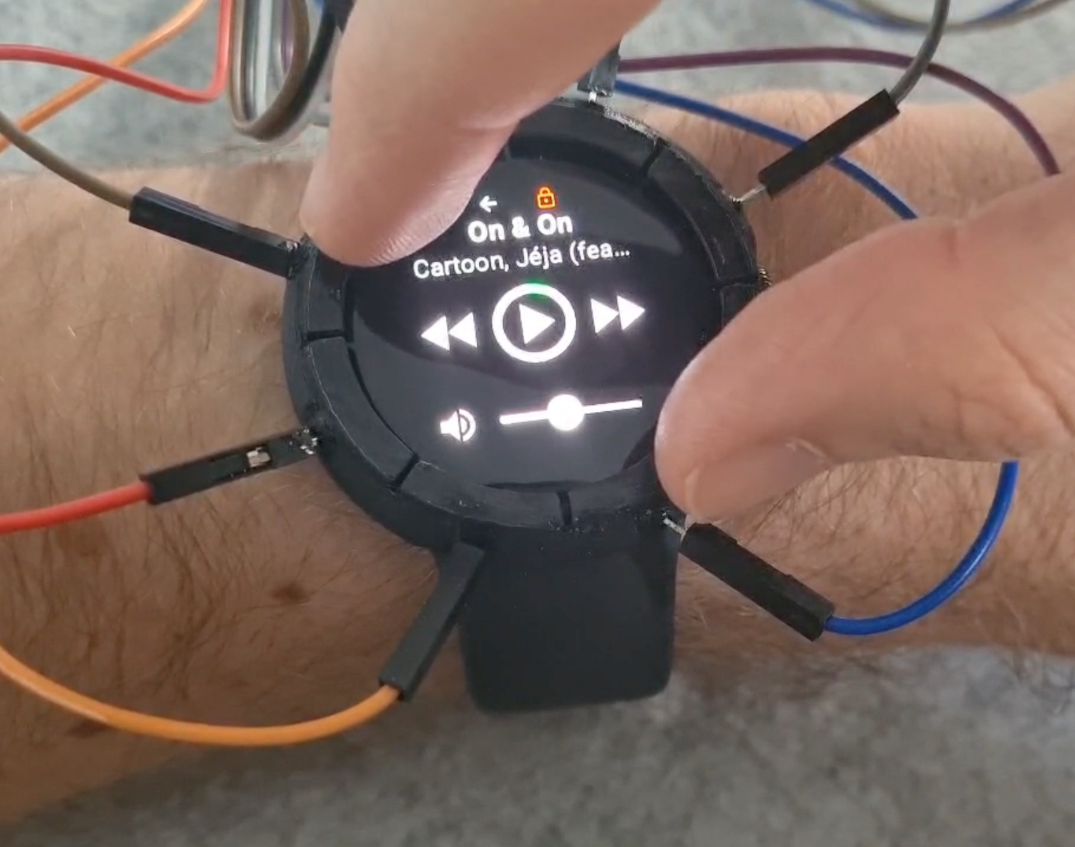

MultiBezel: Adding Multi-Touch to a Smartwatch Bezel to Control Music

Marvin Reuter (Technische Hochschule Köln), Ali Ünal (Technische Hochschule Köln), Jan Felipe Kolodziejski Ribeiro (Technische Hochschule Köln), David Petersen (Technische Hochschule Köln), Matthias Böhmer (Technische Hochschule Köln)


@inproceedings{Reuter2025Multibezel,

title = {MultiBezel: Adding Multi-Touch to a Smartwatch Bezel to Control Music},

author = {Marvin Reuter (Technische Hochschule Köln), Ali Ünal (Technische Hochschule Köln), Jan Felipe Kolodziejski Ribeiro (Technische Hochschule Köln), David Petersen (Technische Hochschule Köln), Matthias Böhmer (Technische Hochschule Köln)},

url = {http://moxd.io/, website

https://www.linkedin.com/in/marvin-reuter-90b624277/, author's linkedin

https://www.instagram.com/moxdlab/, instagram},

doi = {10.1145/3706599.3720156},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Limited screen space and reliance on touch input pose challenges for intuitive interaction on smartwatches, often leading to screen occlusion and hindered usability. To overcome these limitations, this paper presents MultiBezel, a novel multi-touch enabled bezel designed to enhance interaction on smartwatches. MultiBezel utilises a gesture-based interaction scheme, mapping distinct finger combinations to control the smartwatch. This approach shifts interaction away from the touchscreen, enabling new ways of eyes-free control. We developed a functional prototype that recognises up to three simultaneous touch points. This prototype demonstrates the feasibility of multi-touch bezel interaction for enhancing the smartwatch user experience. We contribute the hardware and software of our prototype as well as an implemented application for music control.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Participation User Experience: A Call to Better Manage the Most Important Resource in User-Centered Design

Melina Joline Heinisch (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg), Sara Wolf (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg), Franzisca Maas (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg), Stephan Huber (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg)


@inproceedings{Heinisch2025ParticipationUser,

title = {Participation User Experience: A Call to Better Manage the Most Important Resource in User-Centered Design},

author = {Melina Joline Heinisch (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg), Sara Wolf (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg), Franzisca Maas (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg), Stephan Huber (Lehrstuhl für Psychologische Ergonomie, Julius-Maximilians-Universität Würzburg)},

url = {https://www.mcm.uni-wuerzburg.de/psyergo/, website

https://www.linkedin.com/in/melina-heinisch, linkedin},

doi = {10.1145/3706599.3719918},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Participating users are the foundation of user-centered design. However, there is a limited understanding of their motivation, engagement, and experience participating in research. In this work, we propose the concept of Participation User Experience (PUX), which addresses participants' experiences in user-centered design. To set a scope for PUX, we conducted a reflexive thematic analysis on workshop data involving 20 experienced user-centered design practitioners and researchers. The analysis yielded five themes, making explicit the aspects of PUX that have so far been considered only implicitly, and how their consideration could be improved. Great potential lies in addressing intrinsic motivations over extrinsic incentives and developing more structured approaches to planning and measuring PUX to mitigate various sources of bias related to incentives or experimenter effects. We contribute to a first understanding of PUX, point to persisting research gaps, and present practical implications for improving participants' experiences in user-centered design.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Predicting Personality Traits from Hand-Tracking and Typing Behavior in Extended Reality under the Presence and Absence of Haptic Feedback

Jonathan Liebers (HCI Group, University of Duisburg-Essen), Felix Bernardi (HCI Group, University of Duisburg-Essen), Alia Saad (HCI Group, University of Duisburg-Essen), Lukas Mecke (LMU Munich), Uwe Gruenefeld (HCI Group, University of Duisburg-Essen), Florian Alt (LMU Munich), Stefan Schneegass (HCI Group, University of Duisburg-Essen)


@inproceedings{Liebers2025PredictingPersonality,

title = {Predicting Personality Traits from Hand-Tracking and Typing Behavior in Extended Reality under the Presence and Absence of Haptic Feedback},

author = {Jonathan Liebers (HCI Group, University of Duisburg-Essen), Felix Bernardi (HCI Group, University of Duisburg-Essen), Alia Saad (HCI Group, University of Duisburg-Essen), Lukas Mecke (LMU Munich), Uwe Gruenefeld (HCI Group, University of Duisburg-Essen), Florian Alt (LMU Munich), Stefan Schneegass (HCI Group, University of Duisburg-Essen)},

url = {hcigroup.de, website

https://x.com/hci_due, X

https://www.linkedin.com/in/jonathan-liebers-003b21305/, author's linkedin

https://www.facebook.com/HCIEssen, facebook},

doi = {10.1145/3706599.3720270},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {With the proliferation of Extended Realities (XR), it becomes increasingly important to create applications that adapt themselves to the user, which enhances User Experience (UX). One source that allows for adaptation is the user's behavior, which is implicitly captured on XR devices, such as their hand and finger movements during natural interactions. This data can be used to predict a user's personality traits, which allows the application to adapt to the user's needs. In this study (N=20), we explore personality prediction from hand-tracking and keystroke data during a typing activity in Augmented Virtuality and Virtual Reality. We manipulate the haptic elements, i.e., whether users type on a physical or virtual keyboard, and capture data from participants on two different days. We find that our best-performing model achieves an R² of 0.4456 and that the source of error primarily lies within the manifestation of XR.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Privacy Silence: Trust and Boundary-Setting in Mobile Phone Use Within Intimate Relationships

Sima Amirkhani (University of Siegen, Siegen, Germany, sima.amirkhani@uni-siegen.de), Farzaneh Gerami (Human Computer Interaction, University of Siegen, Siegen, NRW, Germany, farzaneh.gerami@student.uni-siegen.de), Mahla Alizadeh (Human-Computer Interaction, University of Siegen, Siegen, Germany, fatemeh.alizadeh@uni-siegen.de), Dave Randall (Information Systems and New Media, University of Siegen, Siegen, Germany), Gunnar Stevens (Information Systems, University of Siegen, Siegen, Germany)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{university2025PrivacySilence,

title = {Privacy Silence: Trust and Boundary-Setting in Mobile Phone Use Within Intimate Relationships},

author = {Sima Amirkhani (University of Siegen, Siegen, Germany, sima.amirkhani@uni-siegen.de), Farzaneh Gerami (Human Computer Interaction, University of Siegen, Siegen, NRW, Germany, farzaneh.gerami@student.uni-siegen.de), Mahla Alizadeh (Human-Computer Interaction, University of Siegen, Siegen, Germany, fatemeh.alizadeh@uni-siegen.de), Dave Randall (Information Systems and New Media, University of Siegen, Siegen, Germany), Gunnar Stevens (Information Systems, University of Siegen, Siegen, Germany)},

doi = {10.1145/3706599.3719752},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Privacy in intimate partnerships involves balancing personal autonomy with information sharing. Mobile phones, as personal yet relational tools, highlight the tension between openness and boundary maintenance. While much recent research has focused on toxic behaviors such as cyberstalking and technology-facilitated abuse, there has been less exploration of privacy practices in everyday relationships that are not characterized by unhealthy dynamics. To address this gap, we conducted 20 semi-structured interviews examining privacy boundary-setting in current or past relationships. Our findings reveal that many individuals opt for silence over direct confrontation, leading to the emergence of what we term "privacy silence"—a non-verbal approach to managing privacy that reflects and reinforces trust within intimate relationships. We advocate for developing privacy dialogue methods that encourage open, collaborative conversations about boundaries, fostering trust and mutual understanding without placing the burden on one partner or implying mistrust.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Reverse Vampire UI: Reflecting on AR Interaction with Smart Mirrors

Sebastian Rigling (University of Stuttgart), Ševal Avdic (University of Stuttgart), Muhammed Enes Özver (University of Stuttgart), Michael Sedlmair (University of Stuttgart)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Rigling2025ReverseVampire,

title = {Reverse Vampire UI: Reflecting on AR Interaction with Smart Mirrors},

author = {Sebastian Rigling (University of Stuttgart), Ševal Avdic (University of Stuttgart), Muhammed Enes Özver (University of Stuttgart), Michael Sedlmair (University of Stuttgart)},

url = {https://www.visus.uni-stuttgart.de/en/workinggroups/sedlmair-group/, website

https://www.linkedin.com/in/sebastian-rigling-a364aaa0/, author's linkedin},

doi = {10.1145/3706599.3719930},

year = {2025},

date = {2025-04-25},

urldate = {2025-04-25},

abstract = {Mirror surfaces can be used as information displays in smart homes and even for augmented reality (AR). The big advantage is the seamless integration of the visual output into the user's natural environment. However, user input poses a challenge. On the one hand, touch input would make the mirror dirty. On the other hand, mid-air gestures have proven to be less accurate, slower and more error-prone. We propose the use of an AR user interface (UI): Interactive UI elements are visible “on the other side of the mirror” and can be pressed by the user's reflection. We built a functional prototype and investigated whether this is a viable option for interacting with mirrors. In a pilot study, we compared the interaction with UI elements placed on three different planes relative to the mirror surface: behind the mirror (reflection), on the mirror (touch) and in front of the mirror (hologram).},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

Social Media Journeys – Mapping Platform Migration

Artur Solomonik (Center for Advanced Internet Studies), Hendrik Heuer (Center for Advanced Internet Studies, University of Wuppertal)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Solomonik2025SocialMedia,

title = {Social Media Journeys – Mapping Platform Migration},

author = {Artur Solomonik (Center for Advanced Internet Studies), Hendrik Heuer (Center for Advanced Internet Studies and University of Wuppertal)},

url = {https://www.cais-research.de/forschungsprogramm-vertrauenswurdige-intelligenz/, website https://www.linkedin.com/company/center-for-advanced-internet-studies/posts/?feedView=all, lab's linkedin

https://www.linkedin.com/in/hendrikheuer/, author's linkedin

https://bsky.app/profile/cais-research.bsky.social, bluesky},

doi = {10.1145/3706598.3713136},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {As people engage with the social media landscape, popular platforms rise and fall. As current research uncovers the experiences people have on various platforms, rarely do we engage with the sociotechnical migration processes when joining and leaving them. In this paper, we asked 32 visitors of a science communication festival to draw out artifacts that we call Social Media Journey Maps about the social media platforms they frequented, and why. By combining qualitative content analysis with a graph representation of Social Media Journeys, we present how social media migration processes are motivated by the interplay of environmental and platform factors. We find that peer-driven popularity, the timing of feature adoption, and personal perceptions of migration causes—such as security—shape individuals' reasoning for migrating between social media platforms. With this work, we aim to pave the way for future social media platforms that foster meaningful and enriching online experiences for users.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

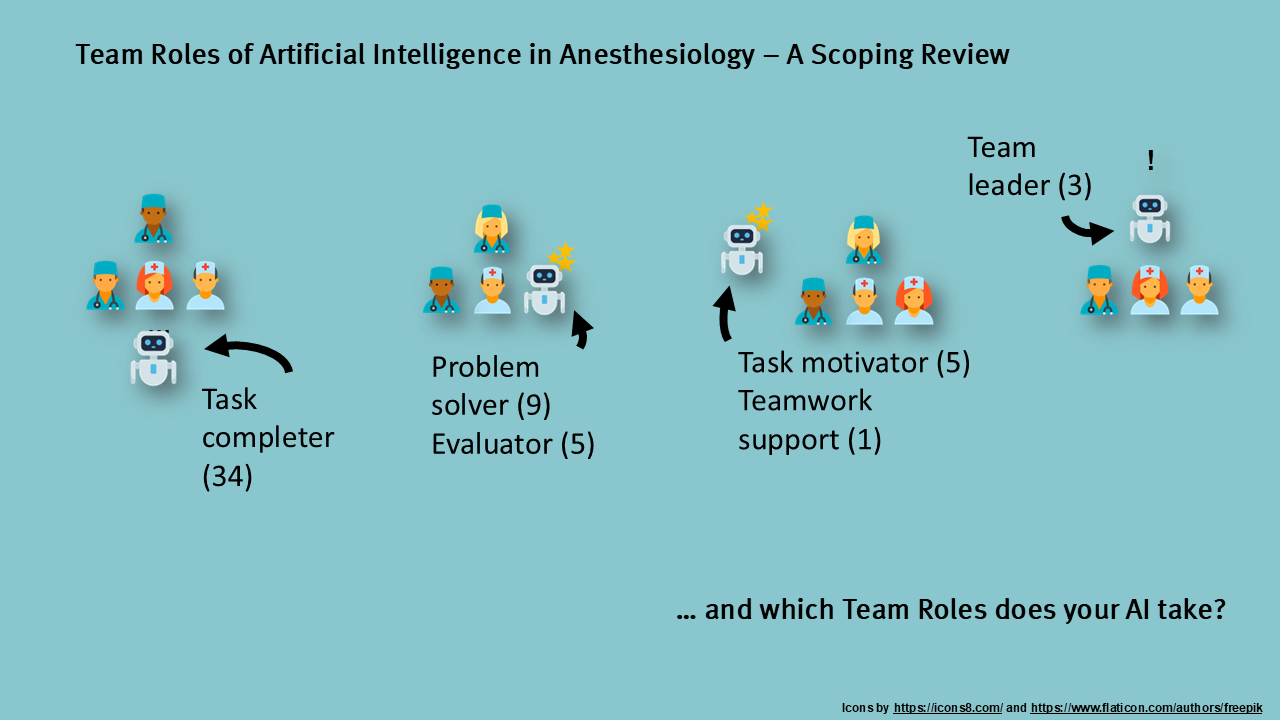

Team Roles of Artificial Intelligence in Anesthesiology – A Scoping Review

Stephan Huber (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany), Lea Weppert (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany), Lennart Baumeister (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany), Oliver Happel (University Hospital Würzburg, Germany), Tobias Grundgeiger (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Huber2025TeamRoles,

title = {Team Roles of Artificial Intelligence in Anesthesiology – A Scoping Review},

author = {Stephan Huber (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany), Lea Weppert (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany), Lennart Baumeister (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany), Oliver Happel (University Hospital Würzburg, Germany), Tobias Grundgeiger (Chair of Psychological Ergonomics, Julius-Maximilians-Universität Würzburg, Germany)},

url = {https://www.mcm.uni-wuerzburg.de/psyergo, website

https://www.linkedin.com/in/stephanhuber/, linkedin},

doi = {10.1145/3706599.3720186},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {When referring to the role of newly proposed clinical applications of artificial intelligence (AI), recent work uses the term Human-AI Team inflationarily. However, the roles foreseen for AI systems within teams remain unclear. We systematically reviewed the literature on AI deployment in anesthesiology and found that most AI system papers only describe algorithms. We identified 57 interactive systems and assigned six roles based on described behavior, tasks, and interactions. While the most prevalent role was task completer, some AI systems also served their team as problem solvers, evaluators, task motivators, or even teamwork support and team leaders. We contribute (1) a classification system for team roles, behaviors, tasks, and interactions of AI team members and (2) an overview of AI systems' team roles in anesthesiology. We conclude that (3) AI systems’ intended social roles within teams need to be more consciously reflected, shaped and clearly communicated to meet healthcare standards.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

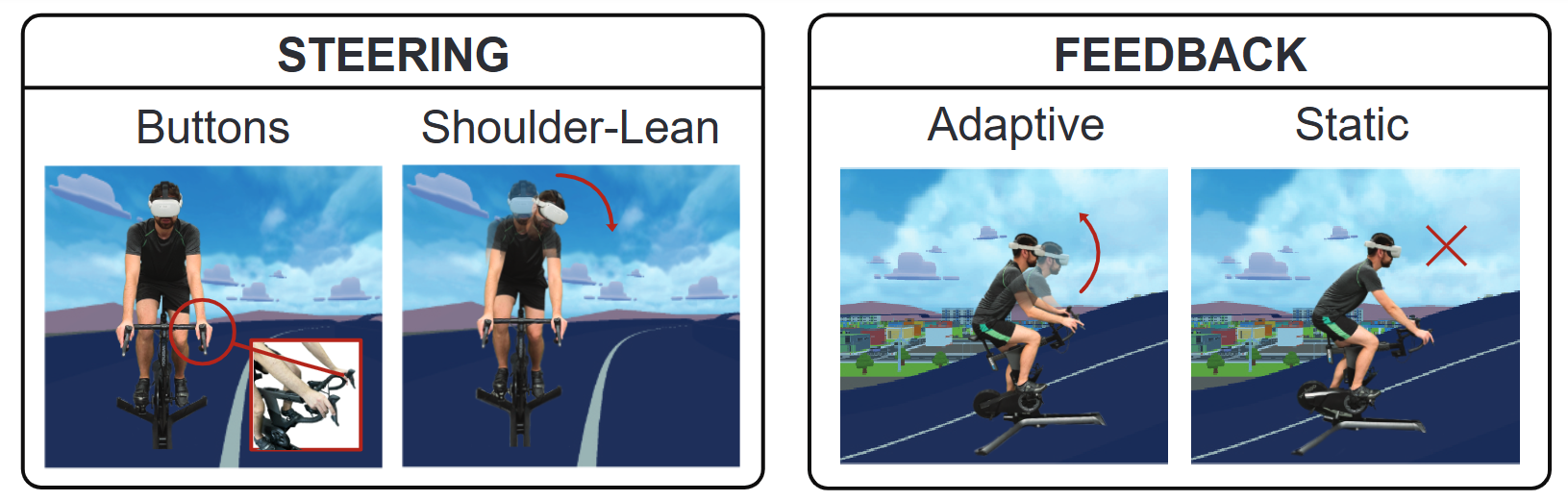

The Impact of Bike-Based Controllers and Adaptive Feedback on Immersion and Enjoyment in a Virtual Reality Cycling Exergame

Jonas Keppel (University of Duisburg-Essen), Marvin Strauss (University of Duisburg-Essen), Shuoheng Zhang (University of Duisburg-Essen), Markus Stroehnisch (University of Duisburg-Essen), Stefan Lewin (University of Duisburg-Essen), Uwe Gruenefeld (GenerIO), Donald Degraen (University of Canterbury), David Goedicke (University of Duisburg-Essen), Andrii Matviienko (KTH Royal Institute of Technology), Stefan Schneegass (University of Duisburg-Essen)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Keppel2025ImpactBikebased,

title = {The Impact of Bike-Based Controllers and Adaptive Feedback on Immersion and Enjoyment in a Virtual Reality Cycling Exergame},

author = {Jonas Keppel (University of Duisburg-Essen), Marvin Strauss (University of Duisburg-Essen), Shuoheng Zhang (University of Duisburg-Essen), Markus Stroehnisch (University of Duisburg-Essen), Stefan Lewin (University of Duisburg-Essen), Uwe Gruenefeld (GenerIO), Donald Degraen (University of Canterbury), David Goedicke (University of Duisburg-Essen), Andrii Matviienko (KTH Royal Institute of Technology), Stefan Schneegass (University of Duisburg-Essen)},

url = {https://hci.informatik.uni-due.de/, website

https://de.linkedin.com/company/hci-group-essen, linkedin

https://x.com/hci_due, X

https://www.facebook.com/HCIEssen, facebook

https://youtu.be/O8sMQxPRsnM, video},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Cycling exergames can increase enjoyment and promote high energy expenditure, making exercise more engaging and, therefore, supporting healthier lifestyles. To improve player experience in a virtual reality cycling exergame using a stationary bike, we investigated how different input and output techniques affect player engagement. We implemented a bike-based controller integrating button and shoulder-lean steering as input, combined with or without adaptive changes in bike inclination and resistance as output. The results of our study with 24 participants indicate that adaptive modes increase effort and perceived exertion. While button steering provides better pragmatic quality, shoulder-lean steering offers a more hedonic experience but requires more skill and effort. Still, this greater enjoyment fosters higher engagement, particularly when players enter a flow state where the increased physical demands become less noticeable. These findings underscore the potential of bike-based adaptive controllers to maximize player engagement and enhance VR cycling exergame experiences.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}

User Narrative Study for Dealing with Deceptive Chatbot Scams Aiming to Online Fraud

Omid Veisi (University of Toronto), Khoshnaz Kazemian (University of Siegen), Farzaneh Gerami (University of Siegen), Mahya Mirzaee Kharghani (University of Siegen), Sima Amirkhani (University of Siegen), Delong K. Du (University of Siegen), Gunnar Stevens (University of Siegen), Alexander Boden (Bonn-Rhein-Sieg University of Applied Sciences)

Abstract | Tags: Late Breaking Work | Links:

@inproceedings{Veisi2025UserNarrative,

title = {User Narrative Study for Dealing with Deceptive Chatbot Scams Aiming to Online Fraud},

author = {Omid Veisi (University of Toronto), Khoshnaz Kazemian (University of Siegen), Farzaneh Gerami (University of Siegen), Mahya Mirzaee Kharghani (University of Siegen), Sima Amirkhani (University of Siegen), Delong K. Du (University of Siegen), Gunnar Stevens (University of Siegen), Alexander Boden (Bonn-Rhein-Sieg University of Applied Sciences)},

url = {https://www.verbraucherinformatik.de/en/team-en/, website https://www.linkedin.com/showcase/institut-f%C3%BCr-verbraucherinformatik/posts/?feedView=all, lab's linkedin

https://www.linkedin.com/in/delong-du/, author's linkedin

https://www.itsec.wiwi.uni-siegen.de/, social media},

doi = {10.1145/3706599.3720152},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Chatbots have become an integral part of everyday life, making it increasingly important to conduct empirical research on their use in the context of online scams. This study explores users' perceptions of deception by advanced chatbots based on semi-structured interviews with 16 individuals who believe they were victims of scam bots. The preliminary findings examine how chatbots can convincingly mimic human conversation, leading to deception. Results show how participants identified distinct differences in communication styles—such as mechanical responses, repetitive messaging, and emotional flatness—that shaped their perceptions and suspicions of interacting with automated chatbots rather than authentic humans.},

keywords = {Late Breaking Work},

pubstate = {published},

tppubtype = {inproceedings}

}