We are curating a list of this year’s publications, including links to social media, lab websites, and supplemental material. Currently, the list includes 68 full papers, 23 late-breaking works (LBWs), three journal papers, one alt.chi paper, two SIGs, two case studies, one Interactivity, and one Student Game Competition entry, and we are leading three workshops. One paper received a Best Paper award and 14 papers received Honorable Mentions.

Disclaimer: This list is not yet complete, and some DOIs may not resolve yet.

Is your 2025 publication missing? Please enter its details in this Google Form and then email us at contact@germanhci.de to let us know you added it.

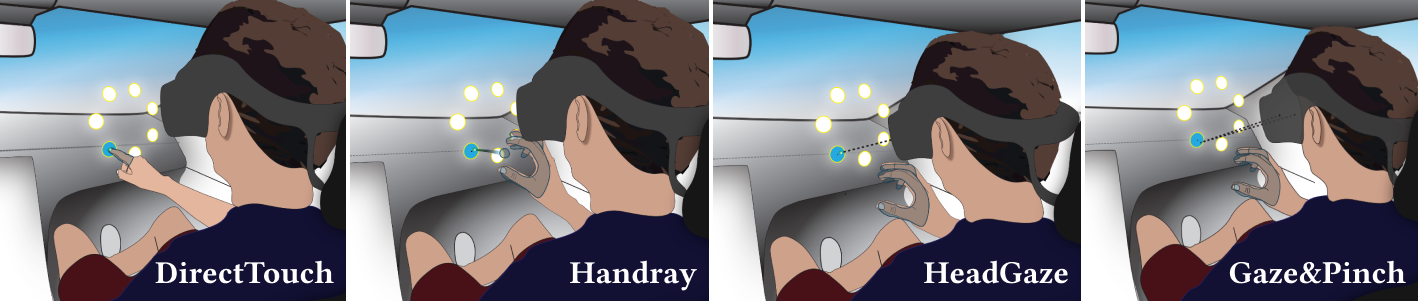

Bumpy Ride? Understanding the Effects of External Forces on Spatial Interactions in Moving Vehicles

Markus Sasalovici (Mercedes-Benz Tech Motion GmbH, Ulm University), Albin Zeqiri (Ulm University), Robin Connor Schramm (Mercedes-Benz Tech Motion GmbH, RheinMain University of Applied Sciences), Oscar Javier Ariza Nunez (Mercedes-Benz Tech Motion GmbH), Pascal Jansen (Ulm University), Jann Philipp Freiwald (Mercedes-Benz Tech Motion GmbH), Mark Colley (Ulm University, UCL), Christian Winkler (Mercedes-Benz Tech Motion GmbH), Enrico Rukzio (Ulm University)

Tags: Full Paper, Mixed Reality

@inproceedings{Sasalovici2025BumpyRide,

title = {Bumpy Ride? Understanding the Effects of External Forces on Spatial Interactions in Moving Vehicles},

author = {Markus Sasalovici (Mercedes-Benz Tech Motion GmbH, Ulm University), Albin Zeqiri (Ulm University), Robin Connor Schramm (Mercedes-Benz Tech Motion GmbH, RheinMain University of Applied Sciences), Oscar Javier Ariza Nunez (Mercedes-Benz Tech Motion GmbH), Pascal Jansen (Ulm University), Jann Philipp Freiwald (Mercedes-Benz Tech Motion GmbH), Mark Colley (Ulm University, UCL), Christian Winkler (Mercedes-Benz Tech Motion GmbH), Enrico Rukzio (Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, website

https://cloudstore.uni-ulm.de/s/MWfsPFrBzL93Z4m, teaser video

https://www.linkedin.com/in/markus-sasalovici-b979981a6/, linkedin},

doi = {10.1145/3706598.3714077},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {As the use of Head-Mounted Displays in moving vehicles increases, passengers can immerse themselves in visual experiences independent of their physical environment. However, interaction methods are susceptible to physical motion, leading to input errors and reduced task performance. This work investigates the impact of G-forces, vibrations, and unpredictable maneuvers on 3D interaction methods. We conducted a field study with 24 participants in both stationary and moving vehicles to examine the effects of vehicle motion on four interaction methods: (1) Gaze&Pinch, (2) DirectTouch, (3) Handray, and (4) HeadGaze. Participants performed selections in a Fitts' Law task. Our findings reveal a significant effect of vehicle motion on interaction accuracy and duration across the tested combinations of Interaction Method x Road Type x Curve Type. We found a significant impact of movement on throughput, error rate, and perceived workload. Finally, we propose future research considerations and recommendations on interaction methods during vehicle movement.},

keywords = {Full Paper, Mixed Reality},

pubstate = {published},

tppubtype = {inproceedings}

}

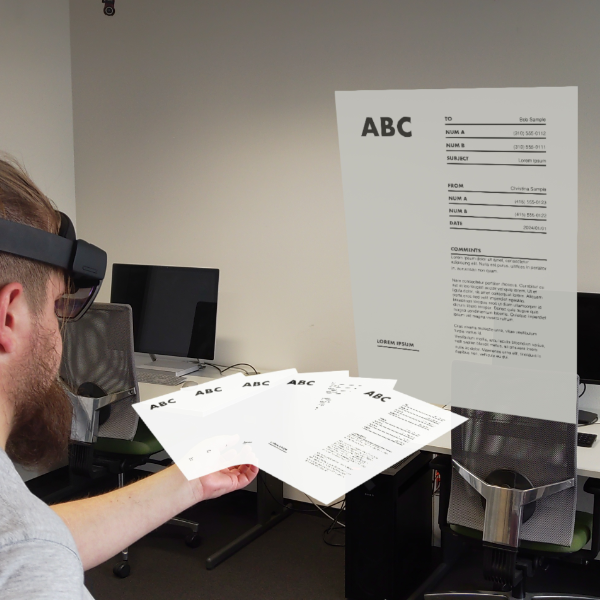

Documents in Your Hands: Exploring Interaction Techniques for Spatial Arrangement of Augmented Reality Documents

Weizhou Luo (Interactive Media Lab Dresden, TUD Dresden University of Technology), Mats Ole Ellenberg (Interactive Media Lab Dresden, TUD Dresden University of Technology, Centre for Tactile Internet with Human-in-the-Loop (CeTI), TUD Dresden University of Technology), Marc Satkowski (Interactive Media Lab Dresden, TUD Dresden University of Technology), Raimund Dachselt (Interactive Media Lab Dresden, TUD Dresden University of Technology, Centre for Tactile Internet with Human-in-the-Loop (CeTI), TUD Dresden University of Technology, Centre for Scalable Data Analytics and Artificial Intelligence (ScaDS.AI), Dresden/Leipzig)

Tags: Full Paper, Mixed Reality

@inproceedings{Luo2025DocumentsYour,

title = {Documents in Your Hands: Exploring Interaction Techniques for Spatial Arrangement of Augmented Reality Documents},

author = {Weizhou Luo (Interactive Media Lab Dresden, TUD Dresden University of Technology), Mats Ole Ellenberg (Interactive Media Lab Dresden, TUD Dresden University of Technology, Centre for Tactile Internet with Human-in-the-Loop (CeTI), TUD Dresden University of Technology), Marc Satkowski (Interactive Media Lab Dresden, TUD Dresden University of Technology), Raimund Dachselt (Interactive Media Lab Dresden, TUD Dresden University of Technology, Centre for Tactile Internet with Human-in-the-Loop (CeTI), TUD Dresden University of Technology, Centre for Scalable Data Analytics and Artificial Intelligence (ScaDS.AI), Dresden/Leipzig)},

url = {https://drive.google.com/file/d/1zlcyKIexrXMUVfsb5umWsOWmFkDzLopY/view?usp=share_link, full video

https://imld.de/en/, website

https://de.linkedin.com/company/interactive-media-lab-dresden, research group linkedin

https://de.linkedin.com/in/weizhou-luo-8ab457bb, author linkedin},

doi = {10.1145/3706598.3713518},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Augmented Reality (AR) promises to enhance daily office activities involving numerous textual documents, slides, and spreadsheets by expanding workspaces and enabling more direct interaction. However, there is a lack of systematic understanding of how knowledge workers can manage multiple documents and organize, explore, and compare them in AR environments. Therefore, we conducted a user-centered design study (N = 21) using predefined spatial document layouts in AR to elicit interaction techniques, resulting in 790 observation notes. Thematic analysis identified various interaction methods for aggregating, distributing, transforming, inspecting, and navigating document collections. Based on these findings, we propose a design space and distill design implications for AR document arrangement systems, such as enabling body-anchored storage, facilitating layout spreading and compressing, and designing interactions for layout transformation. To demonstrate their usage, we developed a rapid prototyping system and exemplify three envisioned scenarios. With this, we aim to inspire the design of future immersive offices.},

keywords = {Full Paper, Mixed Reality},

pubstate = {published},

tppubtype = {inproceedings}

}