We are in the process of curating a list of this year’s publications, including links to social media, lab websites, and supplemental material. Currently, the list includes 68 full papers, 23 LBWs, three journal papers, one alt.chi paper, two SIGs, two Case Studies, one Interactivity, and one Student Game Competition; we also lead three workshops. One paper received a Best Paper Award and 13 papers received an Honorable Mention.

Disclaimer: This list is not complete yet; the DOIs might not be working yet.

Is your publication from 2025 missing? Please enter the details in this Google Form and send us an email to let us know that you added a publication: contact@germanhci.de

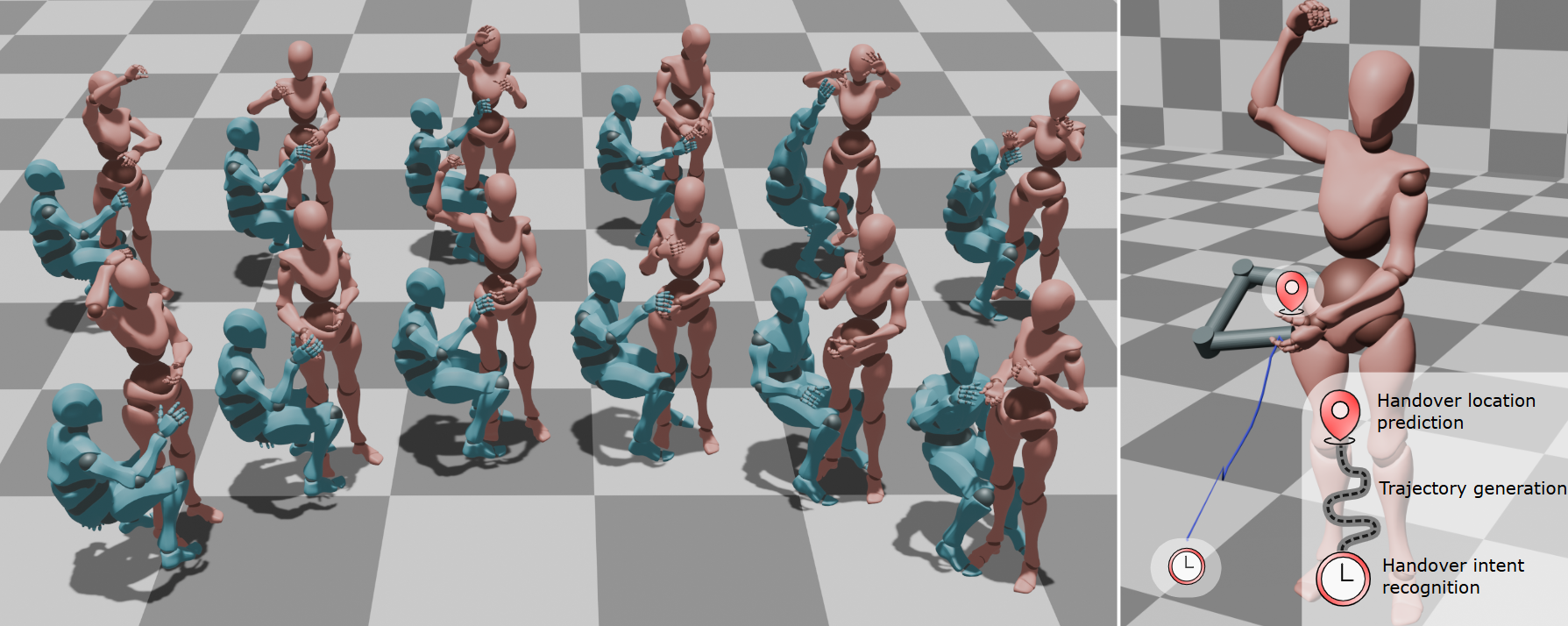

3HANDS Dataset: Learning from Humans for Generating Naturalistic Handovers with Supernumerary Robotic Limbs

Artin Saberpour (Saarland Informatics Campus), Yi-Chi Liao (ETH), Ata Otaran (Saarland Informatics Campus), Rishabh Dabral (Max-Planck Institute for Informatics), Marie Muehlhaus (Saarland Informatics Campus), Christian Theobalt (Max-Planck Institute for Informatics), Martin Schmitz (Saarland Informatics Campus), Juergen Steimle (Saarland Informatics Campus)

Abstract | Tags: Full Paper, Robotics | Links:

@inproceedings{Saberpour20253HandsDataset,

title = {3HANDS Dataset: Learning from Humans for Generating Naturalistic Handovers with Supernumerary Robotic Limbs},

author = {Artin Saberpour (Saarland Informatics Campus), Yi-Chi Liao (ETH), Ata Otaran (Saarland Informatics Campus), Rishabh Dabral (Max-Planck Institute for Informatics), Marie Muehlhaus (Saarland Informatics Campus), Christian Theobalt (Max-Planck Institute for Informatics), Martin Schmitz (Saarland Informatics Campus), Juergen Steimle (Saarland Informatics Campus)},

url = {https://hci.cs.uni-saarland.de/, website},

doi = {10.1145/3706598.3713306},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Supernumerary robotic limbs are robotic structures integrated closely with the user's body, which augment human physical capabilities and necessitate seamless, naturalistic human-machine interaction. For effective assistance in physical tasks, enabling SRLs to hand over objects to humans is crucial. Yet, designing heuristic-based policies for robots is time-consuming, difficult to generalize across tasks, and results in less human-like motion. When trained with proper datasets, generative models are powerful alternatives for creating naturalistic handover motions. We introduce 3HANDS, a novel dataset of object handover interactions between a participant performing a daily activity and another participant enacting a hip-mounted SRL in a naturalistic manner. 3HANDS captures the unique characteristics of SRL interactions: operating in intimate personal space with asymmetric object origins, implicit motion synchronization, and the user’s engagement in a primary task during the handover. To demonstrate the effectiveness of our dataset, we present three models: one that generates naturalistic handover trajectories, another that determines the appropriate handover endpoints, and a third that predicts the moment to initiate a handover. In a user study (N=10), we compare the handover interaction performed with our method compared to a baseline. The findings show that our method was perceived as significantly more natural, less physically demanding, and more comfortable.},

keywords = {Full Paper, Robotics},

pubstate = {published},

tppubtype = {inproceedings}

}

Developing and Validating the Perceived System Curiosity Scale (PSC): Measuring Users' Perceived Curiosity of Systems

Jan Leusmann (LMU Munich), Steeven Villa (LMU Munich), Burak Berberoglu (LMU Munich), Chao Wang (Honda Research Institute Europe), Sven Mayer (LMU Munich)

Honorable Mention | Abstract | Tags: Full Paper, Honorable Mention, Robotics | Links:

@inproceedings{Leusmann2025DevelopingValidating,

title = {Developing and Validating the Perceived System Curiosity Scale (PSC): Measuring Users' Perceived Curiosity of Systems},

author = {Jan Leusmann (LMU Munich), Steeven Villa (LMU Munich), Burak Berberoglu (LMU Munich), Chao Wang (Honda Research Institute Europe), Sven Mayer (LMU Munich)},

url = {https://www.medien.ifi.lmu.de/, website

https://www.linkedin.com/search/results/all/?fetchDeterministicClustersOnly=true&heroEntityKey=urn%3Ali%3Aorganization%3A93636029&keywords=lmu%20media%20informatics%20group&origin=RICH_QUERY_SUGGESTION&position=1&searchId=730aeabf-245a-4e69-af0b-ee7df6bed910&sid=GuZ&spellCorrectionEnabled=false, lab's linkedin

https://www.linkedin.com/in/jan-leusmann/, author's linkedin},

doi = {10.1145/3706598.3713087},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Like humans, today's systems, such as robots and voice assistants, can express curiosity to learn and engage with their surroundings. While curiosity is a well-established human trait that enhances social connections and drives learning, no existing scales assess the perceived curiosity of systems. Thus, we introduce the Perceived System Curiosity (PSC) scale to determine how users perceive curious systems. We followed a standardized process of developing and validating scales, resulting in a validated 12-item scale with 3 individual sub-scales measuring explorative, investigative, and social dimensions of system curiosity. In total, we generated 831 items based on literature and recruited 414 participants for item selection and 320 additional participants for scale validation. Our results show that the PSC scale has inter-item reliability and convergent and construct validity. Thus, this scale provides an instrument to explore how perceived curiosity influences interactions with technical systems systematically.},

keywords = {Full Paper, Honorable Mention, Robotics},

pubstate = {published},

tppubtype = {inproceedings}

}

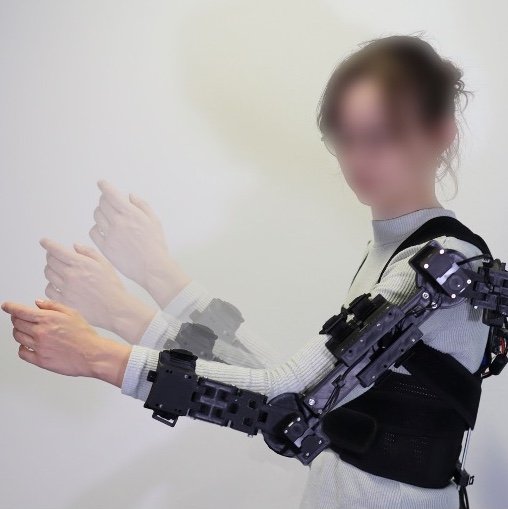

ExoKit: A Toolkit for Rapid Prototyping of Interactions for Arm-based Exoskeletons

Marie Muehlhaus (Saarland University), Alexander Liggesmeyer (Saarland University), Jürgen Steimle (Saarland University)

Abstract | Tags: Full Paper, Robotics | Links:

@inproceedings{Muehlhaus2025Exokit,

title = {ExoKit: A Toolkit for Rapid Prototyping of Interactions for Arm-based Exoskeletons},

author = {Marie Muehlhaus (Saarland University), Alexander Liggesmeyer (Saarland University), Jürgen Steimle (Saarland University)},

url = {https://hci.cs.uni-saarland.de, website

https://www.linkedin.com/company/saarhcilab/, research group linkedin

https://www.linkedin.com/in/marie-mühlhaus-21b2b8202/, author's linkedin},

doi = {10.1145/3706598.3713815},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Exoskeletons open up a unique interaction space that seamlessly integrates users' body movements with robotic actuation. Despite its potential, human-exoskeleton interaction remains an underexplored area in HCI, largely due to the lack of accessible prototyping tools that enable designers to easily develop exoskeleton designs and customized interactive behaviors. We present ExoKit, a do-it-yourself toolkit for rapid prototyping of low-fidelity, functional exoskeletons targeted at novice roboticists. ExoKit includes modular hardware components for sensing and actuating shoulder and elbow joints, which are easy to fabricate and (re)configure for customized functionality and wearability. To simplify the programming of interactive behaviors, we propose functional abstractions that encapsulate high-level human-exoskeleton interactions. These can be readily accessed either through ExoKit’s command-line or graphical user interface, a Processing library, or microcontroller firmware, each targeted at different experience levels. Findings from implemented application cases and two usage studies demonstrate the versatility and accessibility of ExoKit for early-stage interaction design.},

keywords = {Full Paper, Robotics},

pubstate = {published},

tppubtype = {inproceedings}

}

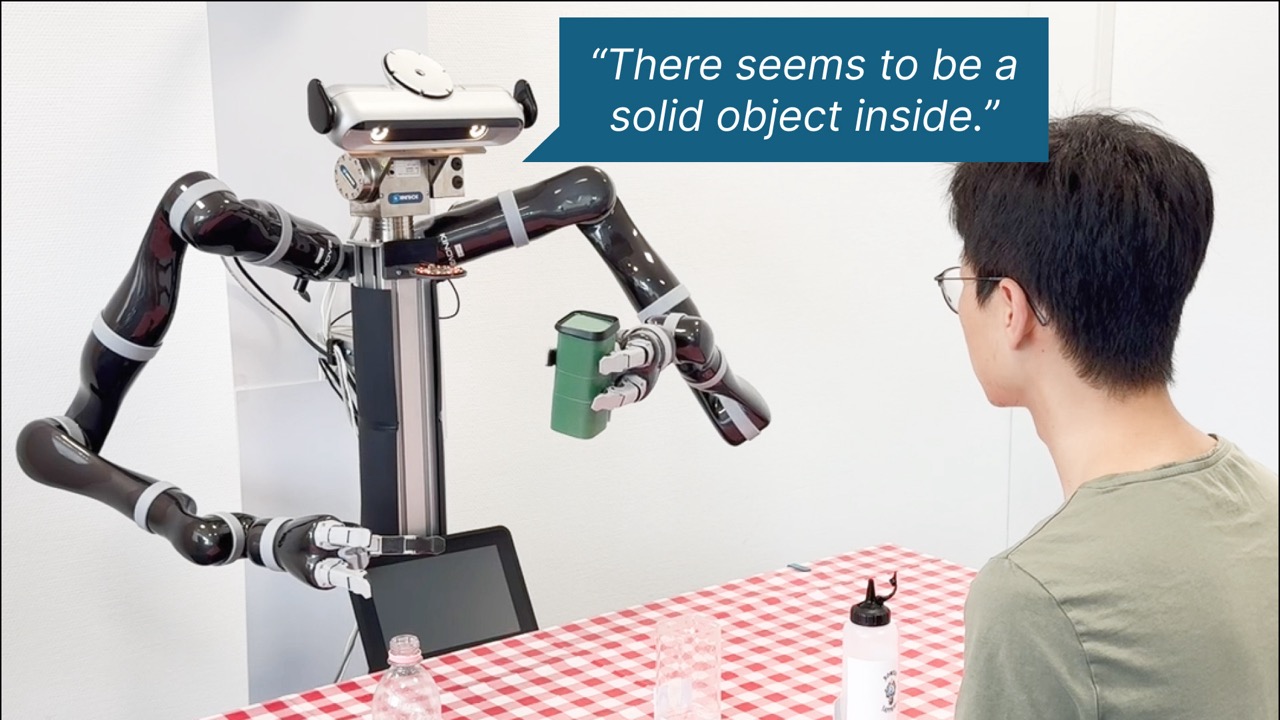

Investigating LLM-Driven Curiosity in Human-Robot Interaction

Jan Leusmann (LMU Munich), Anna Belardinelli (Honda Research Institute Europe), Luke Haliburton (LMU Munich), Stephan Hasler (Honda Research Institute Europe), Albrecht Schmidt (LMU Munich), Sven Mayer (LMU Munich), Michael Gienger (Honda Research Institute Europe), Chao Wang (Honda Research Institute Europe)

Abstract | Tags: Full Paper, Robotics | Links:

@inproceedings{Leusmann2025InvestigatingLlmdriven,

title = {Investigating LLM-Driven Curiosity in Human-Robot Interaction},

author = {Jan Leusmann (LMU Munich), Anna Belardinelli (Honda Research Institute Europe), Luke Haliburton (LMU Munich), Stephan Hasler (Honda Research Institute Europe), Albrecht Schmidt (LMU Munich), Sven Mayer (LMU Munich), Michael Gienger (Honda Research Institute Europe), Chao Wang (Honda Research Institute Europe)},

url = {https://www.medien.ifi.lmu.de/, website https://www.linkedin.com/company/lmu-media-informatics-group/posts/?feedView=all, lab's linkedin

https://www.linkedin.com/in/jan-leusmann/, author's linkedin},

doi = {10.1145/3706598.3713923},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Integrating curious behavior traits into robots is essential for them to learn and adapt to new tasks over their lifetime and to enhance human-robot interaction. However, the effects of robots expressing curiosity on user perception, user interaction, and user experience in collaborative tasks are unclear. In this work, we present a Multimodal Large Language Model-based system that equips a robot with non-verbal and verbal curiosity traits. We conducted a user study (N=20) to investigate how these traits modulate the robot's behavior and the users' impressions of sociability and quality of interaction. Participants prepared cocktails or pizzas with a robot, which was either curious or non-curious. Our results show that we could create user-centric curiosity, which users perceived as more human-like, inquisitive, and autonomous while resulting in a longer interaction time. We contribute a set of design recommendations allowing system designers to take advantage of curiosity in collaborative tasks.},

keywords = {Full Paper, Robotics},

pubstate = {published},

tppubtype = {inproceedings}

}

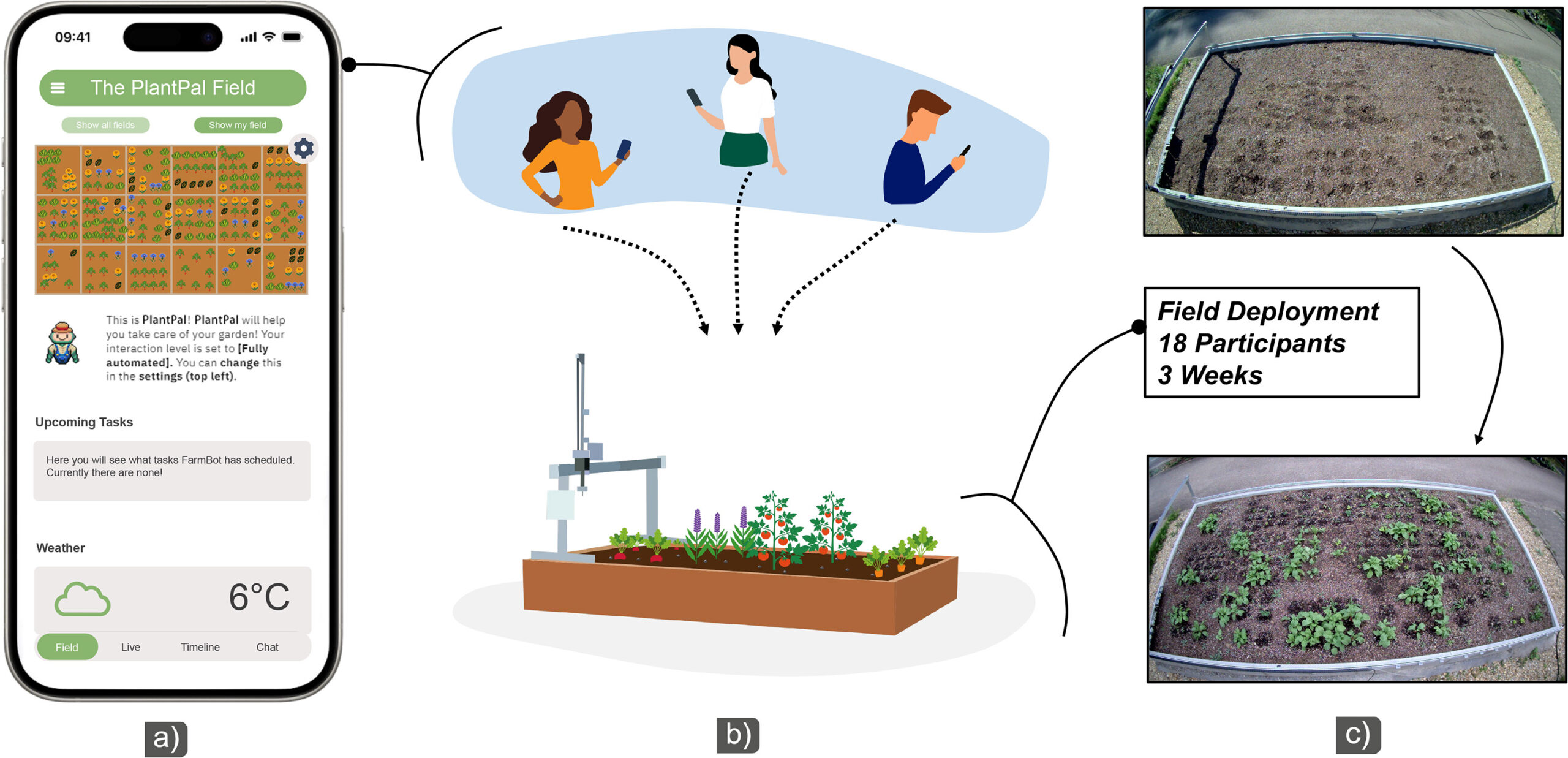

PlantPal: Leveraging Precision Agriculture Robots to Facilitate Remote Engagement in Urban Gardening

Albin Zeqiri (Ulm University), Julian Britten (Ulm University), Clara Schramm (Ulm University), Pascal Jansen (Ulm University), Michael Rietzler (Ulm University), Enrico Rukzio (Ulm University)

Abstract | Tags: Full Paper, Robotics | Links:

@inproceedings{Zeqiri2025Plantpal,

title = {PlantPal: Leveraging Precision Agriculture Robots to Facilitate Remote Engagement in Urban Gardening},

author = {Albin Zeqiri (Ulm University), Julian Britten (Ulm University), Clara Schramm (Ulm University), Pascal Jansen (Ulm University), Michael Rietzler (Ulm University), Enrico Rukzio (Ulm University)},

url = {https://www.uni-ulm.de/en/in/mi/hci/, website

https://www.linkedin.com/in/albinzeq/, linkedin},

doi = {10.1145/3706598.3713180},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Urban gardening is widely recognized for its numerous health and environmental benefits. However, the lack of suitable garden spaces, demanding daily schedules and limited gardening expertise present major roadblocks for citizens looking to engage in urban gardening. While prior research has explored smart home solutions to support urban gardeners, these approaches currently do not fully address these practical barriers. In this paper, we present PlantPal, a system that enables the cultivation of garden spaces irrespective of one's location, expertise level, or time constraints. PlantPal enables the shared operation of a precision agriculture robot (PAR) that is equipped with garden tools and a multi-camera system. Insights from a 3-week deployment (N=18) indicate that PlantPal facilitated the integration of gardening tasks into daily routines, fostered a sense of connection with one's field, and provided an engaging experience despite the remote setting. We contribute design considerations for future robot-assisted urban gardening concepts.},

keywords = {Full Paper, Robotics},

pubstate = {published},

tppubtype = {inproceedings}

}