We are curating a list of this year’s publications, including links to social media, lab websites, and supplemental material. Currently, the list contains 65 full papers, 22 LBWs, three journal papers, one alt.chi paper, two SIGs, two Case Studies, one Interactivity, and one Student Game Competition entry; we also lead two workshops. Thirteen papers received an honorable mention.

Disclaimer: This list is not yet complete, and some DOIs might not resolve yet.

Is your 2025 publication missing? Please enter the details in this Google Form and send us an email to let us know that you added a publication: contact@germanhci.de

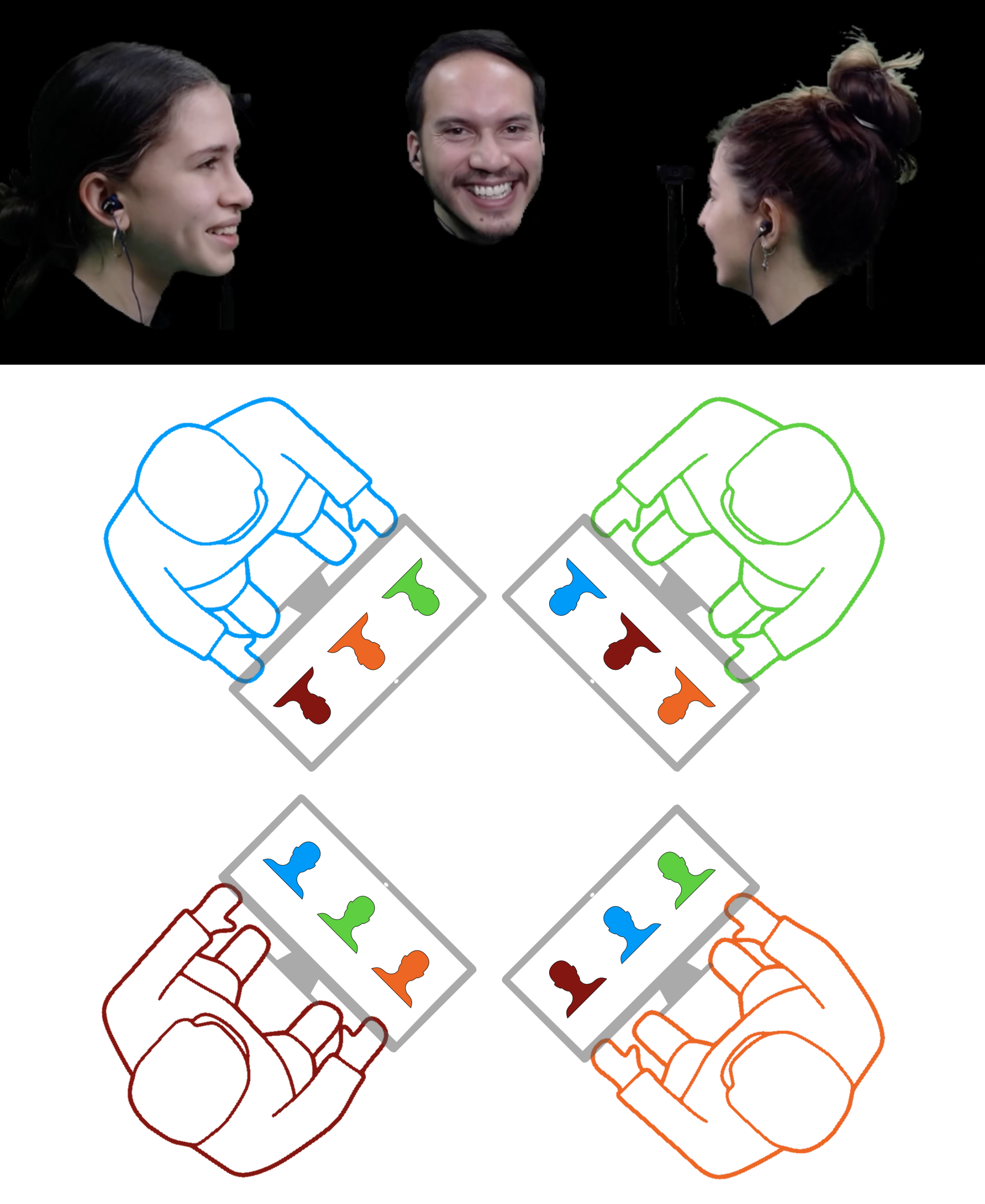

Gazing Heads: Investigating Gaze Perception in Video-Mediated Communication

Martin Schuessler (University of Heidelberg), Luca Hormann (University of Heidelberg), Raimund Dachselt (Technische Universität Dresden), Andrew Blake (Clare Hall, University of Cambridge), Carsten Rother (University of Heidelberg)

Tags: Gaze & Visual Perception, Journal

@inproceedings{Schuessler2025GazingHeads,

title = {Gazing Heads: Investigating Gaze Perception in Video-Mediated Communication},

author = {Martin Schuessler (University of Heidelberg), Luca Hormann (University of Heidelberg), Raimund Dachselt (Technische Universität Dresden), Andrew Blake (Clare Hall, University of Cambridge), Carsten Rother (University of Heidelberg)},

url = {https://imld.de/en/, website

https://de.linkedin.com/company/interactive-media-lab-dresden, research group linkedin

https://de.linkedin.com/in/raimund-dachselt-b2a16b1a0, author's linkedin},

doi = {10.1145/3660343},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Videoconferencing has become a ubiquitous medium for collaborative work. It does suffer however from various drawbacks such as zoom fatigue. This paper addresses the quality of user experience by exploring an enhanced system concept with the capability of conveying gaze and attention. Gazing Heads is a round-table virtual meeting concept that uses only a single screen per participant. It enables direct eye contact, and signals gaze via controlled head rotation. The technology to realise this novel concept is not quite mature though, so we built a camera-based simulation for four simultaneous videoconference users. We conducted a user study comparing Gazing Heads with a conventional “Tiled View” video conferencing system, for 20 groups of 4 people, on each of two tasks. The study found that head rotation clearly conveys gaze and strongly enhances the perception of attention. Measurements of turn-taking behaviour did not differ decisively between the two systems (though there were significant differences between the two tasks). A novel insight in comparison to prior studies is that there was a significant increase in mutual eye contact with Gazing Heads, and that users clearly felt more engaged, encouraged to participate and more socially present. Overall, participants expressed a clear preference for Gazing Heads. These results suggest that fully implementing the Gazing Heads concept, using modern computer vision technology as it matures, could significantly enhance the experience of videoconferencing.},

keywords = {Gaze & Visual Perception, Journal},

pubstate = {published},

tppubtype = {inproceedings}

}

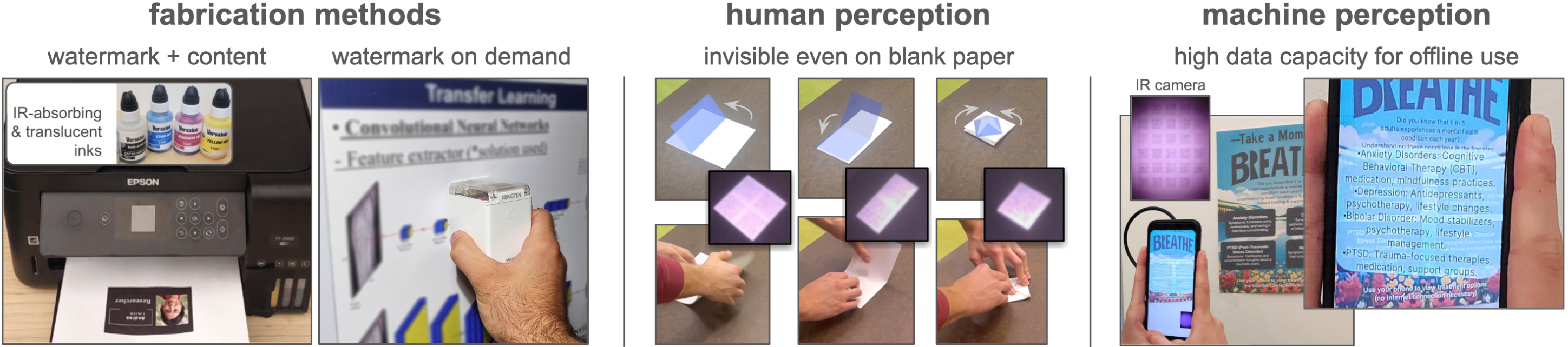

Imprinto: Enhancing Infrared Inkjet Watermarking for Human and Machine Perception

Martin Feick (DFKI and Saarland University, Saarland Informatics Campus, Saarbrücken, Germany and MIT CSAIL, Cambridge, Massachusetts, United States), Xuxin Tang (Virginia Tech, Computer Science Department, Blacksburg, Virginia, United States and MIT CSAIL, Cambridge, Massachusetts, United States), Raul Garcia-Martin (University Group for Identification Technologies, Universidad Carlos III de Madrid, Leganes, Madrid, Spain and MIT CSAIL, Cambridge, Massachusetts, United States), Alexandru Luchianov (MIT CSAIL, Cambridge, Massachusetts, United States), Roderick Wei Xiao Huang (MIT CSAIL, Cambridge, Massachusetts, United States), Chang Xiao (Adobe Research, San Jose, California, United States), Alexa Siu (Adobe Research, San Jose, California, United States), Mustafa Doga Dogan (Adobe Research, Basel, Switzerland and MIT CSAIL, Cambridge, Massachusetts, United States)

Tags: Full Paper, Gaze & Visual Perception

@inproceedings{Feick2025Imprinto,

title = {Imprinto: Enhancing Infrared Inkjet Watermarking for Human and Machine Perception},

author = {Martin Feick (DFKI and Saarland University, Saarland Informatics Campus, Saarbrücken, Germany and MIT CSAIL, Cambridge, Massachusetts, United States), Xuxin Tang (Virginia Tech, Computer Science Department, Blacksburg, Virginia, United States and MIT CSAIL, Cambridge, Massachusetts, United States), Raul Garcia-Martin (University Group for Identification Technologies, Universidad Carlos III de Madrid, Leganes, Madrid, Spain and MIT CSAIL, Cambridge, Massachusetts, United States), Alexandru Luchianov (MIT CSAIL, Cambridge, Massachusetts, United States), Roderick Wei Xiao Huang (MIT CSAIL, Cambridge, Massachusetts, United States), Chang Xiao (Adobe Research, San Jose, California, United States), Alexa Siu (Adobe Research, San Jose, California, United States), Mustafa Doga Dogan (Adobe Research, Basel, Switzerland and MIT CSAIL, Cambridge, Massachusetts, United States)},

url = {https://umtl.cs.uni-saarland.de/, website},

doi = {10.1145/3706598.3713286},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

abstract = {Hybrid paper interfaces leverage augmented reality to combine the desired tangibility of paper documents with the affordances of interactive digital media. Typically, virtual content can be embedded through direct links (e.g., QR codes); however, this impacts the aesthetics of the paper print and limits the available visual content space. To address this problem, we present Imprinto, an infrared inkjet watermarking technique that allows for invisible content embeddings only by using off-the-shelf IR inks and a camera. Imprinto was established through a psychophysical experiment, studying how much IR ink can be used while remaining invisible to users regardless of background color. We demonstrate that we can detect invisible IR content through our machine learning pipeline, and we developed an authoring tool that optimizes the amount of IR ink on the color regions of an input document for machine and human detectability. Finally, we demonstrate several applications, including augmenting paper documents and objects.},

keywords = {Full Paper, Gaze & Visual Perception},

pubstate = {published},

tppubtype = {inproceedings}

}